Zhipu AI releases “Glyph”: an artificial intelligence framework that scales context length through visual text compression

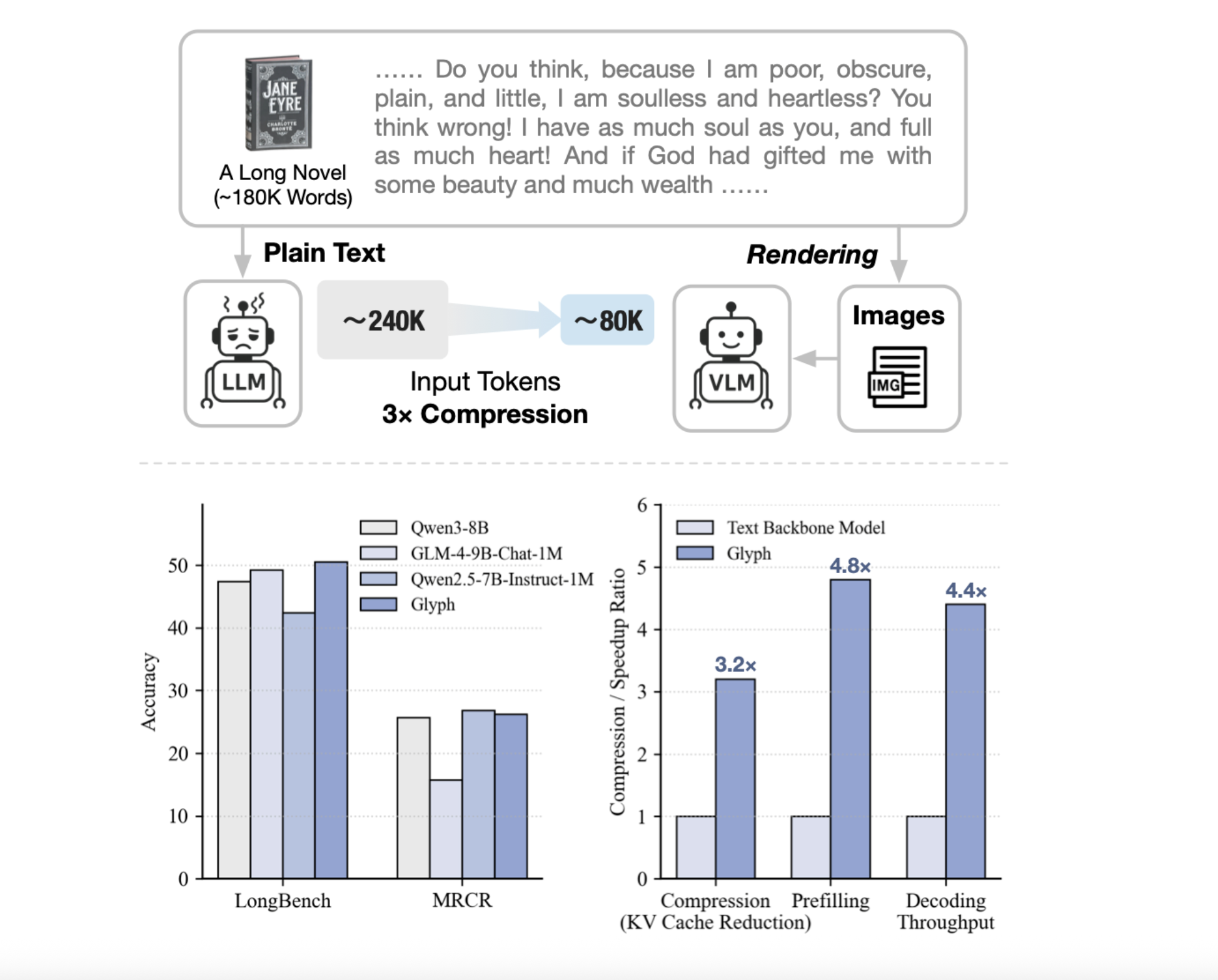

Can we render long text as an image and use VLM to achieve 3-4x token compression, scaling 128K context to a 1M token workload while maintaining accuracy? A team of researchers from Zhipu AI releases Glyphan artificial intelligence framework for scaling context length through visual text compression. It renders long text sequences into images and processes them using a visual language model. The system renders very long text into page images, and then the visual language model VLM processes these pages end-to-end. Each visual token encodes many characters, so the effective token sequence is shortened while preserving semantics. Glyph can achieve 3-4x token compression on long text sequences without sacrificing performance, significantly improving memory efficiency, training throughput, and inference speed.

Why glyphs?

Traditional approaches extend positional encoding or modify attention, and computation and memory still scale with token counting. Retrieval reduces typing but runs the risk of losing evidence and increasing latency. Glyph changes the representation, it converts text into images and shifts the burden to the VLM which has learned OCR, layout and inference. This increases the information density of each token, so a fixed token budget can cover more original context. Under extreme compression, the research team showed that a 128K context VLM can handle tasks originating from 1M token-level text.

System design and training

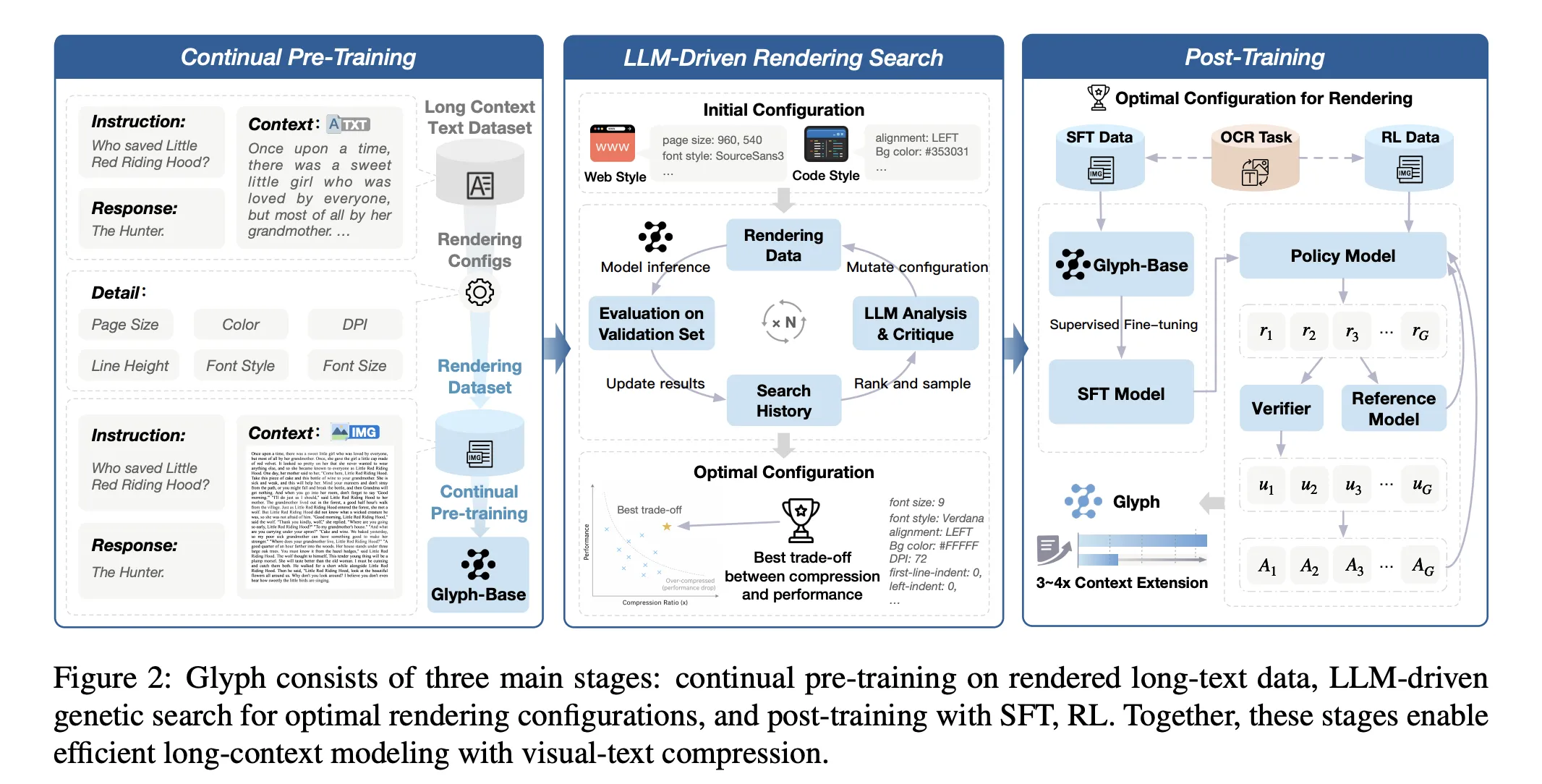

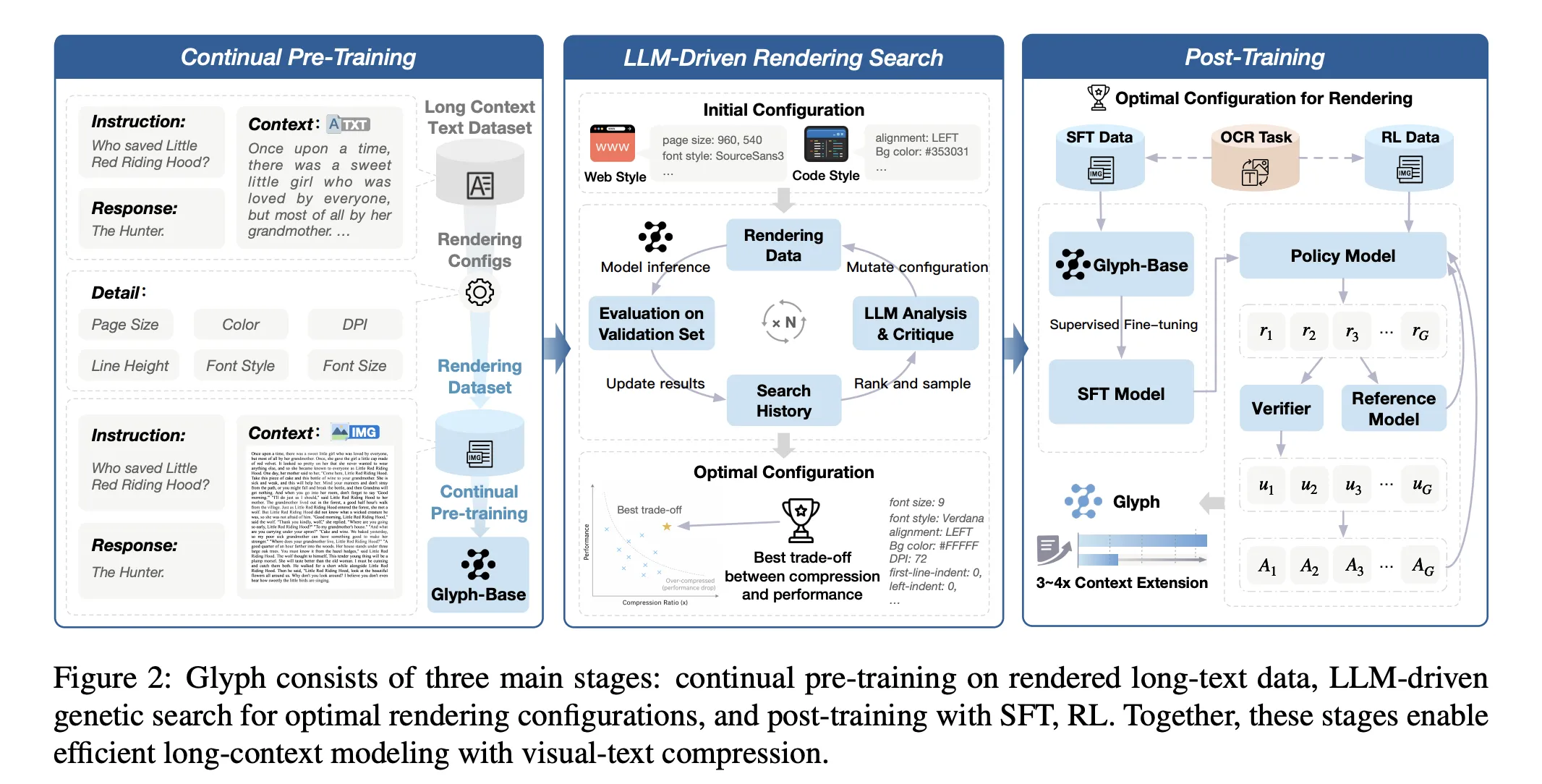

The method is divided into three stages: continuous pre-training, LLM-driven rendering search, and post-training. Continuous pre-training exposes VLM to large corpora of long text renderings with different layouts and styles. The goal is to coordinate visual and textual representations and transfer long-context skills from textual to visual markup. Render Search is a genetic cycle driven by an LL.M. It changes page size, dpi, font family, font size, line height, alignment, indentation and spacing. It evaluates candidates on the validation set to jointly optimize accuracy and compression. Post-training uses supervised fine-tuning and reinforcement learning with group relative policy optimization, and an auxiliary OCR alignment task. OCR loss improves character fidelity when fonts are smaller and spacing smaller.

Results, performance and efficiency…

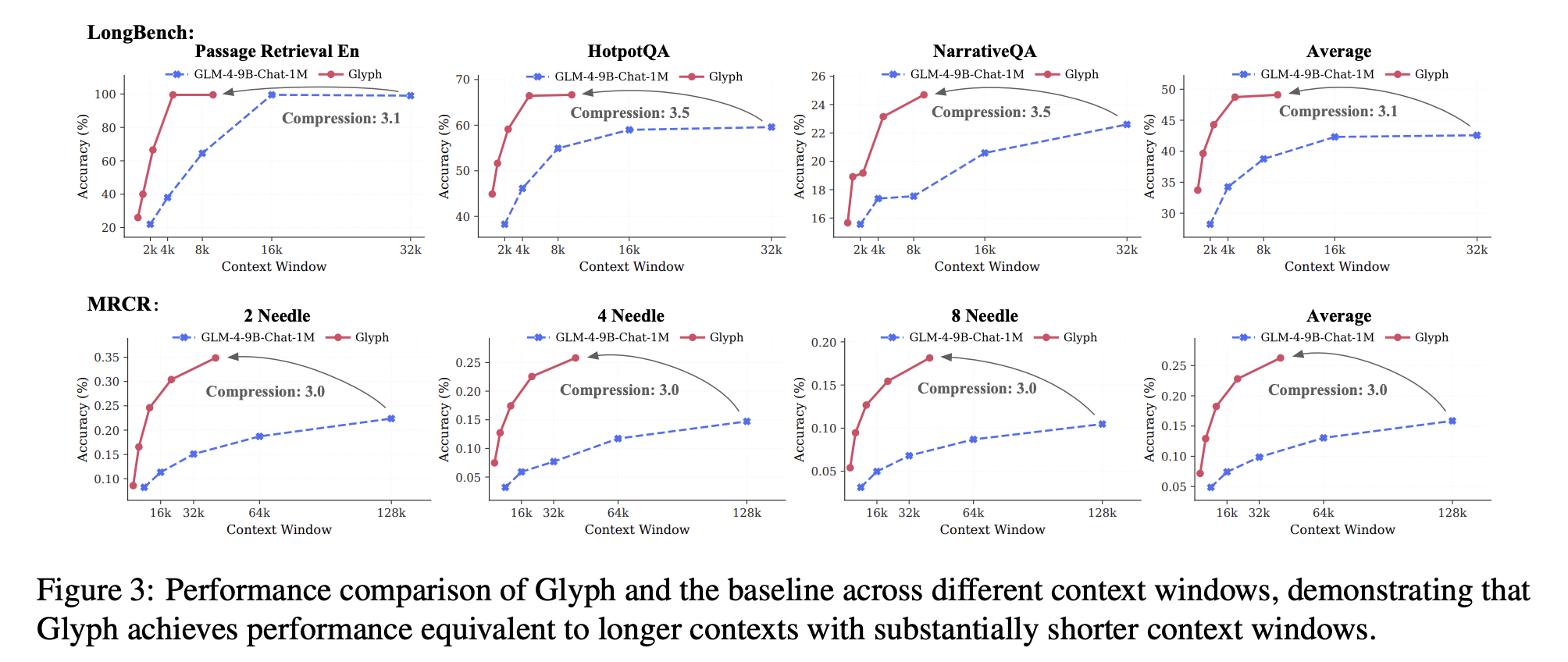

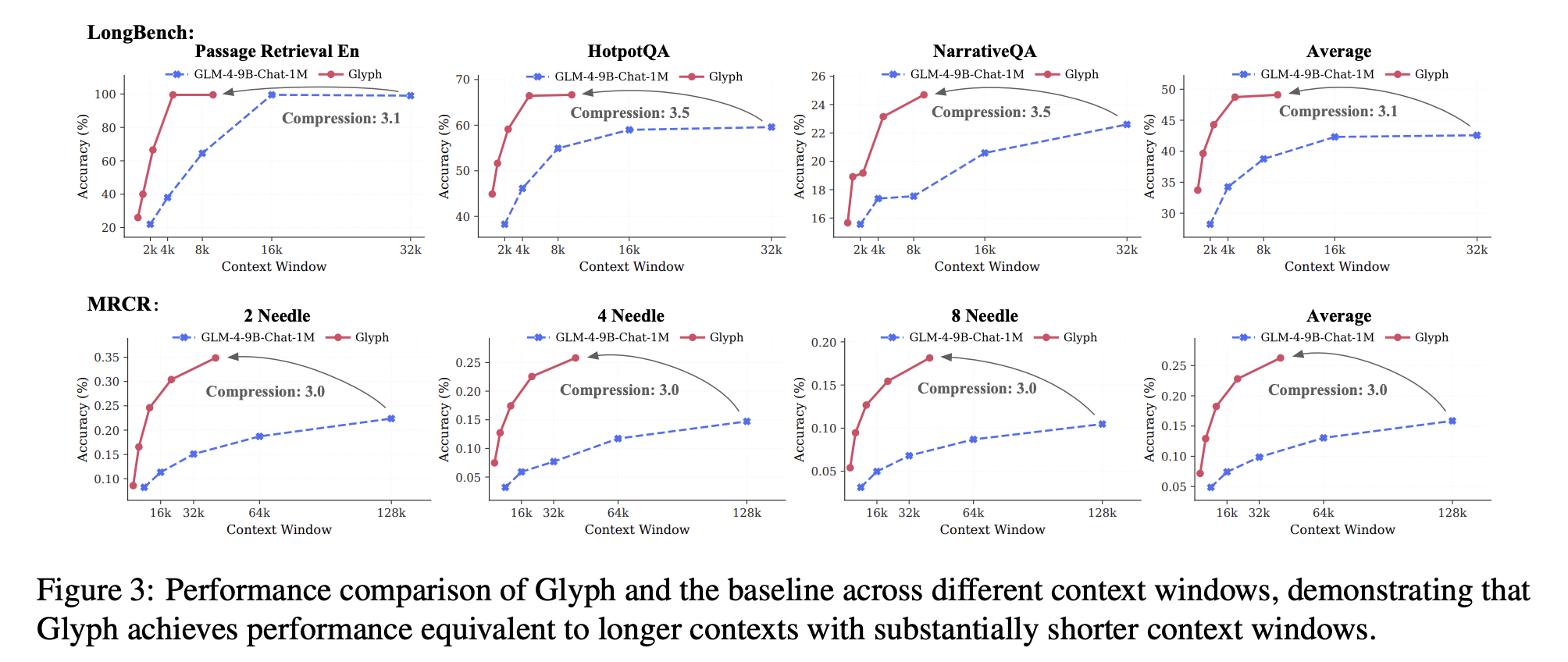

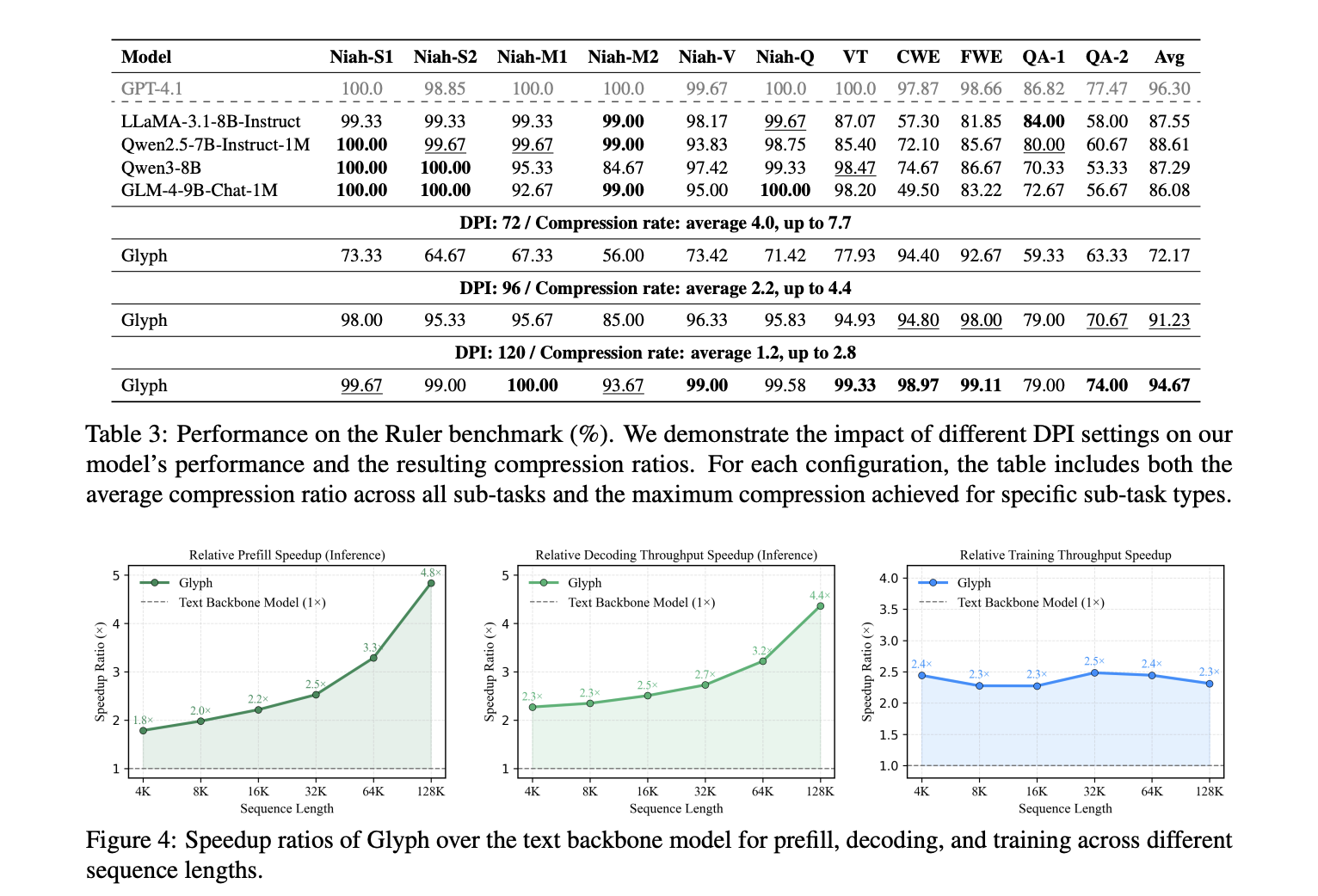

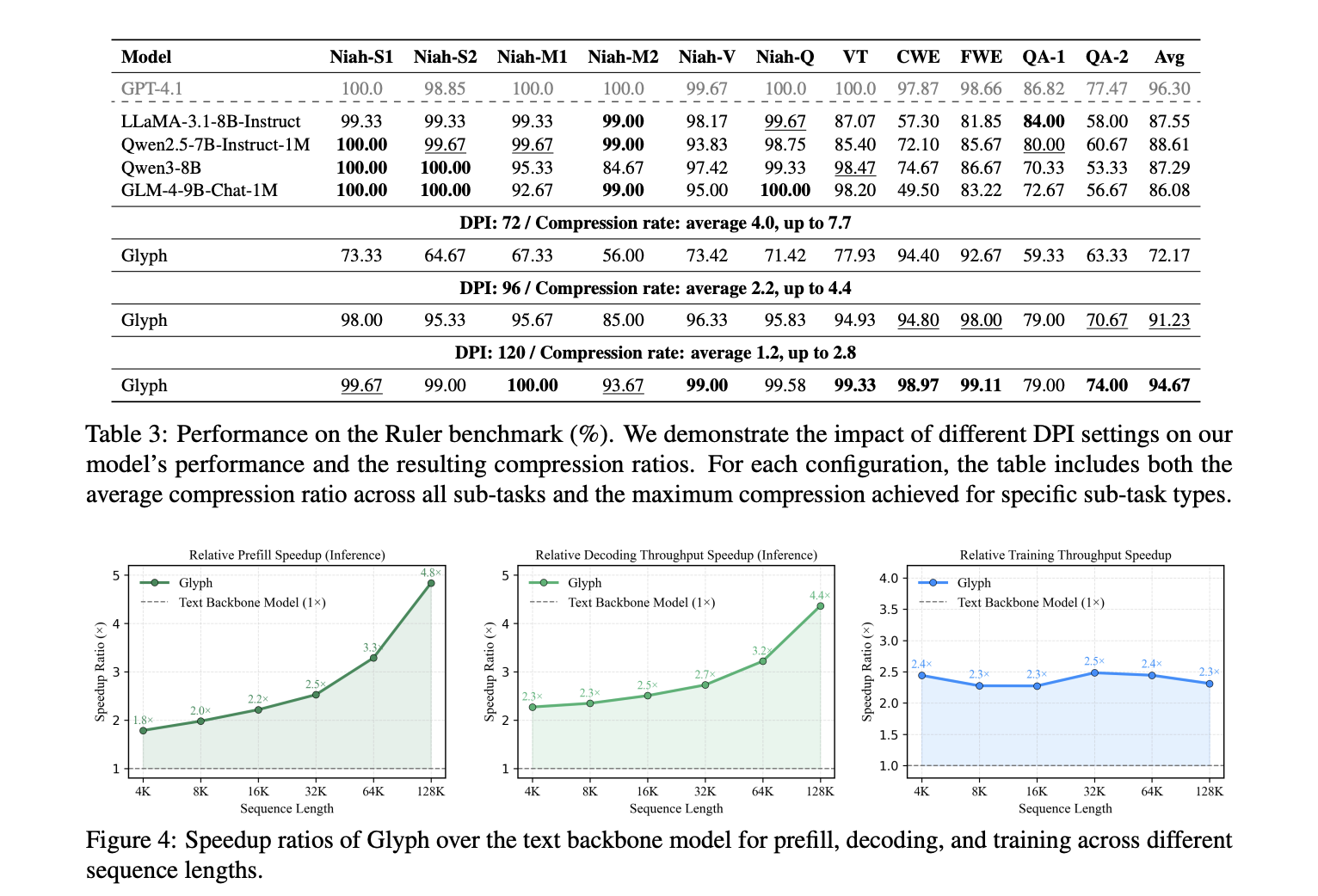

LongBench and MRCR establish accuracy and compression under long conversation history and document tasks. The model achieves an average effective compression ratio of about 3.3 on LongBench, close to 5 on some tasks, and an average effective compression ratio of about 3.0 on MRCR. These gains increase with input time as each visual token carries more characters. Speedups of approximately 4.8x for pre-filling, approximately 4.4x for decoding, and approximately 2x for supervised fine-tuning throughput are reported compared to a 128K input text backbone. The Ruler benchmark confirms that higher dpi improves scores when inferencing because clearer glyphs aid OCR and layout parsing. The research team reported that specific subtasks had an average compression of 4.0 and a maximum compression of 7.7 for DPI 72; an average compression of 2.2 and a maximum compression of 4.4 for DPI 96; and an average compression of 1.2 and a maximum compression of 2.8 for DPI 120. 7.7 The maximum value belongs to Ruler, not MRCR.

so what? Application areas

Glyph facilitates multimodal document understanding. Training on rendered pages improves the performance of MMLongBench Doc relative to the base vision model. This suggests that render targets are a useful excuse for actual document tasks including graphics and layout. The main failure mode is susceptibility to radical typography. Very small fonts and tight spacing can reduce character accuracy, especially for rare alphanumeric strings. The research team excluded the UUID subtask on Ruler. This approach assumes server-side rendering and VLM with strong OCR and layout priors.

Main points

- Glyph renders long text into images, and a visual language model processes the pages. This reframes long context modeling as a multimodal problem and preserves semantics while reducing markup.

- The research team reports token compression of 3 to 4x with accuracy comparable to the strong 8B text baseline on the long context benchmark.

- Measured under 128K input, the prefill speedup is about 4.8x, the decoding speedup is about 4.4x, and the supervised fine-tuning throughput is about 2x.

- The system uses continuous pre-training on rendered pages, LLM-driven genetic search of rendering parameters, followed by supervised fine-tuning and reinforcement learning using GRPO, coupled with OCR alignment targets.

- Evaluations include LongBench, MRCR and Ruler, with the extreme case showing a 128K context VLM handling a 1M token level task. Code and model cards are publicly available on GitHub and Hugging Face.

Glyph treats long context scaling like visual text compression, which renders long sequences into images and lets VLM process them, reducing markup while preserving semantics. The research team claims a 3 to 4x increase in token compression, comparable accuracy to the Qwen3 8B baseline, approximately 4x faster pre-filling and decoding, and approximately 2x increased SFT throughput. The pipeline is disciplined, with continuous pre-training for rendering pages, LLM genetic rendering searches for typesetting, and then post-training. This approach is practical for multi-million token workloads under extreme compression, but it depends on OCR and typesetting choices, which are still knobs. Overall, visual text compression provides a concrete path to extend long context while controlling computation and memory.

Check Paper, weight and buy-back agreements. Please feel free to check out our GitHub page for tutorials, code, and notebooks. In addition, welcome to follow us twitter And don’t forget to join our 100k+ ML SubReddit and subscribe our newsletter. wait! Are you using Telegram? Now you can also join us via telegram.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.