Why is spatial super-awareness becoming a core capability of multi-modal artificial intelligence systems?

Even powerful “long context” AI models fail miserably when they have to track objects and count over long, cluttered video streams, so the next competitive advantage will come from models that: predict What happens next and selectively remember only the surprisingly important events don’t just buy more computation and a larger context window. A team of researchers from NYU and Stanford University introduces Cambrian-S, a family of spatially based multimodal large language models for video, as well as the VSI Super benchmark and VSI 590K dataset for testing and training spatial superperception in long videos.

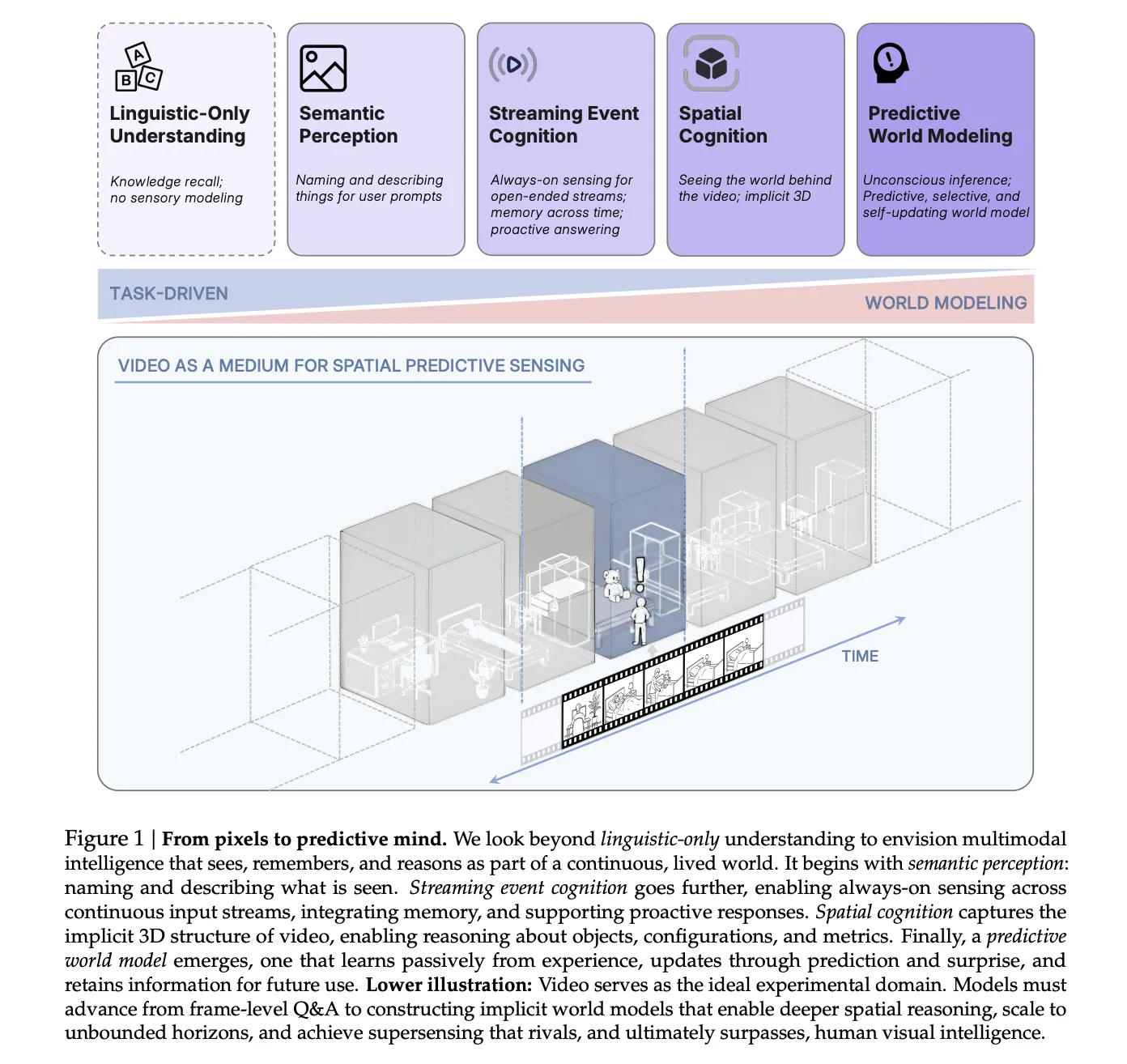

From video question and answer to spatial super-awareness

The research team sees spatial superawareness as an advance in abilities that go beyond mere verbal reasoning. These stages are semantic perception, streaming event cognition, implicit 3D spatial cognition, and predictive world modeling.

Most current video MLLMs sample sparse frames and rely on language priors. They often use titles or single frames rather than continuous visual evidence to answer benchmark questions. Diagnostic testing shows that several popular video benchmarks can be solved with limited or text-only input, so they do not strongly test spatial perception.

Cambrian-S targets the higher stages of this hierarchy, where models must remember spatial layout over time, reason about object positions and counts, and predict changes in the 3D world.

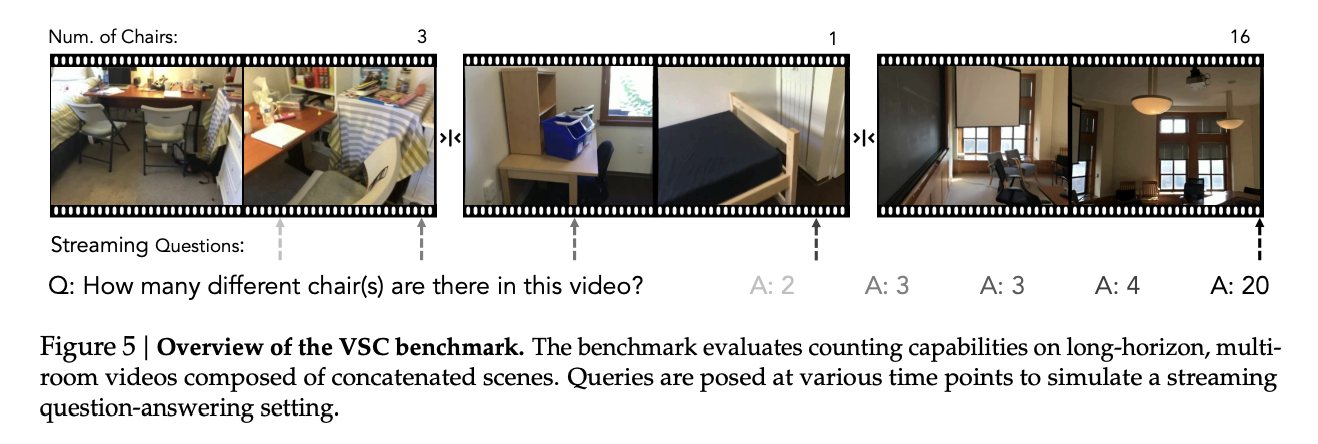

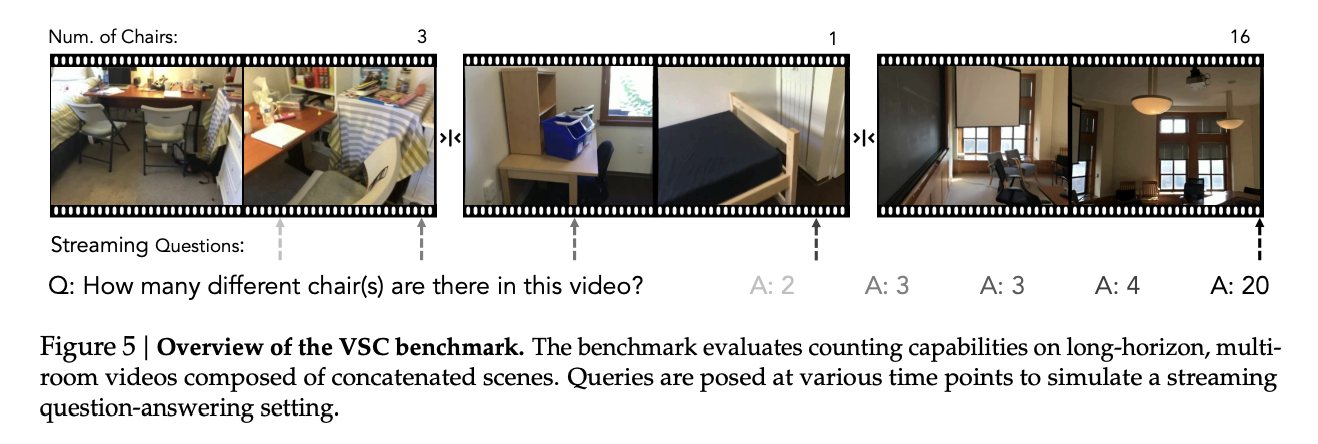

VSI Super, a stress test of continuous space perception

To reveal the gap between current systems and spatial superawareness, the research team designed VSI Super, a two-part benchmark run on arbitrarily long indoor videos.

The VSI Super Recall (VSR) assesses long-field spatial observation and recall. Human annotators obtained indoor walkthrough videos from ScanNet, ScanNet++, and ARKitScenes and used Gemini to insert an unusual object (e.g., a teddy bear) into four frames at different spatial locations. These edited sequences will be concatenated into a 240-minute stream. The model must report the order in which objects appear, which is like the visual needle in a needle-in-a-haystack task called sequentially.

VSI Super Counting (VSC) measures continuous counts at changing viewpoints and rooms. This benchmark concatenates room tour clips from the VSI Bench and asks for the total number of instances of the target object in all rooms. The model must handle viewpoint changes, revisits, and scene transitions and maintain cumulative counts. Average relative accuracy was evaluated using durations from 10 to 120 minutes.

When the Cambrian-S 7B was evaluated on a VSI Super with a streaming setting of 1 frame per second, VSR accuracy dropped from 38.3% at 10 minutes to 6.0% at 60 minutes, and went to zero after 60 minutes. The accuracy of VSC is close to zero over the entire length. Gemini 2.5 Flash degrades on VSI Super despite a long context window, indicating that strong context scaling is not sufficient for continuous spatial sensing.

VSI 590K, spatial focus instruction data

To test whether data scaling helps, the research team built VSI 590K, a spatial instruction corpus containing 5,963 videos, 44,858 images, and 590,667 question-answer pairs from 10 sources.

Sources include 3D annotated real room scans (such as ScanNet, ScanNet++ V2, ARKitScenes, S3DIS, and Aria Digital Twin), simulated scenes from ProcTHOR and Hypersim, and pseudo-annotated web data (such as YouTube RoomTour and robotics datasets Open X Examples and AgiBot World).

The dataset defines 12 spatial problem types, such as object count, absolute and relative distance, object size, room size, and order of occurrence. Questions are generated from 3D annotations or reconstructions, so spatial relationships are based on geometric rather than textual heuristics. Ablation shows that annotated real videos contribute the most on the VSI Bench, followed by simulated data, then pseudo-annotated images, and training on the full mixture provides the best spatial performance.

Cambrian-S model family and spatial performance

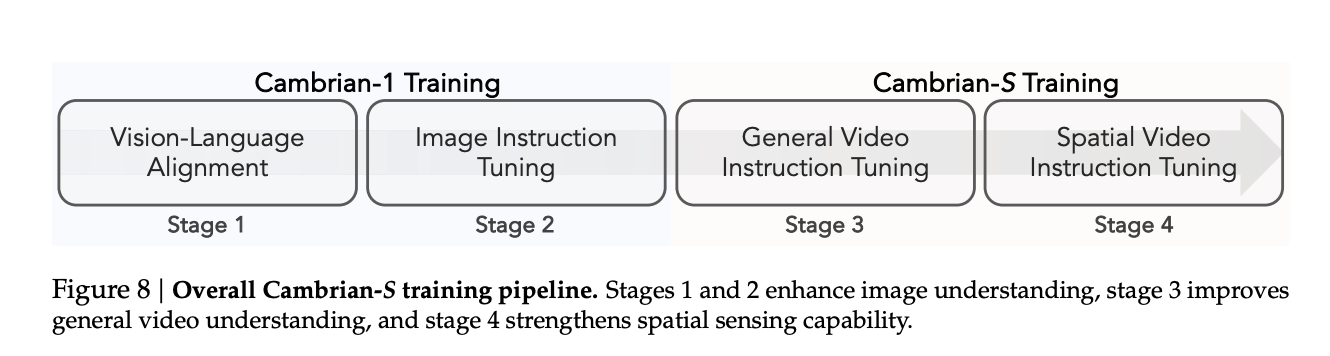

Cambrian-S is built on Cambrian-1 and uses the Qwen2.5 language backbone (0.5B, 1.5B, 3B and 7B parameters) with the SigLIP2 SO400M visual encoder and two-layer MLP connector.

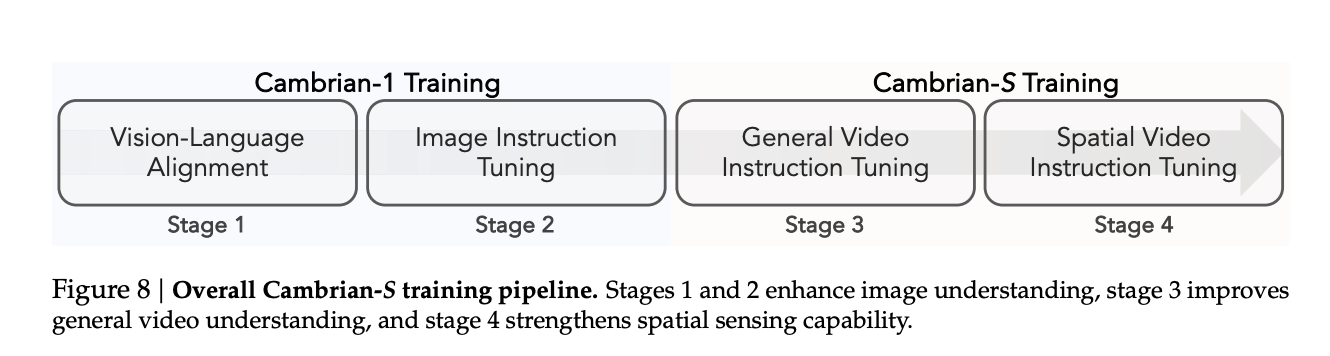

training to follow Fourth level pipeline. first stage Perform visual language alignment on image-text pairs. second stage Applies image command adjustments equivalent to modified Cambrian-1 settings. The third stage Expanding to video, universal video command tuning is performed on a 3 million sample blend called Cambrian-S 3M. Stage 4 Perform spatial video command adjustments on mixtures of VSI 590K and subsets of VSI 590K The third stage data.

On the VSI Bench, Cambrian-S 7B achieved an accuracy of 67.5% and outperformed open source benchmarks such as InternVL3.5 8B and Qwen VL 2.5 7B, as well as the proprietary Gemini 2.5 Pro by more than 16 absolute points. The model also maintains strong performance on the Perception Test, EgoSchema, and other general video benchmarks, so the focus on spatial awareness does not undermine general capabilities.

Predictive sensing with latent frame prediction and surprise

To expand beyond static context, the research team proposed predictive sensing. They added a latent frame prediction head, which is a two-layer MLP that predicts the latent representation of the next video frame in parallel with the next label prediction.

training modifications Stage 4. The model uses mean square error and cosine distance loss between predicted and ground truth latent features, weighted according to the language modeling loss. A subset of 290,000 videos in VSI 590K (sampled at 1 frame per second) was retained for this purpose. During this phase, the connector, language model, and both output heads are jointly trained, while the SigLIP visual encoder remains frozen.

At inference time, the cosine distance between the predicted and actual features turns into a surprising fraction. Low-surprise frames are compressed before being stored in long-term storage, and high-surprise frames retain more detail. A fixed-size memory buffer is used to decide which frames to merge or delete, and a query is used to retrieve the frames most relevant to the problem.

For VSR, this surprisingly driver-memory system allows Cambrian-S to maintain accuracy as video length increases while keeping GPU memory usage stable. It outperforms Gemini 1.5 Flash and Gemini 2.5 Flash on VSR at all test durations and avoids the drastic degradation seen in context-only models.

For VSC, the research team designed a surprise-driven event segmentation scheme. The model accumulates features in an event buffer, and when a high-surprise frame signals a scene change, it aggregates this buffer into segment-level answers and resets the buffer. The partial answers are summed to produce a final count. In streaming evaluations, the average relative accuracy of Gemini Live and GPT Realtime was below 15%, dropping to close to zero at 120 minutes of streaming, while Cambrian-S with unexpected segmentation reached about 38% at 10 minutes and stayed around 28% at 120 minutes.

Main points

- Cambrian-S and VSI 590K show that careful spatial data design and powerful video MLLM can significantly improve spatial awareness on VSI Bench, but they still fail on VSI Super, so scale alone does not solve the problem of spatial super-awareness.

- VSI Super is intentionally built from arbitrarily long indoor videos via VSR and VSC, emphasizing continuous spatial observation, recall, and counting, which makes it resistant to strong context window expansion and standard sparse frame sampling.

- Benchmarks show that leading-edge models including Gemini 2.5 Flash and Cambrian S degrade dramatically on VSI Super even when video length is kept within their nominal context limits, revealing the structural weaknesses of current long-context multi-modal architectures.

- The predictive sensing module based on latent frame prediction uses next latent frame prediction errors or surprises to drive memory compression and event segmentation, which yields significant gains on VSI Super compared to the long context baseline while keeping GPU memory usage stable.

- This research work positions spatial supersensing as a hierarchy from semantic perception to predictive world modeling, and believes that future video MLLM must combine explicit prediction goals and surprise-driven memory, not just larger models and datasets, to handle unbounded streaming videos in practical applications.

Cambrian-S is a useful stress test for current video MLLMs because it shows that VSI SUPER is not just a harder benchmark, it also exposes structural failures of long-context architectures that still rely on reactive awareness. A predictive perception module based on latent frame prediction and surprise-driven memory is an important step because it combines spatial perception with internal world modeling rather than just scaling data and parameters. This research marks a shift from passive video understanding to predictive spatial superawareness as the next design goal for multimodal models.

Check Paper. Please feel free to check out our GitHub page for tutorials, code, and notebooks. In addition, welcome to follow us twitter And don’t forget to join our 100k+ ML SubReddit and subscribe our newsletter. wait! Are you using Telegram? Now you can also join us via telegram.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.