Two hours of AI conversation can create a near-perfect digital twin of anyone

Researchers at Stanford University and Google DeepMind have built AI agents that can replicate human personalities with astonishing accuracy after just two hours of conversation.

By interviewing 1,052 people from a variety of backgrounds, they constructed what they call “simulated agents”—digital replicas that can predict the beliefs, attitudes, and behaviors of their human counterparts very effectively.

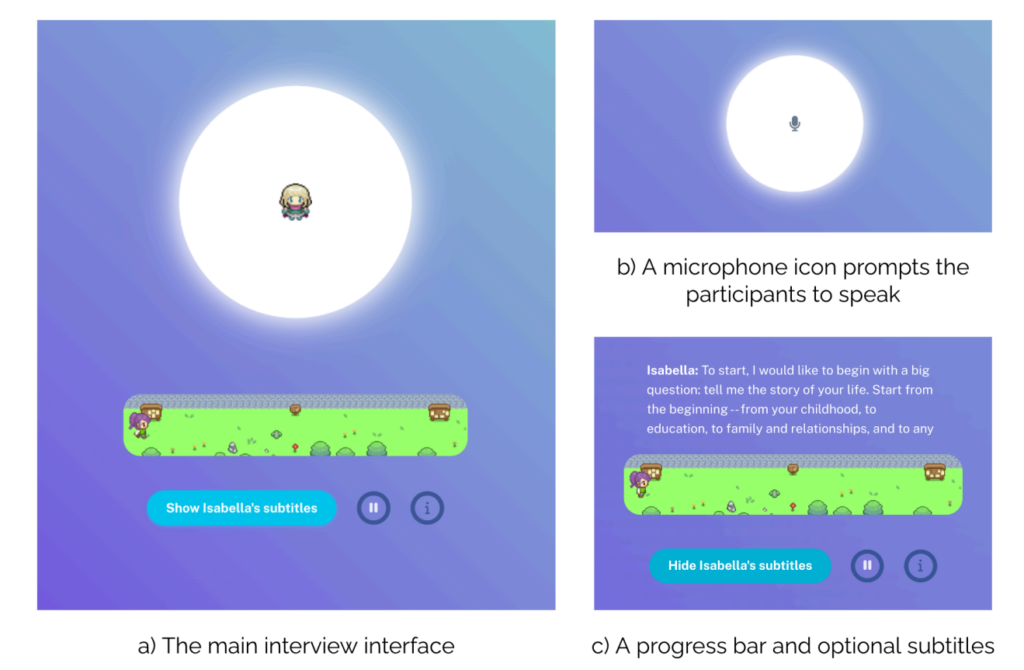

To build the digital replicas, the team relied on an “AI interviewer” designed to engage participants in natural conversation.

The AI interviewer asked questions and generated personalized follow-up questions (an average of 82 per session), exploring everything from childhood memories to political opinions.

Each two-hour session produced a detailed transcript averaging about 6,500 words.

For example, when a participant mentions their childhood hometown, the AI might dig deeper, asking about specific memories or experiences. By simulating the natural flow of a conversation, the system captures subtle personal information that standard surveys tend to miss.
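The adaptive loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's system: the real interviewer uses a language model to write its follow-ups, whereas here a hypothetical keyword table (`FOLLOW_UPS`) and a hypothetical `generate_follow_up` function stand in for that model call, and the participant is simulated with canned answers.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical keyword -> follow-up table. In the study an LLM writes the
# follow-ups; this lookup only stands in for that call so the loop can run.
FOLLOW_UPS = {
    "hometown": "What is one specific memory you have of your hometown?",
    "job": "What made you choose that line of work?",
    "vote": "Which issues matter most to you when you vote?",
}


@dataclass
class Interview:
    transcript: list = field(default_factory=list)  # (question, answer) pairs
    follow_up_count: int = 0

    def record(self, question: str, answer: str) -> None:
        self.transcript.append((question, answer))

    def word_count(self) -> int:
        # Counts whitespace-separated words in both questions and answers.
        return sum(len(q.split()) + len(a.split()) for q, a in self.transcript)


def generate_follow_up(answer: str) -> Optional[str]:
    """Hypothetical stand-in for an LLM: return a follow-up if a keyword appears."""
    lowered = answer.lower()
    for keyword, question in FOLLOW_UPS.items():
        if keyword in lowered:
            return question
    return None


def run_interview(script: list, answer_fn: Callable[[str], str]) -> Interview:
    """Ask each scripted question, then at most one generated follow-up."""
    interview = Interview()
    for question in script:
        answer = answer_fn(question)
        interview.record(question, answer)
        follow_up = generate_follow_up(answer)
        if follow_up is not None:
            interview.record(follow_up, answer_fn(follow_up))
            interview.follow_up_count += 1
    return interview


# Canned answers simulate a participant (invented for illustration).
canned = {
    "Tell me about where you grew up.": "I grew up in a small hometown by the coast.",
    "What is one specific memory you have of your hometown?": "Fishing with my grandfather every summer.",
    "What do you do for a living?": "I teach middle school science.",
}
result = run_interview(
    ["Tell me about where you grew up.", "What do you do for a living?"],
    lambda q: canned[q],
)
print(result.follow_up_count, len(result.transcript), result.word_count())
```

The mention of a hometown triggers one extra question, while the answer about teaching does not, which mirrors the "dig deeper when something interesting comes up" behavior in a very reduced form.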

Behind the scenes, the study uses a technique the researchers call “expert reflection,” in which a large language model (LLM) analyzes each conversation from four different expert perspectives:

- As a psychologist, it identifies specific personality traits and emotional patterns, for example noting how much someone values independence based on how they describe their family relationships.

- As a behavioral economist, it extracts insights about financial decision-making and risk tolerance, such as how the person approaches saving or career choices.

- As a political scientist, it maps ideological tendencies and policy preferences across a range of issues.

- As a demographer, it captures socioeconomic factors and living circumstances.
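Structurally, the four-lens reflection step amounts to sending the same transcript to an LLM several times, each time with a different expert framing. The sketch below only builds those prompts; the instruction wording in `EXPERT_LENSES`, the `PROMPT_TEMPLATE`, and the function name `build_reflection_prompts` are hypothetical paraphrases, not the researchers' actual prompts, and the LLM call itself is left out.

```python
# Hypothetical instructions paraphrasing the four expert lenses;
# the study's actual prompt wording is not reproduced here.
EXPERT_LENSES = {
    "psychologist": "Identify personality traits and emotional patterns.",
    "behavioral economist": "Infer attitudes toward money, risk, saving, and career choices.",
    "political scientist": "Map ideological leanings and policy preferences across issues.",
    "demographer": "Summarize socioeconomic factors and living circumstances.",
}

PROMPT_TEMPLATE = (
    "You are an expert {role}. Read the interview transcript below.\n"
    "{instruction}\n"
    "List your observations and quote the transcript as evidence.\n\n"
    "Transcript:\n{transcript}"
)


def build_reflection_prompts(transcript: str) -> dict:
    """Return one prompt per expert lens; each would be sent to an LLM separately."""
    return {
        role: PROMPT_TEMPLATE.format(role=role, instruction=instruction, transcript=transcript)
        for role, instruction in EXPERT_LENSES.items()
    }


prompts = build_reflection_prompts(
    "Q: Tell me about your family.\nA: I moved out at 17 and paid my own way."
)
for role, prompt in prompts.items():
    # Show only the first line of each prompt to make the framing visible.
    print(f"--- {role} ---")
    print(prompt.splitlines()[0])
```

Keeping each lens as a separate prompt, rather than one combined request, lets every "expert" focus on its own domain; the resulting notes could then be stored alongside the raw transcript for the agent to draw on.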

The researchers concluded that this interview-based technique was far superior to similar methods (such as mining social media data).

Testing the digital copies

So how good are the AI copies? The researchers put them through a series of tests to find out.

First, they used the General Social Survey, a widely used survey of social attitudes that covers everything from political opinions to religious beliefs. Here, the AI replicas reproduced their human counterparts' answers 85% as accurately as the participants reproduced their own answers when they retook the survey two weeks later.
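According to the study, scores like these are normalized: how often an agent agrees with its participant is divided by how consistently that participant repeated their own answers when retaking the survey two weeks later. People are not perfectly consistent with themselves, so this sets a realistic ceiling. The arithmetic is sketched below; the function names and the ten answers are invented for illustration, and this simple exact-match version is an assumption rather than the study's full scoring procedure.

```python
def match_rate(predicted, actual):
    """Fraction of questions where two answer lists agree exactly."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("answer lists must be non-empty and the same length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def normalized_accuracy(agent, first_wave, second_wave):
    """Agent accuracy divided by the participant's own test-retest consistency."""
    self_consistency = match_rate(second_wave, first_wave)
    if self_consistency == 0:
        raise ValueError("participant never repeated an answer; ratio undefined")
    return match_rate(agent, first_wave) / self_consistency


# Invented answers to ten multiple-choice questions, for illustration only.
first_wave = list("ABACBAACBA")   # participant, first sitting
second_wave = list("ABACBAACCC")  # same participant two weeks later: 8/10 agree
agent = list("CBCCBAACCC")        # agent's predictions: 6/10 agree with first sitting

score = normalized_accuracy(agent, first_wave, second_wave)
print(f"raw {match_rate(agent, first_wave):.2f}, normalized {score:.2f}")
```

In this toy case the agent matches only 60% of answers outright, but because the participant matched just 80% of their own answers, the normalized score is 0.60 / 0.80 = 0.75.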

In the Big Five personality test, which measures traits such as openness and conscientiousness through 44 questions, the AI's predictions matched human responses about 80% of the time. The system was especially good at capturing traits such as extraversion and neuroticism.

However, the economic games revealed interesting limitations. In the “Dictator Game,” in which one participant decides how to split a sum of money with another person, the AI struggled to predict human generosity precisely.

In the “Trust Game,” which tests willingness to cooperate with others for mutual benefit, the digital copies matched human choices only about two-thirds of the time.

This suggests that while AI can grasp our established values, it still cannot fully capture the nuances of human social decision-making (of course).

Real-world experiments

The researchers also ran five classic social psychology experiments on the copies.

In an experiment testing how perceived intention affects blame, both humans and their AI counterparts showed a similar pattern, assigning more blame when harmful actions appeared to be intentional.

Another experiment looked at how fairness affects emotional responses, with AI copies accurately predicting human reactions to fair versus unfair treatment.

The AI replicas successfully reproduced human behavior in four out of five experiments, demonstrating that they can simulate not only individual subject responses but also broad, complex behavioral patterns.
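One simplified way to judge whether a population of replicas "reproduces" an experiment is to measure the effect separately in the human group and the agent group and check that both point the same way. A full replication analysis would also test statistical significance and compare effect sizes, which this sketch omits, and the article does not spell out the researchers' exact criterion. The condition names, the 1 to 7 rating scale, and all ratings below are invented for illustration.

```python
from statistics import mean


def effect_size(treatment, control):
    """Simple difference in mean ratings between two conditions."""
    return mean(treatment) - mean(control)


def same_direction(human_effect, agent_effect):
    """True when both populations shift the same way (ignores significance)."""
    return human_effect * agent_effect > 0


# Invented 1-7 blame ratings, for illustration only.
human = {"intentional": [6, 7, 5, 6], "accidental": [3, 2, 4, 3]}
agents = {"intentional": [5, 6, 6, 5], "accidental": [3, 3, 2, 4]}

human_effect = effect_size(human["intentional"], human["accidental"])
agent_effect = effect_size(agents["intentional"], agents["accidental"])
print(human_effect, agent_effect, same_direction(human_effect, agent_effect))
```

Here both groups assign more blame when harm looks intentional (a gap of 3 points for humans and 2.5 for agents), so the direction replicates even though the magnitudes differ slightly.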

Easy AI cloning: what are the implications?

Artificial intelligence systems that “clone” human opinions and behaviors are big business. Meta recently announced plans to fill Facebook and Instagram with AI profiles that can create content and interact with users.

TikTok has also joined the fray with the launch of its new “Symphony” suite of AI-powered creative tools, which includes digital avatars that brands and creators can use to produce localized content at scale.

With Symphony Digital Avatars, TikTok allows eligible creators to build avatars that represent real people and include a variety of gestures, expressions, ages, nationalities, and languages.

Research from Stanford University and DeepMind suggests that such digital replicas will become more sophisticated and easier to build and deploy at scale.

“If you could have a bunch of little ‘yous’ running around and actually making the decisions that you would make, I think that’s ultimately the future,” lead researcher Joon Sung Park, a doctoral student in computer science at Stanford, told MIT Technology Review.

Park argues that the technique has advantages because building accurate clones can support scientific research.

Rather than conducting expensive or ethically questionable experiments on real people, researchers can test how populations respond to certain inputs. For example, it could help predict responses to public health messages or study how communities adapt to major social shifts.

But ultimately, the same characteristics that make these AI replicas valuable for research also make them powerful tools for deception.

As the onslaught of deepfakes has shown, the more convincing digital replicas become, the harder it is to distinguish real human interactions from AI-generated ones.

What if this technology were used to clone someone against their will? What are the implications of creating digital replicas that mimic specific real people?

The Stanford and DeepMind research team acknowledges these risks. Its framework requires explicit consent from participants and allows them to withdraw their data, treating personality replication with the same privacy protections as sensitive medical information.

This provides at least some theoretical protection against more malicious forms of abuse. Regardless, we are moving deep into uncharted territory in human-computer interaction, and the long-term effects remain largely unknown.