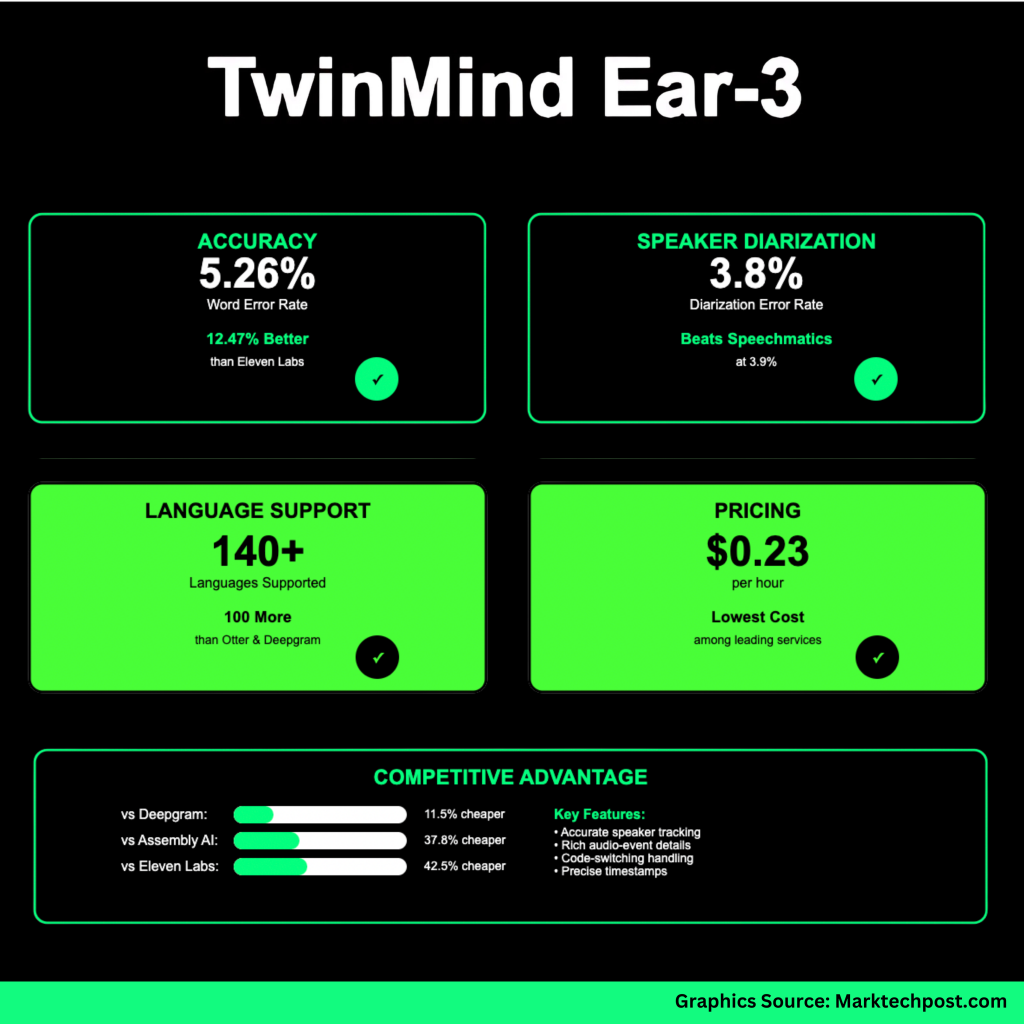

TwinMind introduces the EAR-3 model: a new voice AI model that sets new industry records in accuracy, speaker tags, language and price

TwinMind, a California-based voice AI startup, unveiled EAR-3 The speech recognition model, claiming to be state-of-the-art performance on several key metrics, and expands multilingual support. This release positions EAR-3 as a competitive product for existing ASR (Automatic Speech Recognition) solutions such as Deepgram, Assemblyai, 11 Labs, Otter, Speckmatics, and OpenAI.

Key indicators

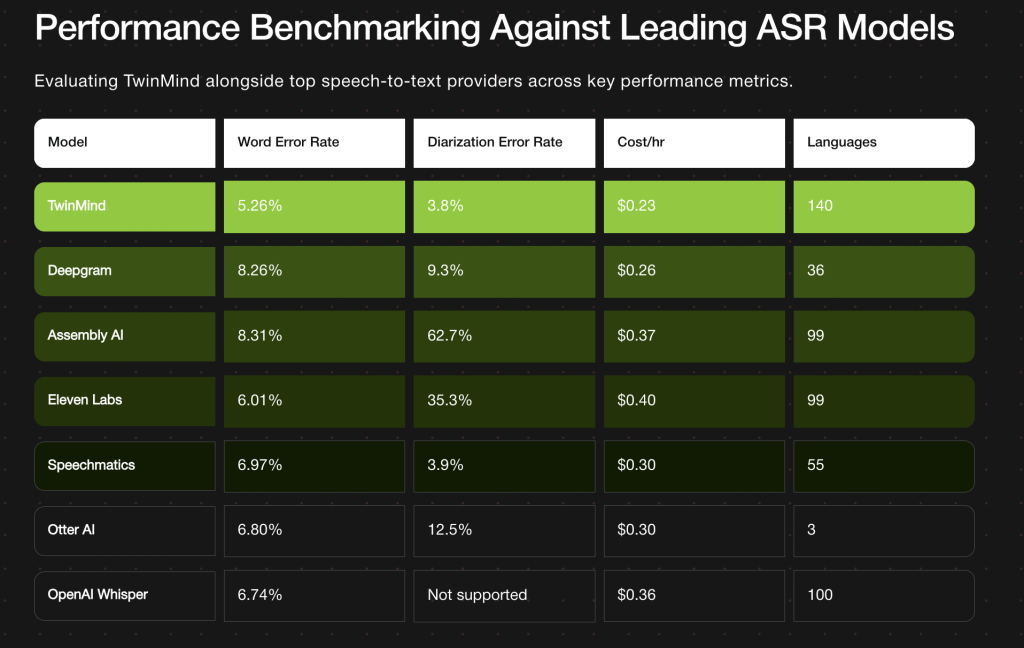

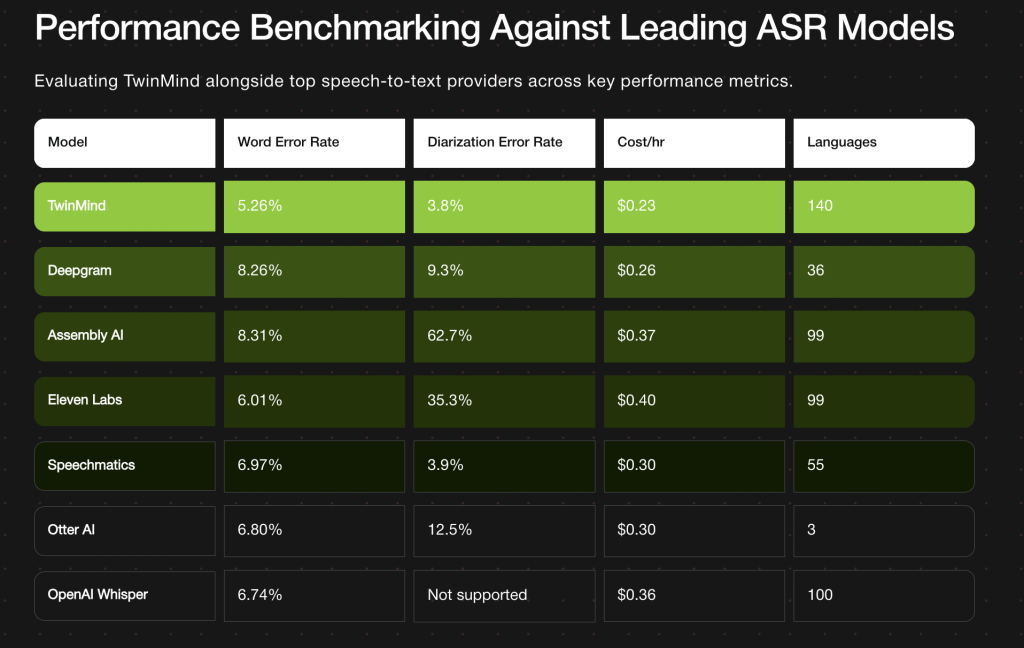

| Metric system | Twinmind Ear-3 results | Comparison/Notes |

|---|---|---|

| Word Error Rate (wer) | 5.26% | Significantly lower than many competitors: dark ~8.26%, assembled ~8.31%. |

| Speaker diagnostic error rate (DER) | 3.8% | Slight improvement (~3.9%) compared to previous verbal methods. |

| Language Support | More than 140 languages | More than 40 languages than many leading models; the goal is “real global coverage.” |

| Hourly transcription fee | US $0.23/hr | Location is the lowest among major services. |

Technical methods and positioning

- TwinMind says EAR-3 is a “fine-tuned mixture of several open source models,” which is trained on selected datasets containing audio sources advertised by people, such as podcasts, videos, and movies.

- Diagnostic and speaker tags are improved through pipelines, including pre-diagnosis audio cleaning and enhancement, as well as “precision alignment checks” to perfect speaker boundary detection.

- The model handles code conversion and hybrid scripts, and ASR systems are often difficult due to diverse voice, accent differences and language overlap.

Trade-offs and operational details

- EAR-3 Need cloud deployment. Due to its model size and compute load, it cannot be completely offline. When connectivity is lost, Twinmind’s Ear-2 (its earlier model) is still a fallback.

- Privacy: TwinMind claims that audio has no long-term storage; only has local storage records with optional encrypted backups. Recording is deleted “instantly”.

- Platform Integration: API access for this model is planned to be targeted to developers/enterprises in the coming weeks. For end users, Pro users will launch EAR-3 features to Twinmind’s iPhone, Android and Chrome apps next month.

Comparative analysis and meaning

Ear-3’s WER and DER metrics lead it ahead of many established models. The lower part translates into fewer transcriptional errors (misrecognition, dropped words, etc.), which is crucial for areas such as law, medicine, lecture transcription or sensitive content archives. Similarly, under der (i.e. better speaker separation + tag) for conferences, interviews, podcasts – anything related to multiple participants.

The price point of $0.23 per hour makes high-intelligent transcription more feasible on long-term audio (e.g., conferences, lectures, recordings). Combined with support for over 140 languages, there is a clear impetus in a global environment that is more than just English-centric or well-resourced linguistic context.

However, cloud dependency can be a place where users need offline or edge device capabilities, or where data privacy/latency issues are strictly strict. Implementation complexity that supports over 140 languages (accent drift, dialect, code conversion) may show weaker areas under poor acoustic conditions. Real-world performance may be different compared to controlled benchmarks.

in conclusion

Twinmind’s EAR-3 model represents a strong technical proposition: high accuracy, speaker diagnostic accuracy, extensive language coverage and promoting cost reduction. If the benchmark is held in actual use, this may change the expectations that “advanced” transcription services should provide.

Check Project page. Check out ours anytime Tutorials, codes and notebooks for github pages. Also, please stay tuned for us twitter And don’t forget to join us 100K+ ml reddit And subscribe Our newsletter.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex datasets into actionable insights.