Top 6 OCR (Optical Character Recognition) Models/Systems Comparison in 2025

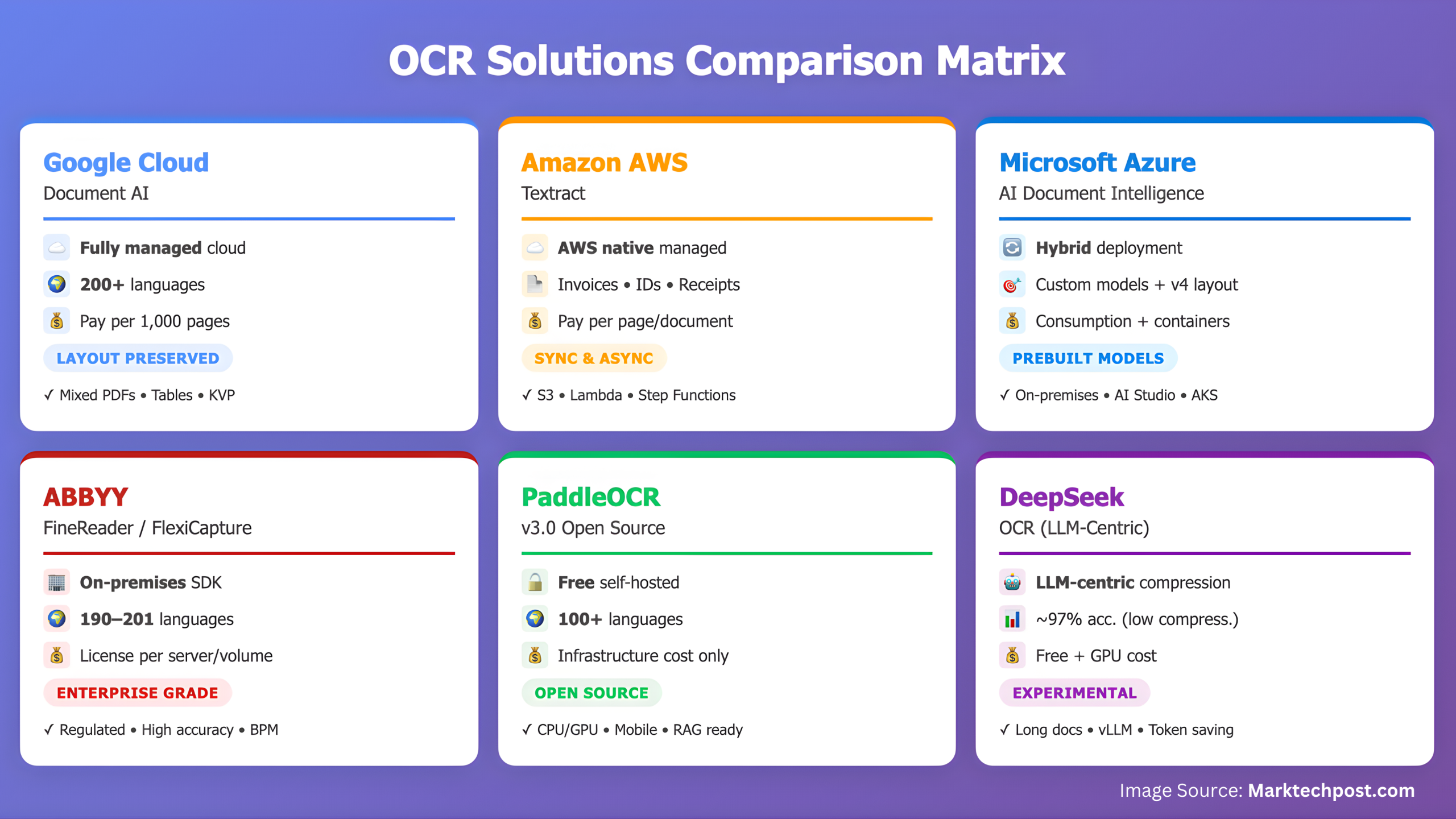

Optical character recognition has moved from plain text extraction to document intelligence. Modern systems must read scanned and digital PDFs, preserve layout, detect tables, extract key-value pairs, and work with multiple languages all at once. Many teams now also want OCR to feed data directly into RAG and proxy pipelines. By 2025, 6 systems will cover most real-world workloads:

- Google Cloud Document Artificial Intelligence, Enterprise Document OCR

- amazon text

- Microsoft Azure AI Document Intelligence

- ABBYY FineReader engine and FlexiCapture

- Paddle OCR 3.0

- DeepSeek OCR, Contexts optical compression

The purpose of this comparison is not to rank them based on a single metric, as they target different constraints. The goal is to show which system to use for a given document volume, deployment model, language set, and downstream AI stack.

Evaluation dimension

We compare on 6 stable dimensions:

- Core OCR quality Scans, photos and digital PDFs.

- layout and structure Tables, key-value pairs, selection tags, reading order.

- Language and handwriting coverage.

- Deployment model Fully managed, containerized, local, self-hosted.

- Integration Owns LLM, RAG and IDP tools.

- cost of scale.

1. Google Cloud Document AI, Enterprise Document OCR

Google’s Enterprise Document OCR can take PDFs and images (whether scanned or digital) and return the text with layout, tables, key-value pairs and selection markup. It also offers handwriting recognition in 50 languages and can detect math and font styles. This is important for financial statements, educational forms and archives. The output is structured JSON and can be sent to Vertex AI or any RAG system.

Advantages

- High-quality OCR on business documents.

- Powerful layout diagram and table detection.

- A pipeline for digital and scanned PDFs that makes ingestion simple.

- Enterprise grade, with IAM and data residency.

limit

- This is a metered Google Cloud service.

- Custom document types still need to be configured.

When using Your data is already on Google Cloud, or you must preserve the layout for subsequent LLM stages.

Textract provides two API channels, synchronous for small documents and asynchronous for large multi-page PDFs. It extracts text, tables, forms, signatures and returns them as blocks with relationships. AnalyzeDocument 2025 can also answer queries on the page, streamlining invoice or claim extraction. Integrations with S3, Lambda, and Step Functions make it easy to turn Textract into an ingestion pipeline.

Advantages

- Reliable table and key value extraction for receipts, invoices and insurance policies.

- Clear synchronization and batching models.

- Tight AWS integration for serverless and IDP on S3.

limit

- Image quality has a visible impact, so camera uploads may require pre-processing.

- Customization is more limited than Azure custom models.

- Locked to AWS.

When using The workload is already in AWS and you need structured JSON out of the box.

3. Microsoft Azure AI document intelligence

Azure’s service, renamed Form Recognizer, combines OCR, generic layouts, pre-built models and custom neural or template models. The 2025 release adds layout and read containers so enterprises can run the same model locally. The layout model extracts text, tables, selection marks and document structure, intended for further processing by the LL.M.

Advantages

- First-class custom document model for business forms.

- Containers for mixing and air-gapped deployment.

- Pre-built mockups of invoices, receipts and ID documents.

- Clean JSON output.

limit

- Some non-English documents may still be slightly less accurate than ABBYY.

- Pricing and throughput must be planned as it remains a cloud-first product.

When using You need to teach your own templates to the system, or when you are a Microsoft store and want to use the same model in Azure and on-premises.

4.ABBYY FineReader engine and FlexiCapture

ABBYY will remain relevant in 2025 for three reasons: accuracy of printed documents, very broad language coverage, and deep control over preprocessing and partitioning. Current Engine and FlexiCapture products support 190 languages and more, export structured data, and can be embedded in Windows, Linux and VM workloads. ABBYY is also strong in regulated areas where data cannot leave the premises.

Advantages

- The recognition quality of scanned contracts, passports, old documents is very high.

- The largest set of languages in this comparison.

- FlexiCapture can be adapted to the clutter of repetitive documents.

- Mature SDK.

limit

- Licensing costs are higher than open source.

- Scene text based on deep learning is not the focus.

- Scaling to hundreds of nodes requires engineering.

When using You have to run locally, you have to handle multiple languages, or you have to pass a compliance audit.

5.PaddleOCR 3.0

PaddleOCR 3.0 is an Apache-licensed open source toolkit designed to bridge images and PDFs to LLM-ready structured data. It comes with PP OCRv5 for multi-language recognition, PP StructureV3 for document parsing and table reconstruction, and PP ChatOCRv4 for key information extraction. It supports over 100 languages, runs on CPUs and GPUs, and has mobile and edge versions.

Advantages

- Free and open with no per page fees.

- Runs fast on GPUs and can be used at the edge.

- Covers detection, identification and structure within a project.

- Active community.

limit

- You must deploy, monitor, and update it.

- For European or financial layouts you often need post-processing or fine-tuning.

- Safety and durability are your responsibility.

When using You want full control, or you want to build a self-hosted document intelligence service for your LLM RAG.

6. DeepSeek OCR, contextual optical compression

DeepSeek OCR will be released in October 2025. It’s not classic OCR. It is an LLM-centric visual language model that compresses long texts and documents into high-resolution images and then decodes them. Public model cards and blogs report that the decoding accuracy is about 97% at 10x compression and about 60% at 20x compression. It is MIT licensed, built around the 3B decoder, and is already supported in vLLM and Hugging Face. This is interesting for teams that want to reduce the cost of tokens before applying for LLM.

Advantages

- Self-hosted, GPU ready.

- Ideal for long contexts and mixed text plus tables, since compression occurs before decoding.

- Open license.

- Perfect for modern proxy stacks.

limit

- There are currently no standard public benchmarks against which Google or AWS can be compared, so enterprises must run their own tests.

- Requires a GPU with sufficient VRAM.

- The accuracy depends on the selected compression ratio.

When using You want OCR to be optimized for LLM processes rather than archive digitization.

head to head comparison

| feature | Google Cloud Document AI (Enterprise Document OCR) | amazon text | Azure AI Document Intelligence | ABBYY FineReader Engine/FlexiCapture | Paddle OCR 3.0 | Deep Thought OCR |

|---|---|---|---|---|---|---|

| core mission | OCR for scanned and digital PDFs, returns text, layout, tables, KVP, selection marks | OCR for text, tables, forms, IDs, invoices, receipts with synchronous and asynchronous API | OCR plus pre-built and custom models, layouts, local containers | High-precision OCR and document capture for large multi-language local workloads | Open source OCR and document parsing, PP OCRv5, PP StructureV3, PP ChatOCRv4 | LLM-centric OCR that compresses document images and decodes them for long-context AI |

| text and layout | block, paragraph, line, word, symbol, table, key-value pair, select tag | Text, relationships, tables, forms, query responses, loan analysis | Text, tables, KVP, selection tags, graphics extraction, structured JSON, v4 layout model | Partitioning, tables, form fields, classification through FlexiCapture | StructureV3 rebuilds table and document hierarchies, KIE module is available | Reconstruct content after optical compression, suitable for long pages that require partial evaluation |

| handwriting | Printed and handwritten versions in 50 languages | Handwriting and free text | Reading and layout models support handwriting | Prints very well and allows for handwriting via capture templates | Supported, domain adjustment may be required | Depends on image and compression ratio, not yet benchmarked with cloud |

| language | More than 200 OCR languages, 50 handwriting languages | Main business language, invoice, ID card, receipt | Main business language, extended in v4.x | 190–201 languages Depending on version, the widest in this table | 100+ languages included in v3.0 stack | Multilingual via VLM decoder, good coverage but not exhaustive, tested by project |

| deploy | Fully managed Google Cloud | Fully managed AWS, synchronous and asynchronous jobs | Hosted Azure services and local read and layout containers (2025) | Local, virtual machine, customer cloud, SDK-centric | Self-hosted, CPU, GPU, edge, mobile | Self-hosted, GPU, vLLM ready, License verification |

| integration path | Export structured JSON to Vertex AI, BigQuery, RAG pipelines | Native to S3, Lambda, Step Functions, AWS IDP | Azure AI Studio, Logic Apps, AKS, custom models, containers | BPM, RPA, ECM, IDP platform | Python pipeline, open RAG stack, custom documentation service | Hope to first reduce the token’s LLM and proxy stack, support vLLM and HF |

| cost model | Pay per 1,000 pages, volume discounts | Pay per page or document, AWS billing | Consumption-based licensing for running containers locally | Commercial license, per server or per volume | Free, infrastructure only | Free repository, GPU cost, license confirmation |

| most suitable | Mix scanned and digital PDFs on Google Cloud, preserving layout | AWS extracts invoices, receipts, loan packages at scale | Microsoft Store requiring custom models and hybrid models | Supervised, multilingual, local processing | Self-hosted document intelligence for LLM and RAG | Long document LLM processes requiring optical compression |

When to use what

- Cloud IDP on invoices, receipts, medical forms: Amazon Textract or Azure Document Intelligence.

- Hybrid scans and digital PDFs for banks and telcos on Google Cloud: Google Document AI enterprise document OCR.

- Cloud-free government archives or publishers in over 150 languages: ABBYY FineReader engine and FlexiCapture.

- Startups or media companies build their own RAG on PDF: Paddle OCR 3.0.

- LLM platforms that wish to reduce context before inference: DeepSeek OCR.

Google Document AI, Amazon Textract, and Azure AI Document Intelligence all offer layout-aware OCR, outputting tables, key-value pairs, and selection tags as structured JSON, while ABBYY FineReader Engine 12 R7 and FlexiCapture export structured data in XML and new JSON formats, supporting 190 to 201 languages for native processing. PaddleOCR 3.0 provides Apache-licensed PP OCRv5, PP StructureV3 and PP ChatOCRv4 for self-hosted document parsing. DeepSeek OCR reports decoding accuracy of 97% at less than 10x compression and around 60% at 20x compression, so enterprises must run local benchmarks before rolling it out in production workloads. Overall, OCR in 2025 is ranked first in document intelligence and second in recognition.

refer to:

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.