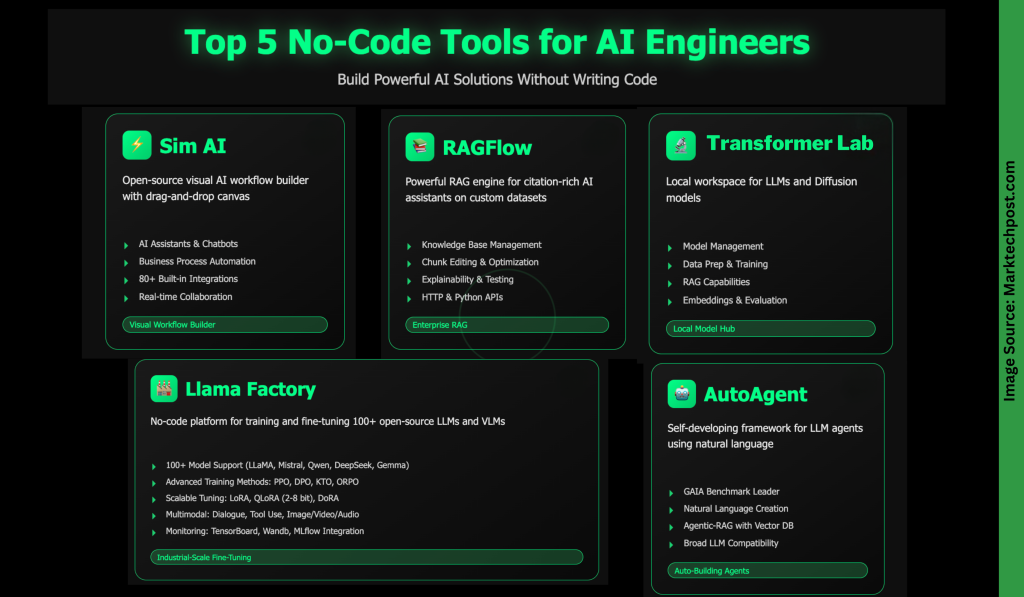

Top 5 No-Code Tools for AI Engineers/Developers

In today’s AI-driven world, no-code tools are changing how people create and deploy intelligent applications. They let anyone, even without coding expertise, build solutions quickly and efficiently. From developing enterprise-grade RAG systems to designing multi-agent workflows or fine-tuning hundreds of LLMs, these platforms greatly reduce development time and effort. In this article, we will explore five powerful no-code tools that make building AI solutions faster and more accessible.

Sim AI is an open-source platform for visually building and deploying AI agent workflows without coding. Using its drag-and-drop canvas, you can connect AI models, APIs, databases, and business tools to create:

- AI assistants and chatbots: Agents that search the web, access calendars, send emails, and interact with business applications.

- Business Process Automation: Simplify tasks such as data entry, report creation, customer support, and content generation.

- Data processing and analysis: Extract insights, analyze datasets, create reports and synchronize data across systems.

- API Integration Workflow: Orchestrate complex logic, unify services and manage event-driven automation.

Key Features:

- Visual canvas with “smart blocks” (AI, API, logic, output).

- Multiple triggers (chat, REST API, webhooks, scheduler, Slack/GitHub events).

- Real-time team collaboration with permission controls.

- 80+ built-in integrations (AI models, communication tools, productivity applications, development platforms, search services, and databases).

- MCP (Model Context Protocol) support for custom integrations.
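To make the "smart blocks" idea concrete, here is a minimal Python sketch of what a visual canvas conceptually wires together: a trigger payload flowing through an ordered chain of AI, logic, and output blocks. This is not Sim AI's API. The `Block` class, `run_workflow` function, and the stubbed model block are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


# Hypothetical illustration only: none of these names come from Sim AI.
@dataclass
class Block:
    name: str
    run: Callable[[Dict[str, Any]], Dict[str, Any]]


def run_workflow(blocks: List[Block], payload: Dict[str, Any]) -> Dict[str, Any]:
    """Pass a trigger payload through each block in order, merging outputs."""
    state = dict(payload)
    for block in blocks:
        state.update(block.run(state))
    return state


def fake_model_block(state: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for an AI block; a real one would call a language model.
    return {"summary": state["message"][:20].strip() + "..."}


def logic_block(state: Dict[str, Any]) -> Dict[str, Any]:
    # Simple branching condition on the incoming message.
    return {"urgent": "urgent" in state["message"].lower()}


def output_block(state: Dict[str, Any]) -> Dict[str, Any]:
    # Choose where the result would be delivered.
    return {"destination": "#alerts" if state["urgent"] else "#general"}


workflow = [
    Block("ai", fake_model_block),
    Block("logic", logic_block),
    Block("output", output_block),
]
result = run_workflow(workflow, {"message": "URGENT: the nightly report failed to build"})
print(result["destination"])  # prints "#alerts"
```

A no-code canvas hides this plumbing: each block on screen corresponds to one step, and the connecting lines define the order in which state is passed along.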

Deployment Options:

- Cloud-hosted (managed infrastructure with scaling and monitoring).

- Self-hosted (via Docker, with local model support for data privacy).

RAGFlow is a powerful retrieval-augmented generation (RAG) engine that helps you build grounded, citation-backed AI assistants on top of your own datasets. It runs on x86 CPUs or NVIDIA GPUs (with optional ARM builds) and provides full or slim Docker images for quick deployment. After spinning up a local server, you can connect an LLM, via an API or a local runtime such as Ollama, to handle chat, embedding, or image-to-text tasks. RAGFlow supports most popular language models and lets you set default models or customize them for each assistant.

Key features include:

- Knowledge base management: Upload and parse files (PDF, Word, CSV, images, slides, and more), select an embedding model, and organize content for efficient retrieval.

- Chunk editing and optimization: Inspect parsed chunks, add keywords, or manually adjust content to improve search accuracy.

- AI Chat Assistant: Create chats linked to one or more knowledge bases, configure fallback responses, and fine-tune prompts or model settings.

- Explainability and testing: Use built-in tools to verify retrieval quality, monitor performance, and view real-time citations.

- Integration and extensibility: Use the HTTP and Python APIs for application integration, with an optional sandbox for secure code execution inside chats.
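The retrieve-then-cite pattern that a RAG engine automates can be sketched in a few lines of plain Python. The example below is a toy, assuming a bag-of-words cosine similarity in place of a real embedding model; the `retrieve` and `build_prompt` helpers and the sample passages are hypothetical and are not RAGFlow's API.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, passages, top_k=2):
    """Return up to top_k passages with non-zero similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(p["text"]))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:top_k] if score > 0]


def build_prompt(query, hits):
    """Number each retrieved passage so the model can cite it as [n]."""
    context = "\n".join(
        f"[{i}] ({h['source']}) {h['text']}" for i, h in enumerate(hits, 1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\nQuestion: {query}"
    )


# Hypothetical sample knowledge base.
passages = [
    {"source": "handbook.pdf", "text": "Employees accrue 20 vacation days per year."},
    {"source": "it-policy.docx", "text": "Laptops must be encrypted and locked when unattended."},
    {"source": "handbook.pdf", "text": "Expense reports are due by the fifth of each month."},
]

query = "How many vacation days do employees get?"
hits = retrieve(query, passages)
prompt = build_prompt(query, hits)
print(prompt)
```

Only the vacation passage shares words with the query, so it is the single source placed in the prompt. A production system would swap the word-count vectors for learned embeddings and a vector index, but the flow of retrieving, numbering, and citing stays the same.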

Transformer Lab is a free, open-source workspace for large language models (LLMs) and diffusion models, designed to run on your local machine (whether that is a GPU, a TPU, or an Apple M-series Mac) or in the cloud. It lets you download, chat with, and evaluate LLMs, generate images using diffusion models, and compute embeddings, all from one flexible environment.

Key features include:

- Model management: Download and interact with LLMs, or generate images using state-of-the-art diffusion models.

- Data preparation and training: Create datasets and fine-tune or train models, including support for RLHF and preference optimization.

- Retrieval-augmented generation (RAG): Power intelligent, grounded conversations with your own documents.

- Embedding and evaluation: Compute embeddings and evaluate model performance across different inference engines.

- Extensibility and community: Build plugins, contribute to the core application, and collaborate through the active Discord community.
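For readers curious what preference-based training optimizes under the hood, here is a small sketch of the Direct Preference Optimization (DPO) loss for a single chosen/rejected pair. It is a generic illustration of the published formula, not Transformer Lab code; the `dpo_loss` function name, the `beta` value, and the log-probabilities are assumptions picked for the example.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities.

    The loss is -log(sigmoid(beta * margin)), where the margin measures how
    much more the policy prefers the chosen answer than the reference does.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(x)) rewritten as log(1 + exp(-x)).
    return math.log1p(math.exp(-beta * margin))


# Hypothetical log-probabilities for illustration.
no_preference = dpo_loss(-11.0, -11.0, -11.0, -11.0)  # policy equals reference
learned = dpo_loss(-10.0, -12.0, -11.0, -11.0)        # policy favors the chosen answer

print(f"loss with no preference: {no_preference:.4f}")
print(f"loss after learning:     {learned:.4f}")
```

When the policy matches the reference, the loss equals ln 2; it drops as the policy raises the chosen answer's likelihood relative to the rejected one. A GUI-driven trainer exposes this as a training-method choice plus a few hyperparameters such as `beta`.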

LLaMA-Factory is a powerful no-code platform for training and fine-tuning open-source large language models (LLMs) and vision-language models (VLMs). It supports over 100 models, multimodal fine-tuning, advanced optimization algorithms, and scalable resource configurations. Designed for researchers and practitioners, it offers extensive tooling for pre-training, supervised fine-tuning, reward modeling, and reinforcement learning methods such as PPO and DPO, along with easy experiment tracking and faster inference.

Key highlights include:

- Extensive model support: Works with LLaMA, Mistral, Qwen, DeepSeek, Gemma, ChatGLM, Phi, Yi, Mixtral-MoE, and others.

- Training methods: Supports continuous pre-training, multimodal SFT, reward modeling, PPO, DPO, KTO, ORPO, and more.

- Scalable tuning options: Full-tuning, freeze-tuning, LoRA, QLoRA (2-8 bit), OFT, DoRA, and other resource-efficient techniques.

- Advanced algorithms and optimizations: Includes GaLore, BAdam, APOLLO, Muon, FlashAttention-2, RoPE scaling, NEFTune, rsLoRA, and others.

- Tasks and modalities: Handles dialogue, tool use, image/video/audio understanding, visual grounding, and more.

- Monitoring and inference: Integrates with LlamaBoard, TensorBoard, Wandb, MLflow, and SwanLab, plus fast inference via an OpenAI-style API, a Gradio UI, or vLLM/SGLang workers.

- Flexible infrastructure: Compatible with PyTorch, Hugging Face Transformers, DeepSpeed, and bitsandbytes, and supports both CPU and GPU setups with memory-efficient quantization.
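A quick back-of-the-envelope calculation shows why LoRA counts as a resource-efficient technique. LoRA freezes a weight matrix of shape `d_out x d_in` and trains two small matrices of shapes `d_out x r` and `r x d_in` instead. The sketch below compares parameter counts; the 4096 x 4096 layer size and rank 8 are assumed values for illustration, not settings taken from LLaMA-Factory.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for the two low-rank LoRA matrices."""
    return rank * (d_in + d_out)


# Assumed example: one 4096 x 4096 projection layer adapted with rank 8.
d_in, d_out, rank = 4096, 4096, 8

full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)

print(full)                   # 16777216
print(lora)                   # 65536
print(f"{lora / full:.2%}")   # 0.39%
```

For this layer, LoRA trains about 0.39% of the parameters that full fine-tuning would, which is why it fits on much smaller GPUs. QLoRA goes further by storing the frozen base weights in a quantized format.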

AutoAgent is a fully automated, self-developing framework that lets you create and deploy LLM-powered agents using natural language alone. Designed to simplify complex workflows, it allows you to build, customize, and run intelligent tools and assistants without writing a single line of code.

Key features include:

- High performance: Achieves top-tier results on the GAIA benchmark, rivaling advanced deep research agents.

- Effortless agent and workflow creation: Build tools, agents, and workflows with simple natural language prompts, no coding required.

- Agentic RAG with a native vector database: Includes a self-managing vector database, described as offering better retrieval than traditional solutions such as LangChain.

- Broad LLM compatibility: Integrates seamlessly with leading models from OpenAI, Anthropic, DeepSeek, vLLM, Grok, Hugging Face, and more.

- Flexible interaction modes: Supports both function calling and ReAct-style reasoning for versatile use cases.

- Lightweight and extensible: A dynamic personal AI assistant that is easy to customize and extend while remaining resource-efficient.
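The tool-calling loop that agent frameworks automate can be sketched as follows: the model either requests a tool or returns a final answer, and the runtime executes tools and feeds results back. This is a generic illustration, not AutoAgent's implementation. The `scripted_model` function is a hypothetical stand-in for a real LLM, and the JSON message format is an assumption made for the example.

```python
import json


def add(a, b):
    """A trivial tool the agent is allowed to call."""
    return a + b


TOOLS = {"add": add}


def scripted_model(history):
    """Stand-in for an LLM: requests one tool call, then gives a final answer."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return json.dumps({"action": "add", "args": {"a": 2, "b": 3}})
    return json.dumps({"final": f"The sum is {tool_results[-1]['content']}."})


def run_agent(question, model, max_steps=5):
    """Alternate between model decisions and tool executions until done."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = json.loads(model(history))
        if "final" in reply:
            return reply["final"]
        # Execute the requested tool and record the observation.
        result = TOOLS[reply["action"]](**reply["args"])
        history.append({"role": "tool", "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is 2 + 3?", scripted_model)
print(answer)  # The sum is 5.
```

With a natural-language agent builder, the tool registry and the loop are generated from a prompt, so the user describes the goal rather than writing this scaffolding by hand.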

I am a civil engineering graduate (2022) from Jamia Millia Islamia, New Delhi, with a keen interest in data science, especially neural networks and their applications in various fields.