TikTok Researchers Introduce SWE-Perf: The First Benchmark for Repository-Level Code Performance Optimization

Introduction

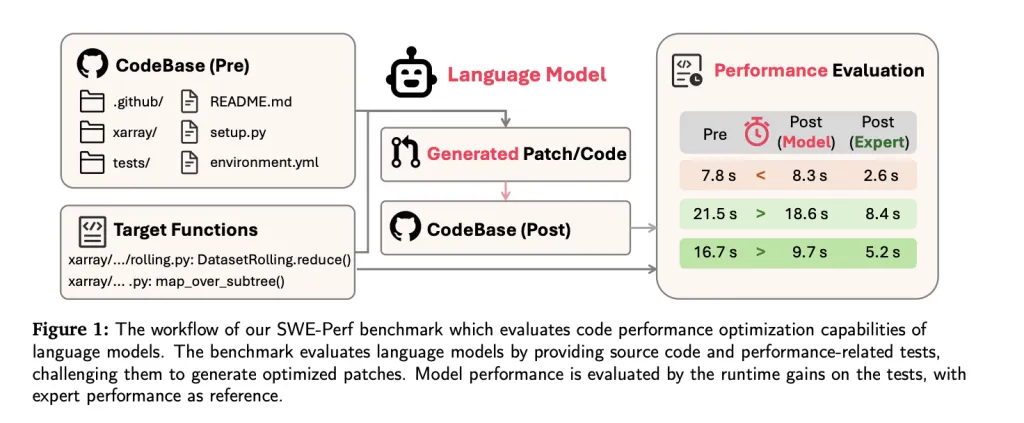

As large language models (LLMs) advance in software engineering tasks, from code generation to bug fixing, performance optimization remains an elusive frontier, especially at the repository level. To bridge this gap, researchers from TikTok and collaborating institutions have introduced SWE-Perf, the first benchmark specifically designed to evaluate the ability of LLMs to optimize code performance in real-world repositories.

Unlike earlier benchmarks that focus on correctness or function-level efficiency (e.g., SWE-Bench, Mercury, EffiBench), SWE-Perf captures the complexity and contextual depth of repository-scale performance tuning. It provides a reproducible, quantitative foundation for studying and improving the performance optimization capabilities of modern LLMs.

Why SWE-Perf Is Needed

Real-world codebases are often large, modular, and intricately interdependent. Optimizing them for performance requires an understanding of cross-file interactions, execution paths, and computational bottlenecks, challenges that lie beyond the scope of isolated function-level datasets.

Today's LLMs are largely evaluated on tasks such as syntax correction or small function-level transformations. In production environments, however, performance tuning across a repository can yield much larger system-wide benefits. SWE-Perf is explicitly built to measure LLM capabilities in this setting.

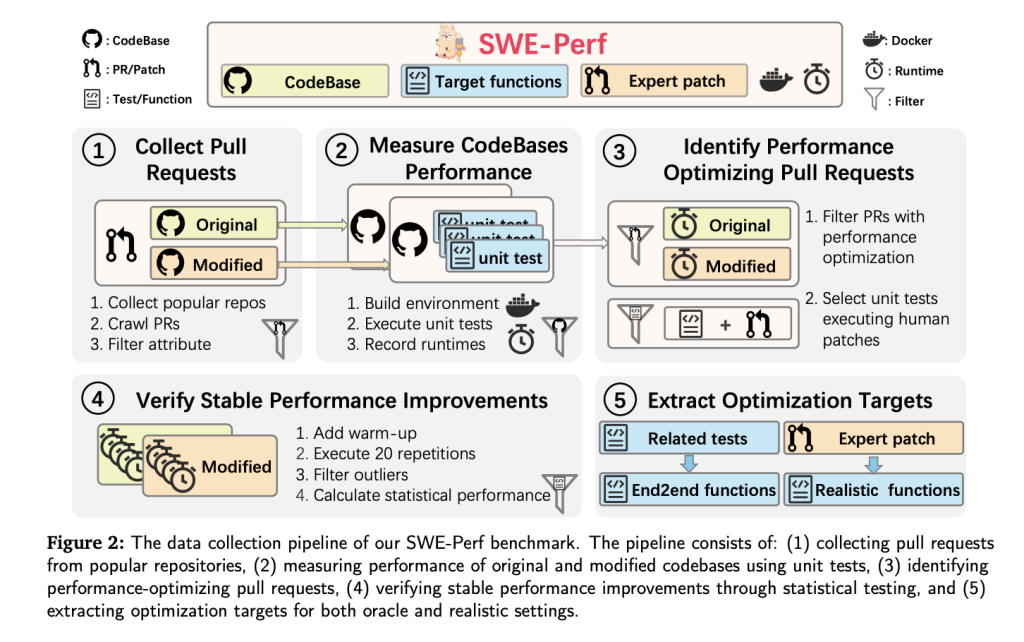

Dataset construction

SWE-Perf is constructed from over 100,000 pull requests across high-profile GitHub repositories. The final dataset covers 9 repositories and includes:

- 140 curated instances that demonstrate measurable and stable performance improvements.

- Complete codebases before and after optimization.

- Target functions categorized as oracle (file-level) or realistic (repo-level).

- Unit tests and Docker environments for reproducible execution and performance measurement.

- Expert-authored patches used as the gold standard.

To ensure validity, each unit test must:

- Pass both before and after the patch.

- Show a statistically significant runtime improvement over 20 repeated runs (Mann-Whitney U test, with the p-value below the chosen significance threshold).

Performance is measured using the minimum performance gain (δ), which isolates the statistically significant improvement attributable to the patch while filtering out measurement noise.
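
The validity check and the δ metric can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the benchmark's actual implementation: the function names (`p_faster`, `minimum_gain`), the grid search over δ, and the significance level `alpha` are all hypothetical, and `alpha=0.05` is only a placeholder. The reading of δ used here is "the largest fraction by which the pre-patch runtimes can be scaled down while the patched runtimes are still significantly faster", which is one plausible interpretation of the description above.

```python
import math
from statistics import NormalDist


def average_ranks(values):
    """Return 1-based ranks (ties share their mean rank) and the tie group sizes."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_sizes = []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share this rank
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def p_faster(after, before):
    """One-sided Mann-Whitney U test using the normal approximation.

    A small p-value suggests runtimes in `after` tend to be lower than in `before`.
    """
    n1, n2 = len(after), len(before)
    ranks, tie_sizes = average_ranks(list(after) + list(before))
    u_after = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    tie_term = sum(t**3 - t for t in tie_sizes) / (n * (n - 1))
    variance = n1 * n2 / 12 * ((n + 1) - tie_term)
    if variance == 0:
        return 1.0  # every measurement identical: no evidence of a speedup
    # +0.5 is the continuity correction for the lower tail.
    z = (u_after - n1 * n2 / 2 + 0.5) / math.sqrt(variance)
    return NormalDist().cdf(z)


def minimum_gain(before, after, alpha=0.05, step=0.01):
    """Largest delta on a grid for which `after` is still significantly
    faster than `before` scaled by (1 - delta). `alpha` is a placeholder."""
    best = 0.0
    for k in range(int(round(1 / step))):
        delta = k * step
        scaled = [t * (1 - delta) for t in before]
        if p_faster(after, scaled) < alpha:
            best = delta
        else:
            break  # stop at the first delta that is no longer significant
    return best


# Synthetic runtimes in seconds, 20 runs each (illustrative data only).
before = [10.0 + 0.01 * i for i in range(20)]
after = [8.0 + 0.01 * i for i in range(20)]
print(p_faster(after, before), minimum_gain(before, after))
```

Scaling the baseline instead of comparing means makes the reported gain conservative: noise that merely shifts a few runs cannot produce a large δ, because the whole distribution has to stay ahead of the scaled baseline.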

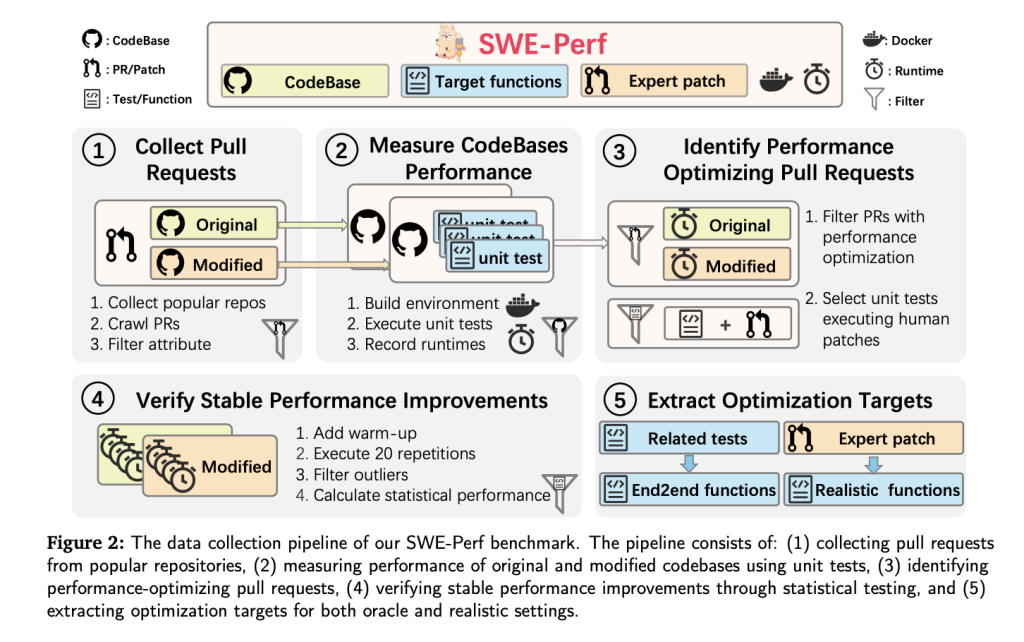

Benchmark Settings: Oracle vs. Realistic

- Oracle setting: The model receives only the target functions and their corresponding files. This setting tests localized optimization skills.

- Realistic setting: The model is given the entire repository and must identify and optimize performance-critical paths on its own. This is a closer analogue to how human engineers work.
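
The difference between the two settings comes down to what the model is allowed to see. The sketch below illustrates that idea only; the `Instance` fields and the `build_context` helper are hypothetical names invented for this example and do not reflect the benchmark's real data format.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """Hypothetical container for one benchmark instance."""
    repo_files: dict        # path -> source text for the whole repository
    target_files: list      # paths of the files containing the target functions
    target_functions: list  # names of the functions to optimize


def build_context(instance, setting):
    """Return the files and hints a model would see under each setting."""
    if setting == "oracle":
        # Only the files that contain the target functions, plus the targets.
        files = {path: instance.repo_files[path] for path in instance.target_files}
        return {"files": files, "targets": list(instance.target_functions)}
    if setting == "realistic":
        # The whole repository, with no hint about where the bottleneck is.
        return {"files": dict(instance.repo_files), "targets": []}
    raise ValueError(f"unknown setting: {setting!r}")


example = Instance(
    repo_files={"pkg/core.py": "def slow(): ...", "pkg/util.py": "def helper(): ..."},
    target_files=["pkg/core.py"],
    target_functions=["slow"],
)
print(sorted(build_context(example, "oracle")["files"]))
print(sorted(build_context(example, "realistic")["files"]))
```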

Evaluation Metrics

SWE-Perf defines a three-tier evaluation framework and reports each metric independently:

- Apply: Can the model-generated patch be applied cleanly?

- Correctness: Does the patch preserve functional integrity (all unit tests pass)?

- Performance: Does the patch yield a measurable runtime improvement?

The metrics are not aggregated into a single score, allowing for a more nuanced evaluation of the tradeoff between syntactic correctness and performance improvement.
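
Reporting the three tiers separately might look like the following sketch. It assumes, as an illustration rather than a statement of the benchmark's scoring rules, that a patch which fails to apply or breaks a test contributes zero gain; `PatchResult`, its fields, and `report` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PatchResult:
    """Hypothetical outcome of evaluating one model-generated patch."""
    applied: bool        # did the patch apply cleanly?
    tests_passed: bool   # did all unit tests pass afterwards?
    gain: float          # measured runtime gain as a fraction (0.10 = 10%)


def report(results):
    """Return the three metrics separately instead of one combined score."""
    total = len(results)
    if total == 0:
        return {"apply": 0.0, "correctness": 0.0, "performance": 0.0}
    applied = [r for r in results if r.applied]
    correct = [r for r in applied if r.tests_passed]
    return {
        "apply": len(applied) / total,
        "correctness": len(correct) / total,
        # Assumption: only applied, correct patches can contribute a gain.
        "performance": sum(r.gain for r in correct) / total,
    }


results = [
    PatchResult(applied=True, tests_passed=True, gain=0.10),
    PatchResult(applied=True, tests_passed=False, gain=0.50),
    PatchResult(applied=False, tests_passed=False, gain=0.0),
    PatchResult(applied=True, tests_passed=True, gain=0.0),
]
print(report(results))
```

Keeping the tiers apart makes it visible when a model produces patches that apply and pass tests yet deliver almost no speedup, a pattern a single blended score would hide.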

Experimental Results

The benchmark evaluates several top-tier LLMs under both the oracle and realistic settings:

| Model | Setting | Performance (%) |
|---|---|---|
| Claude-4-opus | Oracle | 1.28 |
| GPT-4o | Oracle | 0.60 |
| Gemini-2.5-Pro | Oracle | 1.48 |
| Claude-3.7 (Agentless) | Realistic | 0.41 |
| Claude-3.7 (OpenHands) | Realistic | 2.26 |
| Expert (Human Patch) | – | 10.85 |

Notably, even the best-performing LLM configurations fall well short of human-level performance. The agent-based approach built on Claude-3.7-Sonnet outperforms the other configurations in the realistic setting, but it still lags behind expert-crafted optimizations.

Key Observations

- Agent-based frameworks are better suited to complex, multi-step optimization, outperforming direct model prompting and pipeline-based approaches such as Agentless.

- Performance degrades as the number of target functions increases; LLMs struggle with broader optimization scopes.

- LLMs show limited scalability in long-runtime scenarios, where expert patches continue to deliver performance gains.

- Patch analysis shows that LLMs focus on low-level code structures (e.g., imports, environment setup), while experts target high-level semantic abstractions when tuning performance.

Conclusion

SWE-Perf represents a pivotal step toward measuring and improving the performance optimization capabilities of LLMs in realistic software engineering workflows. It reveals a significant capability gap between existing models and human experts and lays a solid foundation for future research on repository-scale performance tuning. As LLMs evolve, SWE-Perf can serve as a north star, guiding them toward practical, production-ready software enhancement at scale.

Check out the paper, the GitHub page, and the project page. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform offering in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, demonstrating its popularity among its audience.