This AI Paper Introduces ReaGAN: A Graph Agentic Network That Gives Nodes Autonomous Planning and Global Semantic Retrieval

How do we make each node in a graph its own intelligent agent, capable of personalized reasoning, adaptive retrieval, and autonomous decision-making? This is the central question explored by a team of researchers at Rutgers University. The team introduced ReaGAN, a Retrieval-augmented Graph Agentic Network that reimagines each node as an independent reasoning agent.

Why traditional GNNs struggle

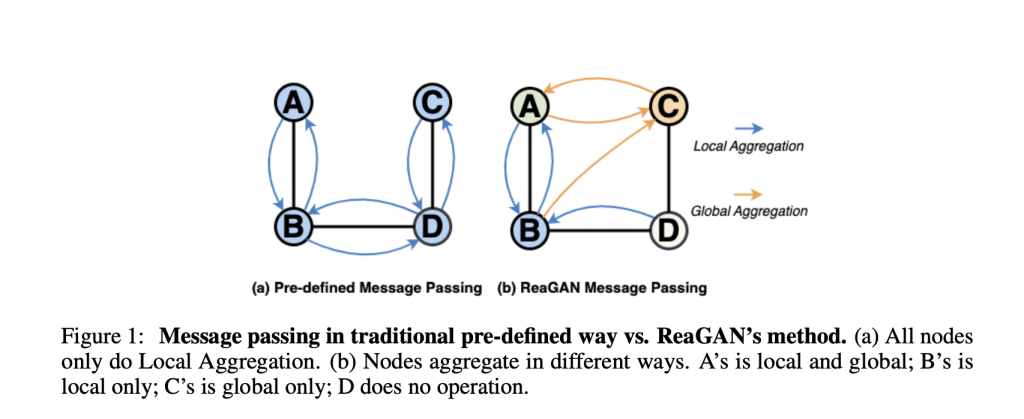

Graph neural networks (GNNs) are the backbone of many tasks, such as citation network analysis, recommendation systems, and scientific categorization. Traditionally, GNNs operate through static, homogeneous message passing: each node aggregates information from its direct neighbors using the same predefined rules.
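To make "the same predefined rule for every node" concrete, here is a minimal sketch of one round of uniform message passing. It uses a plain mean over a node and its neighbors, which is a simplification chosen for illustration (real GNN layers such as GCN add learned weights and normalization); the function name and the toy graph are assumptions, not taken from the paper.

```python
from typing import Dict, List


def mean_aggregate(features: Dict[str, List[float]],
                   neighbors: Dict[str, List[str]]) -> Dict[str, List[float]]:
    """One round of uniform message passing.

    Every node averages its own feature vector with those of its direct
    neighbors. The rule is identical for all nodes, whether their
    neighborhood is informative or noisy.
    """
    updated = {}
    for node, own in features.items():
        group = [own] + [features[n] for n in neighbors.get(node, [])]
        updated[node] = [
            sum(vec[i] for vec in group) / len(group) for i in range(len(own))
        ]
    return updated


# Toy graph (illustrative): node "a" is linked to "b" and "c".
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
smoothed = mean_aggregate(features, neighbors)
```

Note that no node can opt out of aggregation or look beyond its direct neighbors here, which is exactly the rigidity the two challenges below describe.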

But two ongoing challenges have emerged:

- Node informativeness imbalance: Not all nodes are equal. Some carry rich, useful information, while others are sparse and noisy. When every node is processed identically, valuable signals can be lost, or irrelevant noise can overwhelm useful context.

- Locality limitations: GNNs focus on local structure (information from nearby nodes) and often miss meaningful, semantically similar but distant nodes in the larger graph.

The ReaGAN approach: nodes as autonomous agents

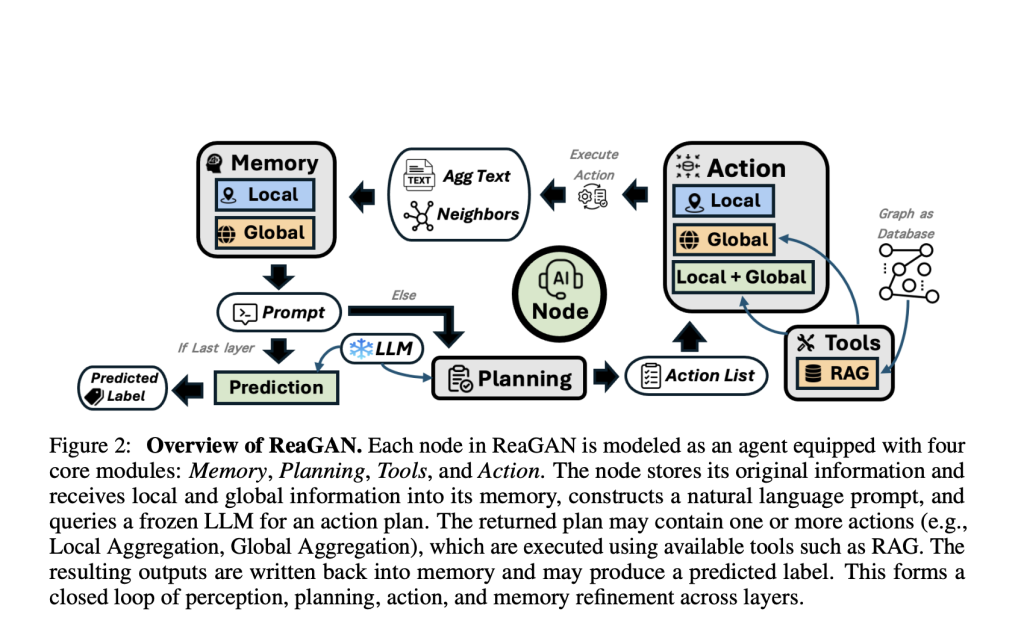

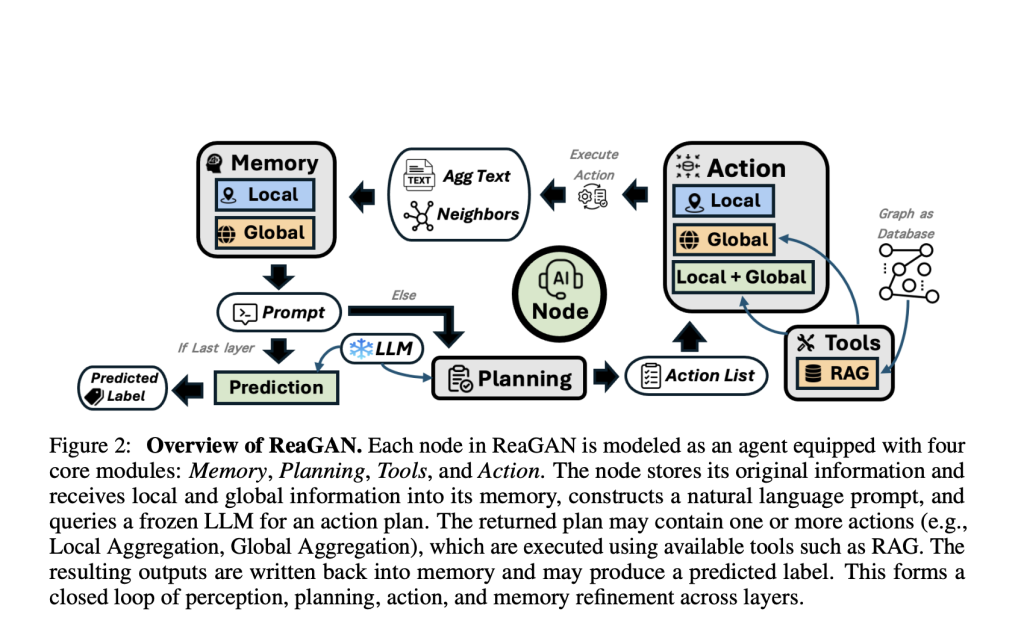

ReaGAN flips the script. Instead of being a passive node, each node becomes an agent that actively plans its next step based on its memory and context. Here is how:

- Agentic planning: Each node interacts with a frozen large language model (LLM), e.g. Qwen2-14B, to dynamically decide its next action ("Should I gather more information? Predict my label? Pause?").

- Flexible actions:

  - Local aggregation: Collect information from direct neighbors.

  - Global aggregation: Retrieve relevant insights from anywhere in the graph using retrieval-augmented generation (RAG).

  - NoOp ("do nothing"): Sometimes the best move is to wait, which avoids information overload or noise.

- Memory matters: Each agent node maintains a private buffer holding its raw text features, its aggregated context, and a set of labeled examples. This allows prompts and reasoning to be tailored at every step.
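The memory buffer and the global aggregation action can be sketched as follows. This is an illustrative sketch under stated assumptions: the `NodeMemory` fields mirror the three items listed above, but the class, the function names, the cosine-similarity ranking, and the toy embedding vectors are all hypothetical choices, not the paper's implementation.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class NodeMemory:
    """Private per-node buffer (field names are hypothetical)."""
    text: str                                                # raw text features
    local_context: List[str] = field(default_factory=list)   # from neighbors
    global_context: List[str] = field(default_factory=list)  # from retrieval
    labeled_examples: List[Tuple[str, str]] = field(default_factory=list)


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve_global(query_id: str,
                    embeddings: Dict[str, List[float]],
                    k: int = 2) -> List[str]:
    """Return the k nodes most similar to the query, wherever they sit
    in the graph. Graph edges are deliberately ignored."""
    query = embeddings[query_id]
    scored = [(cosine(query, vec), nid)
              for nid, vec in embeddings.items() if nid != query_id]
    scored.sort(reverse=True)
    return [nid for _, nid in scored[:k]]


# Toy embeddings (assumed; a real system would embed each node's text).
embeddings = {"p1": [1.0, 0.0], "p2": [0.9, 0.1],
              "p3": [0.0, 1.0], "p4": [0.7, 0.7]}

memory = NodeMemory(text="A paper about graph retrieval.")
memory.global_context.extend(retrieve_global("p1", embeddings, k=2))
```

Because retrieval ranks by semantic similarity rather than adjacency, a node can pull in evidence from distant parts of the graph, which addresses the locality limitation described earlier.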

How does Reagan work?

Here is a simplified breakdown of ReaGAN's workflow:

- Perception: The node gathers context from its own state and memory buffer.

- Planning: A prompt is built (summarizing the node's memory, features, and neighbor information) and sent to the LLM, which recommends the next action.

- Acting: The node can aggregate locally, retrieve globally, predict its label, or take no action. The result is written back to memory.

- Iterating: This reasoning loop runs over several layers, allowing information to be integrated and refined.

- Predicting: In the final stage, the node makes a label prediction, supported by the mix of local and global evidence it has collected.

What makes this novel is that every node decides for itself, asynchronously. There is no global clock and there are no shared parameters forcing uniformity.
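The perceive, plan, act loop for a single node can be sketched as below. To keep the example runnable without a model, the frozen LLM is replaced by a hypothetical rule-based `stub_planner`, and the final prediction is a simple majority vote over collected evidence. Both are stand-ins chosen for illustration; in ReaGAN the planning and prediction are driven by LLM prompts.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def stub_planner(memory: Dict[str, List[str]], step: int, max_steps: int) -> str:
    """Hypothetical stand-in for the frozen LLM: picks the next action
    from the node's current memory."""
    if step == max_steps - 1:
        return "predict"
    if not memory["local"]:
        return "local"
    if not memory["global"]:
        return "global"
    return "noop"


def run_node(node: str,
             neighbors: Dict[str, List[str]],
             retrieved: Dict[str, List[str]],
             known_labels: Dict[str, str],
             max_steps: int = 4) -> Tuple[Optional[str], List[str]]:
    """Run one node's reasoning loop; returns (prediction, action trace)."""
    memory: Dict[str, List[str]] = {"local": [], "global": []}
    trace: List[str] = []
    for step in range(max_steps):
        action = stub_planner(memory, step, max_steps)  # plan
        trace.append(action)
        if action == "local":                            # act: neighbors
            memory["local"].extend(neighbors.get(node, []))
        elif action == "global":                         # act: retrieval
            memory["global"].extend(retrieved.get(node, []))
        elif action == "predict":                        # act: final label
            evidence = memory["local"] + memory["global"]
            votes = Counter(known_labels[n] for n in evidence if n in known_labels)
            prediction = votes.most_common(1)[0][0] if votes else None
            return prediction, trace
        # "noop": leave memory unchanged and move to the next step
    return None, trace


# Toy inputs (assumed): two neighbors, one globally retrieved node.
prediction, trace = run_node(
    "n0",
    neighbors={"n0": ["n1", "n2"]},
    retrieved={"n0": ["n7"]},
    known_labels={"n1": "ML", "n2": "DB", "n7": "ML"},
)
```

Each call to `run_node` is independent of every other node's call, which reflects the asynchronous, per-node decision-making described above.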

Results: Surprisingly strong, even without training

ReaGAN's promise is matched by its results. On classic benchmarks (Cora, Citeseer, Chameleon), it achieves competitive accuracy, often matching or exceeding baseline GNNs, without any supervised training or fine-tuning.

Sample results:

| Model | Cora | Citeseer | Chameleon |
|---|---|---|---|
| GCN | 84.71 | 72.56 | 28.18 |
| GraphSAGE | 84.35 | 78.24 | 62.15 |
| ReaGAN | 84.95 | 60.25 | 43.80 |

ReaGAN uses a frozen LLM for planning and context gathering, which highlights the power of prompt engineering and semantic retrieval.

Key Insights

- Prompt engineering matters: How nodes combine local and global memory in prompts affects accuracy, and the best strategy depends on graph sparsity and label locality.

- Label semantics: Exposing explicit label names can bias the LLM's predictions; anonymizing labels can produce better results.

- Agentic flexibility: ReaGAN's decentralized, node-level reasoning is particularly effective in sparse graphs or graphs with noisy neighborhoods.
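The label-semantics point can be illustrated with a small sketch that replaces class names with neutral identifiers before a prompt is built. The prompt wording, the `Label_i` scheme, and both function names are assumptions made for this example; the paper's actual prompt template is not reproduced here.

```python
from typing import Dict, List, Tuple


def anonymize_labels(names: List[str]) -> Dict[str, str]:
    """Map each real class name to a neutral ID such as 'Label_0'."""
    return {name: f"Label_{i}" for i, name in enumerate(sorted(names))}


def build_prompt(node_text: str,
                 examples: List[Tuple[str, str]],
                 mapping: Dict[str, str]) -> str:
    """Assemble a classification prompt that never shows real label names."""
    lines = ["Classify the target node into one of: "
             + ", ".join(mapping.values())]
    for text, label in examples:
        lines.append(f"Example: {text} -> {mapping[label]}")
    lines.append(f"Target: {node_text} ->")
    return "\n".join(lines)


# Toy data (illustrative only).
mapping = anonymize_labels(["Theory", "Databases"])
prompt = build_prompt(
    "A paper about query optimization.",
    [("Paper on relational indexing", "Databases"),
     ("Paper on complexity bounds", "Theory")],
    mapping,
)
```

With anonymized IDs, the model has to rely on the labeled examples and retrieved context rather than on whatever associations a class name like "Databases" carries from pretraining.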

Summary

ReaGAN sets a new standard for agent-based graph learning. As LLMs and retrieval-augmented architectures grow more sophisticated, we may soon see graphs in which each node is not just a number or an embedding, but an adaptive, context-aware reasoning agent ready to tackle the challenges of tomorrow's data networks.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields such as biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.