Thinking Machines Launches Tinker: A Low-Level Training API That Abstracts Distributed LLM Fine-Tuning Without Hiding the Knobs

Thinking Machines has released Tinker, a Python API that lets researchers and engineers write training loops locally while the platform executes them on a managed, distributed GPU cluster. The pitch is narrow and technical: keep full control of data, objectives, and optimization steps; hand off scheduling, fault tolerance, and multi-node orchestration. The service is in private beta with a waitlist and starts free, moving to usage-based pricing “in the next few weeks”.

OK, but what is it?

Tinker exposes low-level primitives, not a high-level “train()” wrapper. Core calls include forward_backward, optim_step, save_state, and sample, giving users direct control over gradient computation, optimizer stepping, checkpointing, and evaluation/inference inside custom loops. A typical workflow: instantiate a LoRA training client against a base model (e.g., Llama-3.2-1B), iterate forward_backward/optim_step, persist state, and then obtain a sampling client to evaluate or export weights.
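To make the shape of such a loop concrete, here is a minimal, self-contained sketch. It does not use the Tinker SDK: ToyTrainingClient is a hypothetical local stand-in that only borrows the four primitive names from the article, and its signatures (a list of (x, y) pairs, a learning_rate argument, a dict checkpoint) are assumptions made for illustration. The real client's arguments and return types may well differ.

```python
# Toy stand-in, NOT the Tinker SDK: it only mirrors the four primitive names
# from the article so the shape of a hand-written training loop is visible.
class ToyTrainingClient:
    """Fits y = w * x + b with plain SGD, one primitive call at a time."""

    def __init__(self):
        self.w, self.b = 0.0, 0.0
        self._grad_w, self._grad_b = 0.0, 0.0

    def forward_backward(self, batch):
        """Compute mean squared error on the batch and accumulate gradients."""
        n = len(batch)
        loss = 0.0
        for x, y in batch:
            err = (self.w * x + self.b) - y
            loss += err * err / n
            self._grad_w += 2.0 * err * x / n
            self._grad_b += 2.0 * err / n
        return loss

    def optim_step(self, learning_rate):
        """Apply one SGD update, then clear the accumulated gradients."""
        self.w -= learning_rate * self._grad_w
        self.b -= learning_rate * self._grad_b
        self._grad_w, self._grad_b = 0.0, 0.0

    def save_state(self):
        """Return a checkpoint as a plain dict."""
        return {"w": self.w, "b": self.b}

    def sample(self, x):
        """Run inference with the current parameters."""
        return self.w * x + self.b


def train(client, batch, steps, learning_rate):
    """User-owned loop: the caller decides when to step and checkpoint."""
    losses = []
    for _ in range(steps):
        losses.append(client.forward_backward(batch))
        client.optim_step(learning_rate)
    return losses, client.save_state()


data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # samples of y = 2x + 1
client = ToyTrainingClient()
losses, checkpoint = train(client, data, steps=500, learning_rate=0.05)
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.6f}")
print(f"checkpoint {checkpoint}, sample(4.0) = {client.sample(4.0):.3f}")
```

The point of the split is that the loop body belongs to the user: gradient accumulation across several forward_backward calls, a custom schedule for optim_step, or checkpointing every N steps are all changes to train(), not to the client.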

Key Features

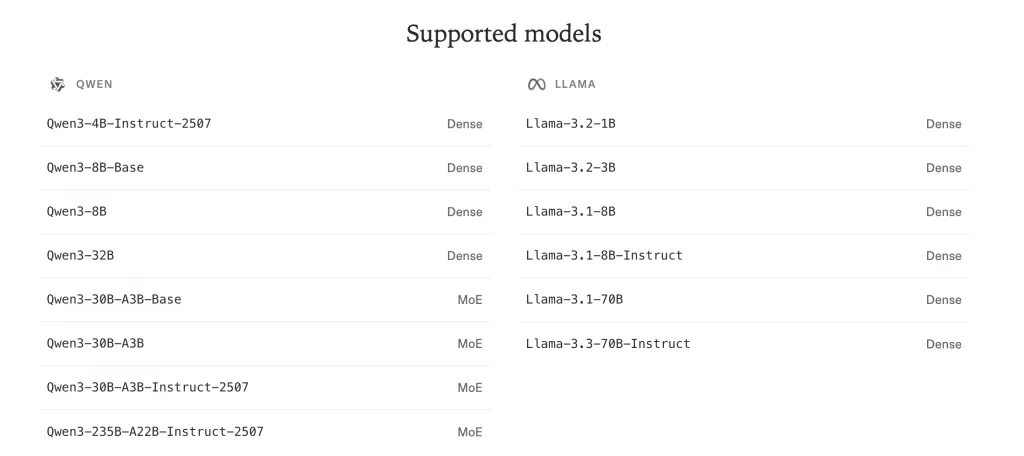

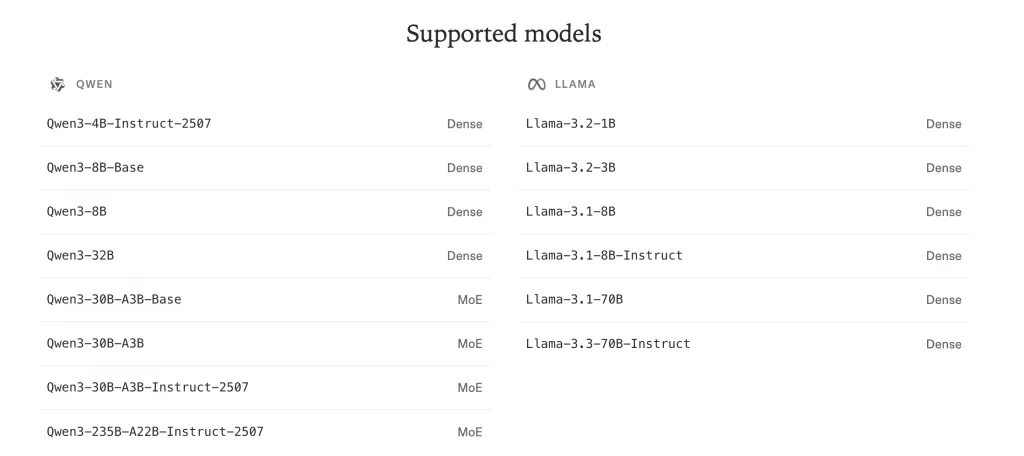

- Open-weights model coverage. Fine-tune model families such as Llama and Qwen, including large mixture-of-experts variants (e.g., Qwen3-235B-A22B).

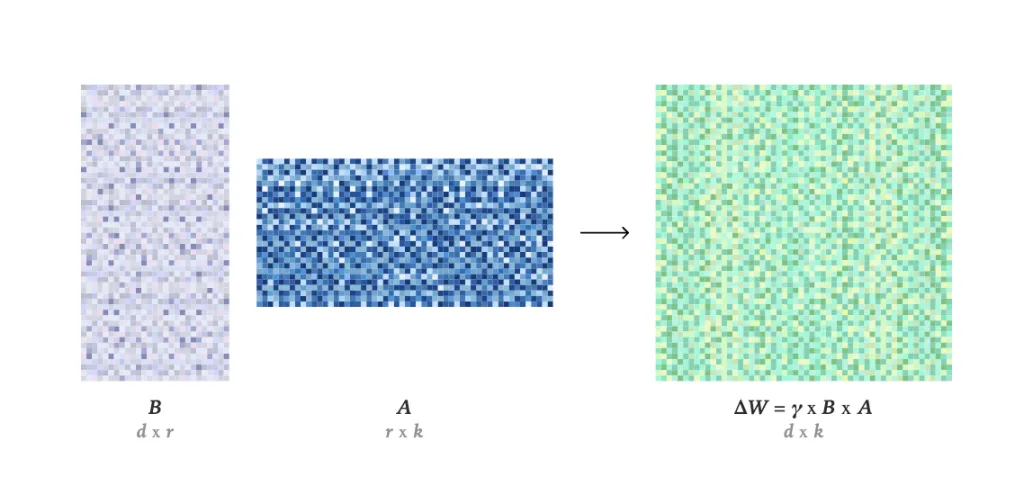

- LoRA-based post-training. Tinker implements low-rank adaptation (LoRA) rather than full fine-tuning; their technical note (“LoRA Without Regret”) argues that LoRA can match full fine-tuning for many practical workloads (especially RL) under the right setup.

- Portable artifacts. Download trained adapter weights for use outside Tinker (e.g., with your preferred inference stack/provider).
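For readers new to LoRA, the following sketch shows the general technique the LoRA bullet refers to: the base weight matrix W stays frozen and only two small matrices A and B are trained, so the effective weight is W + (alpha / r) · B · A. This is the standard formulation, not a description of Tinker's internals; the function names, the 4096 hidden size, and the rank of 32 are illustrative assumptions.

```python
def lora_param_counts(d_in, d_out, rank):
    """Return (full, lora) trainable parameter counts for one weight matrix."""
    full = d_in * d_out              # every entry of W is trainable
    lora = rank * (d_in + d_out)     # A is rank x d_in, B is d_out x rank
    return full, lora


def lora_forward(x, W, A, B, alpha):
    """Compute (W + (alpha / r) * B @ A) @ x using nested lists."""
    rank = len(A)
    scale = alpha / rank
    Ax = [sum(a * xi for a, xi in zip(row, x)) for row in A]      # down-project
    base = [sum(w * xi for w, xi in zip(row, x)) for row in W]    # frozen path
    delta = [sum(b * v for b, v in zip(row, Ax)) for row in B]    # up-project
    return [h + scale * d for h, d in zip(base, delta)]


# Illustrative sizes only: one 4096 x 4096 projection with a rank-32 adapter.
full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=32)
print(f"full: {full:,} params, LoRA: {lora:,} params, ratio: {lora / full:.4f}")

# With B initialised to zeros the adapter is a no-op, so training starts
# from exactly the base model's behaviour.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]
B_zero = [[0.0], [0.0]]
print(lora_forward([3.0, 4.0], W, A, B_zero, alpha=2.0))
```

Because only A and B change during training, they are also the only tensors that need to be exported, which is what makes downloadable adapter weights a small and portable artifact.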

What runs on it?

The Thinking Machines team positions Tinker as a managed post-training platform for open-weights models, from small LLMs to large mixture-of-experts systems; a good example is Qwen-235B-A22B as a supported model. Switching models is intentionally minimal: change a string identifier and rerun. Under the hood, runs are scheduled on Thinking Machines’ internal clusters; the LoRA approach enables shared compute pools and lower utilization overhead.


Tinker Cookbook: Reference Training Loops and Post-Training Recipes

To reduce boilerplate while keeping the core API lean, the team published the Tinker Cookbook (Apache-2.0). It contains ready-to-use reference loops for supervised learning and reinforcement learning, plus worked examples for RLHF (three-stage SFT → reward modeling → policy RL), math rewards, tool-use/retrieval tasks, prompt distillation, and multi-agent setups. The repository also ships utilities for LoRA hyperparameter calculation and integrations for evaluation (e.g., InspectAI).
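As a rough idea of what a “math reward” can look like in an RL loop, the sketch below scores a completion 1.0 when the last number it contains equals the reference answer and 0.0 otherwise. It is a generic, hypothetical example and is not taken from the published repository: extract_final_number and math_reward are names invented here, and real recipes may parse answers quite differently.

```python
import re
from fractions import Fraction


def extract_final_number(text):
    """Return the last integer or decimal in the text as a Fraction, or None."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return Fraction(matches[-1]) if matches else None


def math_reward(completion, reference):
    """Binary reward: 1.0 if the final number matches the reference, else 0.0."""
    predicted = extract_final_number(completion)
    if predicted is None:
        return 0.0
    # Fractions compare exactly, so "0.50" and "1/2" count as the same answer.
    return 1.0 if predicted == Fraction(str(reference)) else 0.0


print(math_reward("2 + 2 = 4, so the answer is 4.", 4))
print(math_reward("I believe the result is 5", 4))
print(math_reward("x = 0.50", "1/2"))
```

A reward like this is cheap to verify and needs no learned reward model, which is why verifiable-answer tasks are a common first target for RL fine-tuning.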

Who is already using it?

Early users include groups at Princeton (Gödel prover team), Stanford (Rotskoff Chemistry), UC Berkeley (SkyRL, asynchronous off-policy multi-agent/tool-use RL), and Redwood Research (RL on Qwen3-32B for control tasks).

Tinker is in private beta for now, with sign-up through a waitlist. The service is free to start, with usage-based pricing planned shortly; organizations are asked to contact the team directly for onboarding.

I like that Tinker exposes low-level primitives (forward_backward, optim_step, save_state, sample) rather than a monolithic train(): it keeps objective design, reward shaping, and evaluation under my control while offloading multi-node orchestration to their managed clusters. The LoRA-first posture is pragmatic for cost and turnaround, and their own analysis argues that LoRA can match full fine-tuning when configured correctly, but I would still want transparent logs, deterministic seeds, and per-step telemetry to verify reproducibility and drift. The RLHF and SL reference loops in the recipes are useful starting points, but I will judge the platform on throughput stability, checkpoint portability, and guardrails for data governance (PII handling, audit trails) under real workloads.

Overall, I prefer Tinker’s open and flexible API: it lets me customize open-weights LLMs through an explicit training loop while the service handles distributed execution. Compared with closed systems, this preserves algorithmic control (losses, RLHF workflows, data handling) and lowers the barrier for new practitioners to experiment and iterate.

Check out the technical details and sign up for the waitlist here. If you are a university or organization seeking large-scale access, please contact [email protected].


Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.
