StepFun AI releases Step-Audio-EditX: a new open source 3B LLM-level audio editing model, good at expressive and iterative audio editing

How can voice editing become as straightforward and controllable as simply rewriting a line of text? StepFun AI has open sourced Step-Audio-EditX, an audio model based on 3B parameter LLM that turns expressive voice editing into token-level text operations instead of waveform-level signal processing tasks.

Why developers care about controllable TTS?

Most zero-shot TTS systems replicate emotion, style, accent and timbre directly from short reference audio. They sound natural but have very little control. Style cues in the text are helpful only for in-domain speech, while cloned speech often ignores the requested emotion or speaking style.

Past work has attempted to disentangle factors through additional encoders, adversarial losses, or complex architectures. Step-Audio-EditX maintains a relatively entangled representation, but instead changes the data and post-training objectives. The model learns control by looking at many pairs and triples where the text is fixed but one attribute changes significantly.

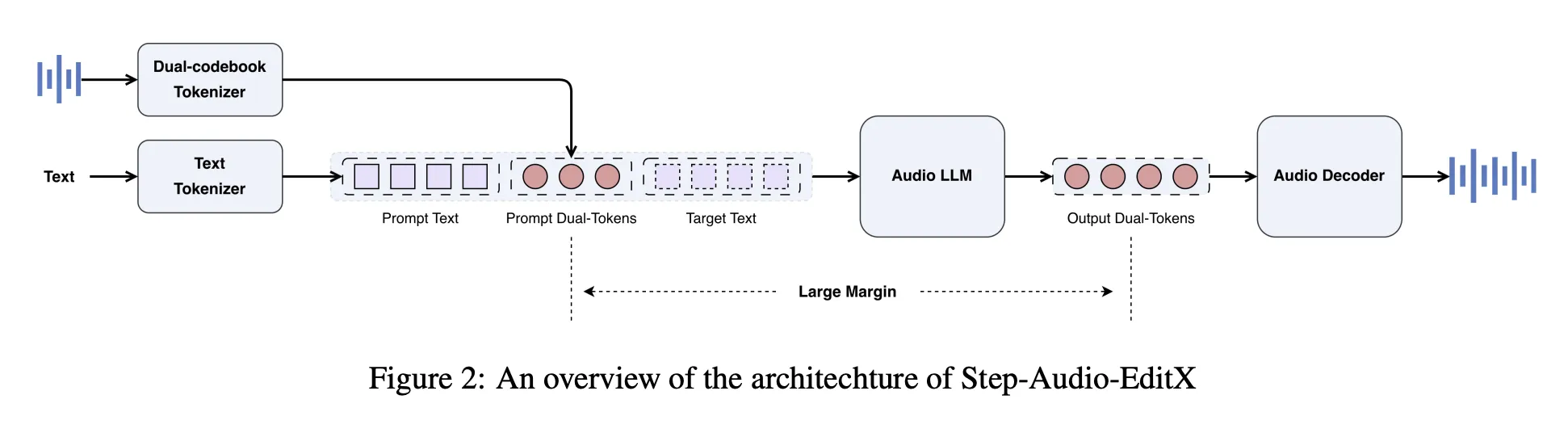

Architecture, dual codebook tokenizer plus compact audio LLM

Step-Audio-EditX reuses the Step-Audio dual codebook tokenizer. Speech is mapped to two token streams, a 16.7 Hz linguistic stream with a 1024-entry codebook and a 25 Hz semantic stream with a 4096-entry codebook. Tokens are interleaved in a ratio of 2 to 3. The tokenizer preserves prosodic and emotional information, so it’s not completely unraveled.

On top of this marker, the StepFun research team built a 3B parametric audio LLM. The model is initialized from text LLM and then trained on a mixed corpus with a 1:1 ratio of text-only and double-coded audio tokens in chat-style prompts. Audio LLM reads text tokens, audio tokens, or both, and always produces dual codebook audio tokens as output.

A separate audio decoder handles reconstruction. A diffusion transformer-based stream matching module predicts the mel spectrogram based on audio markers, reference audio, and speaker embeddings, and the BigVGANv2 vocoder converts the mel spectrogram into a waveform. The flow matching module has improved pronunciation and timbre similarity after approximately 200,000 hours of high-quality speech training.

Generate data with large margins instead of complex encoders

The key idea is large-scale learning. The model is post-trained on triples and quadruples, keeping the text fixed and changing only one attribute with significant gaps.

For zero-shot TTS, Step-Audio-EditX uses a high-quality in-house dataset, mainly Chinese and English, with a small amount of Cantonese and Sichuan, with approximately 60,000 speakers. The data cover a wide range of variation in style and emotion within and between speakers. (arXiv)

For emotion and speaking style editing, the team constructed synthetic large-margin triples (text, audio neutral, audio emotion, or style). Voice actors record approximately 10-second clips for each mood and style. StepTTS zero-shot cloning then generates neutral and emotional versions of the same text and speaker. An edge scoring model trained on a small set of human labels, scores pairs on a scale of 1 to 10, and only retains samples with a score of at least 6.

Paralinguistic editing covers breathing, laughter, filled pauses and other tags, using a semi-synthetic strategy on the NVSpeech dataset. The research team constructed quadruples where the target was the original NVSpeech audio and text transcript and the input was a cloned version with the tags removed from the text. This provides temporal editing supervision without the need for margin models.

Reinforcement learning data uses two preference sources. Human annotators rated the 20 candidates for each prompt on a 5-point scale for correctness, rhythmicity, and naturalness, and pairs with a margin greater than 3 were retained. The comprehension model rates emotion and speaking style on a scale of 1 to 10 and retains pairs with a margin greater than 8.

After training, SFT plus PPO on the token sequence

Later training is divided into two stages, Supervise fine-tuning followed by polyphenylene ether.

exist Supervise fine-tuningthe system prompts to define zero-shot TTS and editing tasks in a unified chat format. For TTS, the cue waveform is encoded as a dual-codebook token, converted to string form, and inserted into the system cue as speaker information. The user message is the target text and the model returns new audio tokens. For editing, user messages include original audio tokens plus natural language instructions, and the model outputs the edited tokens.

Reinforcement learning then refines instructions to follow. The 3B reward model is initialized from SFT checkpoints and trained using Bradley Terry loss on large profit preference pairs. Rewards are calculated directly on the sequence of dual-codebook tokens without decoding to a waveform. PPO training uses this reward model, a clipping threshold, and a KL penalty to balance quality and deviation from the SFT policy.

Steps – Audio – Editing – Testing, Iterative Editing and Outlining

To quantify control, the research team introduced a step audio editing test. It uses Gemini 2.5 Pro as LLM as a judge to assess emotion, speaking style and paralinguistic accuracy. The benchmark has 8 speakers from Wenet Speech4TTS, GLOBE V2 and Libri Light, 4 speakers per language.

The emotion set has 5 categories with 50 Chinese and 50 English prompts in each category. The speaking style set has 7 styles, each style has 50 prompts per language. The paralanguage set has 10 tags such as breathing, laughter, surprise, um, etc., and 50 prompts for each tag and language.

Edits are evaluated iteratively. Iteration 0 is the initial zero-shot clone. The model is then subjected to 3 rounds of editing with textual instructions. In Chinese, the sentiment accuracy increased from 57.0 at iteration 0 to 77.7 at iteration 3. Speaking style accuracy increased from 41.6 to 69.2. English showed similar behavior, and cue fixation ablation (where the same cue audio was used for all iterations) still improved accuracy, supporting the large margin learning hypothesis.

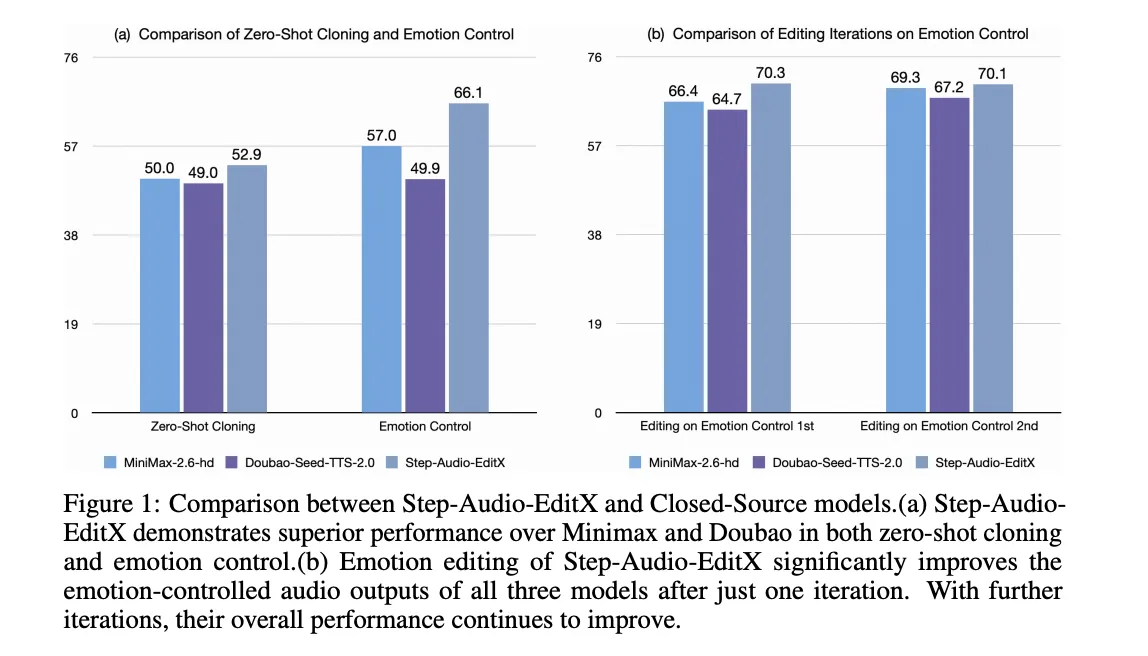

The same editing model is applied to four closed-source TTS systems, GPT 4o mini TTS, ElevenLabs v2, Doubao Seed TTS 2.0 and MiniMax Voice 2.6 HD. For all of these, one editing iteration with Step-Audio-EditX can improve emotional and stylistic accuracy, and further iterations will continue to help.

Associate language editors are rated on a scale of 1 to 3. The average scores for Chinese and English rose from 1.91 at iteration 0 to 2.89 after a single edit, which is comparable to local paralinguistic synthesis in robust commercial systems.

Main points

- Step Audio EditX uses a dual codebook marker and a 3B parametric audio LLM, so it can treat speech as discrete markers and edit audio in a text-like manner.

- The model relies on large amounts of synthetic data on emotion, speaking style, paralinguistic cues, velocity and noise, rather than adding additional disentangled encoders.

- Supervised fine-tuning coupled with PPO with a token-level reward model enables Audio LLM to follow natural language editing instructions for TTS and editing tasks.

- The Step Audio Edit Test benchmark test, with Gemini 2.5 Pro as the judge, showed that after three editing iterations, the accuracy of emotion, style and paralinguistic control in Chinese and English was significantly improved.

- Step Audio EditX can post-process and improve speech from closed-source TTS systems, and the complete stack, including code and checkpoints, is available as open source for developers.

Step Audio EditX is a precise step forward in controllable speech synthesis, as it retains the Step Audio tokenizer, adds a compact 3B audio LLM, and optimizes control with large headroom data and PPO. The introduction of the Step Audio Edit Test with Gemini 2.5 Pro as the judge makes the evaluation story more specific in terms of emotion, speaking style, paralanguage control, etc. The open version lowers the threshold for actual audio editing research. Overall, this version makes audio editing feel closer to text editing.

Check Papers, repurchase agreements and Model weight. Please feel free to check out our GitHub page for tutorials, code, and notebooks. In addition, welcome to follow us twitter And don’t forget to join our 100k+ ML SubReddit and subscribe our newsletter. wait! Are you using Telegram? Now you can also join us via telegram.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.