NVIDIA AI Releases GraspGen: A Diffusion-Based Grasp Generation Framework for Robotics

Robotic grasping is a cornerstone task for automation and manipulation, critical in domains ranging from industrial picking to service and humanoid robotics. Despite decades of research, achieving robust, general-purpose 6-degree-of-freedom (6-DOF) grasping remains a challenging open problem. Recently, NVIDIA unveiled GraspGen, a new diffusion-based grasp generation framework that promises state-of-the-art (SOTA) performance with unprecedented flexibility, scalability, and real-world reliability.

The Grasping Challenge and Motivation

Accurate and reliable grasp generation in 3D space, where each grasp pose must be expressed as a position and an orientation, requires algorithms that generalize to unknown objects, diverse gripper types, and challenging environmental conditions, including partial observations and clutter. Classical model-based grasp planners depend heavily on precise object pose estimation or multi-view scans, making them impractical in in-the-wild settings. Data-driven learning approaches show promise, but current methods tend to struggle with generalization and scalability, especially when moving to new grippers or cluttered real-world environments.

Another limitation of many existing grasping systems is their reliance on large amounts of costly real-world data collection or domain-specific tuning. Collecting and annotating real grasp datasets is expensive, and the data does not transfer easily between gripper types or levels of scene complexity.

Key Idea: Large-Scale Simulation and Diffusion-Model Grasp Generation

NVIDIA's GraspGen pivots away from expensive real-world data collection toward large-scale synthetic data generated in simulation. Specifically, it uses a large set of object meshes (more than 8,000) from the Objaverse dataset together with simulated gripper interactions (more than 53 million grasps).

GraspGen formulates grasp generation as a denoising diffusion probabilistic model (DDPM) operating in the SE(3) pose space (3D rotations and translations). Diffusion models, well established in image generation, iteratively refine random noise samples toward realistic grasp poses, conditioned on an object-centric point cloud representation. This generative modeling approach naturally captures the multi-modal distribution of valid grasps on complex objects, providing the spatial diversity that is critical for handling clutter and task constraints.
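The iterative denoising idea can be illustrated with a minimal NumPy sketch of standard DDPM ancestral sampling over a flat pose vector. This is not GraspGen's implementation: the 9-value pose layout, the linear noise schedule, and `toy_predict_noise` are all assumptions made for illustration. In particular, `toy_predict_noise` replaces the learned, point-cloud-conditioned network with the closed-form optimal noise prediction for a toy data distribution concentrated on a single target pose, so that the loop can be run and checked without any training.

```python
import numpy as np

POSE_DIM = 9  # assumed layout: 3 translation values + a 6D rotation encoding


def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.05):
    """Linear DDPM noise schedule: returns betas, alphas, cumulative alphas."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    return betas, alphas, np.cumprod(alphas)


def toy_predict_noise(x_t, t, target_pose, alpha_bars):
    """Hypothetical stand-in for the learned denoiser.

    If all training data sat at `target_pose`, the forward process would give
    x_t = sqrt(alpha_bar_t) * target_pose + sqrt(1 - alpha_bar_t) * eps,
    so the optimal noise estimate is obtained by solving for eps.
    """
    return (x_t - np.sqrt(alpha_bars[t]) * target_pose) / np.sqrt(1.0 - alpha_bars[t])


def sample_grasps(target_pose, num_samples, rng, num_steps=50):
    """DDPM ancestral sampling: start from Gaussian noise, denoise step by step."""
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal((num_samples, target_pose.shape[0]))
    for t in reversed(range(num_steps)):
        eps_hat = toy_predict_noise(x, t, target_pose, alpha_bars)
        # Posterior mean of x_{t-1} given x_t and the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        # No noise is added on the final step.
        noise = rng.standard_normal(x.shape) if t > 0 else np.zeros_like(x)
        x = mean + np.sqrt(betas[t]) * noise
    return x


rng = np.random.default_rng(0)
target_pose = rng.standard_normal(POSE_DIM)
samples = sample_grasps(target_pose, num_samples=4, rng=rng)
```

With this single-mode toy denoiser every sample ends at `target_pose`. A trained network conditioned on an object point cloud would instead steer different noise samples toward different valid grasps, which is where the multi-modal behavior described above comes from.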

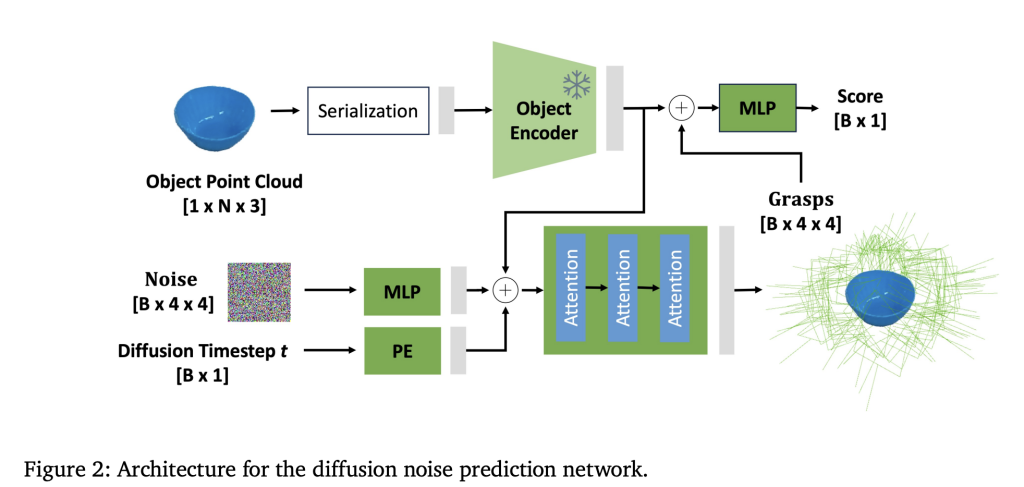

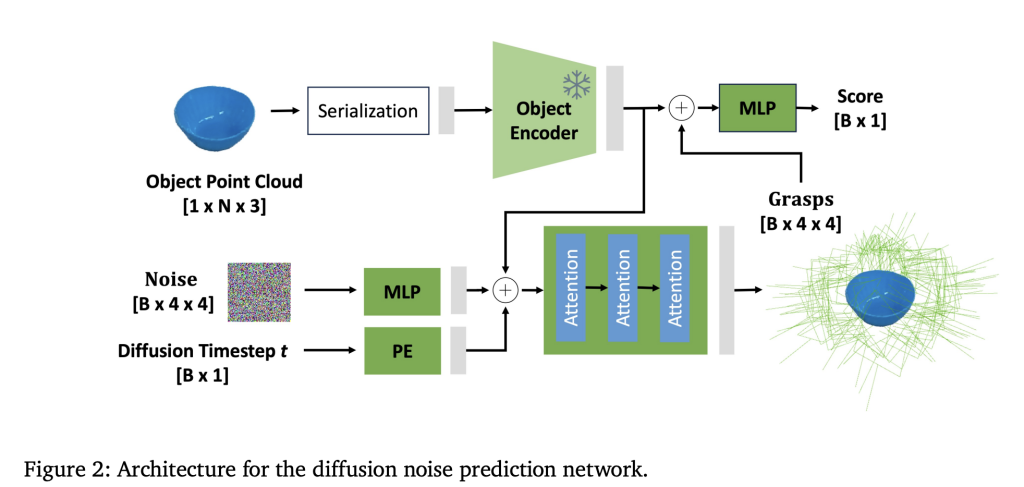

GraspGen Architecture: Diffusion Transformer and On-Generator Training

- Diffusion-Transformer Encoder: GraspGen adopts a novel architecture that uses a powerful PointTransformerV3 (PTv3) backbone to encode raw, unstructured 3D point cloud inputs into a latent representation, followed by iterative diffusion steps that predict noise residuals in the grasp pose space. This differs from prior work that relies on PointNet++ or contact-based grasp representations, and it improves both grasp quality and computational efficiency.

- On-Generator Training of the Discriminator: GraspGen innovates on how the grasp scorer, or discriminator, is trained. Rather than training on a static offline dataset of successful and failed grasps, the discriminator is trained on grasp poses produced by the diffusion generative model during training. These on-generator grasps expose the discriminator to the model's typical errors and biases, such as grasps slightly in collision with the object or outliers far from its surface, allowing it to better identify and filter false positives at inference time.

- Efficient Weight Sharing: The discriminator reuses the frozen object encoder from the diffusion generator, so only a lightweight multilayer perceptron (MLP) needs to be trained from scratch for grasp success classification. This yields a 21-fold reduction in memory consumption compared with prior discriminator architectures.

- Translation Normalization and Rotation Representation: To optimize network performance, the translation components of each grasp are normalized based on dataset statistics, and rotations are encoded via Lie algebra or 6D representations, ensuring stable and accurate pose prediction.
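To make the pose representation in the last bullet more concrete, here is a minimal NumPy sketch of one common way to do it. It assumes that translation normalization means subtracting a dataset mean and dividing by a dataset scale, and that the 6D rotation encoding is the widely used "first two columns of the rotation matrix" form, decoded with Gram-Schmidt orthogonalization. The function names are hypothetical and GraspGen's exact conventions may differ.

```python
import numpy as np


def normalize_translation(t, mean, std):
    """Standardize a translation with (assumed) per-axis dataset statistics."""
    return (t - mean) / std


def denormalize_translation(t_norm, mean, std):
    """Invert normalize_translation to recover metric units."""
    return t_norm * std + mean


def rotation_to_6d(R):
    """Encode a 3x3 rotation matrix as its first two columns (6 values)."""
    return np.concatenate([R[:, 0], R[:, 1]])


def rotation_from_6d(r6):
    """Decode 6 unconstrained values into a valid rotation via Gram-Schmidt."""
    a1, a2 = r6[:3], r6[3:]
    b1 = a1 / np.linalg.norm(a1)
    a2_orth = a2 - np.dot(b1, a2) * b1  # remove the component along b1
    b2 = a2_orth / np.linalg.norm(a2_orth)
    b3 = np.cross(b1, b2)               # completes a right-handed frame
    return np.stack([b1, b2, b3], axis=1)


# Any generic 6-vector (e.g. a raw network output) decodes to a proper rotation.
rng = np.random.default_rng(1)
R = rotation_from_6d(rng.standard_normal(6))
```

The appeal of this encoding is that the network can output six unconstrained numbers and the decoding step still returns an orthonormal matrix with determinant +1, so the diffusion process never has to stay on the rotation manifold explicitly.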

Multi-Embodiment Grasping and Environmental Flexibility

GraspGen is demonstrated on three gripper types:

- Parallel-jaw grippers (Franka Panda, Robotiq-2F-140)

- Suction grippers (modeled analytically)

- Multi-fingered grippers (planned as a future extension)

Crucially, the framework generalizes across:

- Partial and complete point clouds: It performs robustly on both single-view observations with occlusions and fused multi-view point clouds.

- Single objects and cluttered scenes: Evaluation on FetchBench, a challenging cluttered grasping benchmark, shows GraspGen achieving the highest task and grasp success rates.

- Sim-to-real transfer: Trained purely in simulation, GraspGen shows strong zero-shot transfer to real robot platforms under noisy visual inputs, aided by augmentations that simulate segmentation errors and sensor noise.
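The article does not spell out which augmentations are used, so the following is only a hedged sketch of what point cloud augmentation for sim-to-real transfer often looks like. It assumes three generic perturbations: Gaussian jitter for sensor noise, random point dropout for partial observations, and a few stray points near the object for segmentation errors. The function name and all parameter values are hypothetical.

```python
import numpy as np


def augment_point_cloud(points, rng, noise_std=0.002, drop_fraction=0.1,
                        outlier_fraction=0.02, outlier_margin=0.05):
    """Perturb a clean simulated object point cloud of shape (N, 3), in meters."""
    n = points.shape[0]

    # Randomly drop points to mimic occlusion and missing depth returns.
    keep = rng.random(n) >= drop_fraction
    kept = points[keep]

    # Add per-point Gaussian jitter to mimic depth sensor noise.
    kept = kept + rng.normal(0.0, noise_std, size=kept.shape)

    # Add stray points around the object to mimic an imperfect segmentation mask.
    n_outliers = int(outlier_fraction * n)
    lo = points.min(axis=0) - outlier_margin
    hi = points.max(axis=0) + outlier_margin
    outliers = rng.uniform(lo, hi, size=(n_outliers, 3))

    return np.concatenate([kept, outliers], axis=0)


rng = np.random.default_rng(2)
clean = rng.uniform(-0.05, 0.05, size=(1000, 3))  # stand-in for a simulated object
noisy = augment_point_cloud(clean, rng)
```

Applying perturbations like these during training means the network rarely sees a perfectly clean object, which is one plausible reason a simulation-only model can tolerate real depth data.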

Benchmarks and Performance

- FetchBench benchmark: GraspGen outperforms state-of-the-art baselines such as Contact-GraspNet and M2T2 by wide margins (task success improves by nearly 17% over Contact-GraspNet). Even an oracle planner with access to ground-truth grasps struggles to push task success beyond 49%, highlighting the difficulty of the benchmark.

- Precision-coverage gains: GraspGen substantially improves grasp success precision and spatial coverage compared with prior diffusion and contact-point models, indicating higher diversity and quality of grasp proposals.

- Real robot experiments: Using a UR10 robot with RealSense depth sensing, GraspGen achieved an 81.3% overall grasp success rate across varied real-world settings (including clutter, baskets, and shelves), outperforming M2T2 by 28%. It generates focused grasps only on the target object, avoiding the spurious grasps seen in scene-centric models.

Dataset Release and Open Source

NVIDIA has publicly released the GraspGen dataset to facilitate community progress. It consists of approximately 53 million simulated grasps across 8,515 object meshes licensed under permissive Creative Commons policies. The dataset was generated using NVIDIA Isaac Sim and includes detailed physics-based grasp success labels, including shaking tests for stability.

In addition to the dataset, the GraspGen codebase and pretrained models are available under open-source licenses, along with other project materials.

Conclusion

GraspGen represents a significant advance in 6-DOF robotic grasping, introducing a diffusion-based generative framework that outperforms prior methods while scaling across multiple grippers, scene complexities, and observability conditions. Its novel on-generator training recipe for grasp scoring decisively improves the filtering of model errors, leading to substantial gains in grasp success and task-level performance both in simulation and on real robots.

By publicly releasing both the code and a large synthetic grasp dataset, NVIDIA empowers the robotics community to further develop and apply these innovations. The GraspGen framework consolidates simulation, learning, and modular robotics components into a turnkey solution, advancing the vision of reliable, real-world robotic grasping as a broadly applicable foundational building block for general-purpose robotic manipulation.

Check out the paper, project, and GitHub page. All credit for this research goes to the researchers of this project.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.