Mobilellm-R1 released by Meta AI: an edge inference model with less than 1B parameters and achieves 2x-5X performance improvements on other fully open source AI models

Meta has been released Mobilellm-R1a lightweight edge reasoning model is now available on the hug face. The version includes models ranging from 140 million to 950m parameters, focusing on effective math, coding, and scientific reasoning for multi-billion dollar scales.

Unlike the universal chat model, Mobilellm-R1 is designed for edge deployment and is designed to provide state-of-the-art inference accuracy while maintaining computational efficiency.

What building capabilities are Mobilellm-R1?

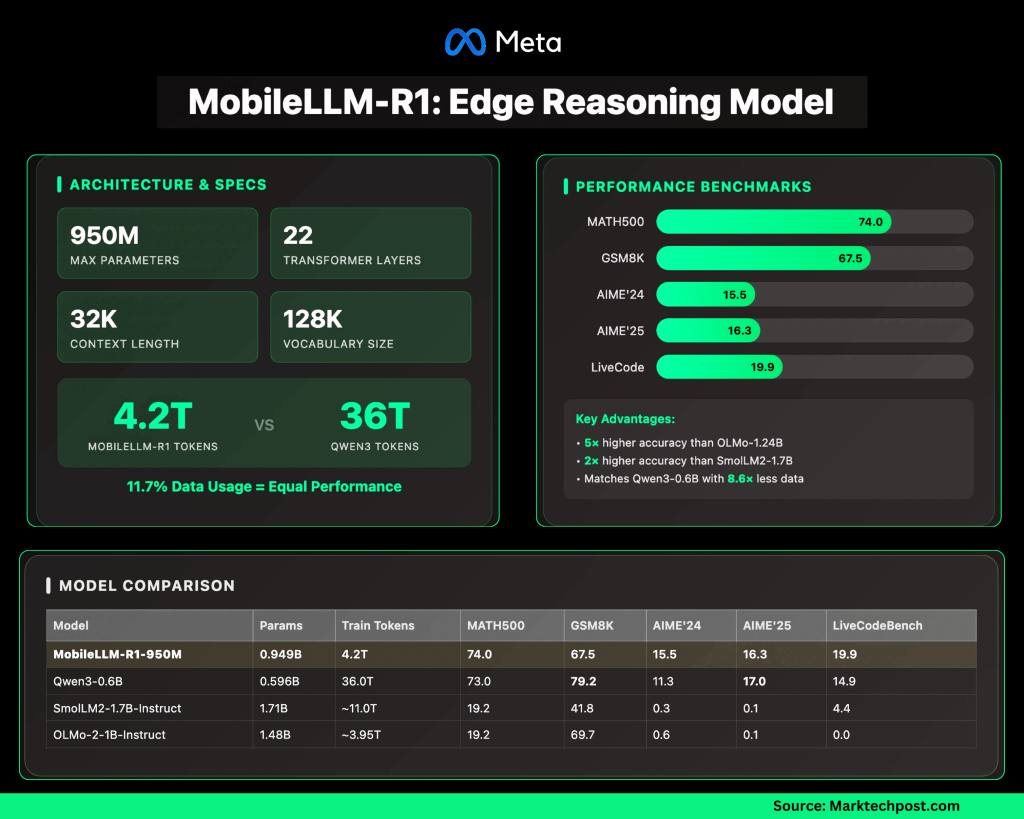

The largest model, MOBILELLM-R1-950Mintegrates several architectural optimizations:

- 22 transformer layers There are 24 attention heads and 6 grouped KV heads.

- Embed dimension: 1536; Hide dimension: 6144.

- Grouping Questions (GQA) Reduce computation and memory.

- Block weight sharing Cut parameter count without serious delay penalty.

- Swiglu Activation Improve small model representation.

- Context length: 4K is used for the basics, 32K is used for the trained model.

- 128K Vocabulary Have shared input/output embedding.

The focus is to reduce compute and memory requirements to make it suitable for deployment on constrained devices.

How efficient is the training?

The data efficiency of Mobilellm-R1 is worth noting:

- Trained ~4.2T token In total.

- By comparison, Qwen3’s 0.6B Models received training 36t token.

- This means that Mobilellm-R1 only uses ≈11.7% Data that achieves or exceeds QWEN3 accuracy.

- Post-training training applies supervised fine-tuning of mathematical, coding and inference data sets.

This efficiency is directly translated into lower training costs and resource requirements.

How does it work on other open models?

On the benchmark, Mobilellm-R1-950M showed significant growth:

- Math (Math500 dataset): ~5×higher accuracy Compare Olmo-1.24b and~2×higher accuracy Compare Smollm2-1.7b.

- Inference and encoding (GSM8K, AIME, livecodebench): Match or surpass QWEN3-0.6Balthough fewer tokens are used.

This model provides results that are usually associated with larger architectures while maintaining a smaller footprint.

Where is the Mobilellm-R1 insufficient?

The focus of this model creates limitations:

- strong Mathematics, codes and structured reasoning.

- weak General dialogue, common sense and creative tasks Compared to larger LLM.

- Distributed in Fair NC (Non-Commercial) Licensewhich limits usage in production environments.

- Longer context (32k) improves KV-CACHE and memory requirements infer.

How does Mobilellm-R1 compare to Qwen3, Smollm2 and Olmo?

Performance snapshot (after training model):

| Model | parameter | Train Token

Key observations:

SummaryMeta’s MobilellM-R1 highlights the trend towards smaller, domain-optimized models that provide competitive inference without a large-scale training budget. By obtaining 2×–5× performance on larger open models while training a small portion of the data, it shows that efficiency (not just extensions) will define the next stage of LLM deployment, especially for mathematical, coding and scientific use cases on edge devices. Check Model embracing face. Check out ours anytime Tutorials, codes and notebooks for github pages. Also, please stay tuned for us twitter And don’t forget to join us 100K+ ml reddit And subscribe Our newsletter.

Asif Razzaq is CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, ASIF is committed to harnessing the potential of artificial intelligence to achieve social benefits. His recent effort is to launch Marktechpost, an artificial intelligence media platform that has an in-depth coverage of machine learning and deep learning news that can sound both technically, both through technical voices and be understood by a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience. You may also like... |

|---|