Microsoft AI Proposes BitNet Distillation (BitDistill): A Lightweight Pipeline That Delivers up to 10x Memory Savings and about 2.65x CPU Speedup

Microsoft Research proposes BitNet Distillation, a pipeline that converts existing full-precision LLMs into 1.58-bit BitNet students for specific tasks while keeping accuracy close to the FP16 teacher and improving CPU efficiency. The method combines SubLN-based architectural refinement, continued pre-training, and dual-signal distillation from logits and multi-head attention relations. Reported results show up to 10x memory savings and about 2.65x faster CPU inference, with task metrics comparable to FP16 across model sizes.

What’s happening with BitNet Distillation?

The community has already shown that BitNet b1.58 can match full-precision quality when trained from scratch, but converting a pre-trained FP16 model directly to 1.58 bits typically loses accuracy, and the gap widens as model size increases. BitNet Distillation targets this conversion problem for practical downstream deployment. It is designed to preserve accuracy while delivering CPU-friendly ternary weights with INT8 activations.
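
To make "ternary weights with INT8 activations" concrete, here is a minimal NumPy sketch. It assumes the absmean weight quantizer and per-token absmax activation quantizer commonly described for BitNet b1.58; the article does not spell these out, so treat the function names, the `eps` value, and the per-tensor scale as illustrative choices rather than the paper's exact recipe. Three possible values carry log2(3) ≈ 1.58 bits, which is where the "1.58-bit" name comes from.

```python
import numpy as np

def quantize_weights_ternary(w, eps=1e-5):
    """Absmean quantization (assumed): values in {-1, 0, 1} plus one scale."""
    scale = np.mean(np.abs(w)) + eps            # per-tensor scale
    w_q = np.clip(np.round(w / scale), -1, 1)   # round, then clip to ternary
    return w_q.astype(np.int8), scale

def quantize_activations_int8(x, eps=1e-5):
    """Per-token absmax quantization (assumed) to the signed 8-bit range."""
    scale = np.max(np.abs(x), axis=-1, keepdims=True) + eps
    x_q = np.clip(np.round(x / scale * 127), -128, 127)
    return x_q.astype(np.int8), scale / 127.0

def bitlinear_forward(x, w):
    """Quantized matmul: accumulate in integers, then rescale to float."""
    x_q, x_scale = quantize_activations_int8(x)
    w_q, w_scale = quantize_weights_ternary(w)
    acc = x_q.astype(np.int32) @ w_q.astype(np.int32).T
    return acc * x_scale * w_scale

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 64)).astype(np.float32)    # 4 tokens, hidden size 64
w = rng.normal(size=(32, 64)).astype(np.float32)   # 32 output features
y_q = bitlinear_forward(x, w)                      # quantized output
y_fp = x @ w.T                                     # full-precision reference
print(np.corrcoef(y_q.ravel(), y_fp.ravel())[0, 1])
```

Because the weights are only -1, 0, or 1, the inner product reduces to additions and subtractions of INT8 values, which is what makes this format attractive on CPUs.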

Stage 1: Modeling refinement with SubLN

Low-bit models suffer from large activation variance. The research team inserts SubLN normalization inside each Transformer block, specifically before the output projection of the MHSA module and before the output projection of the FFN. This stabilizes the scale of the hidden states flowing into the quantized projections, which improves optimization and convergence once the weights are ternary. The training loss curves in the analysis section support this design.
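
The placement described above can be sketched in a few lines of NumPy. This is a simplified illustration, not the paper's implementation: the normalization has no learned gain or bias, the FFN uses ReLU, the attention has no causal mask, and all function names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize over the last axis (no learned gain or bias in this sketch)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def ffn_with_subln(x, w_up, w_down):
    """Feed-forward block with an extra norm before the output projection."""
    h = np.maximum(x @ w_up, 0.0)   # up projection + ReLU (activation is an assumption)
    h = layer_norm(h)               # SubLN: fix the scale the quantized projection sees
    return h @ w_down               # output projection (ternary in the student)

def attention_with_subln(x, w_q, w_k, w_v, w_o, n_heads):
    """Multi-head self-attention with an extra norm before the output projection."""
    t, d = x.shape
    d_h = d // n_heads

    def split(a):                   # (tokens, d) -> (heads, tokens, d_h)
        return a.reshape(t, n_heads, d_h).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_h)
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    probs = np.exp(scores)
    probs = probs / probs.sum(axis=-1, keepdims=True)
    ctx = (probs @ v).transpose(1, 0, 2).reshape(t, d)     # merge heads
    ctx = layer_norm(ctx)           # SubLN before the output projection
    return ctx @ w_o
```

In this sketch, scaling the FFN input by a constant leaves the FFN output essentially unchanged, because the extra norm removes the scale before the final projection. That is the stabilizing effect the stage relies on.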

Stage 2: Continued pre-training to adapt weight distributions

Directly fine-tuning a 1.58-bit student on a task is not enough: the limited amount of task data cannot reshape the FP16 weight distribution to fit the ternary constraint. BitNet Distillation therefore runs a short continued pre-training phase on a general corpus; the research team uses 10B tokens from the FALCON corpus to push the weights toward a BitNet-like distribution. The visualization shows the mass concentrating near the transition boundaries, so small gradients can flip weights among [-1, 0, 1] during downstream task training. This improves learning capacity without a full pre-training run.
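
A toy experiment can illustrate why mass near the transition boundaries matters. The sketch below assumes an absmean ternary quantizer, for which a weight changes its ternary value when its magnitude crosses half the scale. Both weight distributions are synthetic and chosen only for illustration; they are not taken from the paper.

```python
import numpy as np

def ternary(w, eps=1e-5):
    """Absmean ternary quantizer (assumed); returns values and the scale."""
    scale = np.mean(np.abs(w)) + eps
    return np.clip(np.round(w / scale), -1, 1), scale

def fraction_near_boundary(w, margin=0.05):
    """Share of weights whose normalized magnitude is within `margin` of 0.5."""
    _, scale = ternary(w)
    return float(np.mean(np.abs(np.abs(w) / scale - 0.5) < margin))

def flip_rate(w, update):
    """Share of ternary values that change after adding `update`."""
    before, _ = ternary(w)
    after, _ = ternary(w + update)
    return float(np.mean(before != after))

rng = np.random.default_rng(0)
update = rng.normal(0.0, 0.01, size=10_000)     # one small "gradient step"

# Synthetic case A: plain Gaussian weights.
w_gauss = rng.normal(0.0, 1.0, size=10_000)

# Synthetic case B: most magnitudes sit near 0.5, a few are large, so the
# mean magnitude is about 1.0 and the 0 <-> +/-1 boundary lands near 0.5.
signs = rng.choice([-1.0, 1.0], size=10_000)
mags = np.concatenate([0.5 + rng.normal(0.0, 0.02, 8_000), np.full(2_000, 3.0)])
w_edge = signs * mags

for name, w in [("gaussian", w_gauss), ("boundary-heavy", w_edge)]:
    print(name, fraction_near_boundary(w), flip_rate(w, update))
```

With the same small update, the boundary-heavy weights change their ternary values far more often, which is one way to read the claim that continued pre-training makes the student easier to adapt downstream.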

Stage 3: Distillation-based fine-tuning using two signals

The student learns from the FP16 teacher through logits distillation and multi-head self-attention relation distillation. The logits path uses a temperature-softened KL divergence between the teacher and student token distributions. The attention path follows the MiniLM and MiniLMv2 formulation, which transfers relations among Q, K, and V without requiring the same number of heads and lets you choose a single layer to distill. Ablations show that combining both signals works best and that selecting one well-chosen layer preserves flexibility.
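
The two distillation signals can be sketched as follows. This is a minimal NumPy version under stated assumptions: the relation loss follows the MiniLMv2 idea of comparing Q-Q, K-K, and V-V self-relations after regrouping into a shared number of relation heads, and the temperature `tau`, the head count `n_rel_heads`, and the loss weights `lam` and `gam` are hypothetical names and values, not settings reported in the article.

```python
import numpy as np

def log_softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def kl_div(log_p, log_q):
    """KL(p || q) over the last axis, averaged over all leading axes."""
    return float(np.mean(np.sum(np.exp(log_p) * (log_p - log_q), axis=-1)))

def logits_distill_loss(teacher_logits, student_logits, tau=2.0):
    """Temperature-softened KL between teacher and student token distributions."""
    return kl_div(log_softmax(teacher_logits / tau),
                  log_softmax(student_logits / tau))

def relation(a, n_rel_heads):
    """Self-relation log-probs: (tokens, hidden) -> (rel_heads, tokens, tokens)."""
    t, d = a.shape
    d_r = d // n_rel_heads
    a = a.reshape(t, n_rel_heads, d_r).transpose(1, 0, 2)
    return log_softmax(a @ a.transpose(0, 2, 1) / np.sqrt(d_r))

def attention_relation_loss(teacher_qkv, student_qkv, n_rel_heads):
    """Sum of KLs over the Q-Q, K-K and V-V relations of one chosen layer."""
    return sum(
        kl_div(relation(t, n_rel_heads), relation(s, n_rel_heads))
        for t, s in zip(teacher_qkv, student_qkv)
    )

def total_loss(task_loss, logits_loss, relation_loss, lam=1.0, gam=1.0):
    """Weighted sum of the task loss and both distillation terms."""
    return task_loss + lam * logits_loss + gam * relation_loss
```

The relation matrices have shape (relation heads, tokens, tokens) regardless of hidden size, so the teacher and student only need hidden sizes divisible by `n_rel_heads`. That is why matching attention head counts are not required.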

Understanding the results

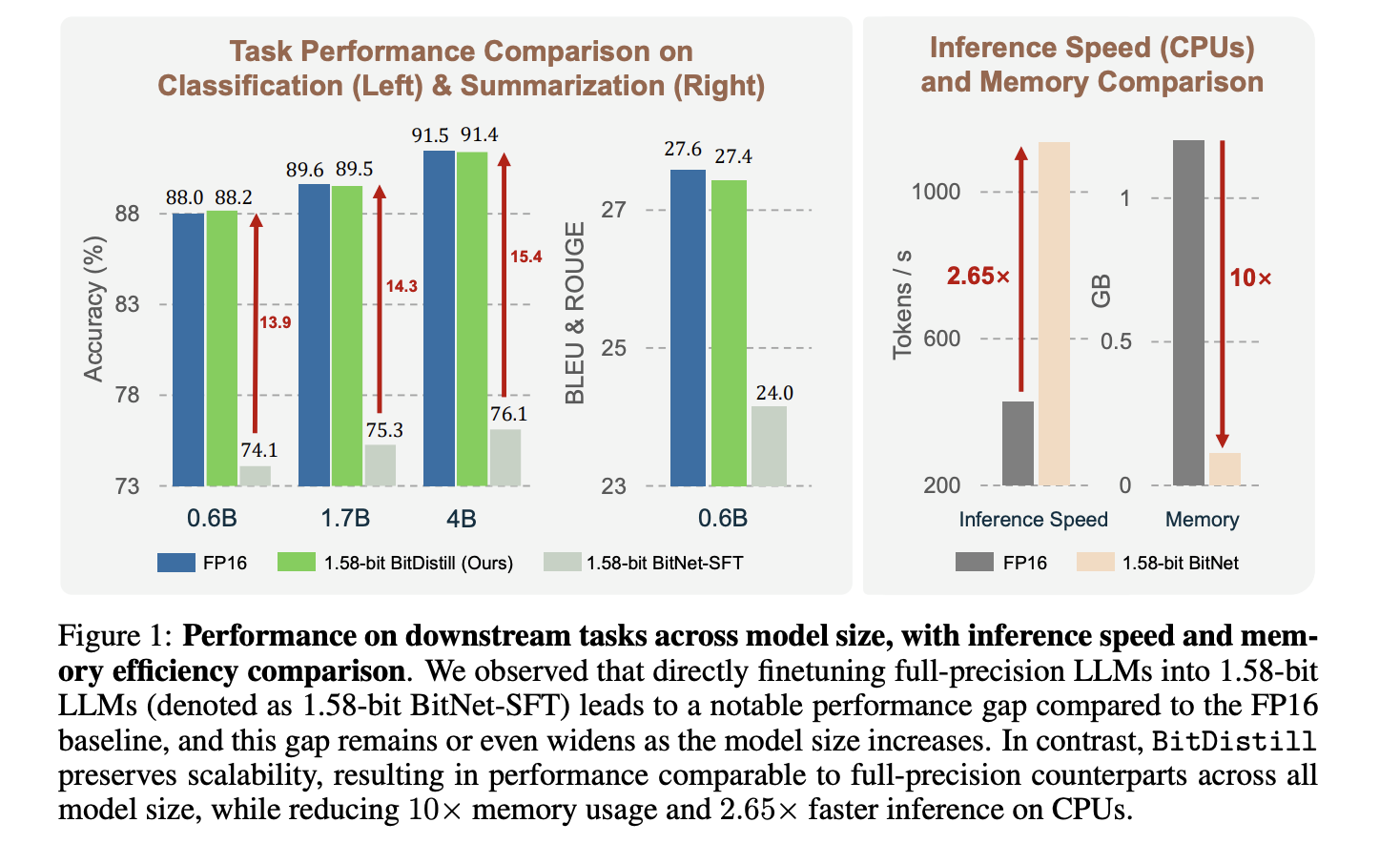

The research team evaluates classification on MNLI, QNLI, and SST-2, and summarization on the CNN/DailyMail dataset. It compares three settings: FP16 task fine-tuning, direct 1.58-bit task fine-tuning, and BitNet Distillation. Figure 1 shows that BitNet Distillation matches FP16 accuracy for Qwen3 backbones at 0.6B, 1.7B, and 4B, while the direct 1.58-bit baseline falls further behind as model size grows. On CPU, tokens per second improve by about 2.65x and memory drops by about 10x for the student. The research team quantizes activations to INT8 and uses the straight-through estimator (STE) to pass gradients through the quantizer.
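
Rounding has zero gradient almost everywhere, so training through a quantizer needs a workaround. The usual one is a straight-through estimator: quantize in the forward pass, then pretend the quantizer was the identity in the backward pass. The sketch below shows one manual SGD step on a toy linear regression; the absmean quantizer, the squared-error loss, and the function names are assumptions for illustration only.

```python
import numpy as np

def ternarize(w, eps=1e-5):
    """Absmean ternary quantizer (assumed), returned in the original scale."""
    scale = np.mean(np.abs(w)) + eps
    return np.clip(np.round(w / scale), -1, 1) * scale

def ste_step(w_latent, x, y, lr=0.01):
    """One SGD step on mean-squared error with a straight-through estimator."""
    w_q = ternarize(w_latent)                 # forward pass uses ternary weights
    err = x @ w_q - y
    grad_w_q = 2.0 * x.T @ err / len(x)       # gradient w.r.t. the quantized weights
    # STE: treat d(w_q)/d(w_latent) as the identity, so the same gradient
    # updates the full-precision latent weights.
    return w_latent - lr * grad_w_q

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8))
y = rng.normal(size=16)
w = rng.normal(size=8)
w_next = ste_step(w, x, y, lr=0.1)
```

The latent weights stay in full precision during training and accumulate small updates; only their quantized view is used in the forward pass and at deployment.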

The framework is compatible with post-training quantization methods such as GPTQ and AWQ, which provide additional gains on top of the pipeline. Distilling from a stronger teacher helps more, which suggests pairing small 1.58-bit students with larger FP16 teachers when they are available.

Main points

- BitNet Distillation is a 3-stage pipeline: SubLN insertion, continued pre-training, and dual distillation from logits and multi-head attention relations.

- The research reports near-FP16 accuracy for 1.58-bit students, with about 10x lower memory and about 2.65x faster CPU inference.

- The method transfers attention relations using MiniLM and MiniLMv2 style objectives, which do not require matching head counts.

- The evaluation covers MNLI, QNLI, SST-2, and CNN/DailyMail, and includes Qwen3 backbones at 0.6B, 1.7B, and 4B parameters.

- Deployment targets ternary weights with INT8 activations, with optimized CPU and GPU kernels available in the official BitNet repository.

BitNet Distillation is a pragmatic step toward 1.58-bit deployment without full retraining. Its three-stage design (SubLN, continued pre-training, and MiniLM-family attention distillation) maps cleanly onto known failure modes of extreme quantization. The reported 10x memory reduction and roughly 2.65x CPU speedup at near-FP16 accuracy show solid engineering value for on-premise and edge targets. The reliance on attention relation distillation is well grounded in prior MiniLM work, which helps explain the stability of the results. The availability of bitnet.cpp with optimized CPU and GPU kernels lowers integration risk for production teams.

Check out the technical paper and the GitHub repository.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.
