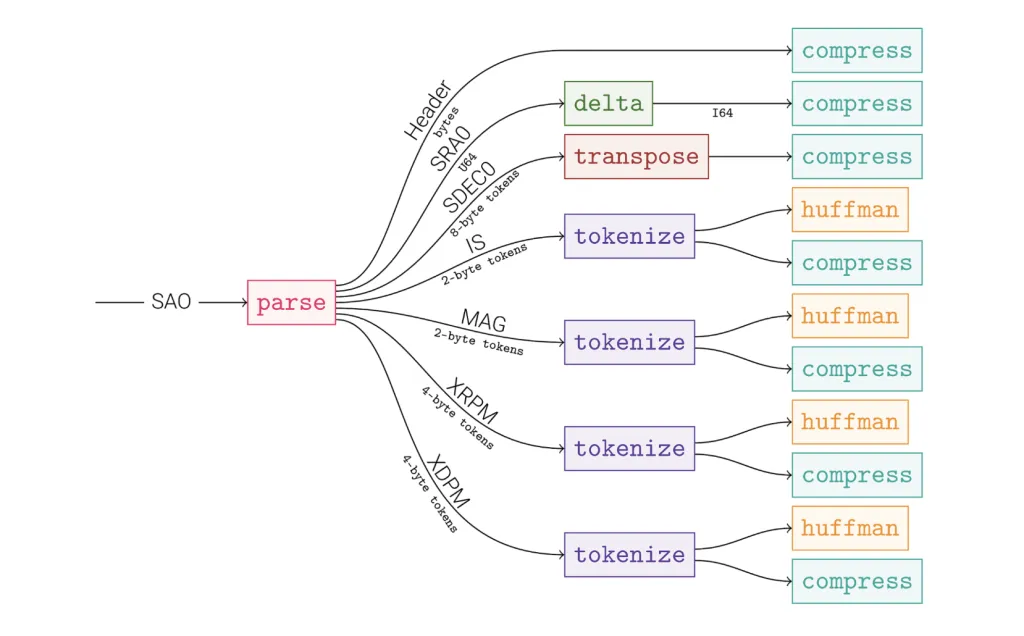

How much compression ratio and throughput can you recover by training a format-aware compression graph and shipping only self-describing graphs to a general-purpose decoder? Meta AI has released OpenZL, an open-source framework that builds specialized, format-aware compressors from high-level data descriptions and emits a self-describing wire format that a single general-purpose decoder can read. This decouples compressor development from reader rollout. The approach is based on a graph model of compression, which represents a pipeline as a directed acyclic graph (DAG) of modular codecs.

So, what's new?

OpenZL formalizes compression as a computational graph: nodes are codecs or subgraphs, edges are typed message streams, and the finalized graph is serialized together with the payload. Any frame produced by any OpenZL compressor can be decompressed by the general-purpose decoder, because the graph specification travels with the data. The design aims to combine the ratio and throughput advantages of domain-specific codecs with the operational simplicity of a single, stable decoder binary.

How does it work?

- Describe the data → build the graph. The developer supplies a data description; OpenZL composes parse, group, transform, and entropy stages into a DAG tailored to that structure. The output is a self-describing frame: compressed bytes plus the graph specification.

- General-purpose decode path. Decoding follows the embedded graph, so no new readers need to be shipped as the compressor evolves.
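The stages above can be sketched in plain Python under clearly stated assumptions. `RECORD` is a hypothetical stand-in for a data description (it is not SDDL syntax). `TRANSFORMS` is a hand-picked per-field transform, not anything OpenZL's trainer would necessarily select. zlib stands in for OpenZL's entropy back ends. The sketch splits fixed-size records into one typed stream per field, delta-encodes the timestamp stream, and compresses each stream independently.

```python
import struct
import zlib
from itertools import accumulate

# Hypothetical data description: (field name, struct code) per record field.
RECORD = [("timestamp", "q"), ("sensor_id", "H"), ("reading", "i")]
# Hypothetical per-field transform choice; delta suits monotonic timestamps.
TRANSFORMS = {"timestamp": "delta"}
FMT = "<" + "".join(code for _, code in RECORD)  # little-endian, no padding

def compress_records(data: bytes) -> dict[str, bytes]:
    """Parse -> group -> transform -> entropy-code, one stream per field."""
    rows = list(struct.iter_unpack(FMT, data))  # parse stage
    out = {}
    for i, (name, code) in enumerate(RECORD):
        column = [row[i] for row in rows]  # group stage: one typed stream
        if TRANSFORMS.get(name) == "delta":  # transform stage
            column = [b - a for a, b in zip([0] + column, column)]
        packed = struct.pack(f"<{len(column)}{code}", *column)
        out[name] = zlib.compress(packed)  # entropy stage (zlib stand-in)
    return out

def decompress_records(streams: dict[str, bytes]) -> bytes:
    """Invert each stage per stream, then re-interleave the records."""
    columns = []
    for name, code in RECORD:
        raw = zlib.decompress(streams[name])
        n = len(raw) // struct.calcsize("<" + code)
        column = list(struct.unpack(f"<{n}{code}", raw))
        if TRANSFORMS.get(name) == "delta":
            column = list(accumulate(column))  # prefix sum undoes the delta
        columns.append(column)
    return b"".join(struct.pack(FMT, *row) for row in zip(*columns))

# Usage with synthetic records (values are arbitrary, for round-tripping only).
records = b"".join(
    struct.pack(FMT, 1_700_000_000 + t, t % 4, t * 3) for t in range(1000)
)
assert decompress_records(compress_records(records)) == records
```

Grouping like-typed values lets each stream receive a transform suited to its statistics, which is the intuition behind format-aware compression. The sketch omits what makes OpenZL frames self-describing: here the decoder must share `RECORD` and `TRANSFORMS` with the encoder, whereas OpenZL embeds the graph in the frame.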

Tools and APIs

- SDDL (Simple Data Description Language): built-in components and APIs that decompose inputs into typed streams according to a pre-compiled data description; available from C and from Python as `openzl.ext.graphs.SDDL`.

- Language bindings: the core library and bindings are open source; the repository documents C/C++ and Python usage, and the ecosystem has added community bindings (for example, Rust `openzl-sys`).

How does it perform?

The research team reports that OpenZL achieves superior compression ratios and speeds compared with state-of-the-art general-purpose codecs across a variety of real-world datasets. It also reports consistent size and/or speed improvements, along with shorter compressor development times, in internal deployments at Meta. The published materials do not assign a single headline numeric factor; results are presented as Pareto improvements that depend on the data and pipeline configuration.
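Because the results are framed as Pareto improvements rather than as a single factor, it helps to pin down the term: one configuration dominates another if it is at least as good on both compression ratio and speed and strictly better on at least one. The hypothetical helpers below (`Point`, `dominates`, `pareto_front`) are a generic sketch of that definition; they contain no OpenZL or Meta measurements.

```python
from typing import NamedTuple

class Point(NamedTuple):
    name: str
    ratio: float  # compression ratio (original size / compressed size); higher is better
    speed: float  # throughput, e.g. MB/s; higher is better

def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b on both axes and strictly better on one."""
    return (
        a.ratio >= b.ratio
        and a.speed >= b.speed
        and (a.ratio > b.ratio or a.speed > b.speed)
    )

def pareto_front(points: list[Point]) -> list[Point]:
    """Keep only the configurations that no other configuration dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]
```

Feeding `pareto_front` measured (ratio, speed) pairs for several codec settings on the same dataset shows which settings sit on the frontier. A "Pareto gain" in this sense means a new configuration that pushes the frontier outward, which can happen without a single uniform speedup or size reduction.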

OpenZL makes format-aware compression operationally practical: compressors are expressed as DAGs of modular codecs, each frame carries its own self-describing graph, and a single general-purpose decoder reads every frame, which removes the need to roll out new readers. Meta reports Pareto gains relative to zstd and xz on real-world datasets.

Check out the Paper, GitHub Page and Technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly views, illustrating its popularity among readers.
