Meta AI introduces DeepConf: the first AI method that can achieve 99.9% using GPT-OSS-1220B on Aime 2025

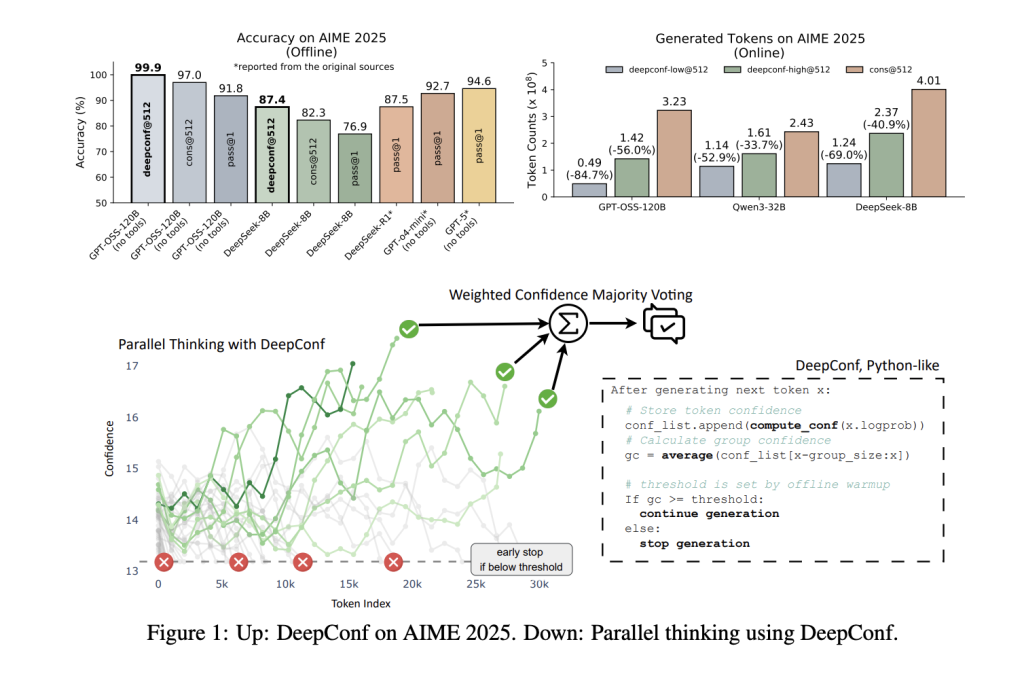

Large Language Models (LLMs) have reshaped AI reasoning and have parallel thinking and self-contradictory approaches are often considered key advances. However, these technologies face a basic trade-off: sampling multiple inference paths improves accuracy, but is computationally expensive. Researchers from Meta AI and UCSD DeepConfthe new AI approach almost eliminates this trade-off. Provided by DeepConf State-of-the-art inference performance and improve efficiency– For example 99.9% accuracy In the hard Aime 2025 math competition, use open source GPT-OSS-1220B, and at the same time, you need 85% reduction in token generation More than the traditional parallel thinking method.

Why DeepConf?

Parallel thinking (in most votes) is the de facto criterion for improving LLM reasoning: generate multiple candidate solutions and then select the most common answers. Although effective, this method has Reduced returns– Accurate plateaus will even drop when sampling, as low-quality traces of reasoning can dilute votes. Furthermore, hundreds or thousands of traces per query are time- and computationally expensive.

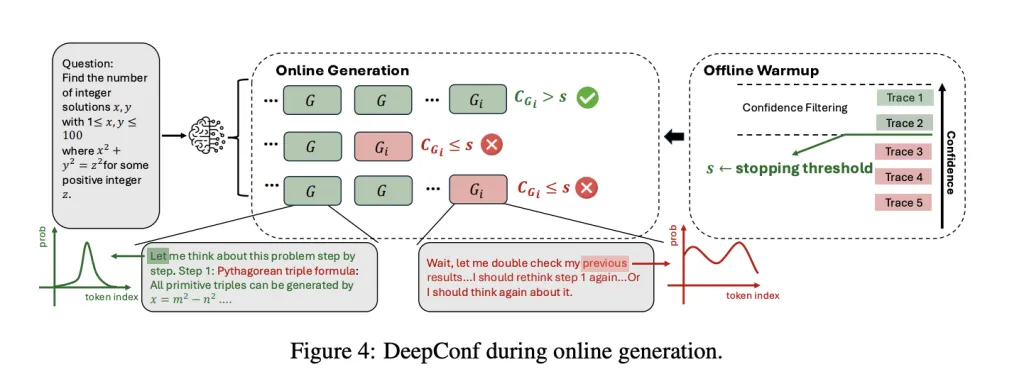

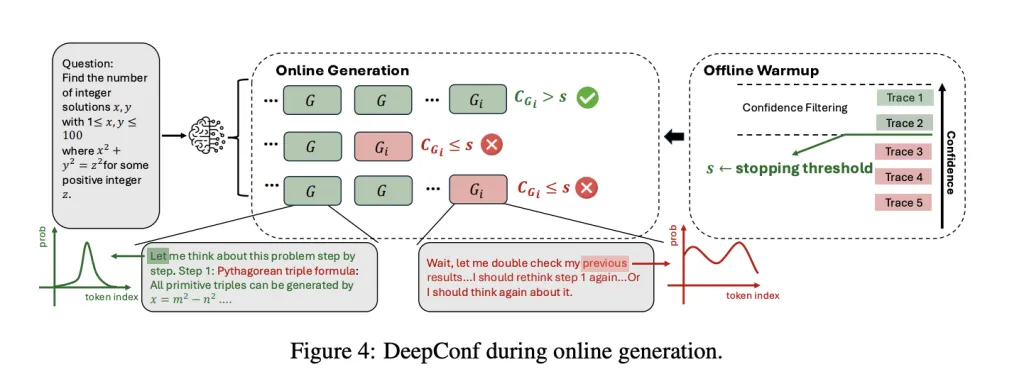

DeepConf pass Use LLM’s own confidence signal. Instead of dealing with all reasoning trajectories equally, it dynamically filters out low confidence paths Passed from generation to generation (online) or after (Offline) – Use only the most reliable tracks to inform the final answer. This strategy is The model is out of place,need No training or hyperparameter adjustmentand can be inserted into any existing model or framework that changes with minimal code.

How DeepConf works: Confidence as a guide

DeepConf describes several advances in how to measure and use confidence:

- Token Confidence: For each generated token, the negative mean logarithmic probability of the TOP-K candidate is calculated. This provides local certainty.

- Group Confidence: Average token confidence (e.g. 2048 tokens) on the sliding window provides a smooth, intermediate inference quality signal.

- Tail Confidence: Focus on the last part of the reasoning trace where the answer often resides to capture the later crash.

- Minimum group confidence: Identify the least confident segment of the trace, which usually indicates a collapse in reasoning.

- Minimum percentile confidence: Highlight the worst segments that are the most predictable.

These metrics are then used for Weight voting (The number of high-letter traces is higher) or Filter track (Retain only the most confident track). exist Online mode,DeepConf stops generating traces once its confidence drops below the threshold of dynamic calibration, greatly reducing wasteful calculations.

Key results: Performance and efficiency

DeepConf was evaluated in multiple inference benchmarks (AIME 2024/2025, HMMT 2025, BRUMO25, GPQA-DIAMOND) and models (DeepSeek-8B, QWEN3-8B/32B, GPT-OSS-OSS-200B/120B). The results are surprising:

| Model | Dataset | By @1 ACC | cons@512 ACC | DeepConf@512 ACC | Token Save |

|---|---|---|---|---|---|

| gpt-oss-1220b | Aime 2025 | 91.8% | 97.0% | 99.9% | -84.7% |

| DeepSeek-8B | Aime 2024 | 83.0% | 86.7% | 93.3% | -77.9% |

| QWEN3-32B | Aime 2024 | 80.6% | 85.3% | 90.8% | -56.0% |

Performance improvement: DeepConf improves accuracy across models and datasets ~10 percentage points Most votes that exceed the standard usually saturate the upper limit of the benchmark.

Ultra-high efficiency: Through an early stagnant low confidence trajectory, DeepConf reduces the total number of tokens generated 43–85%ultimately no loss of accuracy (usually gain).

Plugin: DeepConf is out of the box with any model – no fine-tuning, no hyperparameter search, and no changes to the underlying architecture. You can put it into an existing service stack (e.g. VLLM) ~50 lines of code.

Easy to deploy: This method is a lightweight extension for existing inference engines, requiring only access to the token-level login program and a few lines of logic for confidence calculations and early stops.

Simple integration: Minimum code, maximum impact

The implementation of DeepConf is very simple. For VLLM, the changes are small:

- Extended LogProbs processor Track sliding window confidence.

- Add early checks Before emitting each output.

- Pass the confidence threshold Through the API, no model retraining.

This allows any OpenAI-compatible endpoint to be powered by DeepConf with a single additional setup, which makes adoption in production environments trivial.

in conclusion

Meta ai’s deepconf represents leap In LLM inference, peak accuracy and unprecedented efficiency are provided. By dynamically leveraging the internal confidence of the model, DeepConf implements things that were out of reach of the previous open source models: Nearly perfect results of elite inference tasks, a small part of the calculation cost.

FAQ

FAQ 1: How does DeepConf improve accuracy and efficiency compared to most votes?

DeepConf’s trust-aware filtering and voting took precedence over traces with higher model certainty, up to 10 percentage points in the inference benchmarks compared to most votes alone. Meanwhile, its early termination of low confidence traces cuts token usage by up to 85%, providing performance and huge efficiency gains in actual deployment

FAQ 2: Can DeepConf be used with any language model or service framework?

Yes. DeepConf is completely non-static and can be integrated into any service stack (including open source and business models) without modification or retraining. Deployment requires only minimal changes (VLLM’s code ~50 lines), leveraging the token login program to calculate confidence and handle early stops.

FAQ 2: Does DeepConf require retraining, special data or complex tweaks?

no. DEEPCONF runs entirely within inference time and does not require additional model training, fine-tuning, or hyperparameter search. It uses only the built-in LogProb output and immediately uses the standard API settings for leadership frameworks; it is scalable, robust and can be deployed on actual workload without interruption.

Check Paper and project pages. Check out ours anytime Tutorials, codes and notebooks for github pages. Also, please stay tuned for us twitter And don’t forget to join us 100K+ ml reddit And subscribe Our newsletter.

Asif Razzaq is CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, ASIF is committed to harnessing the potential of artificial intelligence to achieve social benefits. His recent effort is to launch Marktechpost, an artificial intelligence media platform that has an in-depth coverage of machine learning and deep learning news that can sound both technically, both through technical voices and be understood by a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience.