LLMS technology roadmap for background engineering: mechanisms, benchmarks and open challenges

Estimated reading time: 4 minute

Paper”Investigation on context engineering of Dayu ModelEstablish Context Engineering As a formal discipline, far beyond rapid engineering, it provides a unified, systematic framework for designing, optimizing and managing information guiding large language models (LLMS). Here is an overview of its main contributions and framework:

What is context engineering?

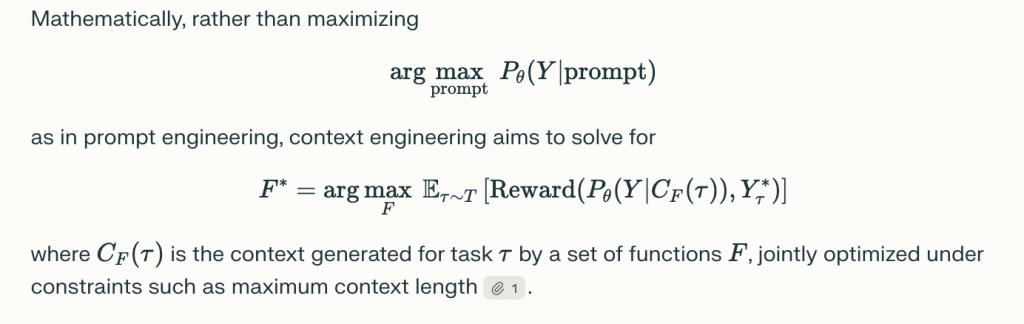

Context Engineering Defined as the science and engineering that organizes, assembles and optimizes all forms of context to maximize understanding, reasoning, adaptability and real-world performance. Instead of treating the context as a static string (the premise of timely engineering), context engineering treats it as a dynamic, structured component component, i.e. through explicit functions, usually under intense resource and building constraints, through explicit functions.

Context Engineering Taxonomy

This paper breaks down the context engineering into:

1. Basic Components

one. Context search and power generation

- Including the promotion of engineering, intrinsic learning (zero/minority, business chain, thought tree, thought graph), external knowledge retrieval (e.g., generation of search authorizations, knowledge graphs), and dynamic assembly of context elements1.

- Highlight technologies such as clear frameworks, dynamic template components and modular search architectures.

b. Context processing

- Solve long sequence processing (using Mamba, Longnet, Flashingtention), context self-performing (iterative feedback, self-evaluation), and integration of multi-modal and structured information (visual, audio, graph, graph, table).

- Strategies include attention sparseness, memory compression, and intrinsic learning meta-optimization.

c. Context Management

- Related to memory hierarchy and storage architecture (short-term context windows, long-term memory, external databases), memory paging, context compression (autoencoder, repetitive compression), and scalable management of multi-transform or multi-proxy settings.

2. System implementation

one. Search Authorized Generation (RAG)

- Modular, proxy and graphically enhanced rag architecture integrates external knowledge and supports dynamics, sometimes multi-agent retrieval pipelines.

- Enable real-time knowledge updates and complex inference on structured databases/graphs.

b. Memory system

- Persistence and hierarchical storage are implemented, thus enabling vertical learning and knowledge recollection of the agent (e.g., commemoration, memory bank, external vector database).

- Key to scaling, multi-steering conversations, personalized assistants and simulation agents.

c. Tool Integration Reasoning

- LLM combines language reasoning with world-based capabilities through function calls or environment interaction using external tools (API, search engines, code execution).

- Enable new fields (mathematics, programming, network interaction, scientific research).

d. Multi-agent system

- Coordination between multiple LLMs (agents) through standardized protocols, orchestration and context sharing – this is critical for complex, collaborative problem-solving and distributed AI applications.

Key insights and research gaps

- Understanding – Agent asymmetryLLM with advanced context engineering can understand very complex multi-faceted contexts, but it is still difficult to produce output that matches this complexity or length.

- Integration and modularity: Optimal performance comes from a modular architecture combining multiple technologies (retrieval, memory, tool use).

- Evaluation Limits: Current evaluation metrics/benchmarks (e.g. BLEU, Rouge) are often unable to capture the composition, multi-step and collaborative behavior of advanced context engineering implementations. New benchmarks and dynamics are needed, overall evaluation paradigms.

- Open research questions: Theoretical basis, effective scaling (especially computationally), cross-patterned and structured context integration, realistic deployment, security, consistency and ethical issues remain open research challenges.

Application and impact

Context engineering supports powerful domain adaptive AI:

- Long document/questions

- Personalized digital assistant and memory authorized agent

- Science, medicine and technology problem solving

- Multi-agent collaboration in business, education and research

The direction of the future

- Unified Theory: Developing a framework for mathematics and information theory.

- Scaling and efficiency: Pay attention to innovations in mechanisms and memory management.

- Multimodal integration: Seamless coordination of text, visual, audio and structured data.

- Strong, safe and ethical deployment: Ensure reliability, transparency and fairness in actual systems.

Anyway: Context engineering is becoming a key discipline guiding the next generation of LLM-based intelligent systems, shifting the focus from creative timely writing to rigorous science of information optimization, system design and context-driven AI.

Check Paper. Check out ours anytime Tutorials, codes and notebooks for github pages. Also, please stay tuned for us twitter And don’t forget to join us 100K+ ml reddit And subscribe Our newsletter.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.