Liquid AI’s LFM2-VL-3B brings a 3B-parameter vision language model (VLM) to edge devices

Liquid AI has released LFM2-VL-3B, a 3B-parameter vision language model for image-to-text tasks. It extends the LFM2-VL family beyond the 450M and 1.6B variants. The goal is higher accuracy while preserving the speed profile of the LFM2 architecture. The model is available on LEAP and Hugging Face under the LFM Open License v1.0.

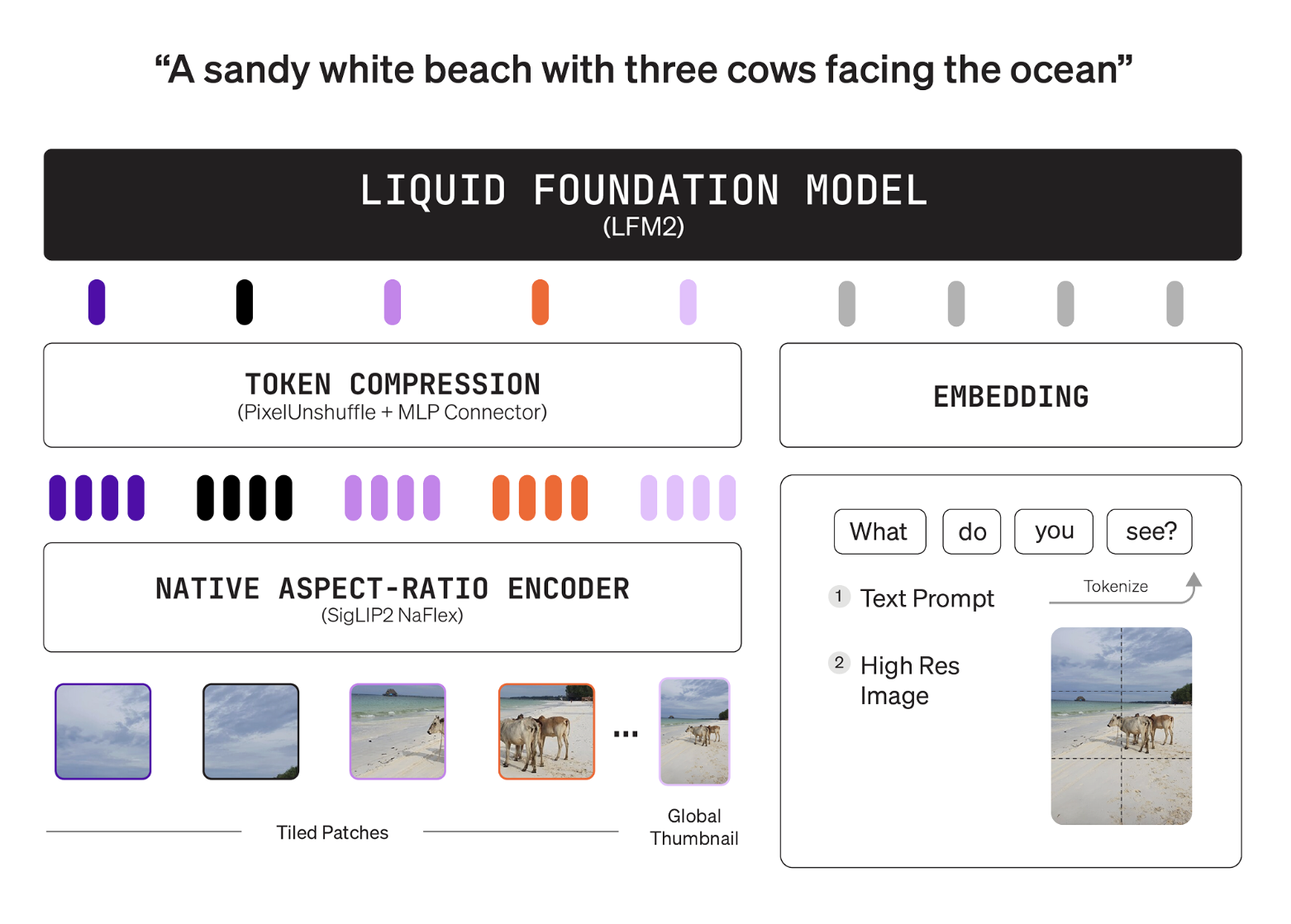

Model overview and interface

LFM2-VL-3B accepts interleaved image and text input and generates text output. The model exposes ChatML-like templates. The processor plugs into a

Architecture

The stack pairs a language tower with a shape-aware vision tower and a projector. The language tower is LFM2-2.6B, a hybrid convolution-plus-attention backbone. The vision tower is a 400M-parameter SigLIP2 NaFlex encoder, which preserves the native aspect ratio and avoids distortion. The connector is a 2-layer MLP with pixel unshuffle, which compresses image tokens before they are fused into the language space. This design lets users cap the vision token budget without retraining the model.
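To make the token compression concrete, here is a minimal pure-Python sketch of pixel unshuffle on a toy patch grid. The unshuffle factor of 2, the 16×24 grid, and the 3-dimensional features are illustrative assumptions, not values confirmed by the article, and the 2-layer MLP that would follow is left out.

```python
def pixel_unshuffle(grid, factor=2):
    """Merge each factor x factor block of patch vectors into one longer vector.

    grid is a list of rows; each row is a list of feature vectors (lists of floats).
    """
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid sides must be divisible by factor")
    merged = []
    for r in range(0, rows, factor):
        merged_row = []
        for c in range(0, cols, factor):
            cell = []
            # Concatenate the features of every patch in this block.
            for dr in range(factor):
                for dc in range(factor):
                    cell.extend(grid[r + dr][c + dc])
            merged_row.append(cell)
        merged.append(merged_row)
    return merged


# Toy 16 x 24 patch grid with 3-dimensional features per patch (assumed sizes).
patch_grid = [[[float(r), float(c), 1.0] for c in range(24)] for r in range(16)]
tokens = pixel_unshuffle(patch_grid, factor=2)

num_before = len(patch_grid) * len(patch_grid[0])
num_after = len(tokens) * len(tokens[0])
print(num_before, "->", num_after, "tokens; feature width", len(tokens[0][0]))
```

The token count drops by the square of the factor while each remaining token carries a wider feature vector, which is what a projector MLP would then map into the language model's embedding width.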

The encoder processes images at native resolution up to 512×512. Larger inputs are split into non-overlapping 512×512 patches, and a thumbnail pathway supplies global context during tiling. The effective token mapping is documented with concrete examples: a 256×384 image maps to 96 tokens and a 1000×3000 image maps to 1,020 tokens. The model card exposes user controls for the minimum and maximum number of image tokens and a tiling switch. These controls trade speed against quality at inference time.
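The 256×384 → 96 figure can be reproduced with simple arithmetic. The sketch below assumes a 16-pixel patch size and a 2×2 pixel unshuffle. Neither value is stated in the article, but together they match the documented example. The function name is hypothetical, and the sketch covers only the native (untiled) path. The 1,020-token tiled example depends on processor resizing and thumbnail logic that is not reproduced here.

```python
import math


def native_image_tokens(height, width, patch_size=16, unshuffle_factor=2):
    """Estimate image tokens for an image handled without tiling.

    Assumptions (not from the article): 16-pixel patches, 2x2 pixel unshuffle,
    no resizing, and no extra special tokens.
    """
    if height > 512 or width > 512:
        raise ValueError("inputs above 512x512 take the tiled path, not modeled here")
    patches_h = math.ceil(height / patch_size)
    patches_w = math.ceil(width / patch_size)
    # Pixel unshuffle merges unshuffle_factor x unshuffle_factor patches into one token.
    return math.ceil(patches_h / unshuffle_factor) * math.ceil(patches_w / unshuffle_factor)


small = native_image_tokens(256, 384)
full_tile = native_image_tokens(512, 512)
print(small, full_tile)
```

Under these assumptions a full 512×512 tile costs 256 tokens, so token cost per image scales with area and can be budgeted before inference.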

Inference settings

The Hugging Face model card provides recommended parameters. Text generation uses a temperature of 0.1, a min_p of 0.15, and a repetition penalty of 1.05. The vision settings use a minimum of 64 image tokens, a maximum of 256 image tokens, and image splitting enabled. The processor applies the chat template and the image sentinel automatically. The model card example uses AutoModelForImageTextToText and AutoProcessor with bfloat16 precision.
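As a rough illustration of these settings, the sketch below wires the text-generation values into a Hugging Face `transformers` call. The repository id `LiquidAI/LFM2-VL-3B`, the `do_sample` flag, the `max_new_tokens` value, and the helper name `describe_image` are assumptions for illustration. The image-token controls are omitted because the article does not say how they are passed to the processor. Check the model card before relying on any of this.

```python
MODEL_ID = "LiquidAI/LFM2-VL-3B"  # assumed Hugging Face repo id

GENERATION_KWARGS = {
    "do_sample": True,           # assumption: sampling on so temperature/min_p apply
    "temperature": 0.1,          # from the recommended settings
    "min_p": 0.15,               # from the recommended settings
    "repetition_penalty": 1.05,  # from the recommended settings
    "max_new_tokens": 128,       # illustrative value, not from the article
}


def describe_image(image_path, prompt="Describe this image."):
    """Hypothetical helper: load the model and answer one image prompt."""
    # Heavy imports are kept local so the settings above can be inspected
    # without installing torch or transformers.
    import torch
    from PIL import Image
    from transformers import AutoModelForImageTextToText, AutoProcessor

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    # Newer transformers releases also accept dtype= in place of torch_dtype=.
    model = AutoModelForImageTextToText.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16
    )
    conversation = [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": Image.open(image_path)},
                {"type": "text", "text": prompt},
            ],
        }
    ]
    # The processor applies the chat template and inserts the image sentinel.
    inputs = processor.apply_chat_template(
        conversation,
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output_ids = model.generate(**inputs, **GENERATION_KWARGS)
    return processor.batch_decode(output_ids, skip_special_tokens=True)[0]
```

Calling `describe_image("photo.jpg")` downloads the weights on first use. The low temperature combined with min_p keeps output close to greedy decoding while still filtering unlikely tokens.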

How is it trained?

Liquid AI describes a staged approach. The team performs joint mid-training that adjusts the text-to-image ratio over time. The model then undergoes supervised fine-tuning focused on image understanding. Data sources include large-scale open datasets plus in-house synthetic vision data for task coverage.

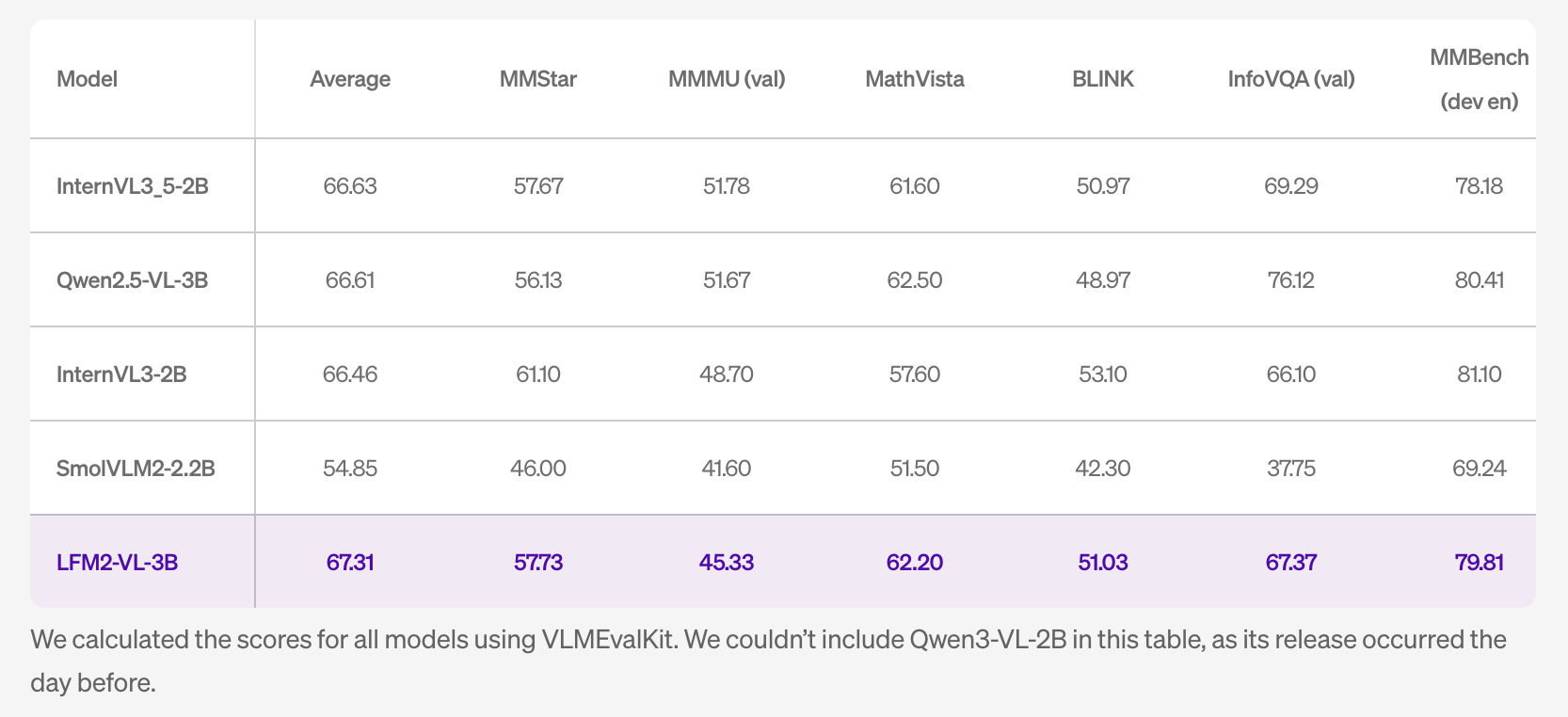

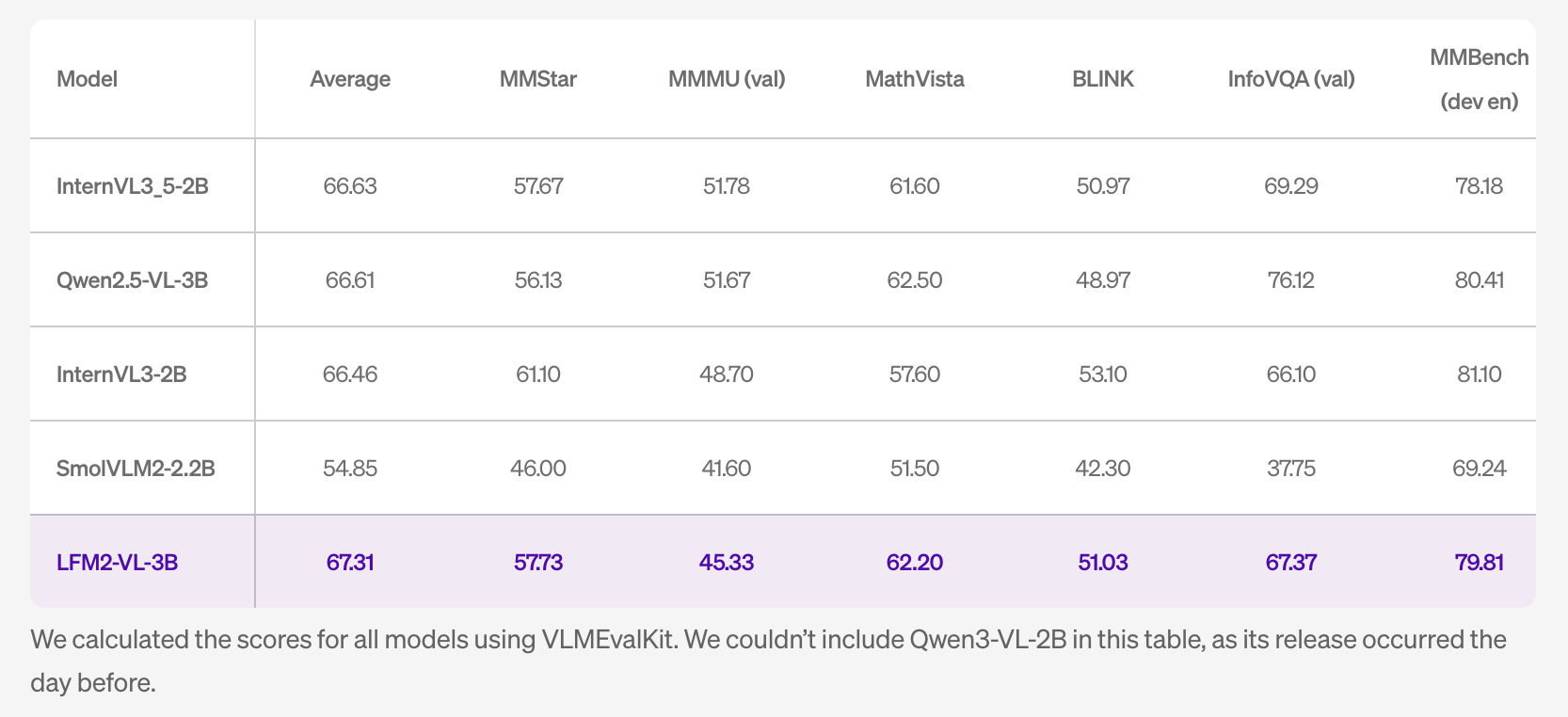

Benchmark

The research team reports competitive results among lightweight open VLMs. The model scores 51.83 on MM-IFEval, 71.37 on RealWorldQA, 79.81 on MMBench dev en, and 89.01 on POPE. The table notes that scores for other systems were computed with VLMEvalKit. It excludes Qwen3-VL-2B because that system was released only one day earlier.

Language capability remains close to that of the LFM2-2.6B backbone. The research team cites 30% on GPQA and 63% on MMLU. This matters when perception tasks include knowledge queries. The team also says it expanded multilingual visual understanding to English, Japanese, French, Spanish, German, Italian, Portuguese, Arabic, Chinese, and Korean.

Why should edge users care?

The architecture keeps compute and memory within small device budgets. Image tokens are compressible and user-constrained, so throughput is predictable. The SigLIP2 400M NaFlex encoder preserves aspect ratio, which helps fine-grained perception. The projector reduces tokens at the connector, which improves tokens per second. The research team also published a GGUF build for on-device runtimes. These properties are useful for robotics, mobile, and industrial customers that need local processing and strict data boundaries.

Main points

- Compact multimodal stack: The 3B-parameter LFM2-VL-3B pairs the LFM2-2.6B language tower with a 400M SigLIP2 NaFlex vision encoder and a 2-layer MLP projector for image token fusion. NaFlex preserves the native aspect ratio.

- Resolution handling and token budgets: Images run natively up to 512×512. Larger inputs are tiled into non-overlapping 512×512 patches, with a thumbnail pathway for global context. Documented token mappings include 256×384 → 96 tokens and 1000×3000 → 1,020 tokens.

- Inference interface: ChatML-like prompts

- Measured performance: Reported results include MM-IFEval 51.83, RealWorldQA 71.37, MMBench-dev-en 79.81, and POPE 89.01. Language-only signals from the backbone are about 30% on GPQA and 63% on MMLU, which is useful for mixed perception and knowledge workloads.

LFM2-VL-3B is a practical step for edge multimodal workloads. The 3B stack pairs LFM2-2.6B with a 400M SigLIP2 NaFlex encoder and an efficient projector, which lowers image token counts and makes latency more predictable. Native-resolution processing with 512×512 tiling and token caps gives deterministic budgets. The reported scores on MM-IFEval, RealWorldQA, MMBench, and POPE are competitive for this size. Open weights, a GGUF build, and LEAP access reduce integration friction. Overall, this is an edge-ready VLM release with clear controls and transparent benchmarks.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.