Less: Why searching fewer files can improve AI answers

Retrieval-enhanced generation (RAG) is a method of AI systems combining language models with external knowledge sources. In short, AI first searches for relevant documents related to user queries (such as articles or web pages) and then uses these documents to generate more accurate answers. Celebrate this approach by helping large language models (LLMs) maintain facts and reduce hallucinations by responsive rooted in actual data.

Intuitively, one might think that the more documents AI retrieves, the better the answer. However, recent research has shown a surprising twist: When providing information to AI, sometimes less is more.

Less documents, better answers

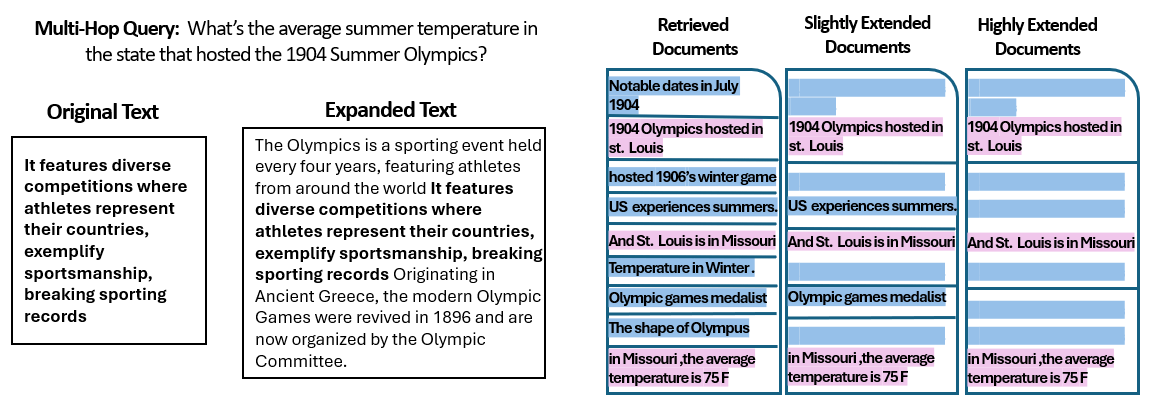

A new study by researchers from Hebrew University in Jerusalem explores number Documents assigned to the rag system can affect its performance. Crucially, they keep the total amount of text constant – meaning that if fewer documents are provided, those documents are slightly expanded to fill the same length as many files. In this way, any performance differences can be attributed to the number of documents, rather than just having shorter inputs.

The researchers used a questioning dataset (Musique) with trivia questions, each dataset (Musique) initially paired with 20 Wikipedia paragraphs (only a few of them actually contain the answers, and the rest were distractors). By reducing the number of documents from 20 to 2-4 truly relevant documents and filling those documents with some extra context to maintain consistent lengths – they create scenarios for AI where less material is considered, but the total total words are nearly the same.

The results are surprising. In most cases, the AI model answers more accurately when it provides less documentation than a complete set. Great performance improvements – In some cases, accuracy (F1 score) can be improved up to 10% (F1 score) when the system uses only a few supporting documents instead of large collections. This counterintuitive improvement was observed in several different open source language models, including Meta’s Llama and other variants, suggesting that the phenomenon is independent of a single AI model.

One model (QWEN-2) is a notable exception, which handles multiple documents without score drops, but almost all tested models have performance on the whole with fewer documents. In other words, adding more critically related works beyond reference material actually hurts its performance more frequently than what it helps.

Source: Levy et al.

Why are you so surprised? Often, rag systems are designed to help only AI under the assumption of retrieving a wider range of information—after all, if the answer is not in the first few documents, it might be the eleventh or 1920s.

This study flipped the script and showed that abuse on additional files backfire. Even if the total text length remains constant, only many different documents exist (each has its own context and quirk) make the task of asking questions more challenging for AI. It seems that, except for a certain point, each additional document introduces more noise than the signal, confusing the model and impairing its ability to extract the correct answers.

Why less in rags

Once we consider how AI language models process information, the result of “less more” makes sense. When AI gets only the most relevant documents, the context it sees is focused and distracting, like a student awarded for proper research.

In this study, when only supporting documents were granted, the model performed significantly better, and irrelevant materials could be removed. The rest of the context is not only shorter, but cleaner – it contains the fact that points directly to the answer, nothing else. With the cluttered documents, the model can focus all its attention on relevant information, making it less likely to be stuck or confused.

On the other hand, when many documents are retrieved, the AI must filter through relevant and unrelated content. These additional documents are usually “similar but unrelated” – they may share topics or keywords with the query, but do not actually contain answers. Such content misleads the model. AI can waste efforts trying to connect cross-documents that don’t actually lead to the right answer, or worse yet, it can mistakenly merge information from multiple sources. This increases the risk of hallucination – AI produces answers that sound reasonable but are not based on any single source instances.

Essentially, feeding too much document to the model can dilute useful information and introduce conflicting details, making it harder for AI to determine that it is correct.

Interestingly, the researchers found that if the additional documents are obviously insignificant (e.g., random irrelevant text), the model would be better off ignoring them. The real trouble comes from distracted data that looks relevant: When all the retrieved text is on a similar topic, the AI thinks it should use everything, and it may be difficult to tell which details are actually important. This is consistent with the results of the study Random distractors cause less chaos than real distractors In the input. AI can filter blatant nonsense, but clever subject information is a slick trap – it sneaks away and derails under the guise of relevance. By reducing the number of documents to the really necessary documents, we first avoid setting these traps to.

There is also a practical benefit: Retrieving and processing less documents reduces the computational overhead of the rag system. Every document using time and computing resources must be analyzed (embedded, read, and engaged). Eliminating redundant documentation can make the system more efficient – it can find answers faster and it is cheaper. With fewer sources to improve accuracy, we get a win-win situation: better answers and a leaner, more efficient process.

Source: Levy et al.

Rethinking the rag: the direction of the future

This new evidence suggests that quality often outperforms quantity in retrieval, which is of great significance to the future of AI systems that rely on external knowledge. It shows that designers of rag systems should prioritize smart filtering and ranking of documents rather than pure volume. Rather than providing 100 possible paragraphs and hoping that the answers are buried somewhere, only the most important few highly relevant paragraphs are available.

The authors of the study highlight the need for retrieval methods to “balance between balance and diversity” in the information they provide to the model. In other words, we want to provide enough thematic coverage to answer this question, but it is not so that the core facts are drowned in irrelevant text.

Going forward, researchers may explore technologies that help AI models process multiple documents more elegantly. One way is to develop a better searcher system or re-level to identify which documents really add value and which documents introduce conflicts only. Another perspective is to improve the language model itself: if a model (such as QWEN-2) manages to cope with many documents without losing accuracy, thus examining how it is trained or structured, it can provide clues that make other models more powerful. Perhaps the large language models of the future will combine mechanisms to identify two sources saying the same thing (or contradict each other) and focus accordingly. The purpose is to enable the model to take advantage of a wide variety of sources without falling into the sacrificial victims of chaos – effectively achieving the best of both worlds (information breadth and clarity of focus).

It is also worth noting that as AI systems gain a larger context window (the ability to read more text at once), just dumping more data into the prompt, this is not a silver bullet. A larger context does not mean a better understanding. This study shows that even if AI can technically read 50 pages at a time, giving it 50 pages of mixed quality information may not produce good results. The model still benefits from curation, and related content can be used instead of indistinguishable dumps. In fact, in the age of huge context windows, intelligent retrieval may become even more important – to ensure that additional capabilities are used for valuable knowledge rather than noise.

From “More documents, same length” (Apt paper) We are encouraged to re-examine our hypotheses in AI research. Sometimes feeding AI all the data we have is not as effective as we think. By focusing on the most relevant information, we not only improve the accuracy of the answers generated by AI, but also make the system more efficient and trustworthy. It’s a counterintuitive course, but with exciting consequences: Future rag systems may carefully select fewer, better documents to retrieve, which is both smarter and streamlined.