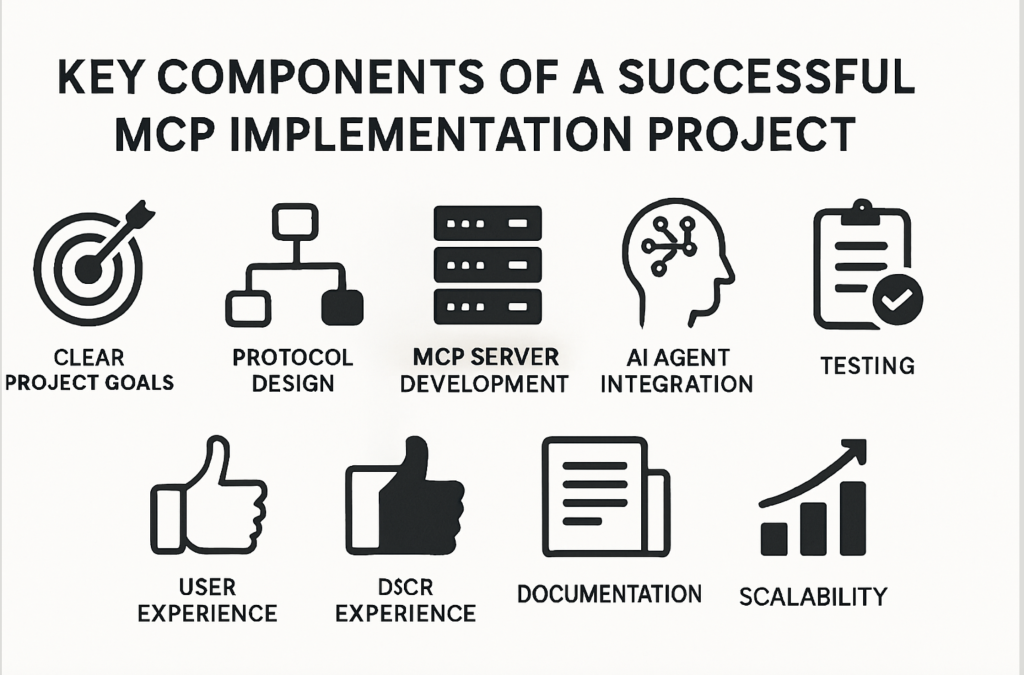

The Model Context Protocol (MCP) is changing the way AI agents interact with backend services, applications, and data. A successful MCP implementation takes more than writing protocol-compliant code. Systematic adoption spans architecture, security, user experience, and operational rigor. What follows is a data-informed look at the fundamental components that help MCP projects deliver value and resilience in production environments.

1. Clear project goals, use cases, and stakeholder buy-in

- Define the business and technical problems you are solving with MCP: Example use cases include multi-application workflow automation, AI-driven content generation, and agent-driven DevOps operations.

- Engage users early: Successful MCP teams host requirements workshops and interviews, and prioritize quick pilot wins.

2. Protocol, integration and architecture design

- Map AI agents, MCP middleware, and target applications: Loose coupling (stateless API endpoints) is key. Most advanced teams use HTTP/2 or WebSockets to push data in real time, which avoids heavy polling and reduces latency in agent workflows by up to 60%.

- Context payloads: Embedding rich context (user, task, permissions) in protocol messages leads to higher agent accuracy and fewer ambiguous requests, which is critical for security and compliance.
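The two points above can be sketched as a stateless handler. Each request is a self-contained JSON-RPC 2.0 message (the framing MCP uses) that carries its own context, so any server replica can answer it without session state. This is a minimal sketch under stated assumptions: the `context` field inside `params` and the `echo` tool are hypothetical, the `tools/call` method name is used only for flavor, and the transport (HTTP/2, WebSockets) is omitted. It is not the MCP SDK's API.

```python
import json
from typing import Any

# Hypothetical tool table; a real server would dispatch to backend integrations.
TOOLS = {
    "echo": lambda args, ctx: {"text": args.get("text", ""), "user": ctx["user"]},
}

# Assumed context fields every request must carry (hypothetical convention).
REQUIRED_CONTEXT = ("user", "task", "permissions")


def error(req_id: Any, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(raw: str) -> str:
    """Stateless handler: everything needed to serve the call is in the message."""
    try:
        req = json.loads(raw)
    except json.JSONDecodeError:
        return json.dumps(error(None, -32700, "Parse error"))

    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return json.dumps(error(req_id, -32601, "Method not found"))

    params = req.get("params", {})
    ctx = params.get("context", {})  # hypothetical context envelope
    missing = [k for k in REQUIRED_CONTEXT if k not in ctx]
    if missing:
        return json.dumps(error(req_id, -32602, f"Missing context: {missing}"))

    name = params.get("name")
    if name not in TOOLS:
        return json.dumps(error(req_id, -32602, f"Unknown tool: {name}"))
    if name not in ctx["permissions"]:
        # -32000..-32099 is the JSON-RPC range for implementation-defined server errors.
        return json.dumps(error(req_id, -32001, f"Not permitted: {name}"))

    result = TOOLS[name](params.get("arguments", {}), ctx)
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

Because the handler keeps no state between calls, a load balancer can route each message to any replica, which is what makes the loose coupling described above practical.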

3. Strong security and permissions

Data point: A 2024 GitLab DevSecOps survey found that 44% of teams rank security as their top concern for AI workflows.

- Authentication: OAuth 2.0, JWT tokens, and mutual TLS remain best practices for MCP endpoints.

- Granular permissions: Implement role-based access control (RBAC), with an audit record for every AI-triggered action.

- User consent and transparency: End users should be able to view, approve and revoke access to data and controls.
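The permission and consent points above can be combined into one authorization check. This is a minimal sketch, assuming the caller's identity has already been verified upstream (for example via OAuth 2.0, JWT, or mutual TLS); the role names, actions, and `AccessController` class are hypothetical.

```python
import time
from dataclasses import dataclass, field

# Hypothetical role -> allowed actions mapping (RBAC).
ROLE_PERMISSIONS = {
    "viewer": {"read_file"},
    "editor": {"read_file", "write_file"},
}


@dataclass
class AccessController:
    audit_log: list = field(default_factory=list)
    # (user, action) pairs the user has explicitly revoked for agents.
    revoked: set = field(default_factory=set)

    def revoke(self, user: str, action: str) -> None:
        """User-facing consent control: withdraw agent access to an action."""
        self.revoked.add((user, action))

    def authorize(self, user: str, role: str, action: str, agent: str) -> bool:
        """Allow only if the role grants the action and the user has not revoked it."""
        allowed = (
            action in ROLE_PERMISSIONS.get(role, set())
            and (user, action) not in self.revoked
        )
        # Every AI-triggered decision is recorded, whether allowed or denied.
        self.audit_log.append({
            "ts": time.time(), "agent": agent, "user": user,
            "role": role, "action": action, "allowed": allowed,
        })
        return allowed
```

Logging denials as well as grants matters here: the audit trail is what lets users and reviewers see what an agent attempted, not just what it was allowed to do.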

4. MCP server development and scalability

- Reusable, stateless MCP servers: Architect servers for horizontal scaling (containerized, cloud-native). Container orchestration (Kubernetes, Docker Swarm) is common for elastic scaling.

- OpenAPI definitions: Use OpenAPI/Swagger to document endpoints so that AI agents and developers can get started quickly.

- Extensibility: Modular plug-in or handler architectures support future integrations without core refactoring, a hallmark of the most successful MCP deployments.

5. AI Agent Integration, Memory and Inference

- Context Memory: Store recent operations (with expiration) or complete session transcripts for auditability and continuity.

- Error handling: Implement structured error payloads and fallback logic. This is critical wherever agent actions are irreversible or expensive.
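Both points above can be sketched in a few lines: a memory that expires entries after a time-to-live, and a wrapper that turns exceptions into a structured payload with a fallback result. This is a minimal sketch under stated assumptions. `ContextMemory` and `run_with_fallback` are hypothetical names, and treating only `TimeoutError` as retryable is an illustrative choice, not a rule.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class ContextMemory:
    """Keeps recent agent operations; each entry expires after `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable clock makes expiry testable
        self._items: Dict[str, Tuple[float, Any]] = {}

    def put(self, key: str, value: Any) -> None:
        self._items[key] = (self.clock() + self.ttl, value)

    def get(self, key: str) -> Optional[Any]:
        self._evict()
        entry = self._items.get(key)
        return entry[1] if entry else None

    def _evict(self) -> None:
        now = self.clock()
        for key in [k for k, (expires, _) in self._items.items() if expires <= now]:
            del self._items[key]


def run_with_fallback(action: Callable[[], Any], fallback: Callable[[], Any]) -> dict:
    """Return a structured payload instead of raising, so the agent can reason about failures."""
    try:
        return {"ok": True, "result": action()}
    except Exception as exc:  # sketch only: production code should catch narrower errors
        return {
            "ok": False,
            "error": {
                "type": type(exc).__name__,
                "message": str(exc),
                "retryable": isinstance(exc, TimeoutError),  # assumed retry policy
            },
            "fallback": fallback(),
        }
```

The structured error gives the agent something it can act on (retry, ask the user, or use the fallback) rather than an opaque stack trace.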

6. Comprehensive testing and verification

- Automated test suites: Use mocks and stubs for MCP integration points. Cover input validation, error propagation, and edge cases.

- User acceptance testing: Pilot workflows with real users, collect telemetry, and iterate quickly based on feedback.
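The automated-testing point above can be sketched with the standard library's `unittest` and `unittest.mock`. The backend client is replaced by a `Mock`, so the tests cover input validation and error propagation without touching a real service. `create_ticket` is a hypothetical MCP tool used only for illustration.

```python
import unittest
from unittest import mock


def create_ticket(client, title: str) -> dict:
    """Hypothetical MCP tool that wraps a backend ticketing client."""
    if not title.strip():
        raise ValueError("title must not be empty")
    try:
        ticket_id = client.create(title=title)
    except ConnectionError as exc:
        # Surface backend failures as a structured, agent-readable error.
        return {"ok": False, "error": f"backend unavailable: {exc}"}
    return {"ok": True, "id": ticket_id}


class CreateTicketTests(unittest.TestCase):
    def test_success_uses_stubbed_backend(self):
        client = mock.Mock()
        client.create.return_value = 42
        self.assertEqual(create_ticket(client, "Bug"), {"ok": True, "id": 42})
        client.create.assert_called_once_with(title="Bug")

    def test_input_validation(self):
        with self.assertRaises(ValueError):
            create_ticket(mock.Mock(), "   ")

    def test_error_propagation(self):
        client = mock.Mock()
        client.create.side_effect = ConnectionError("timeout")
        result = create_ticket(client, "Bug")
        self.assertFalse(result["ok"])
        self.assertIn("backend unavailable", result["error"])


if __name__ == "__main__":
    # exit=False keeps the interpreter alive (useful in notebooks or CI wrappers).
    unittest.main(argv=["create_ticket_tests"], exit=False)
```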

7. User experience and feedback mechanisms

- Conversational UX: Natural-language feedback and confirmations are crucial for agent-driven flows. Well-designed systems have shown intent recognition rates above 90% (Google Dialogflow study).

- Continuous feedback loop: Integrate NPS surveys, error reporting, and feature requests directly into MCP-enabled tools.
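The two points above can be sketched briefly: a confirmation gate that asks the user before an irreversible agent action, and the standard Net Promoter Score calculation (percentage of promoters scoring 9 to 10, minus percentage of detractors scoring 0 to 6) for in-tool surveys. `PendingAction` and `run_action` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PendingAction:
    description: str
    irreversible: bool
    execute: Callable[[], str]


def run_action(action: PendingAction, confirmed: bool = False) -> dict:
    """Ask for confirmation before irreversible actions; run reversible ones directly."""
    if action.irreversible and not confirmed:
        return {
            "status": "needs_confirmation",
            "prompt": f"About to {action.description}. Proceed? (yes/no)",
        }
    return {"status": "done", "result": action.execute()}


def net_promoter_score(scores: List[int]) -> float:
    """NPS on 0-10 survey scores: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("no survey responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)
```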

8. Documentation and training

- Comprehensive, up-to-date documentation: The best-performing teams publish API documentation, setup guides, and integration scripts.

- Hands-on training: Interactive demonstrations, sample code, and “office hours” help drive adoption among developers and non-developers.

9. Monitoring, logging, and maintenance

- Dashboards: Monitor agent invocations, action completions, and API errors in real time.

- Automated alerts: Set threshold-based alerts for critical paths (for example, a spike in authentication failures).

- Maintenance routine: Schedule periodic review of dependency versions, security policies, and context/permission scopes.
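A threshold-based alert, like the authentication-failure example above, can be sketched as a sliding-window counter. This is a minimal sketch, assuming events are recorded in-process; the `ThresholdAlert` class and its threshold and window values are hypothetical, and a production setup would more likely express the same rule in its monitoring system.

```python
import time
from collections import deque
from typing import Callable


class ThresholdAlert:
    """Fires when more than `threshold` events occur within `window` seconds."""

    def __init__(self, threshold: int, window: float,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.window = window
        self.clock = clock  # injectable clock makes the window testable
        self.events: deque = deque()

    def record(self) -> bool:
        """Record one event (e.g. an auth failure); return True if the alert fires."""
        now = self.clock()
        self.events.append(now)
        # Drop events that have slid out of the time window.
        while self.events and self.events[0] <= now - self.window:
            self.events.popleft()
        return len(self.events) > self.threshold
```

For example, `ThresholdAlert(threshold=3, window=60)` fires on the fourth authentication failure within any 60-second span.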

10. Scalability and extensibility

- Horizontal scaling: Use a managed container service or a function-as-a-service (FaaS) model to scale quickly and cost-efficiently.

- Consistent versioning: Adopt semantic versioning and maintain backward compatibility so that agents (and users) keep working during upgrades.

- Plug-in architecture: Future-proofing MCP implementations with plug-in-compatible modules lets new tools, agents, or services be integrated with minimal friction.
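The semantic-versioning point above reduces to a simple compatibility rule. A server satisfies a client when the major versions match and the server is at least as new as the version the client was built against. This is a minimal sketch with hypothetical function names. It ignores pre-release and build tags, and it treats major version 0 like any other, although semver allows breaking changes anywhere in 0.y.z.

```python
from typing import Tuple


def parse_semver(version: str) -> Tuple[int, int, int]:
    """Parse 'MAJOR.MINOR.PATCH'; pre-release/build tags are not handled here."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def is_compatible(server: str, client_requires: str) -> bool:
    """Same major version, and server >= required version (tuple comparison)."""
    s, c = parse_semver(server), parse_semver(client_requires)
    return s[0] == c[0] and s >= c
```

A gateway could run this check during a handshake and refuse, or route to an older deployment, when an agent's required version is not satisfied.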

Conclusion

Successful MCP implementations depend as much on seamless, valuable user experiences as on strong architecture and security. Teams that invest in a clear vision, security, comprehensive testing, and continuous feedback are best positioned to leverage MCP for transformative AI-driven workflows and applications. As the protocol ecosystem matures rapidly and new industry adoption examples emerge every month, the practices outlined above help ensure that MCP projects deliver on their commitment to intelligent automation.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.