Google AI Ships TimesFM-2.5: Smaller, Longer-Context Foundation Model That Now Leads GIFT-Eval (Zero-Shot Forecasting)

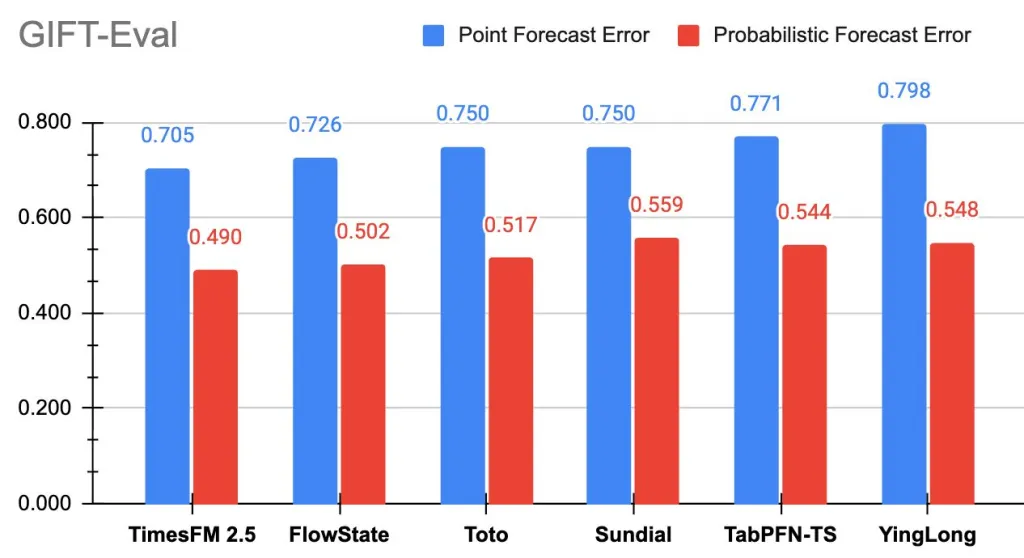

Google Research has released TimesFM-2.5, a 200M-parameter, decoder-only time-series foundation model with a 16K context length and native probabilistic forecasting support. The new checkpoint is live on Hugging Face. On GIFT-Eval, TimesFM-2.5 now tops the leaderboard on accuracy metrics (MASE, CRPS) among zero-shot foundation models.

What is time series forecasting?

Time series forecasting is the practice of analyzing sequential data points collected over time to identify patterns and predict future values. It underpins critical applications across industries, including forecasting retail product demand, monitoring weather and precipitation trends, and optimizing large-scale systems such as supply chains and energy grids. By capturing temporal dependencies and seasonal variations, time series forecasting enables data-driven decision-making in dynamic environments.
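To make "identify patterns and predict future values" concrete, here is a minimal sketch of the classical seasonal-naive baseline, which simply repeats the most recent seasonal cycle. It is not TimesFM and is shown only to illustrate the idea; the function name and the toy demand series are hypothetical.

```python
from typing import List


def seasonal_naive_forecast(history: List[float], season_length: int, horizon: int) -> List[float]:
    """Forecast by repeating the most recent full seasonal cycle.

    Classical baseline (not TimesFM): the value h steps ahead equals the
    value observed at the same position in the last observed season.
    """
    if season_length <= 0 or len(history) < season_length:
        raise ValueError("history must contain at least one full season")
    last_season = history[-season_length:]
    # Cycle through the last observed season for as many steps as requested.
    return [last_season[h % season_length] for h in range(horizon)]


if __name__ == "__main__":
    # Hypothetical demand-like series with a period of 4.
    demand = [10.0, 12.0, 15.0, 11.0, 10.5, 12.5, 15.5, 11.5]
    print(seasonal_naive_forecast(demand, season_length=4, horizon=6))
```

Foundation models like TimesFM aim to beat baselines of this kind without per-series fitting; the seasonal-naive forecast is also the reference that the MASE metric is scaled against.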

What changed in TimesFM-2.5 vs. v2.0?

- Parameters: 200M (down from 500M in 2.0).

- Max context: 16,384 points (up from 2,048).

- Quantiles: Optional 30M-parameter quantile head for continuous quantile forecasts up to a 1K horizon.

- Inputs: No "frequency" indicator required; new inference flags (flip-invariance, positivity inference, quantile-crossing fix).

- Roadmap: Upcoming Flax implementation for faster inference; covariate support slated to return; documentation being expanded.
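The three inference flags are only named above. The sketch below shows one plausible reading of what each does, applied as post-processing around a generic quantile forecaster; it is an assumption for illustration, not TimesFM's actual code. `toy_forecaster` and all function names are hypothetical, and the toy forecaster deliberately produces crossed quantiles so the fixes have something to correct.

```python
from typing import Callable, Dict, List

# Quantile forecast: quantile level (e.g. 0.1) -> one value per horizon step.
QuantileForecast = Dict[float, List[float]]


def flip_invariant(forecast_fn: Callable[[List[float]], QuantileForecast],
                   history: List[float]) -> QuantileForecast:
    """Average the forecasts of x and -x so that negating the input negates the output.

    The q-quantile of -Y is minus the (1 - q)-quantile of Y, so the flipped
    run's quantile levels are mirrored before averaging.
    """
    fwd = forecast_fn(history)
    flipped = forecast_fn([-v for v in history])
    out: QuantileForecast = {}
    for q, values in fwd.items():
        mirrored = flipped[round(1.0 - q, 6)]  # assumes symmetric quantile levels
        out[q] = [(a - b) / 2.0 for a, b in zip(values, mirrored)]
    return out


def clip_if_nonnegative(history: List[float], forecast: QuantileForecast) -> QuantileForecast:
    """Positivity inference: if the history never goes below zero, clip forecasts at zero."""
    if min(history) >= 0:
        return {q: [max(0.0, v) for v in vals] for q, vals in forecast.items()}
    return forecast


def fix_quantile_crossing(forecast: QuantileForecast) -> QuantileForecast:
    """Sort values at each horizon step so higher quantile levels never fall below lower ones."""
    levels = sorted(forecast)
    horizon = len(forecast[levels[0]])
    fixed: QuantileForecast = {q: [0.0] * horizon for q in levels}
    for t in range(horizon):
        column = sorted(forecast[q][t] for q in levels)
        for q, v in zip(levels, column):
            fixed[q][t] = v
    return fixed


def toy_forecaster(history: List[float]) -> QuantileForecast:
    """Hypothetical stand-in model: last value plus fixed offsets (crossed at step 2)."""
    last = history[-1]
    return {0.1: [last - 1.0, last + 0.5], 0.5: [last, last], 0.9: [last + 2.0, last - 0.5]}


if __name__ == "__main__":
    hist = [3.0, 2.0, 0.5]
    result = fix_quantile_crossing(clip_if_nonnegative(hist, flip_invariant(toy_forecaster, hist)))
    print(result)
```

Clipping is applied before sorting here because sorting a set of non-negative values keeps them non-negative, so the order preserves both guarantees.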

Why does a longer context matter?

16K historical points allow a single forward pass to capture multi-seasonal structure, regime breaks, and low-frequency components without tiling or hierarchical stitching. In practice, this can reduce preprocessing heuristics and improve stability in domains where context >> horizon (e.g., energy load, retail demand). The longer context is a core design change in 2.5.
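A quick way to see what the jump from 2,048 to 16,384 points means is to count how many complete seasonal cycles fit in each window. The sketch below assumes hourly data with daily, weekly, and yearly seasonality; the sampling rate and period values are illustrative assumptions, not figures from the release.

```python
from typing import Dict

# Assumed example: hourly data with daily, weekly, and (365-day) yearly seasonality.
SEASONAL_PERIODS_HOURLY: Dict[str, int] = {"daily": 24, "weekly": 24 * 7, "yearly": 24 * 365}


def full_cycles(context_length: int, periods: Dict[str, int]) -> Dict[str, int]:
    """Number of complete seasonal cycles that fit inside a context window."""
    return {name: context_length // period for name, period in periods.items()}


if __name__ == "__main__":
    for ctx in (2_048, 16_384):  # TimesFM 2.0 vs. 2.5 maximum context
        print(ctx, full_cycles(ctx, SEASONAL_PERIODS_HOURLY))
```

Under this assumption, a 2,048-point window covers about 85 days of hourly data and no full year, so annual patterns would otherwise need to be handled in preprocessing; 16,384 points cover roughly 1.9 years, including one complete yearly cycle.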

What is the research context?

TimesFM's core thesis, a single decoder-only foundation model for forecasting, was introduced in the ICML 2024 paper "A decoder-only foundation model for time-series forecasting" and on Google's research blog. GIFT-Eval (Salesforce) emerged to standardize evaluation across domains, frequencies, horizon lengths, and univariate/multivariate regimes, with a public leaderboard hosted on Hugging Face.

Key Points

- Smaller, faster model: TimesFM-2.5 runs with 200M parameters (down from 500M in 2.0) while improving accuracy.

- Longer context: Supports a 16K input length, enabling forecasts that draw on deeper history.

- Benchmark leader: Now ranks #1 among zero-shot foundation models on GIFT-Eval for both MASE (point accuracy) and CRPS (probabilistic accuracy).

- Production-ready: An efficient design and quantile forecasting support make it suitable for real-world deployments across industries.

- Broad availability: The model is live on Hugging Face.
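The two leaderboard metrics can be sketched in a few lines. MASE divides the forecast's mean absolute error by the in-sample MAE of a seasonal-naive forecast, and CRPS is approximated here as twice the average pinball (quantile) loss over a set of quantile levels, a common discretization. This is a per-series sketch under those assumptions; GIFT-Eval's exact normalization and aggregation across datasets are not reproduced, and the function names are hypothetical.

```python
from typing import Dict, List


def mase(history: List[float], actual: List[float], forecast: List[float],
         season_length: int = 1) -> float:
    """Mean Absolute Scaled Error: forecast MAE divided by the in-sample
    MAE of a seasonal-naive forecast on the history."""
    naive_errors = [abs(history[t] - history[t - season_length])
                    for t in range(season_length, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale


def pinball_loss(y: float, y_hat: float, q: float) -> float:
    """Quantile (pinball) loss for one observation at quantile level q."""
    diff = y - y_hat
    return max(q * diff, (q - 1.0) * diff)


def crps_from_quantiles(actual: List[float], quantiles: Dict[float, List[float]]) -> float:
    """Rough CRPS approximation: 2 x mean pinball loss over quantile levels and time steps."""
    total = 0.0
    for q, preds in quantiles.items():
        total += sum(pinball_loss(y, p, q) for y, p in zip(actual, preds))
    return 2.0 * total / (len(quantiles) * len(actual))


if __name__ == "__main__":
    print(mase([1.0, 2.0, 3.0, 4.0, 5.0], actual=[6.0, 7.0], forecast=[6.0, 8.0]))
    print(crps_from_quantiles([10.0], {0.1: [8.0], 0.5: [10.0], 0.9: [13.0]}))
```

A MASE below 1 means the forecast beats the in-sample seasonal-naive error; lower is better for both metrics.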

Summary

TimesFM-2.5 shows that foundation models for forecasting are moving beyond proof-of-concept into practical, production-ready tools. By cutting parameters from 500M to 200M while extending context length and improving both point and probabilistic accuracy, it marks a step change in efficiency and capability. With Hugging Face access already live and BigQuery/Model Garden integration on the way, the model can accelerate real-world adoption of zero-shot time series forecasting.


Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.