Google AI Research Introduces a Novel Machine Learning Approach That Transforms TimesFM into a Few-Shot Learner

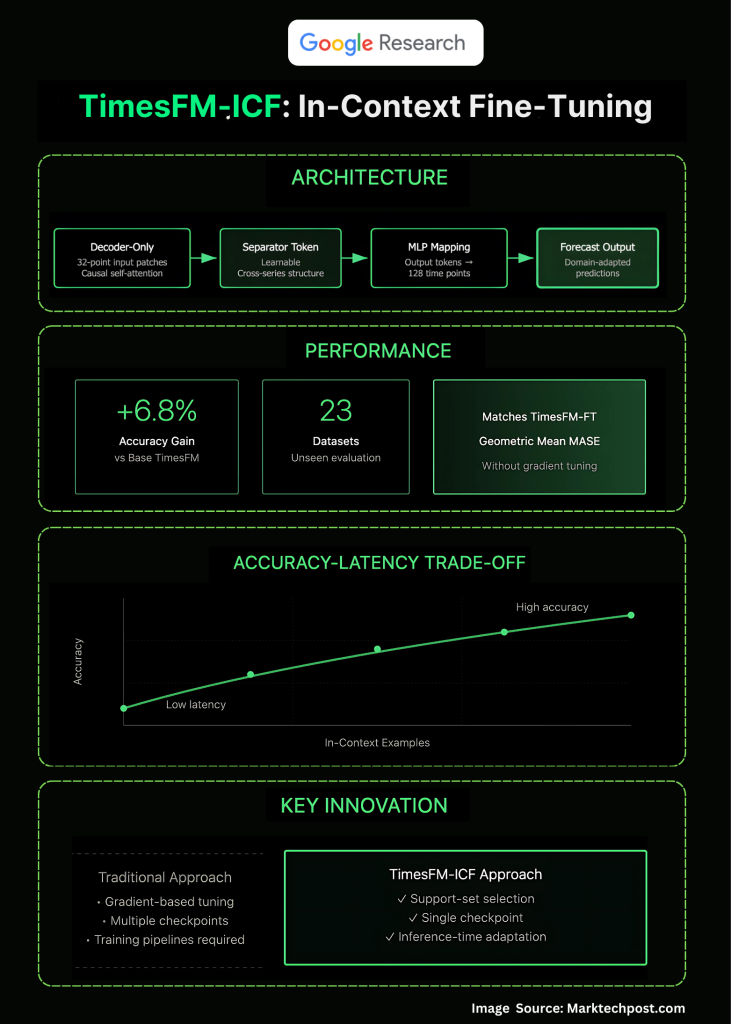

Google Research introduces in-context fine-tuning (ICF) for time-series forecasting, named ‘TimesFM-ICF’: a continued pre-training recipe that teaches TimesFM to exploit multiple related series provided directly in the inference prompt. The result is a few-shot forecaster that matches supervised fine-tuning while delivering +6.8% accuracy over the base TimesFM across an out-of-domain (OOD) benchmark, with no per-dataset training loop required.

What pain point in forecasting is being eliminated?

Most production workflows still trade off between (a) one model per dataset via supervised fine-tuning (accurate, but heavy on MLOps) and (b) zero-shot foundation models (simple, but not domain-adapted). Google’s new approach keeps a single pretrained TimesFM checkpoint but lets it adapt on the fly, using a handful of in-context examples from related series during inference, which avoids per-tenant training pipelines.

How does in-context fine-tuning work under the hood?

Start with TimesFM, a patched, decoder-only transformer that tokenizes input patches and de-tokenizes output patches through a shared MLP, and continue pre-training it on sequences that interleave the target history with multiple “support” series. The key change is a learnable common separator token, so causal attention across examples can mine structure without conflating trends between series. The training objective is still next-token (next-patch) prediction; what is new is the context construction, which teaches the model to reason across multiple related series at inference time.
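
To make the context construction concrete, here is a minimal sketch in plain Python. It assumes non-overlapping patches, uses a string placeholder `<SEP>` in place of the learnable separator embedding, and places the target history last. `to_patches`, `build_context`, and `next_patch_pairs` are hypothetical helpers, not TimesFM’s API, and skipping labels that would cross a separator is an assumption of this sketch rather than a detail confirmed by the source.

```python
from typing import List, Tuple, Union

SEP = "<SEP>"  # hypothetical placeholder for the learnable separator token
Token = Union[List[float], str]


def to_patches(series: List[float], patch_len: int) -> List[List[float]]:
    """Split a series into non-overlapping patches of length patch_len.

    A leading remainder shorter than patch_len is dropped so that the most
    recent observations always fill the final patch.
    """
    start = len(series) % patch_len
    return [series[i:i + patch_len] for i in range(start, len(series), patch_len)]


def build_context(support: List[List[float]], target: List[float],
                  patch_len: int) -> List[Token]:
    """Concatenate support series and the target history, separated by SEP."""
    tokens: List[Token] = []
    for series in support:
        tokens.extend(to_patches(series, patch_len))
        tokens.append(SEP)  # boundary marker so trends are not blended across series
    tokens.extend(to_patches(target, patch_len))  # target history goes last
    return tokens


def next_patch_pairs(tokens: List[Token]) -> List[Tuple[int, List[float]]]:
    """Return (prefix_length, label) pairs for a next-patch objective.

    Assumption: a label is emitted only when the previous token is a patch
    from the same series, so the model is never asked to predict across SEP.
    """
    pairs = []
    for i in range(1, len(tokens)):
        if tokens[i] != SEP and tokens[i - 1] != SEP:
            pairs.append((i, tokens[i]))
    return pairs
```

For example, two support series of 8 and 6 points and a 5-point target with `patch_len=2` yield 11 tokens: 4 + 3 + 2 patches plus two separators. The real model embeds each patch with an MLP and predicts longer output patches, which this sketch leaves out.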

What exactly is “few-shot” here?

At inference, the user concatenates the target history with k additional time-series snippets (e.g., similar SKUs, adjacent sensors), each delimited by the separator token. The model’s attention layers are now explicitly trained to exploit those in-context examples, analogous to few-shot prompting in LLMs but for numeric sequences rather than text tokens. This shifts adaptation from parameter updates to prompt engineering over structured series.
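
Because adaptation now happens through the prompt, choosing which series to include becomes the main lever. The sketch below is a hypothetical heuristic, not taken from the source, that ranks candidate series by Pearson correlation with the target’s recent window, plus a helper that counts prompt tokens under a patch-and-separator layout. The function names and the correlation criterion are illustrative assumptions.

```python
import math
from typing import Dict, List


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def select_support(target: List[float], candidates: Dict[str, List[float]],
                   k: int, window: int) -> List[str]:
    """Hypothetical heuristic: keep the k candidates whose last `window` points
    correlate most strongly with the target's last `window` points."""
    recent = target[-window:]
    ranked = sorted(candidates,
                    key=lambda name: pearson(recent, candidates[name][-window:]),
                    reverse=True)
    return ranked[:k]


def prompt_tokens(k: int, support_len: int, target_len: int, patch_len: int) -> int:
    """Token count for k support snippets (each followed by one separator)
    plus the target history, counting only full patches."""
    return k * (support_len // patch_len + 1) + target_len // patch_len
```

Assuming 512-point series and 32-point input patches, `prompt_tokens(0, 512, 512, 32)` is 16 while `prompt_tokens(4, 512, 512, 32)` is 84. If attention cost grows quadratically with sequence length, four support series raise that cost by roughly (84/16)^2 ≈ 27.6 times, one way to see why more in-context examples mean longer inference.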

Does it actually match supervised fine-tuning?

On a 23-dataset out-of-domain suite, TimesFM-ICF matches the performance of per-dataset fine-tuning (TimesFM-FT) while being 6.8% more accurate than the base TimesFM (measured as the geometric mean of scaled MASE). The blog also shows the expected accuracy-latency trade-off: more in-context examples yield better forecasts at the cost of longer inference. An ablation that “just makes the context longer” indicates that structured in-context examples beat naive long context alone.
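
The headline number aggregates per-dataset errors with a geometric mean. Below is a minimal sketch, assuming MASE is scaled by the in-sample seasonal-naive error and that the relative gain is reported as 1 minus the geometric mean of per-dataset error ratios; the source does not spell out the exact convention, and the helper names are illustrative.

```python
import math
from typing import List, Sequence


def mase(history: Sequence[float], actual: Sequence[float],
         forecast: Sequence[float], season: int = 1) -> float:
    """Mean Absolute Scaled Error of one forecast window.

    The forecast MAE is divided by the in-sample MAE of a seasonal-naive
    forecast (y_t predicted by y_{t-season}) computed on the history.
    """
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    naive_errors = [abs(history[t] - history[t - season])
                    for t in range(season, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("history is constant at this season; MASE is undefined")
    return mae / scale


def geometric_mean(values: List[float]) -> float:
    """Geometric mean of strictly positive values, computed in log space."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def relative_gain(model_scores: List[float], reference_scores: List[float]) -> float:
    """1 - GM(model_i / reference_i) across datasets; positive means the model is better.

    If both score lists were first divided by the same per-dataset baseline
    (the "scaled" MASE), that baseline cancels inside each ratio.
    """
    ratios = [m / r for m, r in zip(model_scores, reference_scores)]
    return 1.0 - geometric_mean(ratios)
```

Under this convention, a `relative_gain` of 0.068 between ICF and base scores would correspond to the reported 6.8%. The geometric mean keeps a single dataset with very large or very small errors from dominating the aggregate.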

How is this different from Chronos-style approaches?

Chronos tokenizes values into a discrete vocabulary and has shown strong zero-shot accuracy, along with fast variants (e.g., Chronos-Bolt). Google’s contribution here is not another tokenizer or more zero-shot headroom per se; it is making a time-series foundation model behave like an LLM few-shot learner, learning from cross-series context at inference. That capability narrows the gap between “train-time adaptation” and “prompt-time adaptation” for numeric forecasting.
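
The contrast with Chronos comes down to how values enter the model. Below is a simplified sketch of value tokenization in the spirit of Chronos: mean scaling followed by uniform binning into a fixed vocabulary. The bin count and range are illustrative assumptions, not Chronos’s published settings, and the function names are hypothetical.

```python
from typing import List, Tuple


def mean_scale(context: List[float]) -> Tuple[List[float], float]:
    """Divide by the mean absolute value of the context (1.0 if it is all zeros)."""
    s = sum(abs(x) for x in context) / len(context)
    s = s if s > 0 else 1.0
    return [x / s for x in context], s


def quantize(values: List[float], n_bins: int, low: float, high: float) -> List[int]:
    """Map each value to the index of a uniform bin on [low, high], clipping outliers."""
    width = (high - low) / n_bins
    ids = []
    for v in values:
        idx = int((v - low) // width)
        ids.append(min(max(idx, 0), n_bins - 1))  # out-of-range values go to edge bins
    return ids


def dequantize(ids: List[int], n_bins: int, low: float, high: float,
               scale: float) -> List[float]:
    """Map bin indices back to bin centres and undo the mean scaling."""
    width = (high - low) / n_bins
    return [(low + (i + 0.5) * width) * scale for i in ids]
```

For instance, `mean_scale([2.0, 4.0, 6.0])` divides by 4.0, and `quantize` with 4 bins on [-2, 2] maps the scaled values to bins [2, 3, 3]. TimesFM skips this discretization: continuous-valued patches are embedded directly, and ICF’s change sits in how the context is assembled rather than in how values are tokenized.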

What are the architectural specifics to watch?

The research team highlights: (1) separator tokens that mark boundaries, (2) causal self-attention over mixed histories and examples, (3) retained patching and shared MLP heads, and (4) continued pre-training to instill cross-example behavior. Together, these let the model treat support series as informative exemplars rather than background noise.
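
Point (2) is what lets target patches see the support series: under a causal mask, every position attends to itself and everything earlier in the sequence, so a target history placed last can draw on all preceding support patches and separators. A minimal single-head sketch in plain Python follows; the dimensions are toy-sized and `causal_mask` and `causal_attention` are hypothetical names, not TimesFM internals.

```python
import math
from typing import List


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def causal_attention(q: List[List[float]], k: List[List[float]],
                     v: List[List[float]]) -> List[List[float]]:
    """Single-head scaled dot-product attention with a causal mask (pure Python)."""
    n, d = len(q), len(q[0])
    mask = causal_mask(n)
    out = []
    for i in range(n):
        # Masked (future) keys get -inf so they receive zero weight after softmax.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  if mask[i][j] else float("-inf") for j in range(n)]
        m = max(scores)  # finite, because position i can always attend to itself
        weights = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0
        total = sum(weights)
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) / total
                    for c in range(len(v[0]))])
    return out
```

Because the final row of `causal_mask(n)` is all `True`, the last target position can attend to every support patch. In the real model, learned separator embeddings are what let attention tell those series apart.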

Summary

Google’s in-context fine-tuning turns TimesFM into a practical few-shot forecaster: a single pretrained checkpoint that adapts at inference through curated support series, delivering fine-tuning-level accuracy without per-dataset training overhead. That is useful for multi-tenant, latency-bounded deployments, where selecting the support set becomes the primary control lever.

FAQ

1) What is Google’s “in-context fine-tuning” (ICF) for time series?

ICF is continued pre-training that conditions TimesFM to use multiple related series placed in the prompt at inference, enabling few-shot adaptation without per-dataset gradient updates.

2) How does ICF differ from standard fine-tuning and zero-shot use?

Standard fine-tuning updates weights per dataset; zero-shot uses a fixed model with only the target history. ICF keeps weights fixed at deployment but learns during pre-training how to exploit extra in-context examples, matching per-dataset fine-tuning on the reported benchmarks.

3) What architectural or training changes were introduced?

TimesFM is further pre-trained on sequences that interleave the target history and support series, separated by special boundary tokens so that causal attention can exploit cross-series structure. The rest of the decoder-only TimesFM stack remains intact.

4) What do the results show relative to baselines?

On out-of-domain suites, ICF improves over the base TimesFM and reaches parity with supervised fine-tuning; it is evaluated against strong time-series baselines (e.g., PatchTST) and prior foundation models (e.g., Chronos).

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among readers.
