Google AI launches VISTA: a test-time self-improving agent for text-to-video generation

TL;DR: VISTA is a multi-agent framework that improves text-to-video generation at inference time. It plans prompts as structured, timed scenes, runs a pairwise tournament to select the best candidate, critiques it with specialized judges across visual, audio, and context dimensions, and then rewrites the prompt with a Deep Thinking Prompting Agent. The approach shows consistent gains over strong prompt optimization baselines in both single-scene and multi-scene settings, and human raters prefer its outputs.

What is VISTA?

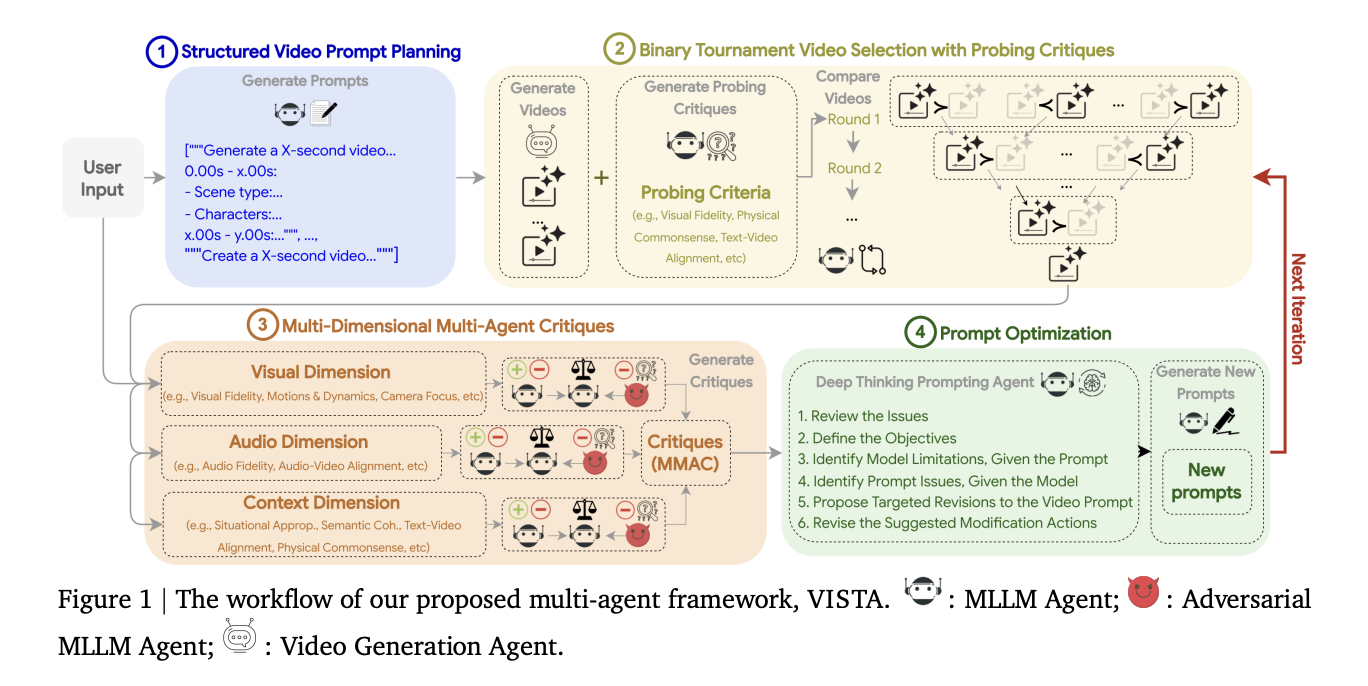

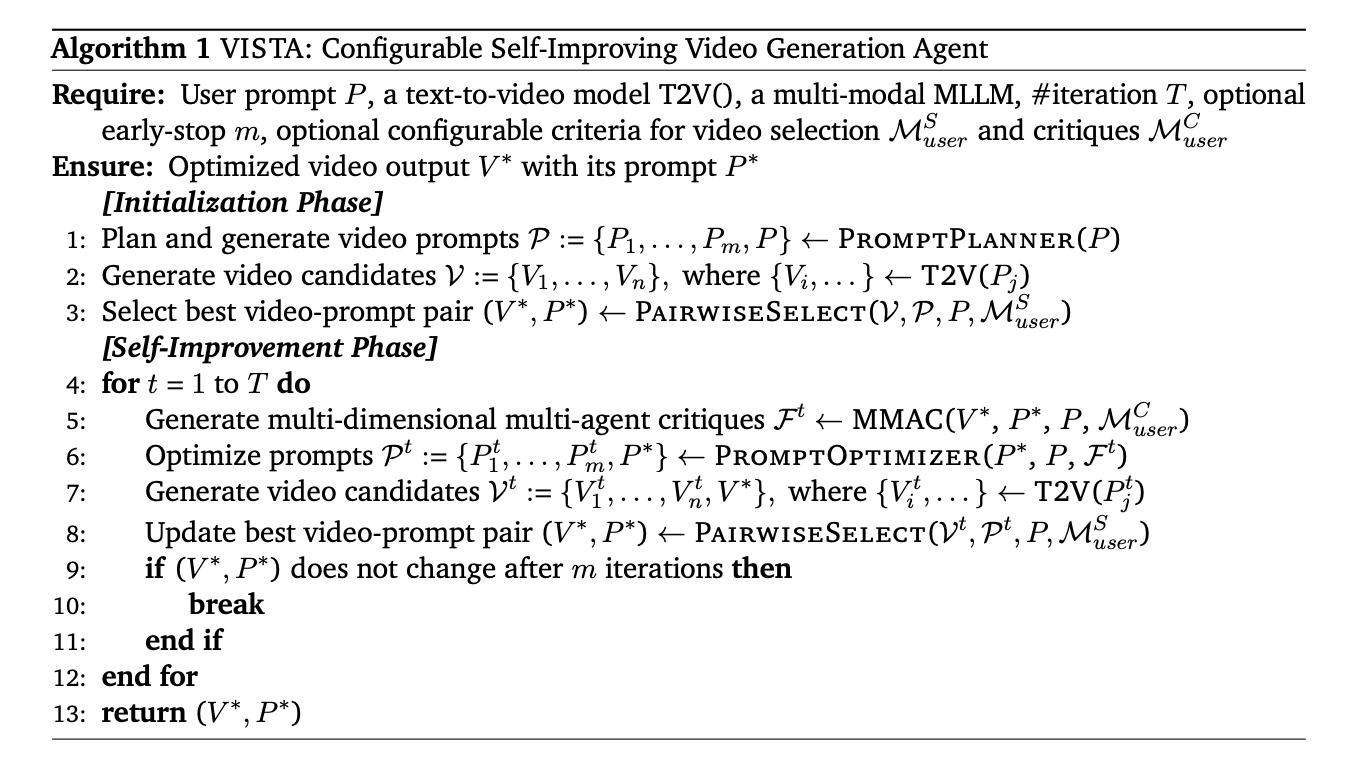

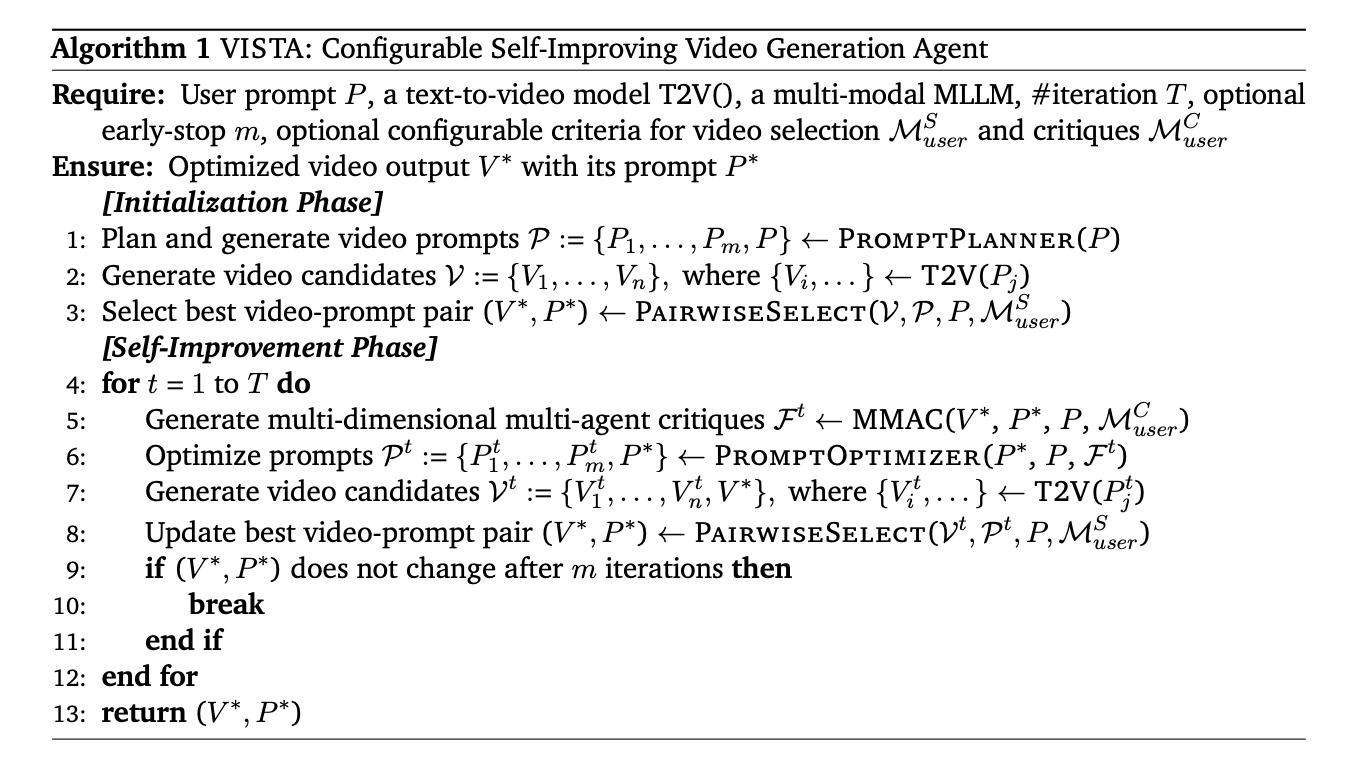

VISTA stands for Video Iterative Self-Improvement Agent. It is a black-box, multi-agent loop that refines prompts and regenerates videos at test time. The system jointly targets visual, audio, and contextual quality. It follows four steps: structured video prompt planning, pairwise tournament selection, multi-dimensional multi-agent critiques, and a Deep Thinking Prompting Agent for prompt rewriting.
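To make the control flow concrete, here is a minimal Python sketch of one way to wire the four steps into a test-time loop. It is an illustration under stated assumptions, not the paper's code: every callable (`plan`, `generate`, `select`, `critique`, `rewrite`) is a hypothetical placeholder for an MLLM or video-model call, and the toy stand-ins exist only so the skeleton runs end to end.

```python
from typing import Callable, List, Tuple

Candidate = Tuple[str, str]  # (prompt, video); a "video" is whatever the generator returns


def vista_style_loop(
    user_prompt: str,
    plan: Callable[[str], List[str]],                # Step 1: structured prompt planning
    generate: Callable[[str], str],                  # black-box text-to-video model
    select: Callable[[List[Candidate]], Candidate],  # Step 2: pairwise tournament
    critique: Callable[[Candidate], str],            # Step 3: multi-agent critiques
    rewrite: Callable[[str, str], List[str]],        # Step 4: prompt rewriting
    refinement_rounds: int = 4,                      # hypothetical default
) -> Candidate:
    # The raw user prompt stays in the initial pool alongside the planned prompts.
    prompts = [user_prompt] + plan(user_prompt)
    best = select([(p, generate(p)) for p in prompts])
    for _ in range(refinement_rounds):
        feedback = critique(best)
        new_prompts = rewrite(best[0], feedback)
        # Assumption: the previous champion competes again, so a weak round does not discard it.
        best = select([best] + [(p, generate(p)) for p in new_prompts])
    return best


# Toy stand-ins (hypothetical) so the skeleton can be executed.
def toy_plan(prompt: str) -> List[str]:
    return [prompt + " | scene 1: wide shot"]


def toy_generate(prompt: str) -> str:
    return f"video<{prompt}>"


def toy_select(cands: List[Candidate]) -> Candidate:
    return max(cands, key=lambda c: len(c[0]))  # toy rule: longest prompt wins


def toy_critique(cand: Candidate) -> str:
    return "add camera motion"


def toy_rewrite(prompt: str, feedback: str) -> List[str]:
    return [f"{prompt} | {feedback}"]


best_prompt, best_video = vista_style_loop(
    "a cat surfing", toy_plan, toy_generate, toy_select, toy_critique, toy_rewrite,
    refinement_rounds=2,
)
print(best_prompt)  # a cat surfing | scene 1: wide shot | add camera motion | add camera motion
```

Keeping the generator behind a plain `generate` callable mirrors the black-box framing: the loop only ever sees prompts in and videos out.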

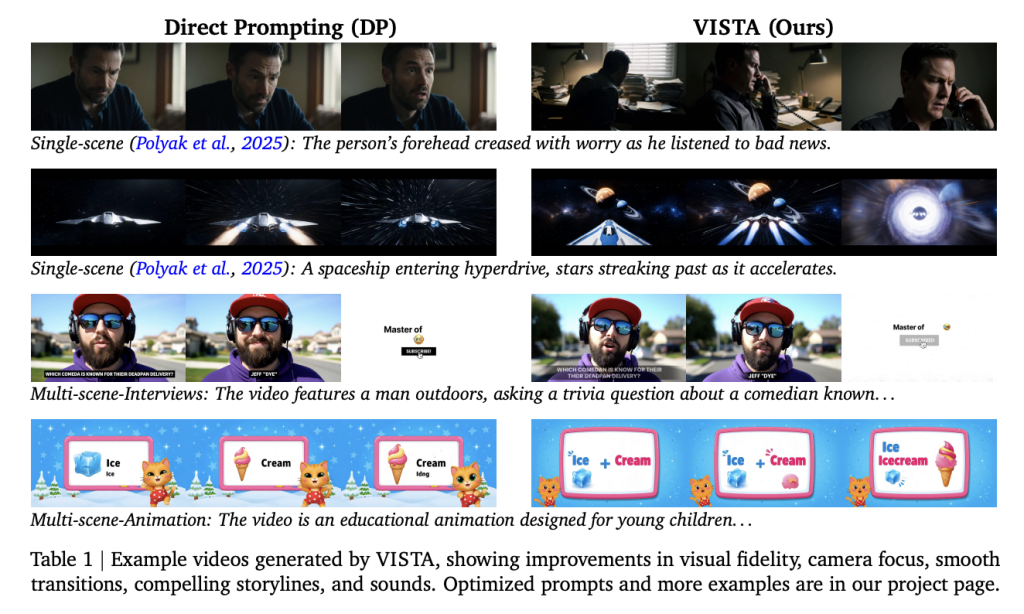

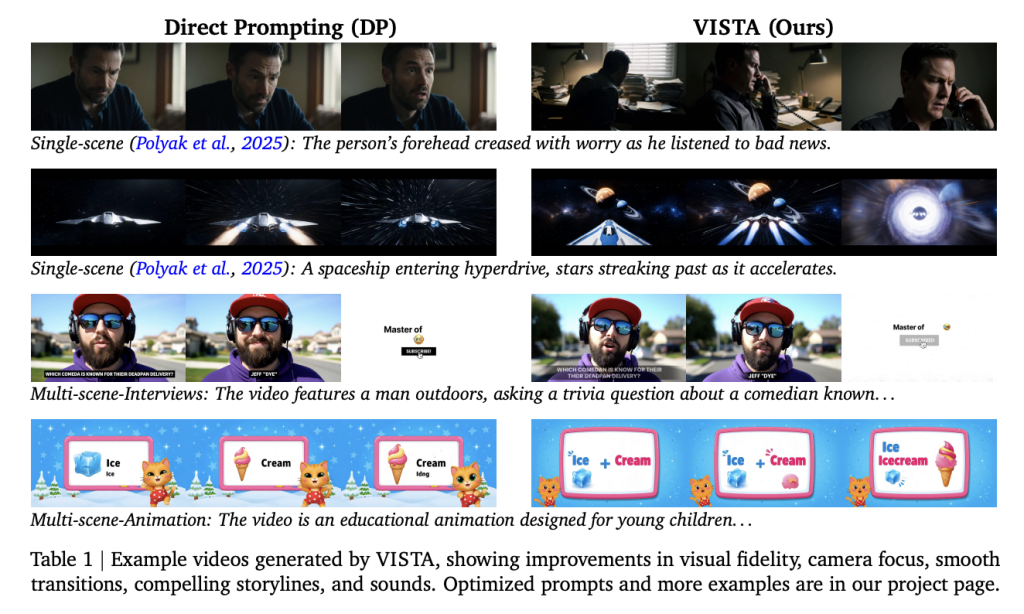

The research team evaluated VISTA on single-scene benchmarks and on an internal multi-scene set. It reports consistent improvements over state-of-the-art baselines, pairwise win rates of up to 60% in some settings, and a 66.4% human preference rate over the strongest baseline.

Understanding the key problem

Text-to-video models like Veo 3 can produce high-quality video and audio, but their output remains sensitive to exact prompt wording, can violate physics, and can drift from user goals, which forces manual trial and error. VISTA frames this as a test-time optimization problem and aims for joint improvement across visual signals, audio signals, and contextual alignment.

How VISTA works, step by step

Step 1: Structured Video Prompt Planning

User prompts are broken down into timed scenes. Each scene has 9 attributes: duration, scene type, character, action, dialogue, visual environment, camera, sound, and emotion. A multimodal LLM (MLLM) fills in missing attributes and, by default, enforces constraints on realism, relevance, and creativity. The system also keeps the original user prompt in the candidate set, so models that do not benefit from decomposition are not penalized.
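The nine-attribute scene plan maps naturally onto a small record type. The sketch below is an illustration, not the paper's schema: the field names mirror the attributes listed above, while `Scene`, `fill_missing`, `missing_attributes`, and the example defaults are hypothetical. In VISTA an MLLM infers missing values; here a plain dictionary stands in for it.

```python
from dataclasses import dataclass, fields, replace
from typing import Dict, List, Optional


@dataclass
class Scene:
    # The nine per-scene attributes; None marks "not yet specified".
    duration_s: Optional[float] = None
    scene_type: Optional[str] = None
    character: Optional[str] = None
    action: Optional[str] = None
    dialogue: Optional[str] = None
    visual_environment: Optional[str] = None
    camera: Optional[str] = None
    sound: Optional[str] = None
    emotion: Optional[str] = None


def fill_missing(scene: Scene, defaults: Dict[str, object]) -> Scene:
    """Return a copy in which unspecified attributes take values from `defaults`.

    Stand-in for the MLLM step: a real planner would infer values from the user
    prompt under realism, relevance, and creativity constraints.
    """
    updates = {
        f.name: defaults[f.name]
        for f in fields(scene)
        if getattr(scene, f.name) is None and f.name in defaults
    }
    return replace(scene, **updates)


def missing_attributes(scene: Scene) -> List[str]:
    """Names of attributes that are still unspecified, in declaration order."""
    return [f.name for f in fields(scene) if getattr(scene, f.name) is None]


draft = Scene(duration_s=8.0, character="a fox", action="leaps over a stream")
planned = fill_missing(draft, {"camera": "slow tracking shot", "sound": "running water",
                               "emotion": "playful"})
print(missing_attributes(planned))  # ['scene_type', 'dialogue', 'visual_environment']
```

Using `dataclasses.replace` keeps the user's draft untouched, which loosely echoes the article's point that the original prompt is preserved alongside the planned version.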

Step 2: Pairwise Tournament Video Selection

The system samples multiple video-prompt pairs. An MLLM acts as judge in binary tournaments and uses bidirectional swapping, evaluating each pair in both orders to reduce token order bias. Default criteria include visual fidelity, physical commonsense, text-video alignment, audio-video alignment, and engagement. The judge first elicits probing critiques to support its analysis, then performs the pairwise comparison and applies customizable penalties for common text-to-video failures.
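A single-elimination bracket with bidirectional swapping can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `judge(first, second)` that returns the probability that `first` is better; averaging the two presentation orders is one simple way to implement the swap, and the paper's exact aggregation may differ. The toy judge below deliberately adds a +0.2 bias toward whichever candidate is shown first.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def compare_both_orders(a: T, b: T, judge: Callable[[T, T], float]) -> T:
    """Ask the judge in both presentation orders and average the results.

    Averaging largely cancels a constant preference for the first slot.
    """
    p_a_shown_first = judge(a, b)
    p_a_shown_second = 1.0 - judge(b, a)
    return a if (p_a_shown_first + p_a_shown_second) / 2 >= 0.5 else b


def binary_tournament(candidates: List[T], judge: Callable[[T, T], float]) -> T:
    """Single-elimination bracket; an unpaired candidate advances with a bye."""
    if not candidates:
        raise ValueError("need at least one candidate")
    round_ = list(candidates)
    while len(round_) > 1:
        winners = [compare_both_orders(round_[i], round_[i + 1], judge)
                   for i in range(0, len(round_) - 1, 2)]
        if len(round_) % 2 == 1:
            winners.append(round_[-1])  # bye
        round_ = winners
    return round_[0]


# Toy judge (hypothetical): hidden quality scores plus a +0.2 first-slot bias.
QUALITY = {"v1": 0.3, "v2": 0.9, "v3": 0.5, "v4": 0.6, "v5": 0.2}


def biased_judge(first: str, second: str) -> float:
    p = 0.5 + (QUALITY[first] - QUALITY[second]) / 2 + 0.2
    return min(1.0, max(0.0, p))


print(biased_judge("v3", "v4") > 0.5)                  # True: order alone favors v3
print(compare_both_orders("v3", "v4", biased_judge))   # v4
print(binary_tournament(list(QUALITY), biased_judge))  # v2
```

With a single ordering, the biased judge would pick `v3` over the better `v4`; querying both orders recovers the right winner.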

Step 3: Multi-Dimensional Multi-Agent Critiques

The champion video and its prompt are critiqued along three dimensions: visual, audio, and context. Each dimension uses a triad of judges: a normal judge, an adversarial judge, and a meta-judge that consolidates both sides. Metrics include visual fidelity, motion and dynamics, temporal consistency, camera focus, and visual safety (visual); audio fidelity, audio-video alignment, and audio safety (audio); and situational appropriateness, semantic coherence, text-video alignment, physical commonsense, engagement, and video format (context). Scores range from 1 to 10, which makes targeted error discovery possible.
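The triad structure can be sketched as three scoring functions per metric. In VISTA all three judges are MLLM calls; in this sketch the normal and adversarial judges are hypothetical callables, and the meta-judge is replaced by a toy rule (the mean of both sides, rounded and clamped to 1 to 10). The metric grouping follows the list above; the threshold of 7 for flagging weak metrics is an arbitrary assumption.

```python
from typing import Callable, Dict, List, Tuple

# Metric groups as listed in the article.
DIMENSIONS: Dict[str, List[str]] = {
    "visual": ["visual fidelity", "motion and dynamics", "temporal consistency",
               "camera focus", "visual safety"],
    "audio": ["audio fidelity", "audio-video alignment", "audio safety"],
    "context": ["situational appropriateness", "semantic coherence",
                "text-video alignment", "physical commonsense", "engagement",
                "video format"],
}

Scorer = Callable[[str], float]              # hypothetical: metric name -> raw score
MetaJudge = Callable[[float, float], float]  # hypothetical: (normal, adversarial) -> score


def clamp_score(x: float) -> int:
    """Round and clamp to the 1-10 scale."""
    return int(min(10, max(1, round(x))))


def average_meta(normal: float, adversarial: float) -> float:
    # Toy stand-in for the MLLM meta-judge: the mean of both sides.
    return (normal + adversarial) / 2


def critique(normal: Scorer, adversarial: Scorer,
             meta: MetaJudge = average_meta) -> Dict[str, Dict[str, int]]:
    """Score every metric in every dimension with a normal/adversarial/meta triad."""
    return {
        dim: {m: clamp_score(meta(normal(m), adversarial(m))) for m in metrics}
        for dim, metrics in DIMENSIONS.items()
    }


def low_scoring(report: Dict[str, Dict[str, int]],
                threshold: int = 7) -> List[Tuple[str, str, int]]:
    """(dimension, metric, score) entries that fall below the threshold."""
    return [(dim, m, s) for dim, scores in report.items()
            for m, s in scores.items() if s < threshold]


def normal_judge(metric: str) -> float:
    return 8.0


def adversarial_judge(metric: str) -> float:
    # The adversarial side hunts for flaws; here it finds one.
    return 4.0 if metric == "temporal consistency" else 8.0


report = critique(normal_judge, adversarial_judge)
print(low_scoring(report))  # [('visual', 'temporal consistency', 6)]
```

Keeping per-metric scores (rather than one overall grade) is what lets the next step target a specific weakness instead of rewriting the whole prompt blindly.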

Step 4: Deep Thinking Prompting Agent

The reasoning module reads the meta-critiques and runs a six-step introspection: it identifies low-scoring metrics, clarifies expected outcomes, checks whether the prompt is sufficient, separates model limitations from prompt issues, detects conflicts or vagueness, and proposes modification actions. It then samples refined prompts for the next generation cycle.
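Since the rewriting agent is itself an MLLM call, the most concrete piece to sketch is the request it receives. The template below is hypothetical: the article does not reproduce the paper's actual prompt, so the wording, the `build_rewrite_request` name, and the `n_samples` parameter are assumptions. Only the six steps come from the description above. Low-scoring metrics are passed as `(dimension, metric, score)` tuples.

```python
from typing import List, Tuple

# The six introspection steps as summarized in the article.
INTROSPECTION_STEPS = [
    "Identify the low-scoring metrics.",
    "Clarify the expected outcome.",
    "Check whether the prompt is sufficient.",
    "Separate model limitations from prompt issues.",
    "Detect conflicts or vagueness in the prompt.",
    "Propose modification actions.",
]


def build_rewrite_request(prompt: str, low: List[Tuple[str, str, int]],
                          n_samples: int = 3) -> str:
    """Assemble a hypothetical instruction for an MLLM prompt-rewriting call."""
    issues = "\n".join(f"- [{dim}] {metric}: {score}/10" for dim, metric, score in low)
    steps = "\n".join(f"{i}. {s}" for i, s in enumerate(INTROSPECTION_STEPS, start=1))
    return (
        f"Current prompt:\n{prompt}\n\n"
        f"Low-scoring metrics from the meta-critiques:\n{issues or '- none'}\n\n"
        f"Reason through these steps in order:\n{steps}\n\n"
        f"Then write {n_samples} refined prompts for the next generation round."
    )


request = build_rewrite_request("a fox leaps over a stream",
                                [("visual", "temporal consistency", 6)])
print(request)
```

Separating "model limitations" from "prompt issues" in the request matters because only the latter can be fixed by rewriting; a black-box generator's hard limits cannot.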

Understanding the results

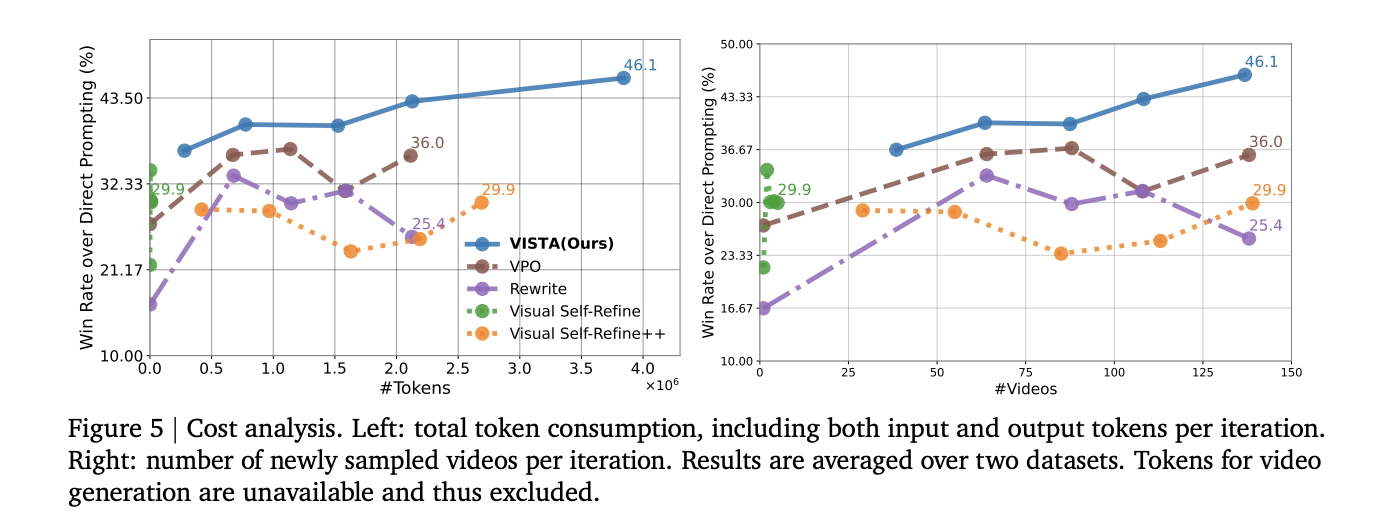

Automatic evaluation: The study reports win, tie, and loss rates on ten criteria, using an MLLM as judge with bidirectional comparisons. VISTA's win rate over direct prompting rises across iterations, reaching 45.9% in the single-scene setting and 46.3% in the multi-scene setting at iteration 5. VISTA also beats each baseline head-to-head under the same compute budget.
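Win, tie, and loss rates are simple fractions of pairwise outcomes. The helper below is a generic sketch (the `outcome_rates` name is ours), and the outcome list is made up for illustration; it is not the paper's data.

```python
from collections import Counter
from typing import Dict, Iterable

LABELS = ("win", "tie", "loss")


def outcome_rates(outcomes: Iterable[str]) -> Dict[str, float]:
    """Win, tie, and loss rates over pairwise judgments (method vs. baseline)."""
    counts = Counter(outcomes)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no outcomes to score")
    return {label: counts[label] / total for label in LABELS}


# Made-up outcomes for illustration only.
rates = outcome_rates(["win"] * 5 + ["tie"] * 3 + ["loss"] * 2)
print(rates)  # {'win': 0.5, 'tie': 0.3, 'loss': 0.2}
```

Because ties absorb part of the total, a win rate below 50% can still mean a method wins far more often than it loses; reading the win and loss rates side by side shows the direction of the gain.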

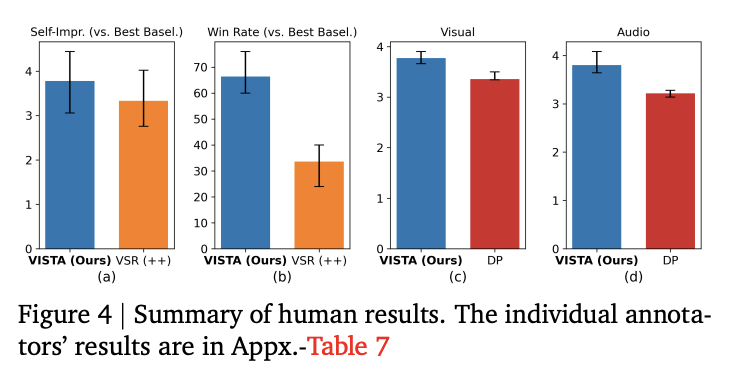

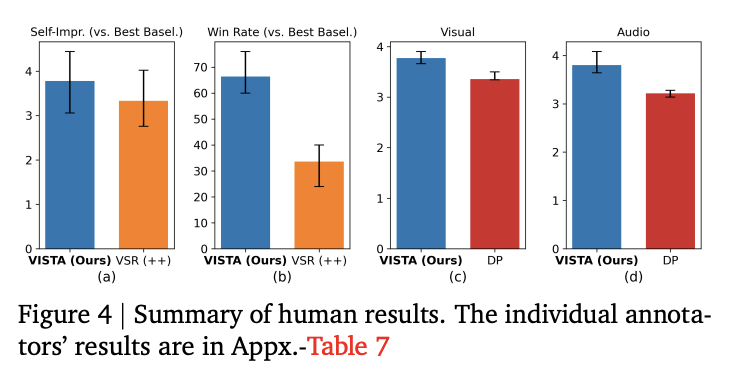

Human studies: Annotators experienced in prompt optimization preferred VISTA in 66.4% of head-to-head trials against the best baseline at iteration 5. Experts also rated VISTA's optimization trajectories higher and scored its visual and audio quality above that of direct prompting.

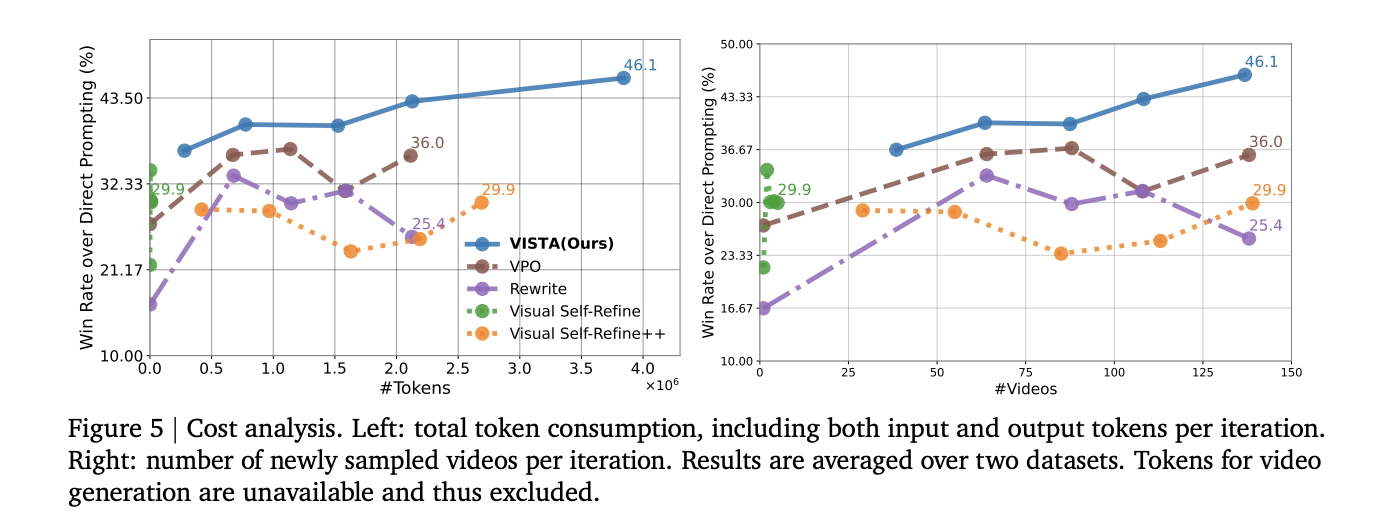

Cost and scaling: Average token usage is about 700,000 tokens per iteration on both datasets, excluding generation tokens. Most tokens go to selection and critique, which process videos as long-context inputs. The win rate tends to rise as the number of sampled videos and tokens per iteration increases.
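For budgeting, the reported average turns into a back-of-envelope estimate. The sketch assumes the ~700,000-token average holds for every prompt and every iteration, which the article does not guarantee; the function name and the 100-prompt example are hypothetical.

```python
def estimate_input_tokens(iterations: int, n_prompts: int = 1,
                          tokens_per_iteration: int = 700_000) -> int:
    """Rough input-token budget, excluding generation tokens.

    Assumes the reported per-iteration average applies uniformly.
    """
    if iterations < 0 or n_prompts < 0:
        raise ValueError("counts must be non-negative")
    return iterations * n_prompts * tokens_per_iteration


print(estimate_input_tokens(5))                 # 3500000 for one prompt over 5 iterations
print(estimate_input_tokens(5, n_prompts=100))  # 350000000
```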

Ablations: Removing prompt planning weakens initialization. Removing tournament selection destabilizes later iterations. Using only one judge type lowers performance. Removing the Deep Thinking Prompting Agent lowers the final win rate.

Evaluator robustness: The research team repeated the evaluation with alternative evaluator models and observed similar iterative improvements, which supports the robustness of the trends.

Main points

- VISTA is a test-time, multi-agent loop that jointly optimizes visual, audio, and context for text-to-video generation.

- It plans prompts as timed scenes with 9 attributes: duration, scene type, character, action, dialogue, visual environment, camera, sound, and emotion.

- MLLM judges with bidirectional swapping select the best candidate video through a pairwise tournament, scoring visual fidelity, physical commonsense, text-video alignment, audio-video alignment, and engagement.

- A triad of judges per dimension (normal, adversarial, meta) produces scores from 1 to 10 that guide the Deep Thinking Prompting Agent as it rewrites the prompt and iterates.

- At iteration 5, VISTA reaches win rates over direct prompting of 45.9% (single-scene) and 46.3% (multi-scene). Human raters preferred VISTA in 66.4% of trials, and the average cost is about 700,000 tokens per iteration.

VISTA is a practical step toward reliable text-to-video generation: it treats inference as an optimization loop and keeps the generator a black box. Structured video prompt planning is useful for early-stage engineering, offering a concrete checklist of 9 scene attributes. Pairwise tournament selection with multimodal LLM judges and bidirectional swapping is a sensible way to reduce order bias, and its criteria target realistic failure modes: visual fidelity, physical commonsense, text-video alignment, audio-video alignment, and engagement. Multi-dimensional critiques separate visual, audio, and context, and the normal, adversarial, and meta judges expose weaknesses that a single judge would miss. The Deep Thinking Prompting Agent turns these diagnoses into targeted prompt edits. The use of Gemini 2.5 Flash and Veo 3 illustrates the reference setup, and the Veo 2 study provides a useful lower bound. The reported win rates of 45.9% and 46.3% and the 66.4% human preference indicate repeatable gains. The cost of about 700,000 tokens per iteration is not trivial, but it is transparent and scales.

Check out the Paper and Project Page.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.
