Google AI launches FLAME: a one-step active learning method that selects the most informative samples for training and makes model specialization super fast

Open-vocabulary object detectors answer text queries with boxes. In remote sensing, their zero-shot performance degrades because the classes are very fine-grained and the visual environment is unusual. The Google research team proposed FLAME, a one-step active learning strategy that builds on a strong open-vocabulary detector and adds a micro-refiner you can train in near real time on a CPU. The base model generates high-recall proposals, the refiner uses a few target labels to filter out false positives, and full model fine-tuning is avoided. The team reports state-of-the-art accuracy on DOTA and DIOR with 30 shots, and minute-scale adaptation per label on a CPU.
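The cascade idea (frozen detector proposes, small refiner accepts or rejects) can be illustrated with a minimal sketch. It is not the research team's implementation: it assumes NumPy, uses synthetic vectors as stand-ins for the frozen detector's proposal features, and trains a two-layer MLP (one refiner option the article mentions) with plain gradient descent. The names `train_mlp_refiner` and `demo`, the hidden size, learning rate, step count, and the 15-per-class labeled subset are hypothetical choices for a deterministic demo; FLAME picks its ~30 labels actively.

```python
import numpy as np


def train_mlp_refiner(x, y, hidden=16, steps=500, lr=0.1, seed=0):
    """Fit a two-layer MLP (tanh hidden layer, sigmoid output) by full-batch
    gradient descent on mean binary cross-entropy; returns a scoring function."""
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w1 = rng.normal(0.0, 1.0 / np.sqrt(d), (d, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), hidden)
    b2 = 0.0
    for _ in range(steps):
        h = np.tanh(x @ w1 + b1)                   # hidden activations, (n, hidden)
        p = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))   # accept probability, (n,)
        g = (p - y) / n                            # d(mean BCE)/d(logit)
        gh = np.outer(g, w2) * (1.0 - h ** 2)      # backprop through tanh (uses old w2)
        w2 -= lr * (h.T @ g)
        b2 -= lr * g.sum()
        w1 -= lr * (x.T @ gh)
        b1 -= lr * gh.sum(axis=0)

    def accept_probability(z):
        return 1.0 / (1.0 + np.exp(-(np.tanh(z @ w1 + b1) @ w2 + b2)))

    return accept_probability


def demo():
    """Filter synthetic proposals: 100 true targets, 100 look-alike false positives."""
    rng = np.random.default_rng(42)
    dim = 8
    pos = rng.normal(1.5, 1.0, (100, dim))   # hypothetical features of true targets
    neg = rng.normal(-1.5, 1.0, (100, dim))  # hypothetical features of look-alikes
    feats = np.vstack([pos, neg])
    is_target = np.r_[np.ones(100), np.zeros(100)]
    # 30 user labels: 15 per class, fixed here instead of actively selected.
    labeled = np.r_[np.arange(15), 100 + np.arange(15)]
    refiner = train_mlp_refiner(feats[labeled], is_target[labeled])
    keep = refiner(feats) >= 0.5              # the frozen detector's proposals are only filtered
    precision_before = is_target.mean()
    precision_after = is_target[keep].mean()
    recall_after = is_target[keep].sum() / is_target.sum()
    return precision_before, precision_after, recall_after


if __name__ == "__main__":
    before, after, recall = demo()
    print(f"precision {before:.2f} -> {after:.2f}, recall after filtering {recall:.2f}")
```

The base "detector" is never updated; only the tiny refiner is trained, which is why this kind of adaptation can run on a CPU. The toy classes are far better separated than real look-alike categories.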

Problem framing

Open-vocabulary detectors such as OWL-ViT v2 are trained on web-scale image-text pairs. They generalize well to natural images but struggle when categories are subtle, such as chimneys versus storage tanks, or when the imaging geometry differs, such as nadir aerial tiles with rotated, small-scale objects. Because text and visual embeddings overlap for look-alike categories, accuracy drops. Practical systems need the breadth of open-vocabulary models and the accuracy of local specialists, without hours of GPU fine-tuning or thousands of new labels.

FLAME approach and design

FLAME is a cascaded pipeline:

- Step 1: Run a zero-shot open-vocabulary detector to generate many candidate boxes for a text query, such as “chimney.”

- Step 2: Represent each candidate by its visual features and its similarity to the text.

- Step 3: Retrieve marginal samples near the decision boundary: project the features to a low-dimensional space with PCA, run density estimation, then select the uncertain band.

- Step 4: Cluster the band and select one item per cluster for diversity.

- Step 5: Have a user label about 30 crops as positive or negative.

- Step 6: Optionally, if the labels are skewed, rebalance them with SMOTE or SVM-SMOTE.

- Step 7: Train a small classifier, such as an RBF SVM or a two-layer MLP, to accept or reject the original proposals.

The base detector stays frozen, so it keeps its recall and generalization, while the refiner learns the exact semantics the user intends.
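Steps 3 and 4 can be sketched as follows. The article does not specify how the density estimate defines the uncertain band, so this sketch makes an explicit assumption: it splits proposals at the median text similarity, fits a Gaussian kernel density to each half in 2-D PCA space, and treats points where the two densities are nearly equal as lying near the boundary. It uses NumPy only; `select_for_labeling`, `pool_factor`, the bandwidth, and the synthetic data are hypothetical, and the optional SMOTE rebalancing (step 6) is omitted.

```python
import numpy as np


def pca_project(x, k=2):
    """Project rows of x onto their top-k principal components (SVD-based PCA)."""
    xc = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(xc, full_matrices=False)
    z = xc @ vt[:k].T
    return z / z.std(axis=0)  # standardize so one bandwidth suits every axis


def kde(points, queries, bandwidth=0.5):
    """Unnormalized Gaussian kernel density of `points`, evaluated at `queries`."""
    d2 = ((queries[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2 * bandwidth ** 2)).mean(axis=1)


def kmeans(x, k, iters=50, seed=0):
    """Plain Lloyd's k-means; returns a cluster label per row and the centroids."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1).argmin(axis=1)
        for j in range(k):
            members = x[labels == j]
            if len(members):
                centers[j] = members.mean(axis=0)
    return labels, centers


def select_for_labeling(feats, text_sim, budget=30, pool_factor=3):
    """Pick up to `budget` proposals that sit near the boundary and are mutually diverse."""
    z = pca_project(feats, k=2)
    # Assumed boundary proxy: compare densities of the high- and low-similarity halves.
    likely_pos = text_sim >= np.median(text_sim)
    d_pos, d_neg = kde(z[likely_pos], z), kde(z[~likely_pos], z)
    margin = np.abs(d_pos - d_neg) / (d_pos + d_neg + 1e-12)  # near 0 where halves overlap
    # Uncertain band: the pool_factor * budget proposals with the smallest margin.
    band = np.argsort(margin)[: min(len(z), pool_factor * budget)]
    # Diversity: cluster the band and keep the member closest to each centroid.
    k = min(budget, len(band))
    labels, centers = kmeans(feats[band], k)
    picks = []
    for j in range(k):
        members = band[labels == j]
        if len(members):
            dist = ((feats[members] - centers[j]) ** 2).sum(axis=1)
            picks.append(int(members[dist.argmin()]))
    return picks


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(500, 16))                    # synthetic proposal features
    feats[:250, 0] += 3.0                                 # first half plays the true targets
    text_sim = feats[:, 0] + 0.5 * rng.normal(size=500)   # synthetic text similarity
    picks = select_for_labeling(feats, text_sim, budget=30)
    print(f"{len(picks)} proposals selected for labeling")
```

Each pick comes from a different cluster, so the labeling budget is spread across distinct kinds of ambiguous proposals instead of many near-duplicates. Empty clusters can leave slightly fewer than `budget` picks.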

Datasets, base models and settings

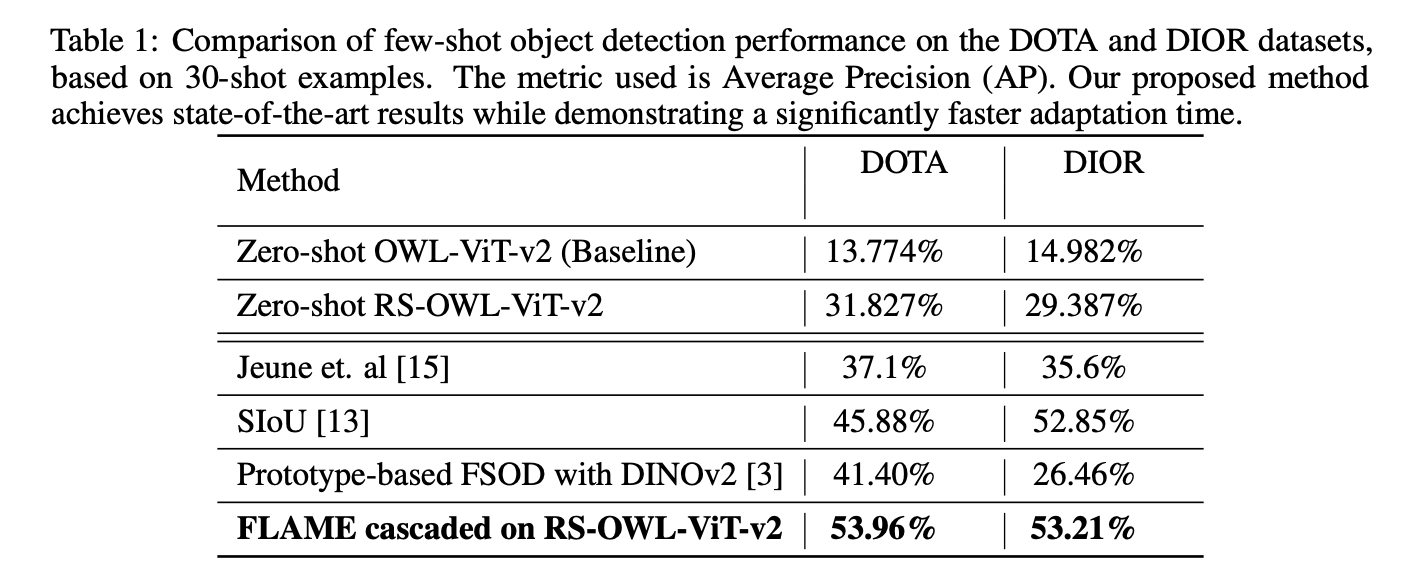

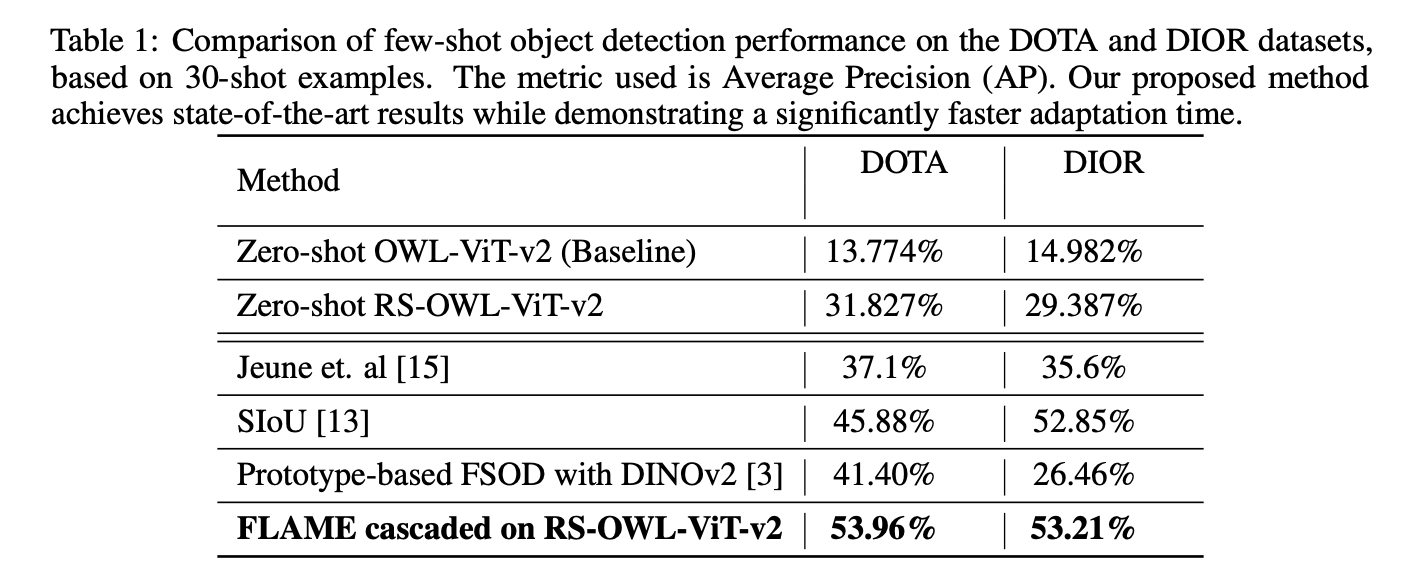

The evaluation uses two standard remote sensing detection benchmarks. DOTA features oriented boxes over 15 categories in high-resolution aerial imagery. DIOR has 23,463 images and 192,472 instances across 20 categories. The comparison includes a zero-shot OWL-ViT v2 baseline, a zero-shot RS OWL-ViT v2 fine-tuned on RS-WebLI, and several few-shot baselines. RS OWL-ViT v2 raises zero-shot mean AP to 31.827% on DOTA and 29.387% on DIOR, and serves as the starting point for FLAME.

Understanding the results

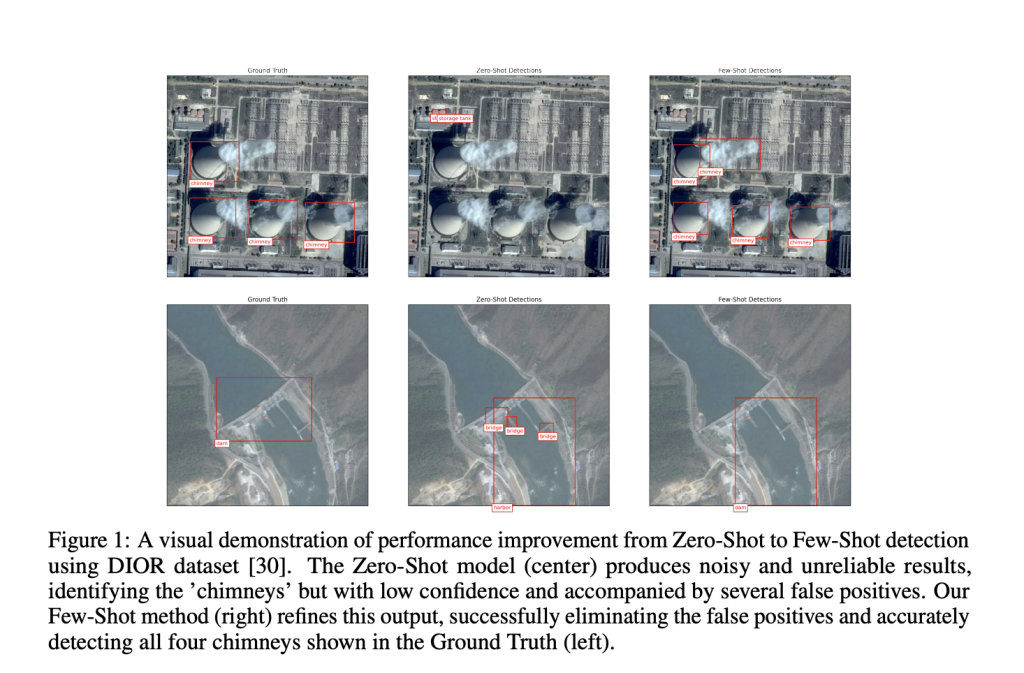

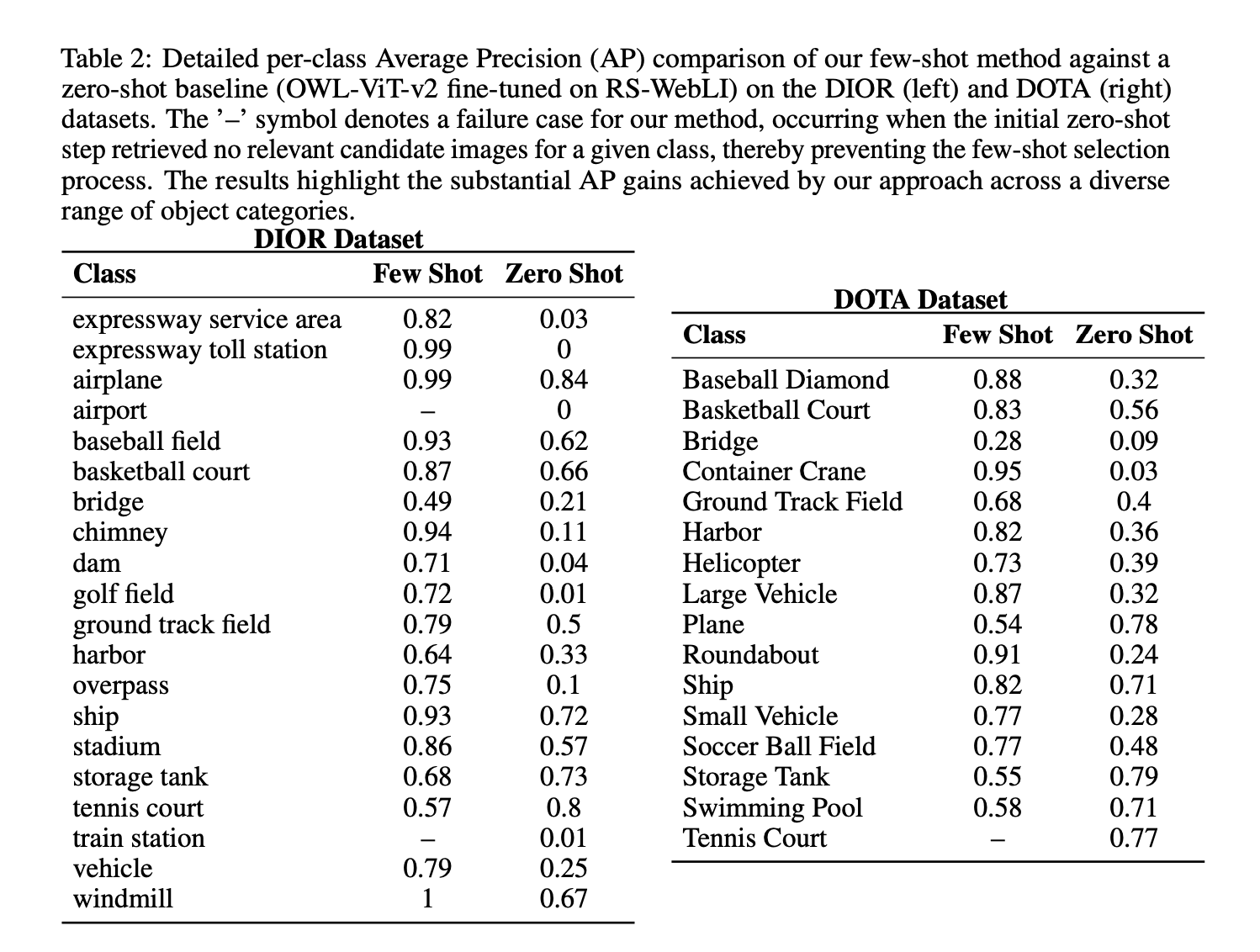

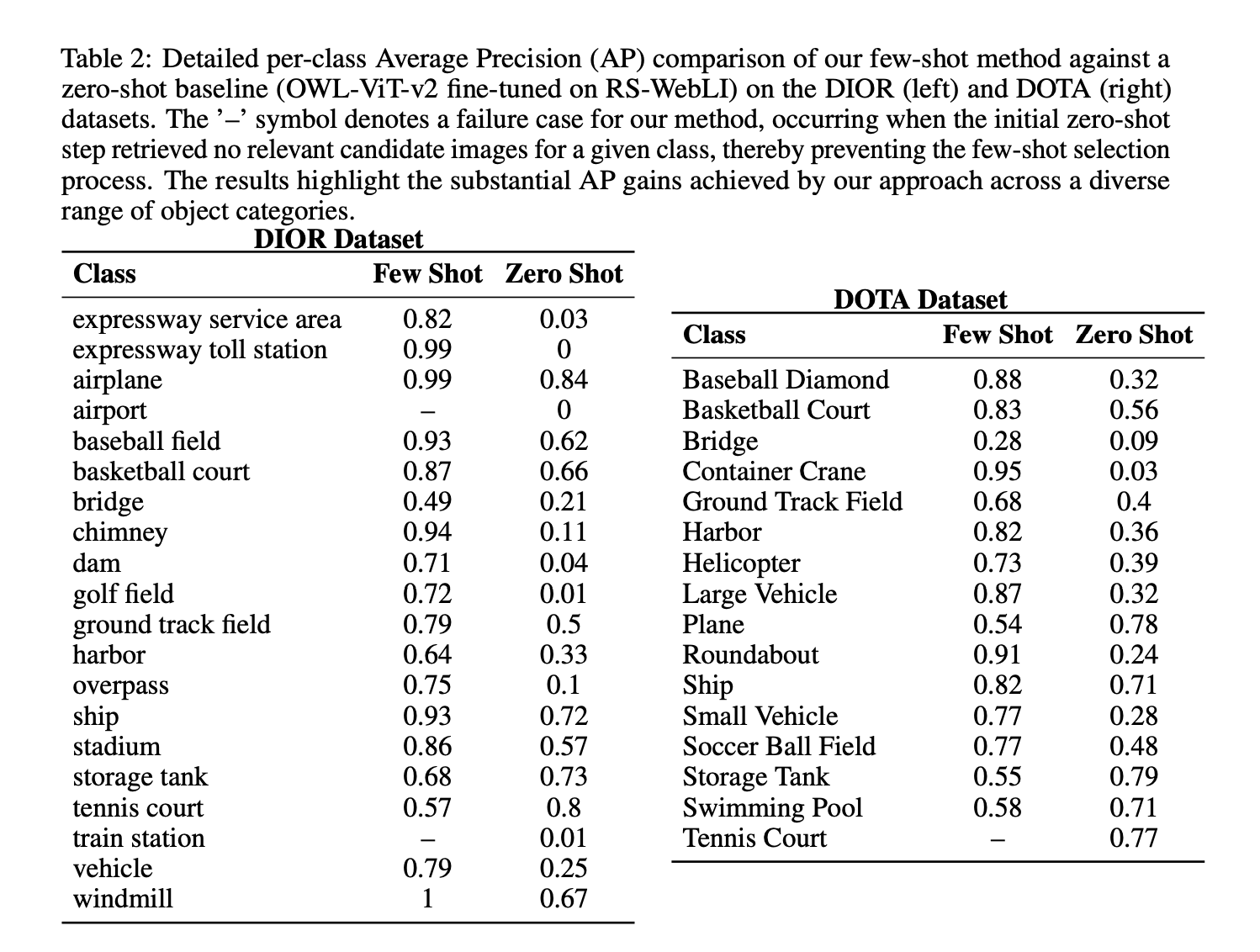

In 30-shot adaptation, FLAME cascaded on RS OWL-ViT v2 reaches 53.96% AP on DOTA and 53.21% AP on DIOR, the highest accuracy among the listed methods. The comparison includes SIoU, a prototype-based method built on DINOv2, and few-shot methods proposed by the research team. These numbers appear in Table 1. The research team also reports a per-class breakdown in Table 2. On DIOR, the chimney class improves from 0.11 zero-shot to 0.94 after FLAME, which shows how the refiner removes look-alike false positives from open-vocabulary proposals.
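A toy calculation shows why removing look-alike false positives lifts AP so sharply. The ranking below is synthetic and does not reproduce the DIOR chimney numbers; it uses a simplified, non-interpolated AP, whereas benchmark evaluators (for example the Pascal VOC or COCO protocols) match boxes by IoU and interpolate precision, so their values differ. It also assumes an idealized refiner that rejects every false positive and keeps every true one.

```python
def average_precision(ranked_hits, num_gt):
    """Non-interpolated AP: sum of precision at each true-positive rank, divided by num_gt."""
    tp, total = 0, 0.0
    for rank, hit in enumerate(ranked_hits, start=1):
        if hit:
            tp += 1
            total += tp / rank
    return total / num_gt


# Synthetic ranking for one class with 5 ground-truth objects, sorted by detector score:
# 1 = true chimney, 0 = look-alike false positive that the zero-shot detector scores highly.
zero_shot = [0, 0, 0, 1, 0, 1, 1, 0, 1, 1]
# Idealized refiner: rejects every look-alike, keeps every true positive.
refined = [hit for hit in zero_shot if hit]

ap_before = average_precision(zero_shot, num_gt=5)
ap_after = average_precision(refined, num_gt=5)
print(f"AP before: {ap_before:.3f}, after: {ap_after:.3f}")  # → AP before: 0.391, after: 1.000
```

Recall is unchanged here because the detector already found every object; the gain comes entirely from pushing true positives to the top of the ranking, which is the role FLAME assigns to the refiner.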

Main points

- FLAME is a one-step active learning cascade built on OWL-ViT v2: it retrieves marginal samples using density estimation, enforces diversity through clustering, collects about 30 labels, and trains a lightweight refiner such as an RBF SVM or a small MLP, with no fine-tuning of the base model.

- With 30 shots, FLAME on RS OWL-ViT v2 reaches 53.96% AP on DOTA and 53.21% AP on DIOR, surpassing several prior few-shot baselines, including SIoU and a DINOv2-based prototype method.

- On DIOR, the chimney class improves from 0.11 zero-shot to 0.94 after FLAME, indicating strong filtering of look-alike false positives.

- Adaptation takes about 1 minute per label on a standard CPU, enabling near real-time, user-in-the-loop specialization.

- Zero-shot OWL-ViT v2 scores 13.774% AP on DOTA and 14.982% AP on DIOR; RS OWL-ViT v2 raises zero-shot AP to 31.827% and 29.387% respectively, and FLAME then delivers a large further gain.
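The stage-by-stage gains can be made concrete with simple arithmetic on the reported mean AP values. This assumes the figures are directly comparable (same metric and evaluation splits), as the article presents them; the dictionary keys and the `gains` helper are illustrative names.

```python
# Mean AP (%) for each stage, as reported in the article.
reported = {
    "DOTA": {"zero_shot_owlv2": 13.774, "rs_owlv2": 31.827, "flame_30_shot": 53.96},
    "DIOR": {"zero_shot_owlv2": 14.982, "rs_owlv2": 29.387, "flame_30_shot": 53.21},
}


def gains(stages):
    """Absolute AP gains in percentage points between consecutive stages and overall."""
    zs, rs, fl = stages["zero_shot_owlv2"], stages["rs_owlv2"], stages["flame_30_shot"]
    return {
        "rs_over_zero_shot": round(rs - zs, 3),
        "flame_over_rs": round(fl - rs, 3),
        "flame_over_zero_shot": round(fl - zs, 3),
    }


for name, stages in reported.items():
    print(name, gains(stages))
```

On DOTA this works out to about 18.05 AP points from remote-sensing pretraining and a further 22.13 points from FLAME; on DIOR the corresponding gains are about 14.41 and 23.82 points.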

FLAME is a one-step active learning cascade that layers a micro-refiner on top of OWL-ViT v2, selects marginal detections, collects about 30 labels, and trains a small classifier without touching the base model. With RS OWL-ViT v2, FLAME reports 53.96% AP on DOTA and 53.21% AP on DIOR, establishing a strong few-shot baseline. On the DIOR chimney class, average precision rises from 0.11 to 0.94 after refinement, illustrating false-positive suppression. Adaptation takes about 1 minute per label on a CPU, enabling interactive specialization. OWLv2 and RS-WebLI provide the foundation for the zero-shot proposals. Overall, FLAME demonstrates a practical path to specializing open-vocabulary detection for remote sensing by pairing RS OWL-ViT v2 proposals with a minute-scale CPU refiner, lifting DOTA to 53.96% AP and DIOR to 53.21% AP.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, reflecting its popularity among readers.
