Google AI launches DS STAR: a multi-agent data science system to plan, code, and validate end-to-end analytics

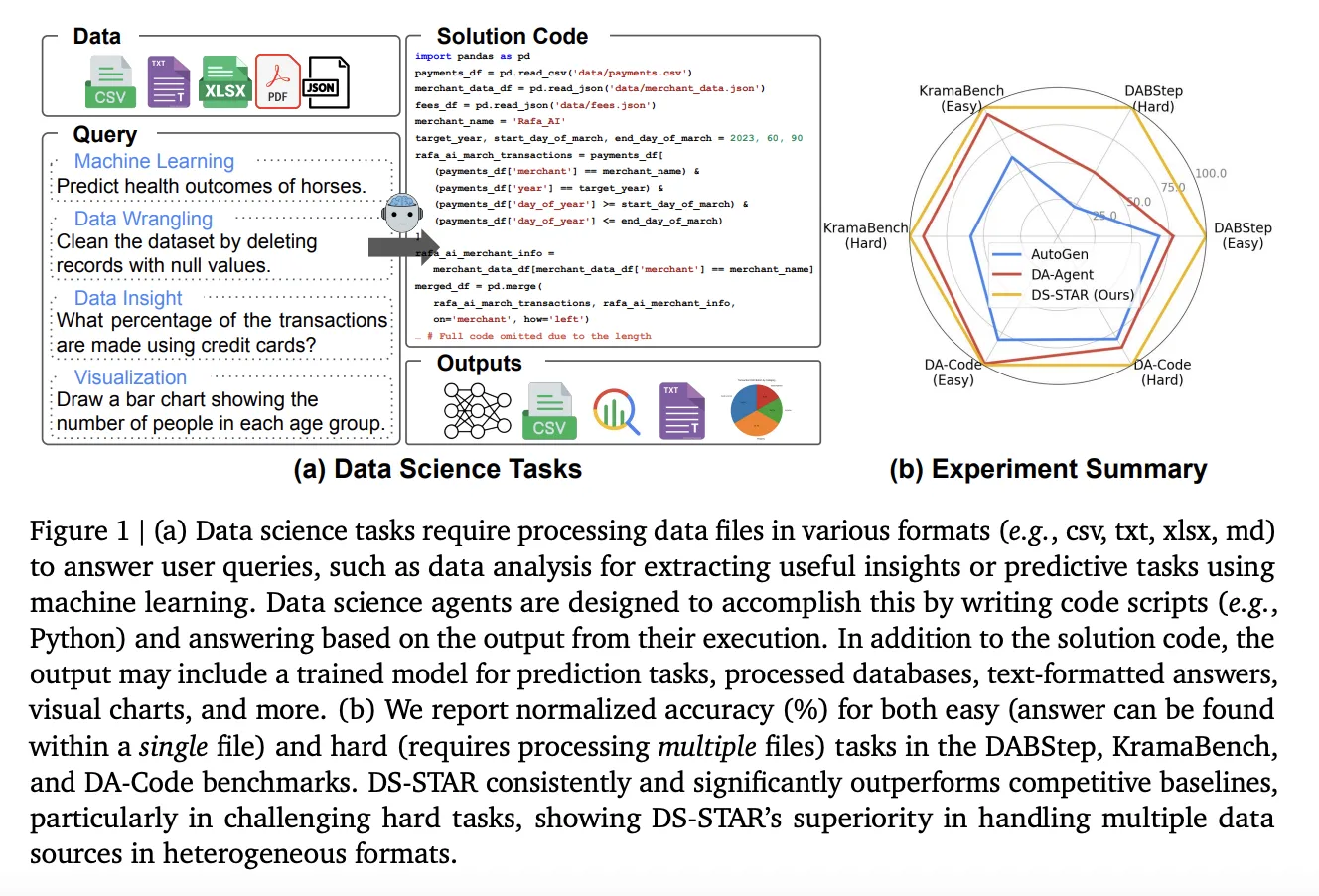

How do you translate vague business-style questions about messy folders of CSV, JSON, and text into solid Python code without the intervention of a human analyst? Introduction to Google researchers Dexing (Data science agency through iterative planning and validation), a multi-agent framework that converts open-ended data science problems into executable Python scripts on heterogeneous files. Rather than assuming a clean SQL database and a single query, DS STAR treats the problem as Text to Python And operate directly on mixed formats such as CSV, JSON, Markdown, and unstructured text.

From text to Python on heterogeneous data

Existing data science agents often rely on text-to-SQL on relational databases. This limitation limits them to structured tables and simple schemas, which is not a match for many enterprise environments where data spans documents, spreadsheets, and logs.

DS STAR changes abstraction. It generates Python code that loads and combines any files provided by the benchmark. The system first summarizes each document and then uses that context to plan, implement, and validate a multi-step solution. This design enables DS STAR to work on benchmarks such as DAB steps, Kramer Bench and DA codewhich expects multi-step analysis of mixed file types and requires strictly formatted answers.

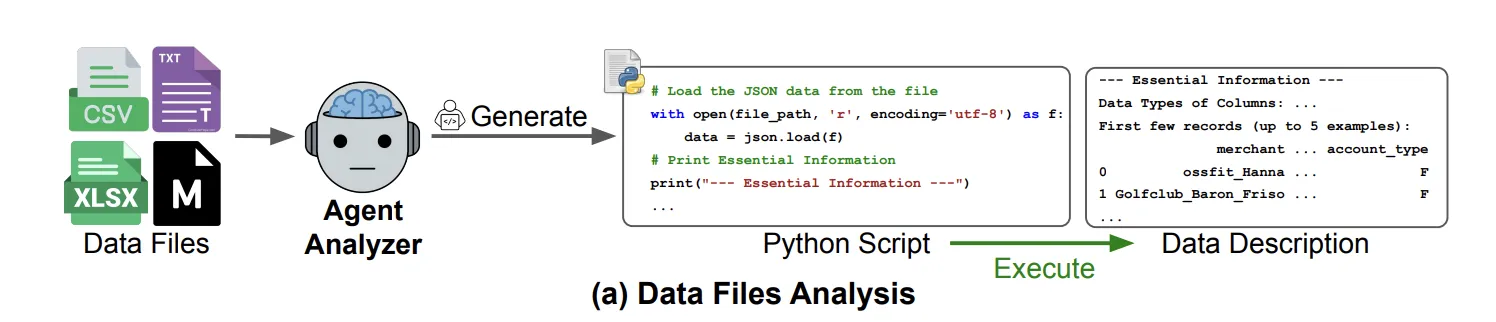

Stage 1: Analyze the data file using Aanalyzer

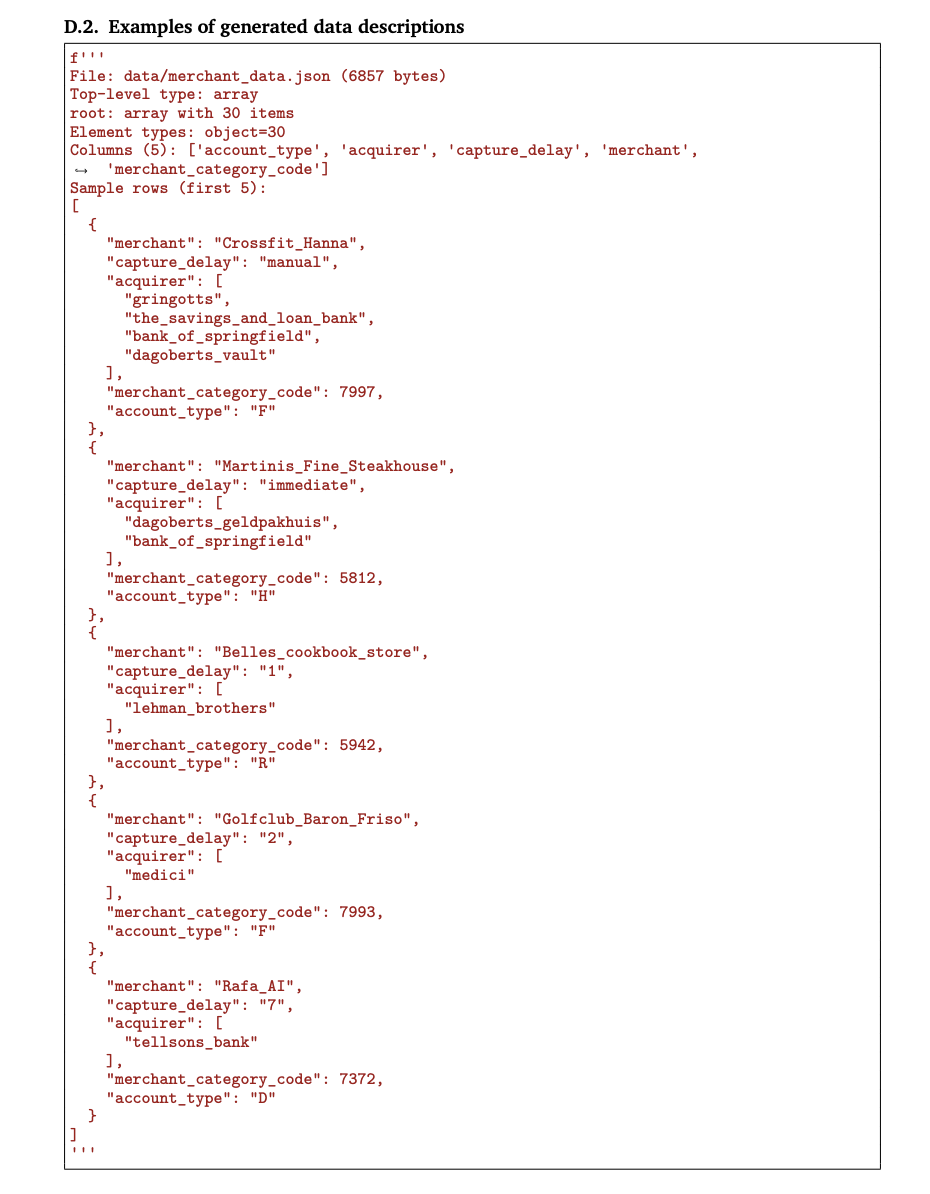

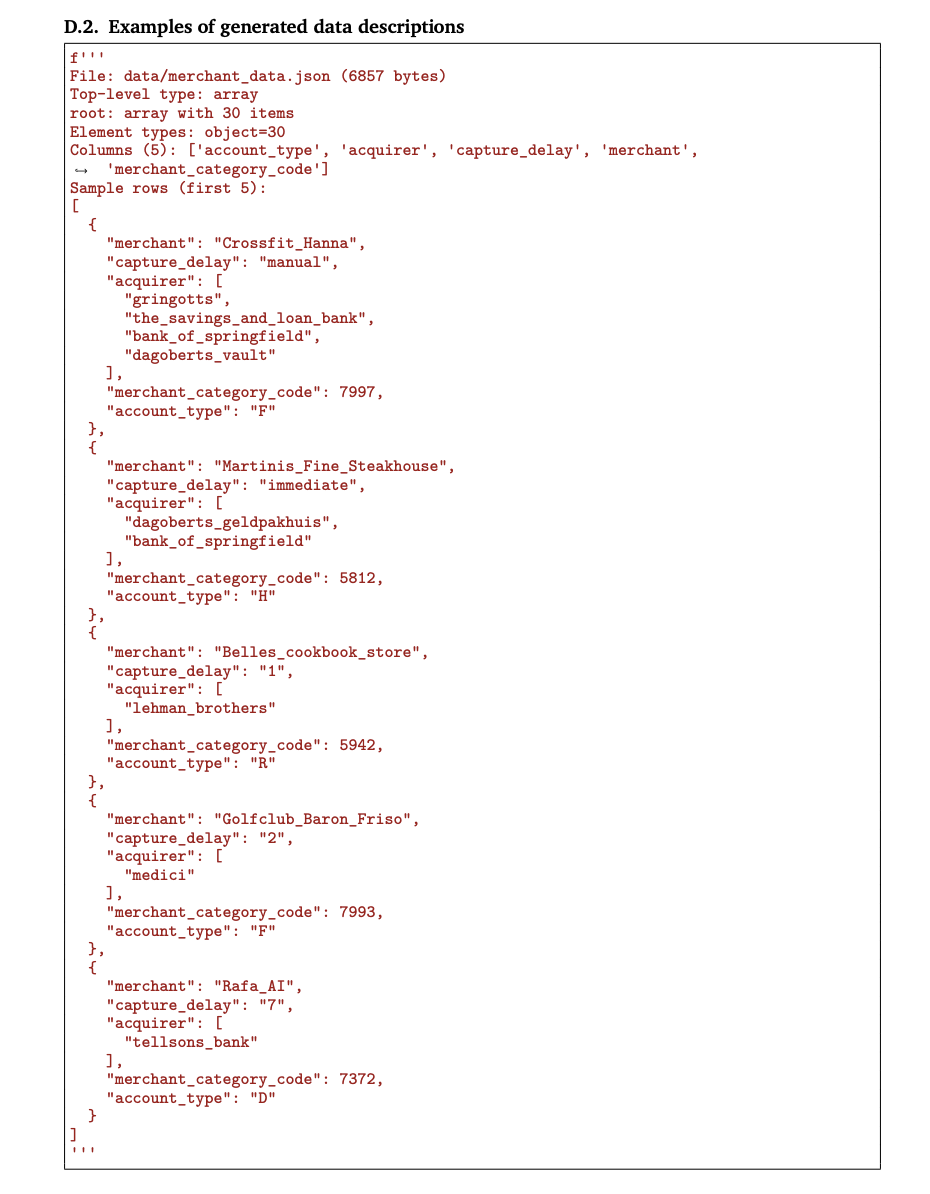

The first phase builds a structured view of the data lake. For each file (Dᵢ), analyzer The agent generates a Python script (sᵢ_desc) that parses the file and prints basic information such as column names, data types, metadata, and text summaries. DS STAR executes this script and captures the output as a concise description (dᵢ).

This process works for both structured and unstructured data. CSV files generate column-level statistics and examples, while JSON or text files generate structural summaries and key snippets. The set {dᵢ} becomes the shared context for all subsequent agents.

Phase 2: Iterative Planning, Coding, and Validation

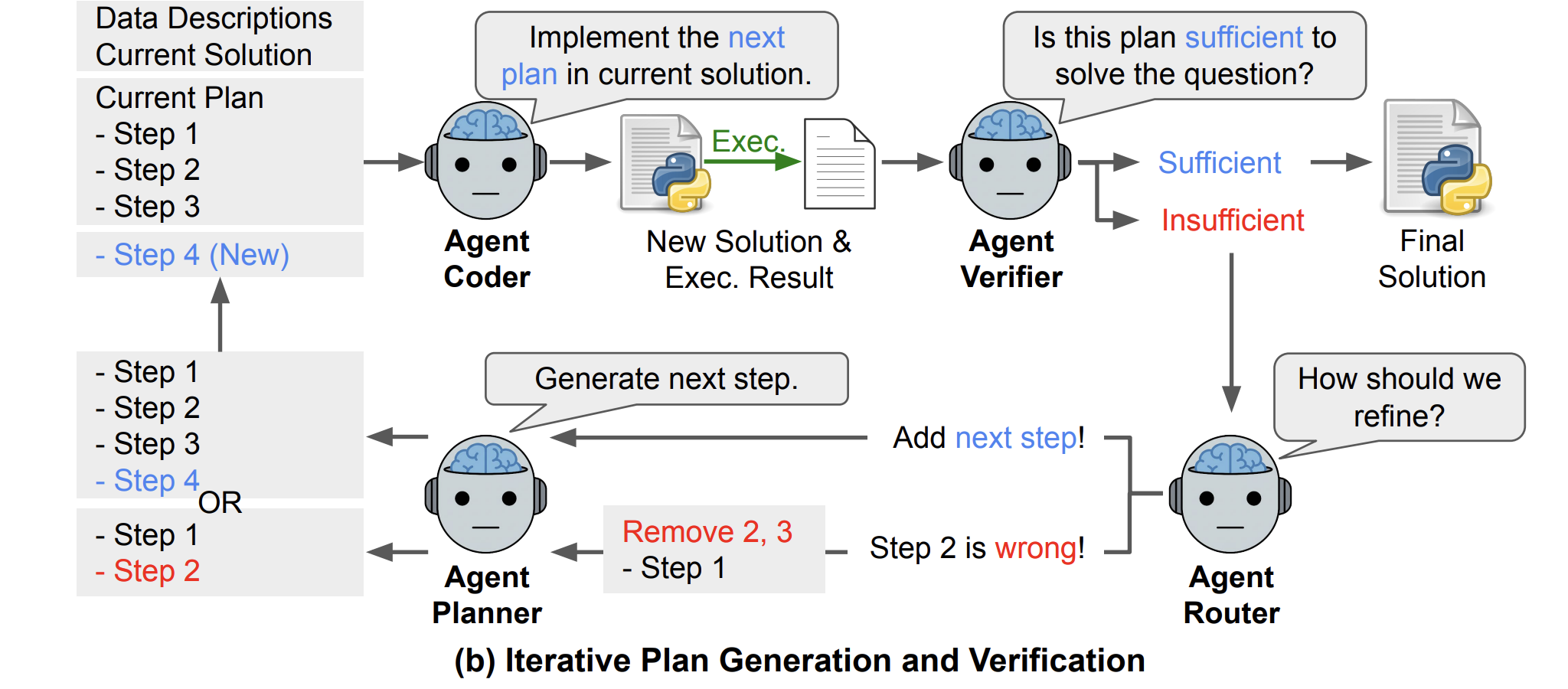

After document analysis, DS STAR runs an iterative loop that reflects how humans use notebooks.

- planner Use queries and file descriptions to create initial executable steps (p₀), such as loading related tables.

- A coder Convert the current plan(p) into Python code(s). DS STAR executes this code to obtain the observation(r).

- validator Is a LL.M. judge. It receives the cumulative plan, query, current code, and its execution results, and returns a binary decision: sufficient or insufficient.

- If planning is inadequate, router Decide how to perfect it. It either outputs the token Add stepswhich appends a new step, or the index of the erroneous step to be truncated and regenerated.

Aplanner is conditioned on the latest execution result (rₖ), so each new step explicitly responds to errors in the previous attempt. The cycle of routing, planning, coding, execution, and verification continues until Averifier marks the plan as sufficient or the system reaches a maximum of 20 rounds of refinement.

To meet the strict benchmark format, a separate analyzer The agent converts the final plan into solution code that performs rules such as rounding and CSV output.

Robustness modules, debuggers and retrievers

Realistic pipelines fail due to schema drift and missing columns. DS STAR New adbug Fix broken scripts. When code fails, Adebugger receives the script, traceback, and profiler description {dᵢ}. It generates the correct script by conditioning all three signals, which is important because many data-centric errors require knowledge of column headers, sheet names, or schema, not just stack traces.

KramaBench introduces another challenge with thousands of candidate files per domain. DS STAR handles this issue in the following ways hound. The system embeds the user query and each description (dᵢ) using a pre-trained embedding model and selects the top 100 most similar documents for the agent context, or all documents if there are less than 100. In implementation, the research team used Gemini embedded 001 Used for similarity searches.

Benchmark results for DABStep, KramaBench and DA code

All main experiments run DS STAR Gemini 2.5 Professional Edition As a base LLM, up to 20 rounds of refinement are allowed per task.

exist DAB stepsthe Gemini 2.5 Pro model alone achieved a hard-level accuracy of 12.70%. The same model of DS STAR achieved 45.24% on difficult tasks and 87.50% on easy tasks. This is more than 32 percentage points in absolute gain over hard segmentation and outperforms other agents such as ReAct, AutoGen, Data Interpreter, DA Agent and several commercial systems recorded on public rankings.

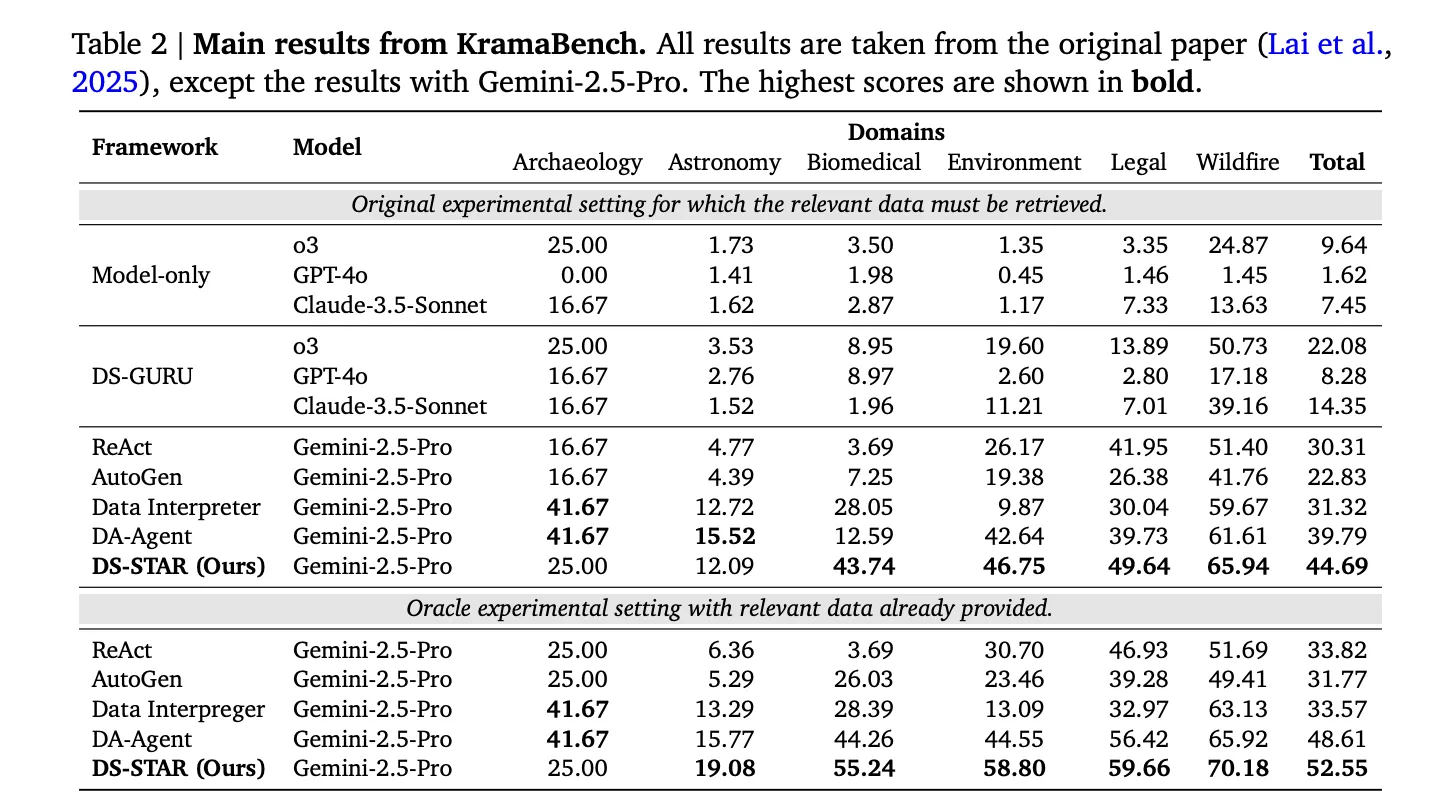

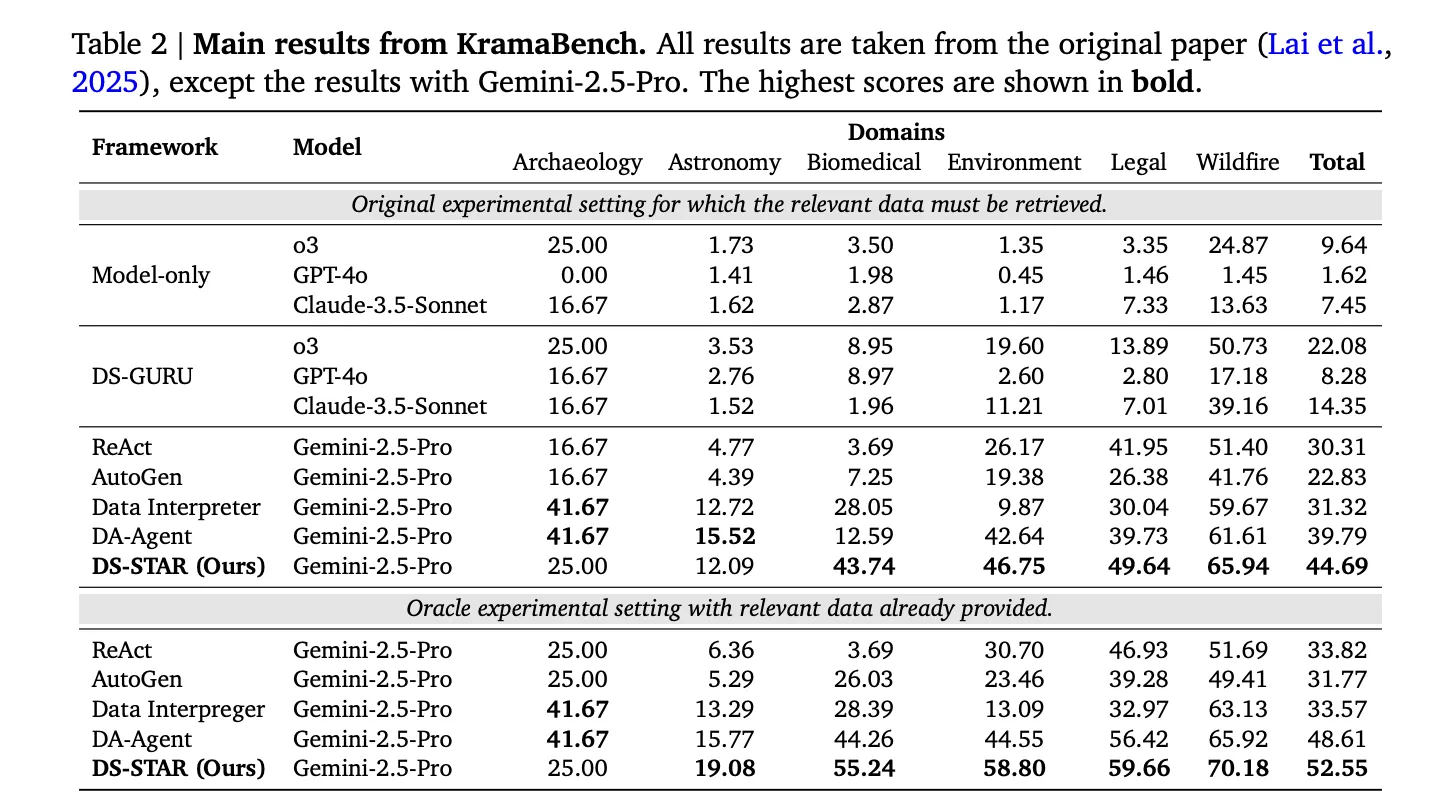

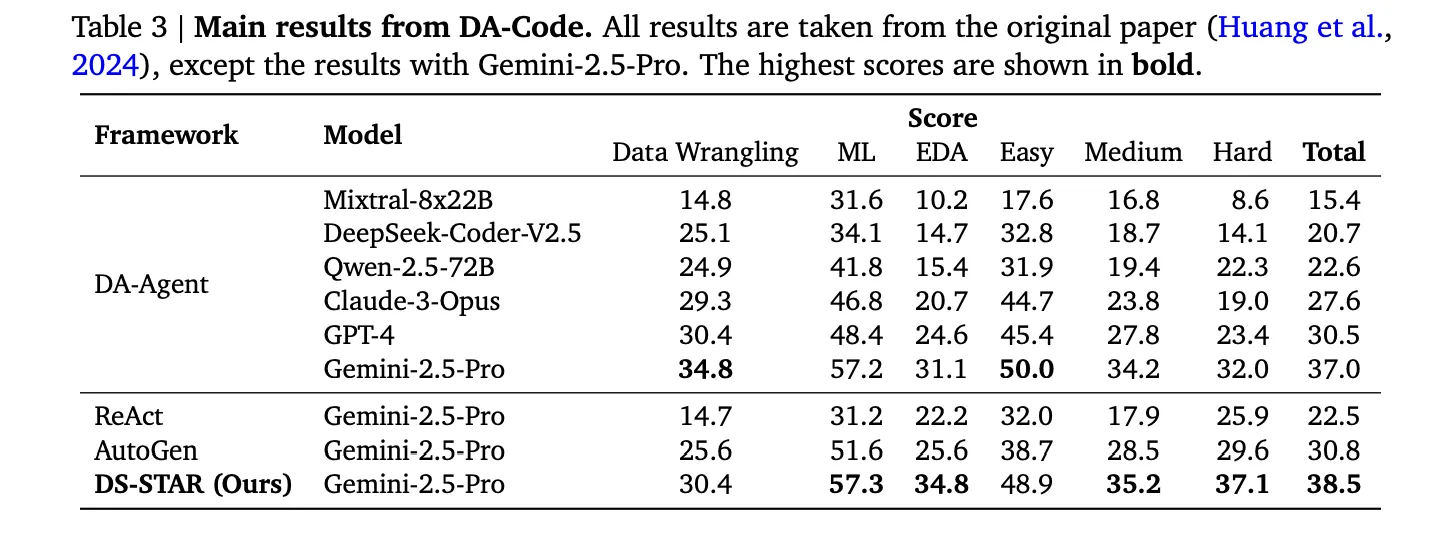

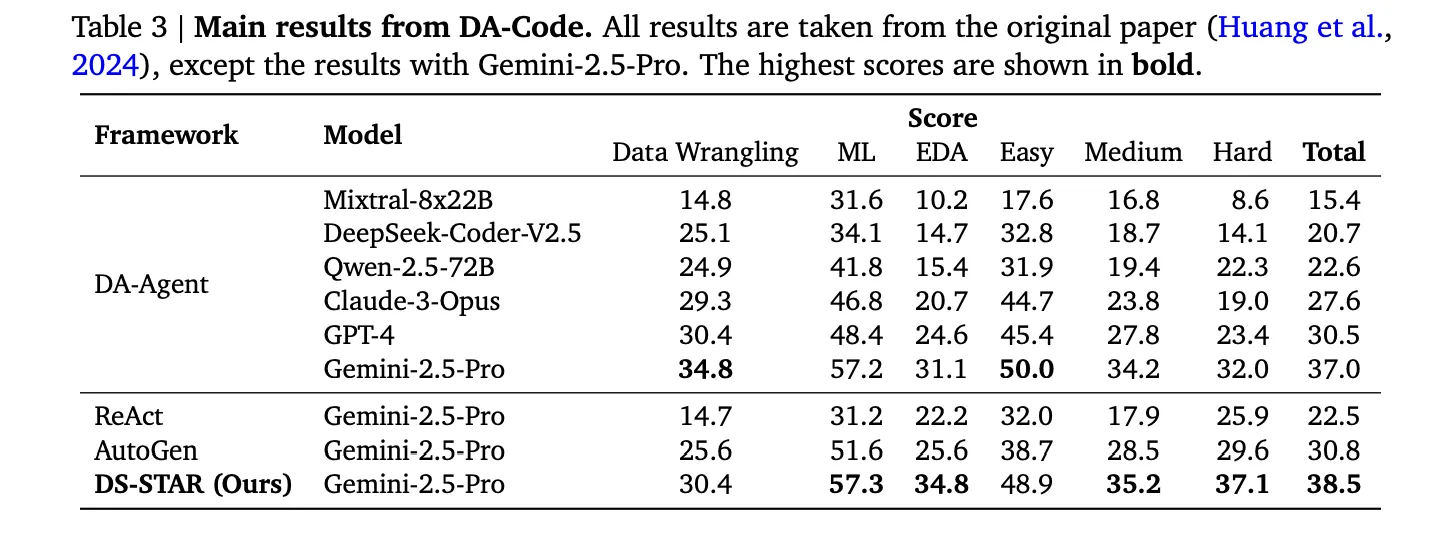

The Google research team reports that compared to the best alternative system in each benchmark, DS STAR improves overall accuracy from 41.0% to 45.2% on DABStep, from 39.8% to 44.7% on KramaBench, and from 37.0% to 38.5% on DA Code.

for Kramer Benchwhich requires retrieving relevant documents from a large domain-specific data lake, DS STAR and Gemini 2.5 Pro with retrieval capabilities achieved a normalized total score of 44.69. The strongest baseline is the DA Agent of the same model, reaching 39.79.

exist DA codeDS STAR defeated DA agents again. In the difficult task, when both used Gemini 2.5 Pro, DS STAR achieved an accuracy of 37.1%, while DA Agent achieved an accuracy of 32.0%.

Main points

- DS STAR re-architects data science agents to convert text to Python via heterogeneous files such as CSV, JSON, Markdown, and text, not just text to SQL via clean relational tables.

- The system uses a multi-agent loop consisting of Aanalyzer, Aplanner, Acoder, Averifier, Arouter, and Afinalyzer to iteratively plan, execute, and verify Python code until the verifier marks the solution as adequate.

- The Adebugger and Retriever modules improve robustness by using rich schema descriptions to repair failed scripts and selecting the top 100 relevant files from a large domain-specific data lake.

- With Gemini 2.5 Pro and 20 rounds of refinement, DS STAR achieves huge improvements over previous agents on DABStep, KramaBench and DA Code, such as increasing DABStep hard accuracy from 12.70% to 45.24%.

- Ablation shows that analyzer description and routing are critical, experiments on GPT 5 confirm that the DS STAR architecture is model-agnostic, and iterative refinement is critical for solving difficult multi-step analysis tasks.

DS STAR demonstrates that practical data science automation requires explicit structure around large language models, not just better hints. The combination of Aanalyzer, Averifier, Arouter, and Adebugger turns free-form data lakes into controlled text-to-Python loops that can be measured on DABStep, KramaBench, and DA code, and are portable across Gemini 2.5 Pro and GPT 5. This work moves data brokers from table presentations to benchmarked end-to-end analytics systems.

Check Paper and technical details. Please feel free to check out our GitHub page for tutorials, code, and notebooks. In addition, welcome to follow us twitter And don’t forget to join our 100k+ ML SubReddit and subscribe our newsletter. wait! Are you using Telegram? Now you can also join us via telegram.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.