Google AI Introduces Gemini 2.5 “Computer Use” (Preview): A Browser-Control Model That Powers AI Agents to Interact with User Interfaces

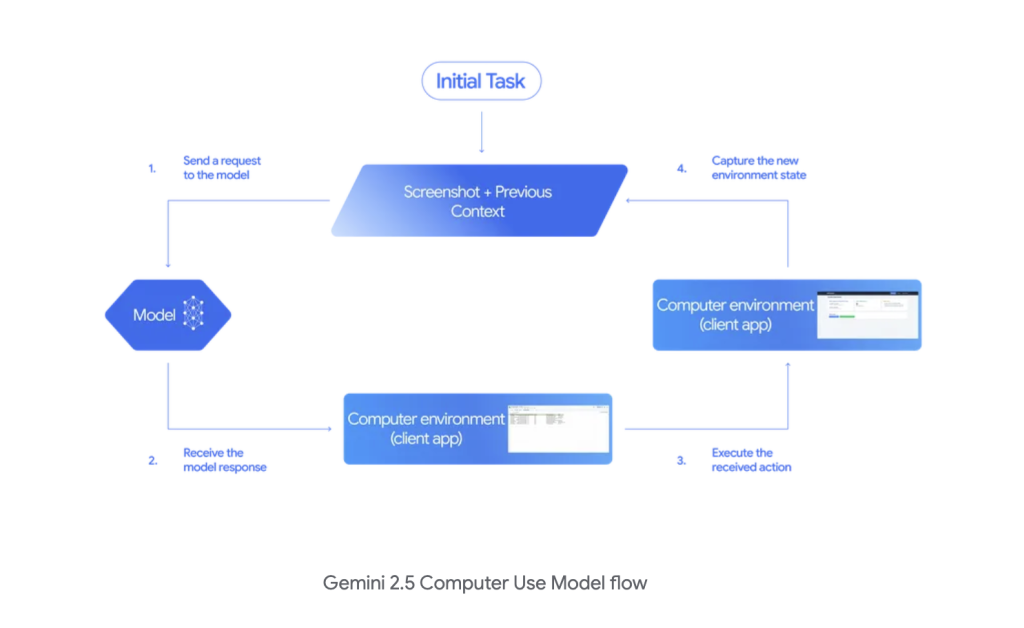

If agents could plan and execute predefined UI actions, which browser workflow would you delegate today? Google AI has introduced Gemini 2.5 Computer Use, a specialized variant of Gemini 2.5 that plans and executes real UI actions in a live browser through a constrained action API. It is available in public preview through Google AI Studio and Vertex AI. The model targets web automation and UI testing, with documented, human-judged gains on standard web/mobile control benchmarks, plus a safety layer that can require human confirmation for risky steps.

What does the model actually emit?

Developers call the new computer_use tool, which returns function calls such as click_at, type_text_at, or drag_and_drop. Client code executes each action (e.g., with Playwright or Browserbase), captures a new screenshot and URL, sends them back to the model, and loops until the task ends or a safety rule blocks it. The supported action space consists of 13 predefined UI actions (open_web_browser, wait_5_seconds, go_back, go_forward, search, navigate, click_at, hover_at, type_text_at, key_combination, scroll_document, scroll_at, drag_and_drop) and can be extended with custom functions (for example, open_app, long_press_at, go_home) for non-browser surfaces.
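The client-side loop described above can be sketched in plain Python. This is a minimal, library-free sketch, not Google's SDK: `FunctionCall`, `Observation`, `ActionExecutor`, and `run_agent_loop` are hypothetical names, the argument names (`url`, `x`, `y`) are assumptions, and the model call is stubbed out as `next_action`. In a real client the handlers would wrap Playwright or Browserbase calls, and `next_action` would send the latest screenshot and URL to the model.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

# The 13 predefined browser actions listed in the article.
BROWSER_ACTIONS = frozenset({
    "open_web_browser", "wait_5_seconds", "go_back", "go_forward",
    "search", "navigate", "click_at", "hover_at", "type_text_at",
    "key_combination", "scroll_document", "scroll_at", "drag_and_drop",
})


@dataclass
class FunctionCall:
    """Hypothetical stand-in for one function call returned by the model."""
    name: str
    args: dict = field(default_factory=dict)


@dataclass
class Observation:
    """What the client captures after each step: screenshot bytes plus URL."""
    screenshot: bytes
    url: str


class ActionExecutor:
    """Routes model-emitted actions to client-side handlers."""

    def __init__(self, excluded: frozenset = frozenset()):
        # Start from the browser actions, minus any the caller excludes
        # (e.g. a mobile client might exclude all of them).
        self.allowed = set(BROWSER_ACTIONS - excluded)
        self.handlers: dict = {}

    def bind(self, name: str, handler: Callable[..., None]) -> None:
        """Attach a client handler to an action that is already allowed."""
        if name not in self.allowed:
            raise ValueError(f"unknown or excluded action: {name}")
        self.handlers[name] = handler

    def add_custom(self, name: str, handler: Callable[..., None]) -> None:
        """Extend the action space with a custom function (e.g. go_home)."""
        self.allowed.add(name)
        self.handlers[name] = handler

    def execute(self, call: FunctionCall) -> None:
        if call.name not in self.allowed:
            raise ValueError(f"unsupported action: {call.name}")
        handler = self.handlers.get(call.name)
        if handler is None:
            raise NotImplementedError(f"no client handler for {call.name}")
        handler(**call.args)


def run_agent_loop(
    next_action: Callable[[Observation], Optional[FunctionCall]],
    executor: ActionExecutor,
    observe: Callable[[], Observation],
    max_steps: int = 20,
) -> int:
    """Ask for an action, execute it, observe, repeat; returns steps taken."""
    obs = observe()
    for step in range(max_steps):
        call = next_action(obs)  # stubbed model call; None means "task done"
        if call is None:
            return step
        executor.execute(call)
        obs = observe()
    raise TimeoutError("step budget exhausted before the task finished")
```

Keeping the allowed set explicit means an unexpected function name fails loudly instead of being executed, and the same loop serves mobile clients by excluding browser actions and registering custom ones.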

What are the scope and constraints?

The model is optimized for web browsers. Google states that it is not yet optimized for desktop OS-level control; mobile scenarios work by swapping in custom actions under the same loop. A built-in safety monitor can block prohibited actions or require user confirmation before “high-risk” actions (payments, sending messages, accessing sensitive records).
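On the client side, the confirmation requirement amounts to a gate in front of the executor. The sketch below assumes the safety signal arrives inside the call's arguments as a hypothetical `safety_decision` dict with a `decision` value of `allow`, `block`, or `require_confirmation` and an optional `explanation`; these field names and values are illustrative assumptions, so check Google's documentation for the actual format. Unrecognized decisions fail closed.

```python
from typing import Callable, Tuple


def gate_action(call_args: dict, confirm: Callable[[str], bool]) -> Tuple[bool, str]:
    """Decide whether a proposed action may run.

    Assumes a hypothetical call_args["safety_decision"] dict; its absence is
    treated as "allow". Returns (may_execute, reason).
    """
    safety = call_args.get("safety_decision") or {}
    decision = safety.get("decision", "allow")
    explanation = safety.get("explanation", "")

    if decision == "allow":
        return True, "allowed"
    if decision == "require_confirmation":
        # Hand the model's explanation to a human; only proceed on approval.
        if confirm(explanation):
            return True, "confirmed by user"
        return False, "declined by user"
    # "block" or any unrecognized value: fail closed.
    return False, f"blocked ({decision})"
```

Failing closed on unknown values keeps a preview API's future safety states from silently turning into executed actions.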

Measured performance

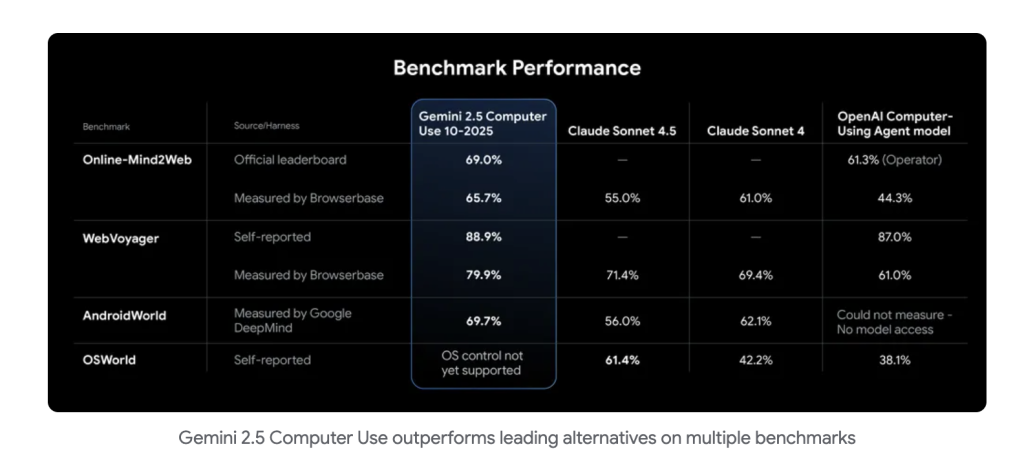

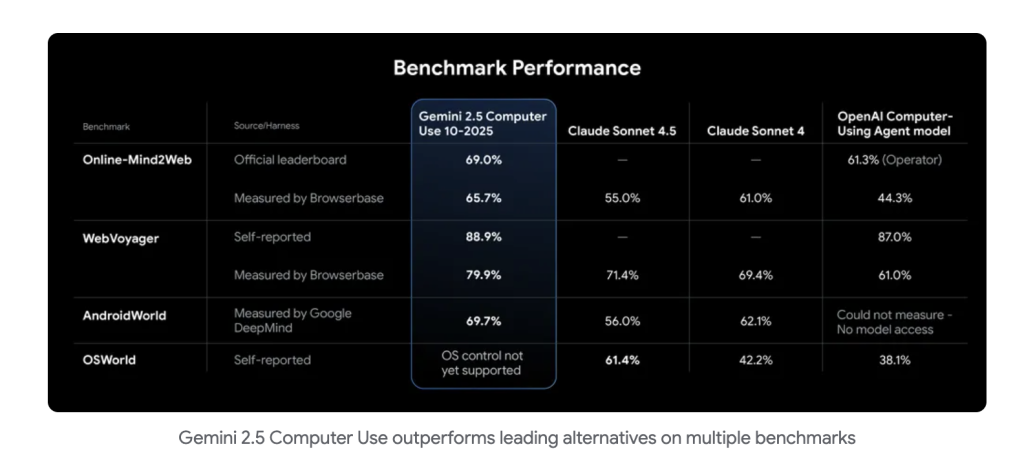

- Online-Mind2Web (official): 69.0% pass@1 (majority-vote human judgment), validated by the benchmark organizers.

- Browserbase matched harness: leads competing computer-use APIs on both accuracy and latency across Online-Mind2Web and WebVoyager under identical time/step/environment constraints. Google’s model card lists 65.7% (OM2W) and 79.9% (WebVoyager) for Browserbase runs.

- Latency/quality trade-off (Google figure): ~70%+ accuracy at ~225 s median latency on the Browserbase OM2W harness. Treat this as Google-reported, with human evaluation.

- AndroidWorld (mobile generalization): 69.7%, measured by Google; achieved via the same API loop with custom mobile actions and browser actions excluded.
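The metrics above combine two simple computations: pass@1 under majority-vote judgment (one attempt per task, counted as a success when most judges mark it successful) and median latency per run. The sketch below is a generic reading of those definitions, not the benchmark organizers' or Browserbase's scoring code; the function names and the toy data in the usage note are hypothetical and unrelated to the reported numbers.

```python
from statistics import median
from typing import List


def majority_success(votes: List[bool]) -> bool:
    """A single run counts as a success if a strict majority of judges agree."""
    return sum(votes) * 2 > len(votes)


def pass_at_1(judged_runs: List[List[bool]]) -> float:
    """Fraction of tasks whose single attempt is judged successful."""
    if not judged_runs:
        raise ValueError("no runs to score")
    return sum(majority_success(v) for v in judged_runs) / len(judged_runs)


def median_latency(latencies_s: List[float]) -> float:
    """Median wall-clock time per task, in seconds."""
    return median(latencies_s)
```

For example, four tasks judged `[T, T, F]`, `[F, F, T]`, `[T, T, T]`, `[F, T, F]` give a pass@1 of 0.5, and latencies of 200, 250, 225, and 180 s give a median of 212.5 s. Using the median rather than the mean keeps a few stalled runs from dominating the latency figure.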

Early production signals

- Automated UI test repair: Google’s payments platform team reports that the model recovers more than 60% of previously failing automated UI test executions. This figure comes from public reporting (and should be cited as such), not from the core blog post.

- Operational speed: Poke.com (an early external tester) reports that workflows are often ~50% faster than with its next-best alternative.

Gemini 2.5 Computer Use is in public preview via Google AI Studio and Vertex AI. It exposes a constrained API with 13 documented UI actions and requires a client-side executor. Google reports results on web/mobile control benchmarks in its materials and model card, and Browserbase’s matched harness shows ~65.7% pass@1 on Online-Mind2Web, with leading latency under the same constraints. The scope is browser-first, with per-step safety checks and confirmation. These data points justify measured evaluations in UI testing and web operations.

Check out the GitHub Page and Technical details.

Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padua. With a strong foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.

🙌 Follow Marktechpost: Add us as your go-to source on Google.