Anthrogen launches Odyssey: a 102B-parameter protein language model that replaces attention with Consensus and trains with discrete diffusion

Anthrogen has launched Odyssey, a family of protein language models for sequence and structure generation, protein editing, and conditional design. The production models range from 1.2B to 102B parameters. Anthrogen's research team positions Odyssey as a frontier multimodal model for real-world protein design workloads and notes that the API is in early access.

What problem does Odyssey solve?

Protein design couples amino acid sequence with 3D structure and functional context. Many prior models use self-attention, which mixes information across the entire sequence at once. Proteins obey geometric constraints, so long-range effects propagate through local neighborhoods in 3D. Anthrogen frames this as a locality problem and proposes a new propagation rule, called Consensus, that better matches the domain.

Input representation and tokenization

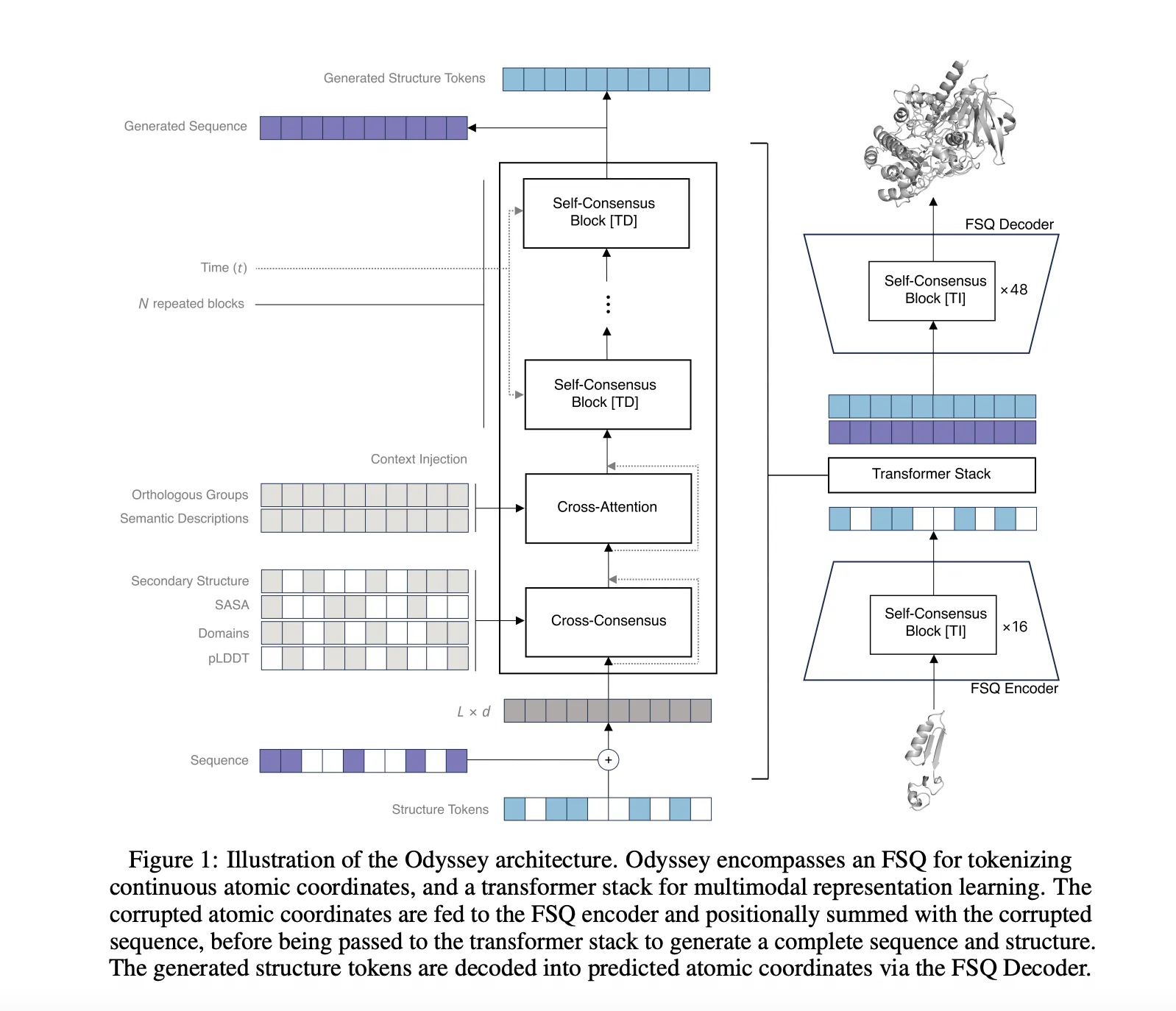

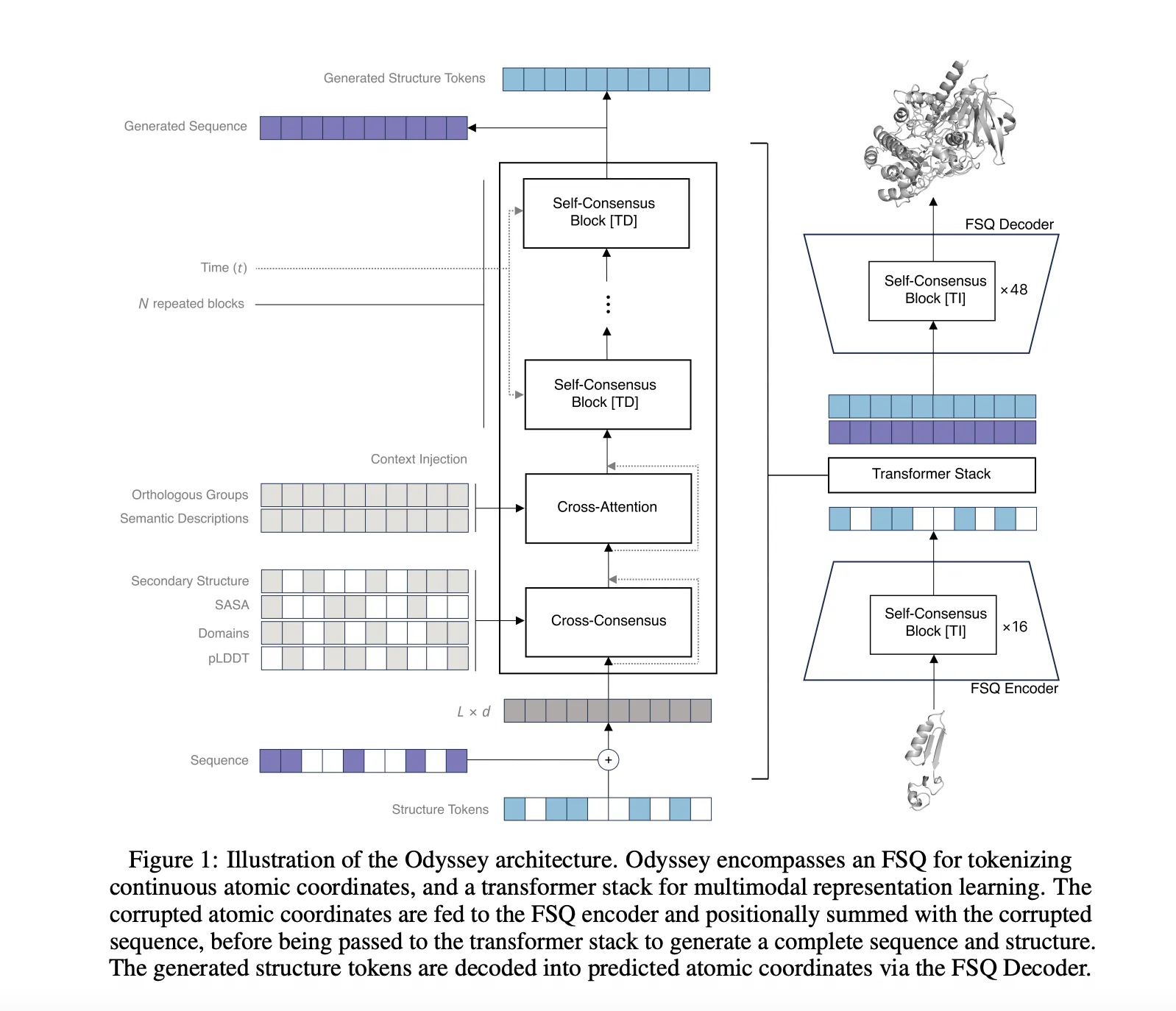

Odyssey is multimodal. It embeds sequence tokens, structure tokens, and lightweight functional cues, then fuses them into a shared representation. For structure, Odyssey uses a finite scalar quantizer (FSQ) to convert 3D geometry into compact tokens. Think of FSQ as an alphabet of shapes that lets the model read structure as easily as sequence. Functional cues can include domain tags, secondary structure hints, homologous group labels, or short text descriptors. This joint view gives the model access to both local sequence patterns and long-range geometric relationships in a single latent space.
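To make the "alphabet of shapes" idea concrete, here is a minimal sketch of finite scalar quantization in plain Python. It assumes an upstream structure encoder (not shown, and not described in the announcement) has already produced a small continuous latent vector per residue. The level configuration `LEVELS = (7, 5, 5, 5)` and the helper names are hypothetical, not Odyssey's actual settings. Each dimension is squashed with tanh, rounded to a fixed integer grid, and the per-dimension codes are packed into a single token id.

```python
import math
from typing import List, Sequence

# Hypothetical level configuration; Odyssey's real FSQ settings are not published here.
# Odd level counts keep each integer grid symmetric around zero.
LEVELS = (7, 5, 5, 5)
VOCAB_SIZE = math.prod(LEVELS)  # implicit codebook: 7 * 5 * 5 * 5 structure tokens


def fsq_quantize(z: Sequence[float], levels: Sequence[int] = LEVELS) -> List[int]:
    """Bound each latent dimension with tanh, then round it to an integer grid."""
    if len(z) != len(levels):
        raise ValueError("latent dimension must match the number of levels")
    codes = []
    for value, n_levels in zip(z, levels):
        half = (n_levels - 1) / 2          # e.g. 7 levels -> grid {-3, ..., 3}
        bounded = math.tanh(value) * half  # squash into the open interval (-half, half)
        codes.append(int(round(bounded)))
    return codes


def fsq_index(codes: Sequence[int], levels: Sequence[int] = LEVELS) -> int:
    """Pack per-dimension codes into one token id (mixed-radix encoding)."""
    index, base = 0, 1
    for code, n_levels in zip(codes, levels):
        half = (n_levels - 1) // 2
        index += (code + half) * base  # shift code to 0..n_levels-1
        base *= n_levels
    return index


def fsq_decode(index: int, levels: Sequence[int] = LEVELS) -> List[int]:
    """Invert fsq_index: recover the per-dimension codes from a token id."""
    codes = []
    for n_levels in levels:
        half = (n_levels - 1) // 2
        codes.append(index % n_levels - half)
        index //= n_levels
    return codes


# Usage: one residue's (hypothetical) latent vector becomes one discrete structure token.
token = fsq_index(fsq_quantize([0.1, -2.0, 0.8, 3.0]))
```

Because the codebook is implicit (its size is simply the product of the level counts), there is no learned codebook to maintain; the structure vocabulary is fixed by the choice of levels, much like a fixed amino acid alphabet.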

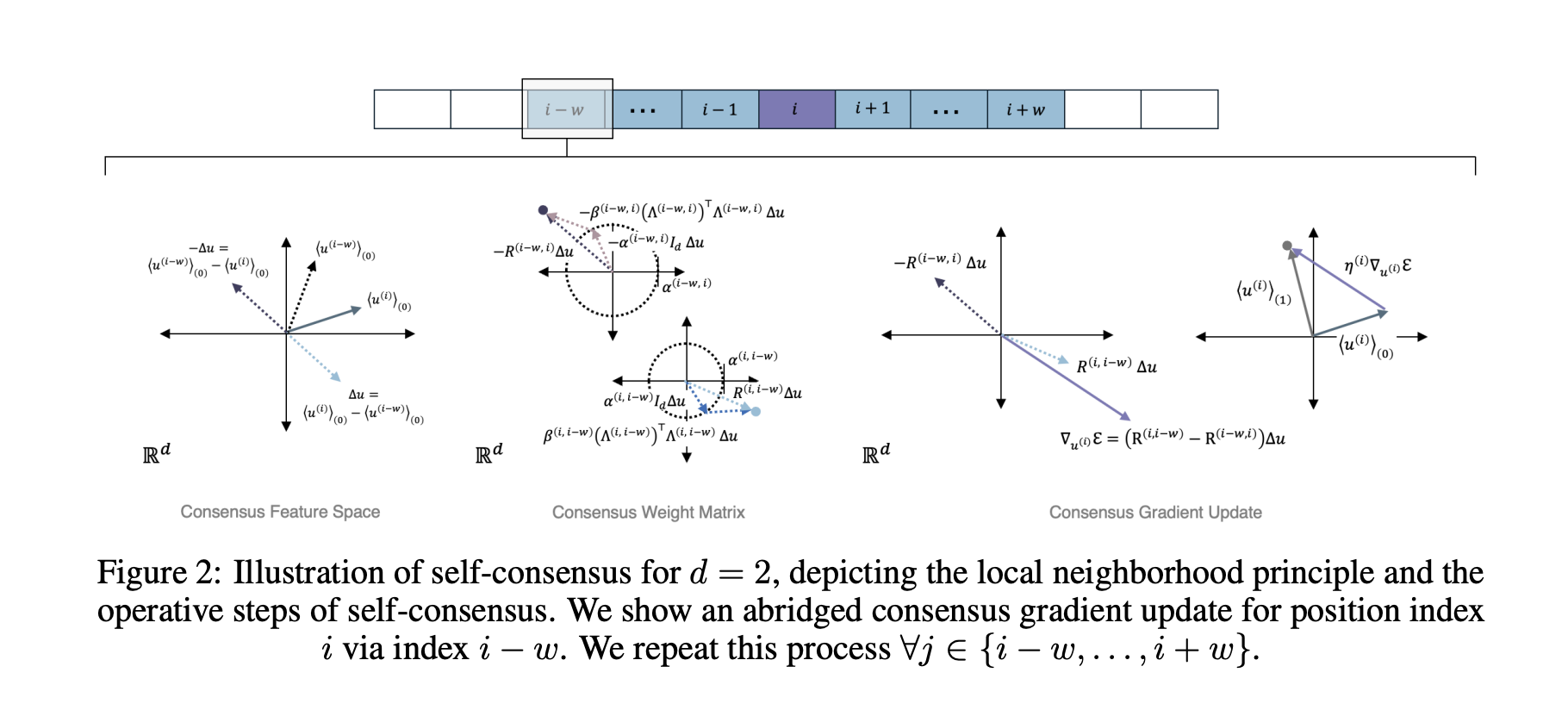

Backbone change: Consensus replaces self-attention

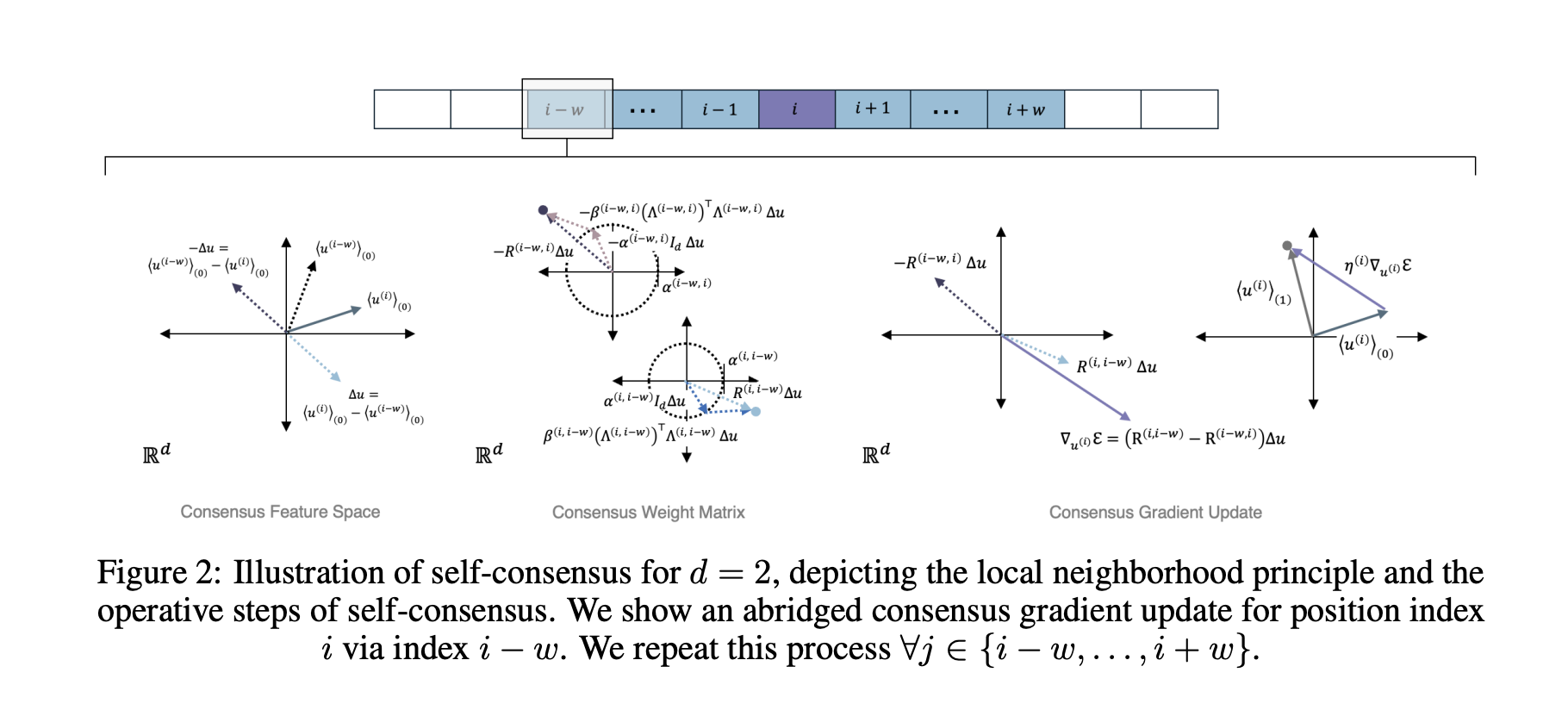

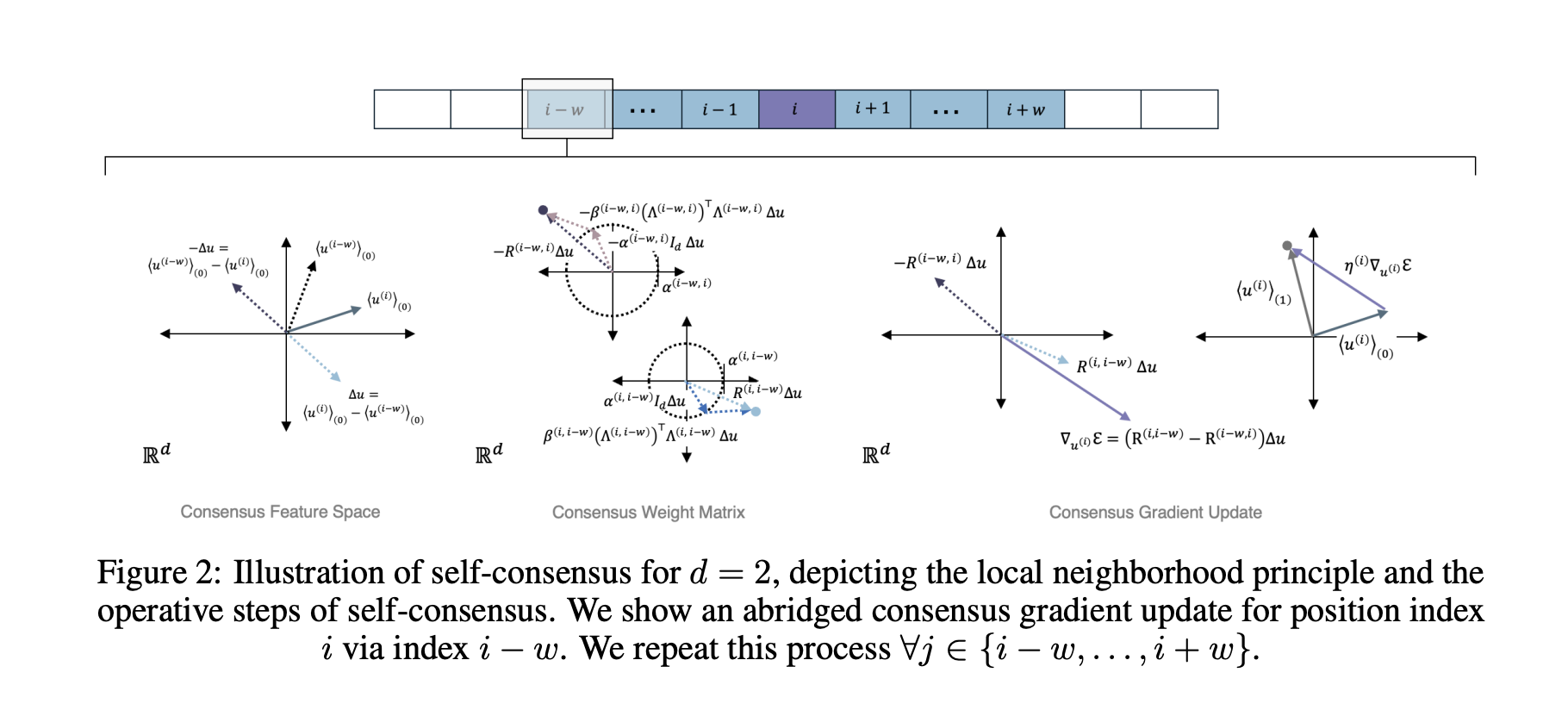

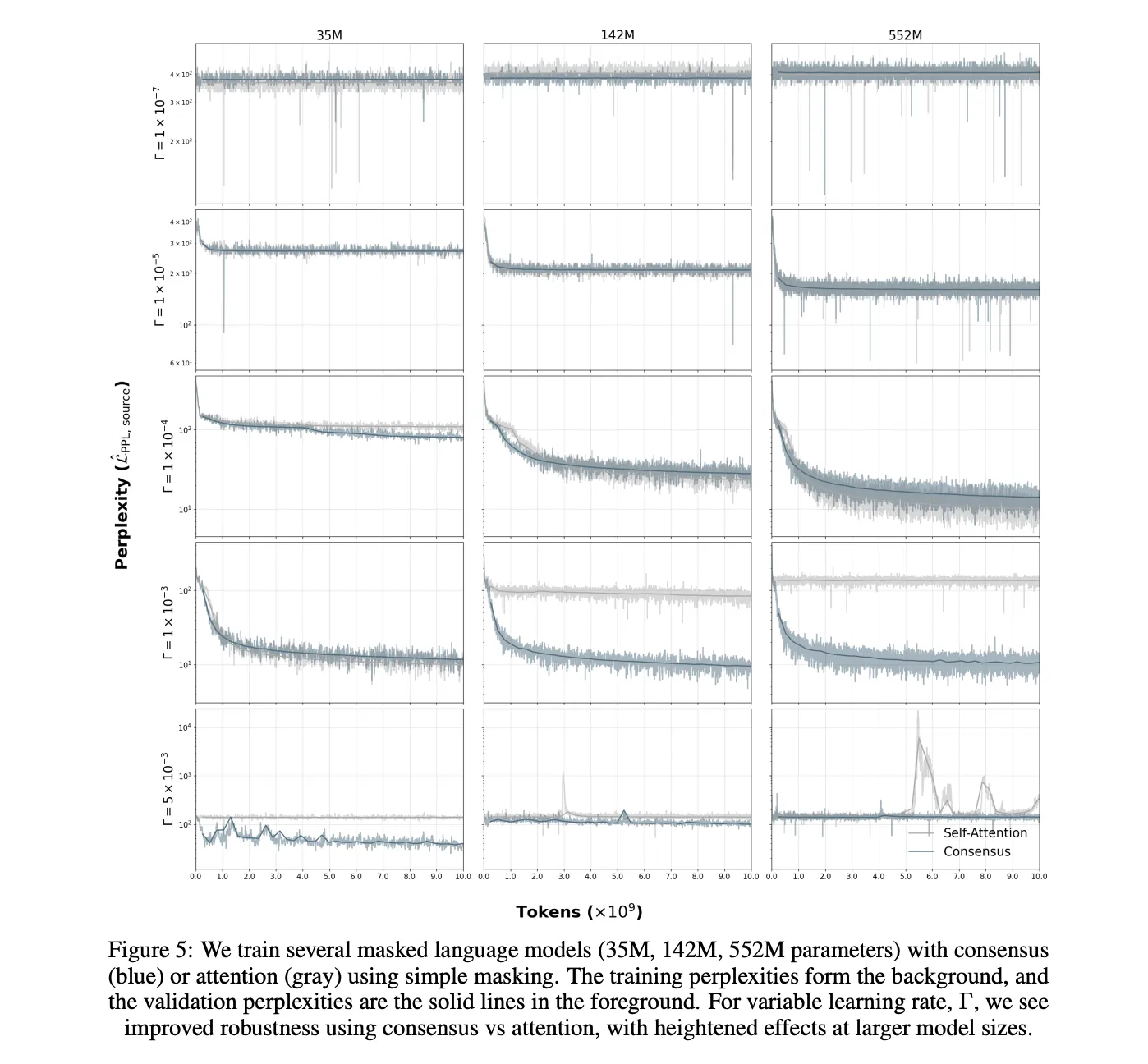

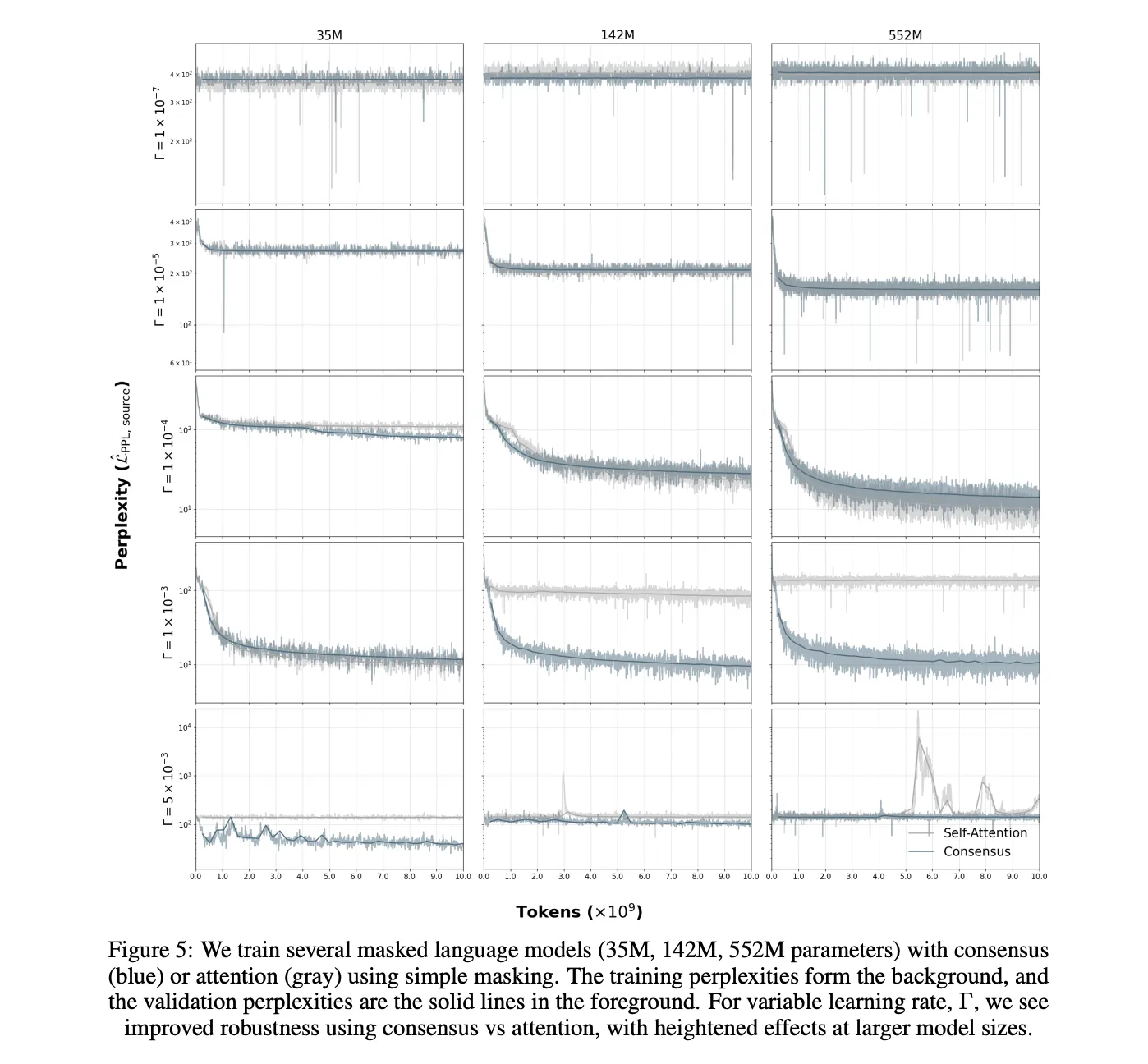

Consensus replaces global self-attention with iterative, locality-aware updates on sparse contact or sequence graphs. Each layer encourages nearby neighborhoods to reach agreement first, then propagates that agreement outward along the chain and across the contact graph. This change also alters the compute profile. Self-attention scales as O(L²) in sequence length L, whereas Anthrogen reports that Consensus scales as O(L), which keeps long sequences and multi-domain structures affordable. The company also reports improved robustness to learning-rate choices at larger scales, which means fewer brittle runs and restarts.
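The announcement does not spell out the exact Consensus update, so the sketch below is only a generic stand-in: a neighborhood-averaging step over a sparse graph, used here to illustrate why local propagation scales linearly. The names `consensus_step`, `chain_graph`, `interaction_count`, and the `mix` parameter are hypothetical and are not Anthrogen's API. Each round touches only a node's neighbors, so its cost is proportional to the number of edges; with a bounded neighborhood size that is O(L), compared with the L × L query-key pairs that full self-attention scores.

```python
from typing import Dict, List

Vector = List[float]
Graph = Dict[int, List[int]]


def consensus_step(states: List[Vector], neighbors: Graph, mix: float = 0.5) -> List[Vector]:
    """One hypothetical local-agreement round: each node moves toward its neighbors' mean."""
    updated = []
    for i, state in enumerate(states):
        nbrs = neighbors.get(i, [])
        if not nbrs:
            updated.append(list(state))  # isolated node keeps its state
            continue
        mean = [sum(states[j][d] for j in nbrs) / len(nbrs) for d in range(len(state))]
        updated.append([(1 - mix) * s + mix * m for s, m in zip(state, mean)])
    return updated


def chain_graph(length: int, window: int = 1) -> Graph:
    """Sequence graph: residue i links to residues within `window` positions of it."""
    return {
        i: [j for j in range(max(0, i - window), min(length, i + window + 1)) if j != i]
        for i in range(length)
    }


def interaction_count(neighbors: Graph) -> int:
    """Number of directed neighbor links visited per round (the per-layer cost)."""
    return sum(len(nbrs) for nbrs in neighbors.values())


# Usage: three residues on a chain pull toward local agreement.
graph = chain_graph(3, window=1)
smoothed = consensus_step([[0.0], [3.0], [6.0]], graph)
```

For a 1,000-residue chain with a window of 2, `interaction_count(chain_graph(1000, window=2))` is 3,994 directed links, versus 1,000,000 pairs for full self-attention. Repeating `consensus_step` k times lets information travel k hops, which is one way local agreement can spread outward; contact-graph edges between residues that are far apart in sequence but close in 3D can be added to the same `neighbors` dict.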

Training objective and generation: discrete diffusion

Odyssey is trained with discrete diffusion on sequence and structure tokens. The forward process applies masking noise that mimics mutation. The reverse-time denoiser learns to reconstruct sequences and coordinates that are consistent with each other. At inference time, the same reverse process supports conditional generation and editing: you can hold a scaffold fixed, pin a motif, mask a loop, add functional tags, and let the model complete the rest while keeping sequence and structure in sync.
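The sketch below illustrates mask-based (absorbing-state) discrete diffusion and how frozen positions enable conditional editing. It is an illustration under stated assumptions: the noise level `t`, the random unmasking order, and the `denoiser` callable are hypothetical placeholders for Odyssey's trained reverse-time model, and only sequence tokens are shown, although the article says training covers structure tokens as well.

```python
import random
from typing import AbstractSet, Callable, List

MASK = "<mask>"


def forward_mask(tokens: List[str], t: float, rng: random.Random,
                 frozen: AbstractSet[int] = frozenset()) -> List[str]:
    """Forward (noising) process: mask each non-frozen token with probability t."""
    return [tok if i in frozen or rng.random() >= t else MASK
            for i, tok in enumerate(tokens)]


def reverse_unmask(tokens: List[str], denoiser: Callable[[List[str]], List[str]],
                   steps: int, rng: random.Random) -> List[str]:
    """Reverse process: commit denoiser predictions for a subset of masked slots per step."""
    current = list(tokens)
    for remaining_steps in range(steps, 0, -1):
        masked = [i for i, tok in enumerate(current) if tok == MASK]
        if not masked:
            break
        # Fill about 1/remaining_steps of the masked slots; the final step fills all of them.
        k = max(1, len(masked) // remaining_steps)
        predictions = denoiser(current)  # hypothetical model call: one token per position
        for i in rng.sample(masked, k):
            current[i] = predictions[i]
    return current


def toy_denoiser(tokens: List[str]) -> List[str]:
    """Placeholder that always predicts glycine; a real model conditions on context."""
    return ["G" if tok == MASK else tok for tok in tokens]


# Usage: pin the first three residues (a stand-in for a fixed motif) and regenerate the rest.
rng = random.Random(0)
noised = forward_mask(list("MKTAYIAKQR"), t=1.0, rng=rng, frozen={0, 1, 2})
designed = reverse_unmask(noised, toy_denoiser, steps=4, rng=rng)
```

Frozen positions are never masked, so the reverse process only rewrites the unconstrained slots; that is the basic mechanism behind holding part of a protein fixed while the model fills in the remainder.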

Anthrogen reports matched comparisons in which diffusion outperforms masked language modeling at evaluation time. According to the announcement, training perplexity for diffusion is lower than for complex masking and lower than or comparable to simple masking. In validation, the diffusion model outperformed its masked counterpart, while the 1.2B masked model tended to overfit its own masking scheme. The company argues that diffusion models the joint distribution of the full protein, which aligns with sequence-plus-structure co-design.
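For readers comparing the perplexity figures above: perplexity is the exponential of the mean negative log-likelihood that a model assigns to the true tokens at the scored positions. Lower is better, and a model guessing uniformly over the 20 standard amino acids scores 20. The article does not say exactly which positions or token types Anthrogen scores, so the helper below (`masked_perplexity`, a hypothetical name) only illustrates the standard definition.

```python
import math
from typing import Sequence


def masked_perplexity(true_token_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-probability of the true token at scored positions)."""
    if not true_token_probs:
        raise ValueError("need at least one scored position")
    if any(p <= 0.0 or p > 1.0 for p in true_token_probs):
        raise ValueError("probabilities must lie in (0, 1]")
    mean_nll = -sum(math.log(p) for p in true_token_probs) / len(true_token_probs)
    return math.exp(mean_nll)


# A uniform guess over 20 amino acids yields perplexity 20 (up to float rounding).
uniform = masked_perplexity([1 / 20] * 50)
```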

Main points

- Odyssey is a multimodal protein model family that fuses sequence, structure, and functional context, with production models at 1.2B, 8B, and 102B parameters.

- Consensus replaces self-attention with locality-aware propagation that scales as O(L) and is reported to be more robust to learning-rate choices at larger scales.

- FSQ converts 3D coordinates into discrete structure tokens for joint sequence and structure modeling.

- Discrete diffusion trains a reverse-time denoiser and, in matched comparisons, outperforms masked language modeling at evaluation time.

- Anthrogen reports roughly 10x greater data efficiency than competing models, which addresses data scarcity in protein modeling.

Odyssey is a notable release because it puts joint sequence and structure modeling into practice with FSQ, Consensus, and discrete diffusion, enabling conditional design and editing under practical constraints. Odyssey scales to 102B parameters while Consensus keeps complexity at O(L), which lowers the cost of long proteins and improves learning-rate robustness. Anthrogen reports that diffusion outperforms masked language modeling in matched evaluations, consistent with co-design goals. The system targets multi-objective design, including potency, specificity, stability, and manufacturability. The research team emphasizes roughly 10x data efficiency relative to competing models, which matters in fields where labeled data is scarce.

Check out the Paper and technical details.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.