Alibaba AI announces Qwen3-Max-Preview: a trillion-parameter Qwen model with ultra-fast speed and quality

Alibaba’s Qwen team has unveiled Qwen3-Max-Preview (Instruct), a new flagship large language model with more than 1 trillion parameters, its largest to date. It is accessible through Qwen Chat, the Alibaba Cloud API, OpenRouter, and as the default model in Hugging Face’s AnyCoder tool.
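For readers who want to call the model programmatically, here is a minimal sketch using only the Python standard library. OpenRouter exposes an OpenAI-style chat-completions endpoint; the model identifier `qwen/qwen3-max` and the `OPENROUTER_API_KEY` environment variable name are assumptions for illustration, so check the provider's documentation for the exact values. Without a key, the script only prints the request body it would send.

```python
import json
import os
import urllib.request

# Assumed values: verify against the provider's documentation before use.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "qwen/qwen3-max"  # hypothetical model identifier


def build_chat_request(prompt: str, max_tokens: int = 1024) -> dict:
    """Build an OpenAI-style chat-completions request body."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(body: dict, api_key: str) -> dict:
    """POST the request body and return the decoded JSON response."""
    req = urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)


if __name__ == "__main__":
    body = build_chat_request("Summarize the benefits of a 262K-token context window.")
    key = os.environ.get("OPENROUTER_API_KEY")  # assumed variable name
    if key:
        reply = send_chat_request(body, key)
        print(reply["choices"][0]["message"]["content"])
    else:
        # No key available: show the payload instead of making a network call.
        print(json.dumps(body, indent=2))
```

Keeping request construction separate from the network call makes the payload easy to inspect or reuse with another OpenAI-compatible endpoint.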

How does it fit into today’s LLM landscape?

This milestone comes as much of the industry moves toward smaller, more efficient models. Alibaba’s decision to scale up instead marks a deliberate strategic choice, underscoring both its technical capabilities and its commitment to trillion-parameter research.

How big is Qwen3-Max, and what are its context limits?

- Parameters: more than 1 trillion.

- Context window: up to 262,144 tokens (at most 258,048 input and 32,768 output tokens).

- Efficiency feature: includes context caching to speed up multi-turn sessions.
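The limits above can be turned into a quick pre-flight check before sending a request. This is a minimal sketch: it assumes the input and output caps apply separately and that their sum must also stay within the 262,144-token window, which is our reading of the figures rather than a documented rule. Token counts must come from the model's own tokenizer, and the function names are hypothetical.

```python
# Limits as stated for Qwen3-Max-Preview.
CONTEXT_WINDOW = 262_144
MAX_INPUT_TOKENS = 258_048
MAX_OUTPUT_TOKENS = 32_768


def max_output_budget(input_tokens: int) -> int:
    """Largest output allowance under the assumed rule (both caps plus total window)."""
    if not 0 <= input_tokens <= MAX_INPUT_TOKENS:
        raise ValueError(f"input must be between 0 and {MAX_INPUT_TOKENS} tokens")
    return min(MAX_OUTPUT_TOKENS, CONTEXT_WINDOW - input_tokens)


def fits(input_tokens: int, output_tokens: int) -> bool:
    """True if a request with these token counts stays inside the assumed limits."""
    if input_tokens < 0 or output_tokens < 0:
        return False
    if input_tokens > MAX_INPUT_TOKENS:
        return False
    return output_tokens <= max_output_budget(input_tokens)
```

Under this reading, a 100,000-token prompt still gets the full 32,768-token output allowance, while a prompt at the 258,048-token input cap leaves only 4,096 tokens for the reply.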

How does Qwen3-Max perform against other models?

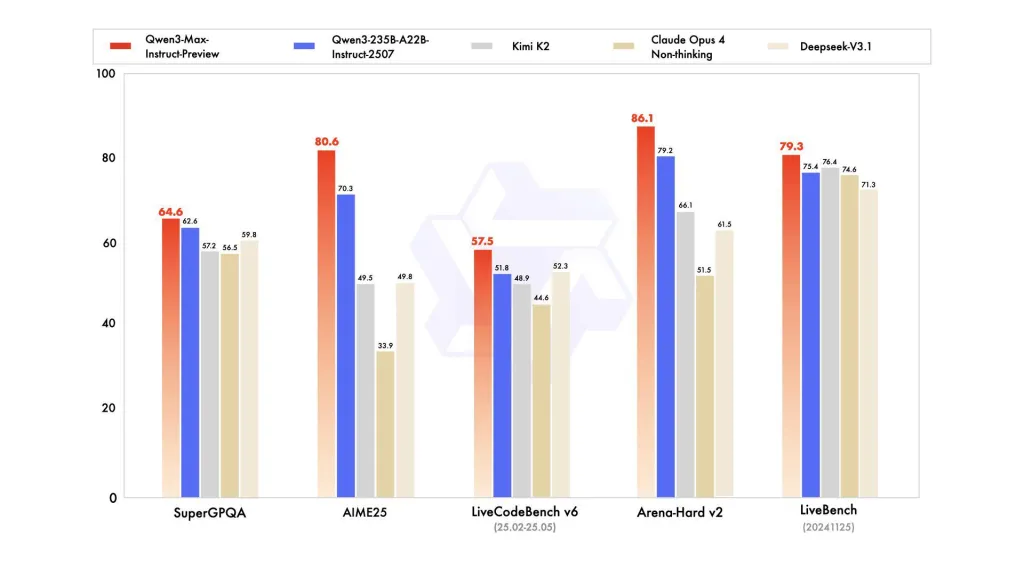

Benchmarks show that it outperforms Qwen3-235B-A22B-2507 and competes with Claude Opus 4, Kimi K2, and DeepSeek-V3.1 on AIME25, LiveCodeBench v6, Arena-Hard v2, and LiveBench.

What is the pricing structure?

Alibaba Cloud applies token-based tiered pricing:

- 0–32K tokens: $0.861/million input, $3.441/million output

- 32K–128K tokens: $1.434/million input, $5.735/million output

- 128K–252K tokens: $2.151/million input, $8.602/million output

The model is cost-effective for smaller tasks, but costs rise sharply for long-context workloads.
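To make the tiers concrete, here is a small cost estimator. It assumes, without confirmation from the source, that the tier is selected by the request's input length, that the whole request is billed at that tier's rates, and that "K" means 1,024 tokens (so the top tier ends at 258,048 tokens, matching the input limit). The 32K–128K output rate is read as $5.735 per million; `PriceTier` and `request_cost_usd` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PriceTier:
    max_input_tokens: int    # inclusive upper bound of the tier, by input length
    input_usd_per_m: float   # USD per 1M input tokens
    output_usd_per_m: float  # USD per 1M output tokens


# Rates from the pricing list; boundaries assume K = 1,024 tokens.
TIERS = [
    PriceTier(32 * 1024, 0.861, 3.441),
    PriceTier(128 * 1024, 1.434, 5.735),
    PriceTier(252 * 1024, 2.151, 8.602),
]


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate one request's cost under the assumed tiering rule."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    for tier in TIERS:
        if input_tokens <= tier.max_input_tokens:
            return (input_tokens * tier.input_usd_per_m
                    + output_tokens * tier.output_usd_per_m) / 1_000_000
    raise ValueError("input exceeds the largest pricing tier")
```

Under these assumptions, a 10,000-token prompt with a 2,000-token reply costs about $0.0155, while a 200,000-token prompt with a 4,000-token reply costs about $0.46, roughly 30 times as much.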

How does the closed-source approach affect adoption?

Unlike earlier Qwen releases, this model is not open-weight. Access is limited to the API and partner platforms. This choice highlights Alibaba’s commercialization priorities but may slow research and broader adoption in the open-source community.

Key Points

- First trillion-parameter Qwen model – Qwen3-Max exceeds 1T parameters, making it Alibaba’s largest and most advanced LLM to date.

- Ultra-long context handling – Supports 262K tokens with context caching, going beyond most commercial models and enabling extended document and session processing.

- Competitive benchmark performance – Outperforms Qwen3-235B and competes with Claude Opus 4, Kimi K2, and DeepSeek-V3.1 on reasoning, coding, and general tasks.

- Emergent reasoning despite design – Although not marketed as a reasoning model, early results show structured reasoning capabilities on complex tasks.

- Closed-source, tiered pricing – Available through the API with token-based pricing; economical for small tasks but costly, and therefore less accessible, for high-context usage.

Summary

Qwen3-Max-Preview sets a new scale benchmark for commercial LLMs. Its trillion-parameter design, 262K context length, and strong benchmark results highlight Alibaba’s technical depth. However, its closed-source release and steep tiered pricing raise concerns about wider accessibility.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.