AI causes trouble in scientific peer review

Scientific publishing faces an increasingly pressing question: how should it deal with AI in peer review?

Ecologist Timothée Poisot recently received reviewer comments that appeared to have been generated by ChatGPT. The document contained a telltale line: “Here is a revised version of your review with improved clarity and structure.”

Poisot was irritated. “I submit a manuscript for review in the hope of getting comments from my peers,” he wrote in a blog post. “If this assumption is not met, the entire social contract of peer review is gone.”

Poisot’s experience is not an isolated case. According to a recent study reported by Nature, up to 17% of peer reviews for AI conference papers in 2023-24 showed signs of substantial modification by language models.

In a separate Nature survey, nearly one in five researchers admitted to using AI to speed up peer review.

We have also seen some absurd examples of what happens when AI-generated content slips through a peer review process meant to safeguard research quality.

In 2024, a paper published in a Frontiers journal exploring a highly complex cellular signaling pathway was found to contain bizarre figures generated by the AI art tool Midjourney.

One image depicted a grotesquely deformed rodent, while the others were little more than random swirls and squiggles labeled with nonsense text.

Commenters on Twitter were stunned that such obviously flawed figures had made it through peer review. “Erm, how did Figure 1 get past a peer reviewer?!” one asked.

Essentially, there are two risks: a) peer reviewers using AI to review content, and b) AI-generated content slipping through the entire peer review process.

Publishers are responding. Elsevier bans the use of generative AI in peer review. Wiley and Springer Nature allow “limited use” with disclosure. Some, such as the American Institute of Physics, are cautiously piloting AI tools to supplement (but not replace) human feedback.

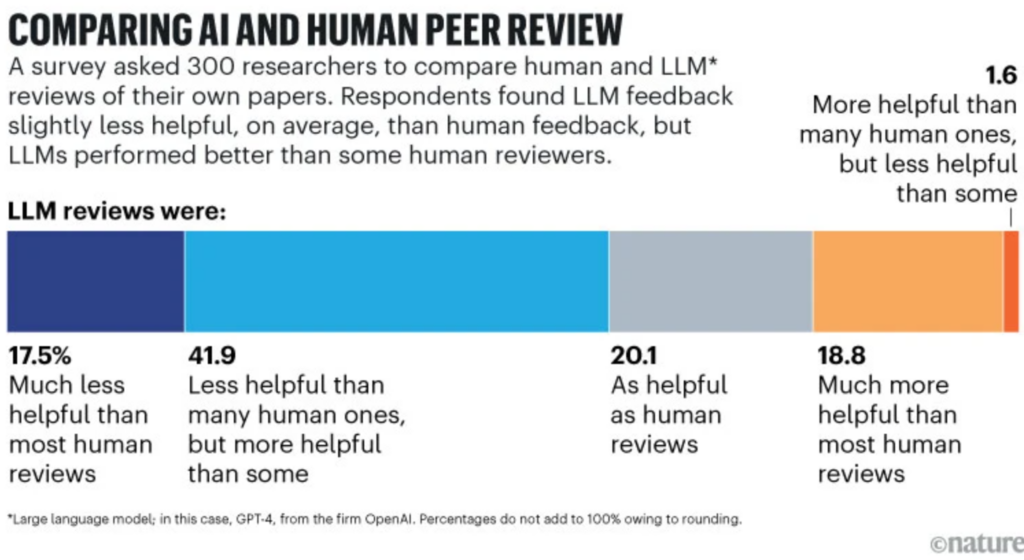

Still, generative AI has real appeal, and some see benefits if it is used wisely. A Stanford University study found that 40% of scientists considered AI-generated reviews of their work to be as helpful as human reviews, and 20% found them more helpful.

Even so, academia has revolved around human input for millennia, so resistance runs deep. “Not fighting automated reviews means we have given up,” Poisot wrote.

For many, the whole point of peer review is feedback from experts, not an algorithmic rubber stamp.