The Mixture of Agents (MOA) architecture is a transformative approach to enhancing the performance of large language models (LLMs), especially on complex, open-ended tasks where a single model can struggle with precision, reasoning, or domain specificity.

How the Mixture of Agents architecture works

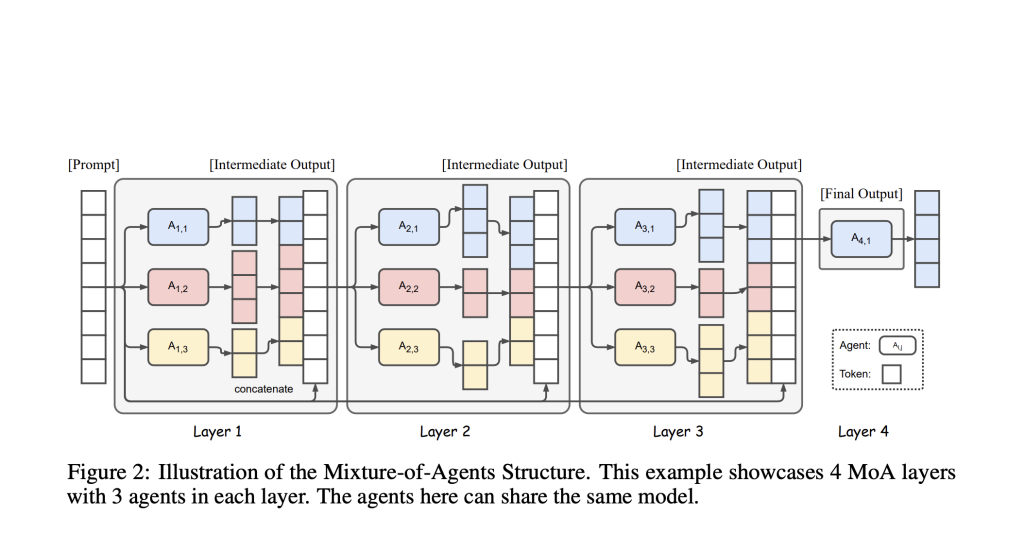

- Layered structure: The MOA framework arranges multiple specialized LLM agents into layers. Each agent in a layer receives all outputs from the agents in the previous layer as context for its own response, which leads to richer, better-informed output.

- Agent specialization: Each agent can be tailored or fine-tuned for a specific field or type of problem (e.g., law, medicine, finance, coding), much like a team of experts in which each specialist contributes unique insights.

- Collaborative information synthesis: The process starts by distributing the prompt to each “proposer” agent, which provides a candidate answer. Their collective output is then aggregated, refined, and synthesized through subsequent layers (by “aggregator” agents), gradually producing a single, comprehensive, high-quality result.

- Iterative refinement: By passing responses through multiple layers, the system progressively improves reasoning depth, consistency, and accuracy, much as a panel of human experts reviews and strengthens proposals.
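The layered flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: each agent is modeled as a plain function from prompt to response (in practice it would wrap a call to an LLM), and the helper names `build_prompt` and `mixture_of_agents`, as well as the wording of the synthesis prompt, are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical type: an "agent" is any function from a prompt to a response.
# In a real system each one would wrap a call to a (possibly different) LLM.
Agent = Callable[[str], str]


def build_prompt(user_prompt: str, previous: List[str]) -> str:
    """Attach every response from the previous layer to the user's prompt."""
    if not previous:
        return user_prompt  # first layer: proposers see only the raw prompt
    refs = "\n".join(f"{i}. {resp}" for i, resp in enumerate(previous, start=1))
    return (
        "Critically synthesize these candidate responses into one better answer.\n"
        f"Candidate responses:\n{refs}\n\n"
        f"Question: {user_prompt}"
    )


def mixture_of_agents(user_prompt: str, layers: List[List[Agent]], aggregator: Agent) -> str:
    """Run the prompt through each layer, then let one aggregator produce the final answer."""
    previous: List[str] = []
    for layer in layers:
        prompt = build_prompt(user_prompt, previous)
        # Each agent in this layer receives all outputs of the previous layer.
        previous = [agent(prompt) for agent in layer]
    # The final aggregator condenses the last layer's outputs into a single result.
    return aggregator(build_prompt(user_prompt, previous))


# --- Usage with stub agents (no real LLM calls) ---
seen: Dict[str, str] = {}  # records the prompt each stub received, for inspection


def make_stub(name: str) -> Agent:
    """Hypothetical stand-in for an LLM: remembers its prompt and returns a fixed answer."""
    def agent(prompt: str) -> str:
        seen[name] = prompt
        return f"answer from {name}"
    return agent


layers = [
    [make_stub("law"), make_stub("medicine"), make_stub("finance")],  # proposers
    [make_stub("refiner-1"), make_stub("refiner-2")],                 # intermediate layer
]
final = mixture_of_agents("Summarize the risks in this contract.", layers, make_stub("aggregator"))
print(final)  # answer from aggregator
```

Because every agent is just a callable, the stubs can be swapped for real model clients, and layers can be added or removed without changing the control flow.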

Why is MOA better than a single LLM?

- Higher performance: MOA systems have recently outperformed leading individual models such as GPT-4 Omni (GPT-4o) on competitive LLM evaluation benchmarks. On AlpacaEval 2.0, for example, an MOA built only from open-source LLMs achieved a 65.1% win rate, compared with 57.5% for GPT-4 Omni.

- Better handling of complex, multi-step tasks: Delegating subtasks to agents with domain-specific expertise lets the system respond with nuance and reliability even to complex requests. This addresses a key limitation of “jack-of-all-trades” models.

- Scalability and adaptability: New agents can be added, or existing ones retrained, to meet emerging needs, making the system more agile than updating a monolithic model each time.

- Fewer errors: By giving each agent a narrower focus and using orchestration to coordinate their outputs, the MOA architecture reduces the likelihood of errors and misunderstandings, improving reliability and interpretability.
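The article does not say how subtasks get delegated to specialists. One simple possibility, sketched here purely as an assumption, is a keyword-based router: the `ROUTES` table, the `route` and `delegate` functions, and the domain names are all hypothetical, and a real orchestrator could instead use an LLM or a trained classifier to choose the specialist.

```python
from typing import Callable, Dict, List

# Hypothetical type: an agent maps a subtask description to an answer.
Agent = Callable[[str], str]

# Hypothetical keyword table mapping each specialist to the vocabulary it handles.
ROUTES: Dict[str, List[str]] = {
    "law": ["contract", "liability", "clause"],
    "medicine": ["diagnosis", "symptom", "dosage"],
    "finance": ["revenue", "tax", "portfolio"],
}


def route(subtask: str) -> str:
    """Return the specialist with the most keyword hits, or 'general' if none match."""
    text = subtask.lower()
    scores = {domain: sum(kw in text for kw in keywords) for domain, keywords in ROUTES.items()}
    best = max(scores, key=lambda domain: scores[domain])
    return best if scores[best] > 0 else "general"


def delegate(subtasks: List[str], agents: Dict[str, Agent]) -> Dict[str, str]:
    """Send each subtask to its chosen specialist and collect the answers for an aggregator."""
    return {task: agents[route(task)](task) for task in subtasks}


# --- Usage with stub specialists (no real LLM calls) ---
agents: Dict[str, Agent] = {
    name: (lambda task, n=name: f"{n} view on: {task}") for name in [*ROUTES, "general"]
}
answers = delegate(
    ["Check the liability clause", "Estimate the tax impact", "Write a summary"], agents
)
for answer in answers.values():
    print(answer)
```

Here the liability question goes to `law`, the tax question to `finance`, and the unmatched request falls back to `general`. Ties are resolved in favor of the domain listed first in `ROUTES`, since `max` returns the first maximal key it encounters.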

Real-world analogies and applications

Imagine a medical diagnosis: one agent specializes in radiology, another in genomics, and a third in treatment. Each reviews the patient’s case from its own perspective. Their conclusions are integrated and weighted, and a higher-level aggregator assembles the best treatment recommendation. This approach is now being adapted across AI applications, from scientific analysis to financial planning, legal work, and complex document generation.

Key Points

- Collective intelligence for holistic AI: The MOA architecture harnesses the collective strengths of specialized agents to produce results that exceed those of a single generalist model.

- SOTA results and an open research frontier: The best MOA systems are setting state-of-the-art results on industry benchmarks and are the focus of active research pushing the frontier of AI capabilities.

- Transformative potential: From mission-critical enterprise applications to research assistants and domain-specific automation, MOA is reshaping what AI agents can do.

In short, combining specialized AI agents with domain-specific expertise through the MOA architecture can produce more reliable, nuanced, and accurate output than any single LLM, especially for complex, multidimensional tasks.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, demonstrating its popularity among readers.