A guide to effective context engineering for AI agents

Anthropic recently published a guide to effective context engineering for AI agents, a reminder that context is a critical but limited resource. The quality of an agent often depends less on the model itself and more on how its context is structured and managed. Even a weaker LLM can perform well given the right context, but no state-of-the-art model can make up for poor context.

Production-grade AI systems need more than good prompts. They need structure: a complete context ecosystem that shapes reasoning, memory, and decision-making. Modern agent architectures now treat context not as a line in a prompt but as a core design layer.

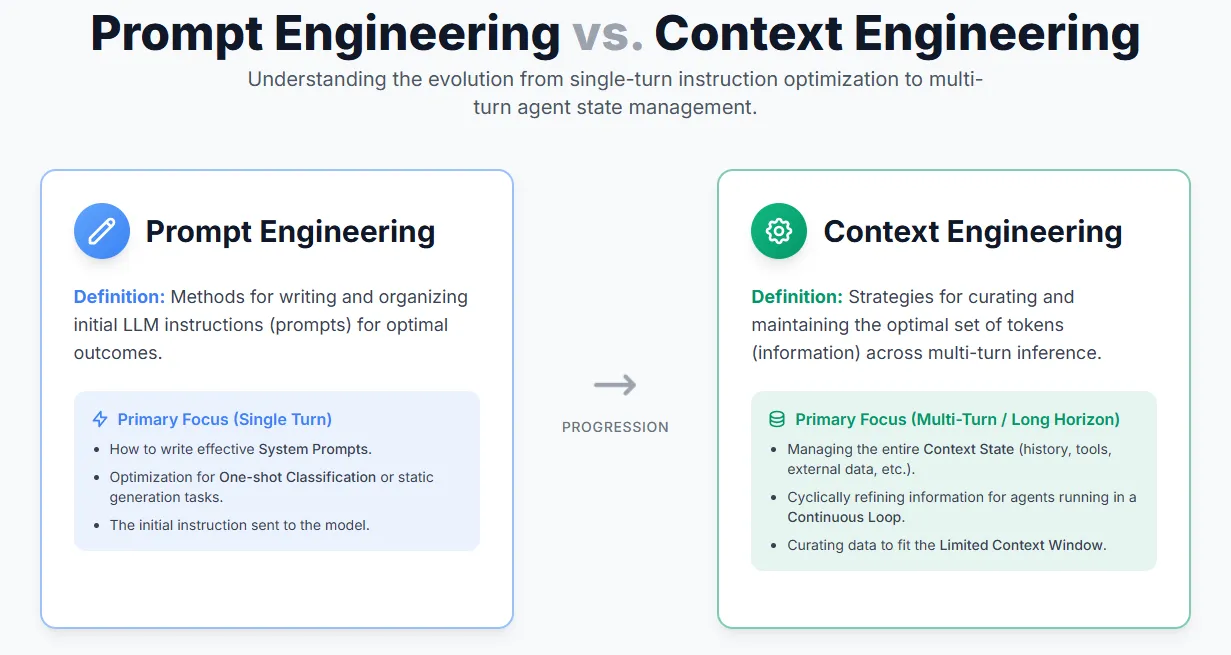

The difference between context engineering and prompt engineering

Prompt engineering focuses on writing effective instructions to guide an LLM's behavior: essentially, how to write and structure prompts for optimal output.

Context engineering, on the other hand, goes beyond prompts. It is about managing the entire set of information the model sees during inference, including system messages, tool outputs, memory, external data, and message history. As AI agents evolve to handle multiple turns of inference and longer-horizon tasks, context engineering becomes a key discipline for curating and maintaining what truly matters within the model's limited context window.

Why is context engineering important?

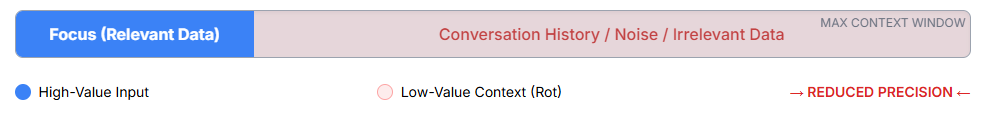

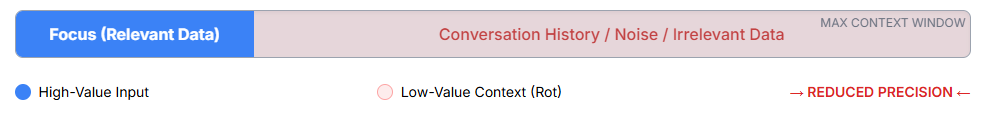

LLMs, like humans, have a limited attention budget: the more information they are given, the harder it is for them to stay focused and recall details accurately. This phenomenon, known as context rot, means that simply enlarging the context window does not guarantee better performance.

Because LLMs are built on the Transformer architecture, every token attends to every other token, so a context of n tokens involves n² pairwise relationships. As the context grows, the model's attention is stretched thin, so long contexts can reduce accuracy and weaken long-range reasoning.
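A quick back-of-the-envelope calculation makes the n² growth concrete. The sketch below only counts the query-key pairs scored by full self-attention; it ignores causal masking (which roughly halves the count) and every other implementation detail, so treat it as an illustration of scaling rather than a cost model.

```python
def attention_pairs(n_tokens: int) -> int:
    """Number of query-key pairs scored by full (unmasked) self-attention."""
    return n_tokens * n_tokens


for n in (1_000, 10_000, 100_000):
    # A 10x longer context means 100x more pairwise relationships.
    print(f"{n:>7,} tokens -> {attention_pairs(n):>17,} pairs")
```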

This is why context engineering is crucial: it ensures that an agent’s limited context contains only the most relevant and useful information, allowing it to reason effectively and stay focused even during complex multi-turn tasks.

What makes context effective?

Good context engineering means fitting the right information (not the most information) into the model’s limited attention window. The goal is to maximize the useful signal while minimizing the noise.
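One way to picture "maximize signal, minimize noise" is a greedy packer that ranks candidate snippets by relevance and adds them until a token budget is spent. This is a minimal sketch under stated assumptions: the relevance scores are supposed to come from elsewhere (for example a retriever), token counts are approximated by whitespace-separated words instead of a real tokenizer, and `Snippet` and `pack_context` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    text: str
    relevance: float  # assumed to be supplied by a retriever or heuristic


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def pack_context(snippets: list[Snippet], budget: int) -> list[Snippet]:
    """Greedily keep the most relevant snippets that still fit in the token budget."""
    chosen: list[Snippet] = []
    used = 0
    for snippet in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = approx_tokens(snippet.text)
        if used + cost <= budget:
            chosen.append(snippet)
            used += cost
    return chosen


candidates = [
    Snippet("refund policy allows returns within 30 days", 0.9),
    Snippet("company picnic is on friday", 0.1),
    Snippet("refunds are issued to the original payment method", 0.8),
]
context = pack_context(candidates, budget=15)  # the low-relevance picnic note is left out
```

Greedy selection is not an optimal knapsack solution, but it is simple and keeps the highest-signal material first, which is usually what matters.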

Here’s how to design effective context across its key components:

System prompt

- Keep them clear, specific, and minimal: enough to define the desired behavior, but not so rigid that they become brittle.

- Avoid two extremes:

  - Overly complex hard-coded logic (too brittle)

  - Vague high-level instructions (too broad)

- Use structured sections (e.g., XML-style tags or Markdown headers such as ## Output format) to improve readability and modularity.

- Start with a minimal version and iterate based on test results.
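To illustrate structured sections, the sketch below assembles a system prompt from named parts, wrapping some in XML-style tags and putting the output format under a Markdown header. The helper `build_system_prompt` and the section names and contents are hypothetical examples, not a required schema; starting this small and adding sections only when tests show a need matches the "minimal first" advice.

```python
def build_system_prompt(sections: dict[str, str], output_format: str) -> str:
    """Join named sections (as XML-style tags) plus a Markdown output-format header."""
    parts = [f"<{name}>\n{body.strip()}\n</{name}>" for name, body in sections.items()]
    parts.append(f"## Output format\n{output_format.strip()}")
    return "\n\n".join(parts)


prompt = build_system_prompt(
    {
        # Hypothetical section names chosen for illustration.
        "background_information": "You are a support agent for an online bookstore.",
        "instructions": "Answer order questions. Ask for the order ID if it is missing.",
    },
    output_format="Reply in at most three sentences.",
)
print(prompt)
```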

Tools

- Tools serve as the interface between the agent and its environment.

- Build small, distinct, and efficient tools; avoid bloated or overlapping functionality.

- Make sure the input parameters are clear, descriptive and unambiguous.

- Fewer, well-designed tools result in more reliable agent behavior and easier maintenance.
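To make "clear, descriptive, unambiguous parameters" concrete, here is a minimal sketch of a tool definition in which each parameter carries a human-readable description and calls with unknown or missing arguments are rejected with a readable error before anything runs. The `Tool` class and the `search_orders` tool are hypothetical and not tied to any particular agent framework's API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    params: dict[str, str]  # parameter name -> human-readable description
    fn: Callable[..., Any]
    required: set[str] = field(default_factory=set)

    def call(self, **kwargs: Any) -> Any:
        unknown = set(kwargs) - set(self.params)
        missing = self.required - set(kwargs)
        if unknown or missing:
            # Return an explicit, model-readable error instead of guessing.
            return f"error: unknown={sorted(unknown)} missing={sorted(missing)}"
        return self.fn(**kwargs)


def search_orders(customer_id: str, status: str = "any") -> list[str]:
    # Hypothetical backend lookup, stubbed for the example.
    return [f"order-1 for {customer_id} ({status})"]


search_tool = Tool(
    name="search_orders",
    description="Find a customer's orders, optionally filtered by status.",
    params={
        "customer_id": "Exact customer ID, e.g. 'C-1042'.",
        "status": "One of 'open', 'shipped', 'any'. Defaults to 'any'.",
    },
    fn=search_orders,
    required={"customer_id"},
)
```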

Examples (few-shot prompting)

- Use diverse, representative examples rather than an exhaustive list.

- Focus on showing patterns rather than explaining every rule.

- Include both good and bad examples to illustrate behavioral boundaries.
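A small sketch of how a handful of curated good and bad examples might be rendered into a prompt block. The `[GOOD]`/`[BAD]` labels, the `<examples>` wrapper, and the helper names are assumptions for illustration; the point is a few diverse cases that mark the boundary instead of a long list of rules.

```python
from typing import NamedTuple


class Example(NamedTuple):
    user: str
    reply: str
    good: bool  # True = desired behavior, False = behavior to avoid


def render_examples(examples: list[Example]) -> str:
    """Format labeled examples as one block that can be placed in a prompt."""
    blocks = []
    for ex in examples:
        label = "GOOD" if ex.good else "BAD"
        blocks.append(f"[{label}]\nUser: {ex.user}\nAssistant: {ex.reply}")
    return "<examples>\n" + "\n\n".join(blocks) + "\n</examples>"


block = render_examples([
    Example("Where is my order?", "Could you share your order ID so I can check?", True),
    Example("Where is my order?", "It will arrive tomorrow.", False),  # invents facts
    Example("Cancel order 88.", "Sure, I'll cancel order 88 for you now.", True),
])
print(block)
```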

Knowledge

- Provide domain-specific information – APIs, workflows, data models, etc.

- Helps the model move from text prediction to decision-making.

Memory

- Provides agents with continuity and visibility into past operations.

- Short-term memory: reasoning steps, chat history

- Long-term memory: company data, user preferences, learned facts
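A minimal sketch of the short-term/long-term split: a bounded buffer for recent turns and a key-value store for durable facts, both rendered into the context each turn. `AgentMemory` and its methods are hypothetical; a production system would typically back long-term memory with a database or vector store rather than an in-process dict.

```python
from collections import deque


class AgentMemory:
    def __init__(self, short_term_limit: int = 6):
        # Short-term: only the most recent turns are kept; older ones fall off.
        self.short_term: deque[str] = deque(maxlen=short_term_limit)
        # Long-term: durable facts such as user preferences.
        self.long_term: dict[str, str] = {}

    def add_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def render(self) -> str:
        """Build the memory portion of the context for the next model call."""
        facts = "\n".join(f"- {k}: {v}" for k, v in sorted(self.long_term.items()))
        recent = "\n".join(self.short_term)
        return f"Known facts:\n{facts}\n\nRecent conversation:\n{recent}"


mem = AgentMemory(short_term_limit=2)
mem.remember("preferred_language", "Python")
for turn in ["user: hi", "agent: hello", "user: show me an example"]:
    mem.add_turn(turn)
print(mem.render())
```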

Tool results

- Feed tool outputs back into the model to enable self-correction and dynamic reasoning.
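A sketch of that feedback loop: every tool result, including errors, is appended to the message history so the next model step can see it and correct course. `fake_model` is a hypothetical stand-in for a real LLM call that simply fixes its argument after reading an error; the message format is also an assumption.

```python
import json


def divide(a: float, b: float) -> float:
    return a / b


def run_tool(name: str, args: dict) -> str:
    """Execute a tool and always return a string the model can read, even on failure."""
    tools = {"divide": divide}
    try:
        return json.dumps({"ok": True, "result": tools[name](**args)})
    except Exception as exc:  # surface the error to the model instead of crashing the loop
        return json.dumps({"ok": False, "error": f"{type(exc).__name__}: {exc}"})


def fake_model(history: list[dict]) -> dict:
    # Hypothetical stand-in for an LLM: the first attempt divides by zero,
    # then it reads the error in the last tool message and fixes the argument.
    last = history[-1]
    if last["role"] == "tool" and not json.loads(last["content"])["ok"]:
        return {"tool": "divide", "args": {"a": 10, "b": 2}}
    return {"tool": "divide", "args": {"a": 10, "b": 0}}


history = [{"role": "user", "content": "Divide 10 by the number of teams (2)."}]
for _ in range(2):
    call = fake_model(history)
    history.append({"role": "tool", "content": run_tool(call["tool"], call["args"])})
print(history[-1]["content"])
```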

Context engineering workflows for agents

Dynamic context retrieval (the “just-in-time” shift)

- Just-in-time strategy: agents shift from static, preloaded data (traditional RAG) to autonomous, dynamic context management.

- Runtime retrieval: the agent keeps lightweight references (e.g., file paths, stored queries, links) and uses tools to load only the most relevant data at the moment it is needed for inference.

- Efficiency: this approach improves memory efficiency and flexibility, mirroring how humans rely on external organizational systems such as file systems and bookmarks.

- Hybrid retrieval: complex systems like Claude Code combine just-in-time retrieval with some preloaded static data to balance speed and versatility.

- Engineering challenges: this requires careful tool design and thoughtful engineering to keep agents from misusing tools, chasing dead ends, or wasting context.
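A just-in-time sketch: the context holds only lightweight references (file paths), and file contents are loaded on demand, capped at a character limit, when a path looks relevant to the current step. Matching on file names, the character cap, and the helper names are simplifying assumptions; a real agent would decide what to open through its own search or glob tool calls.

```python
import tempfile
from pathlib import Path


def list_references(root: str, pattern: str = "*.md") -> list[str]:
    """Cheap metadata only: file paths go into context, not file contents."""
    return sorted(str(p) for p in Path(root).rglob(pattern))


def load_on_demand(paths: list[str], keyword: str, max_chars: int = 2000) -> dict[str, str]:
    """Read only the files whose names mention the keyword, truncated to max_chars."""
    loaded = {}
    for path in paths:
        if keyword.lower() in Path(path).name.lower():
            loaded[path] = Path(path).read_text(encoding="utf-8")[:max_chars]
    return loaded


with tempfile.TemporaryDirectory() as workdir:
    Path(workdir, "billing_api.md").write_text("POST /refunds issues a refund.", encoding="utf-8")
    Path(workdir, "onboarding.md").write_text("Welcome to the team!", encoding="utf-8")
    refs = list_references(workdir)            # both paths, no contents yet
    context = load_on_demand(refs, "billing")  # only billing_api.md is actually read
```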

Long-horizon context maintenance

These techniques are essential for maintaining coherence and goal-directed behavior in long-running tasks whose history exceeds the LLM's limited context window.

Compaction (distillation):

- Preserve conversation flow and key details when the context buffer is full.

- Summarize older message history and start a fresh context from that summary, often discarding redundant data such as old raw tool results.
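A minimal compaction sketch: once the estimated token count passes a threshold, older messages are replaced by a single summary message and only the most recent turns are kept verbatim; raw tool outputs in the compacted portion are dropped before summarizing. `summarize` is a hypothetical placeholder for an LLM summarization call, and token counting is again approximated by word count.

```python
def approx_tokens(text: str) -> int:
    return len(text.split())  # crude stand-in for a real tokenizer


def summarize(messages: list[dict]) -> str:
    # Hypothetical placeholder: a real system would ask the model for a summary.
    return "Summary of earlier work: " + "; ".join(m["content"][:40] for m in messages)


def compact(history: list[dict], max_tokens: int, keep_recent: int = 4) -> list[dict]:
    """Replace older messages with one summary once the history exceeds max_tokens."""
    total = sum(approx_tokens(m["content"]) for m in history)
    if total <= max_tokens or len(history) <= keep_recent:
        return history  # still fits: nothing to do
    old, recent = history[:-keep_recent], history[-keep_recent:]
    # Raw tool output is usually the most redundant part of old history.
    old = [m for m in old if m["role"] != "tool"]
    return [{"role": "system", "content": summarize(old)}] + recent
```

Keeping the last few turns verbatim preserves the immediate conversational flow, while the summary carries the decisions and details from earlier.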

Structured notes (external storage):

- Provides persistent memory with minimal context overhead.

- The agent autonomously writes persistent external notes (for example, to a NOTES.md file or through a dedicated memory tool) to track progress, dependencies, and strategic plans.
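A sketch of note-taking with a plain Markdown file: the agent appends timestamped entries and, after a context reset, reads back only the last few entries rather than its full history. The file name NOTES.md comes from the text; the entry format and the helpers `append_note` and `recall_notes` are assumptions for illustration.

```python
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def append_note(notes_path: Path, section: str, text: str) -> None:
    """Append one dated entry; the file persists outside the context window."""
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%d %H:%M")
    with notes_path.open("a", encoding="utf-8") as f:
        f.write(f"- [{stamp}] **{section}**: {text}\n")


def recall_notes(notes_path: Path, last_n: int = 5) -> str:
    """Reload only the most recent entries to keep context overhead small."""
    if not notes_path.exists():
        return ""
    lines = notes_path.read_text(encoding="utf-8").splitlines()
    return "\n".join(lines[-last_n:])


with tempfile.TemporaryDirectory() as workdir:
    notes = Path(workdir, "NOTES.md")
    append_note(notes, "progress", "Migrated 3 of 5 modules.")
    append_note(notes, "todo", "Fix failing test in auth module.")
    append_note(notes, "plan", "Migrate payments next.")
    recalled = recall_notes(notes, last_n=2)  # what a fresh context would reload
```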

Sub-agent architectures (specialized teams):

- Handle complex, in-depth exploration tasks without polluting the main agent's working memory.

- A specialized sub-agent performs deep work in its own isolated context window and returns only a condensed summary (often 1,000 to 2,000 tokens) to the main coordinating agent.
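A sketch of the sub-agent pattern: the sub-agent works in its own message list, which the coordinator never sees, and hands back only a digest clipped to a word budget. `explore` is a hypothetical stand-in for a real sub-agent session (an LLM with tools); keeping only lines tagged `Finding:` is a simplification of having the model write the summary, and the word-based budget approximates a token limit.

```python
def explore(task: str) -> list[str]:
    # Hypothetical stand-in for a sub-agent's long, tool-heavy exploration.
    return [f"searched file {i} for '{task}'" for i in range(200)] + [
        f"Finding: '{task}' is handled in auth/session.py"
    ]


def run_subagent(task: str, digest_budget: int = 1500) -> str:
    """Run a task in an isolated context and return only a bounded digest."""
    isolated_context = [f"task: {task}"]   # private to the sub-agent
    isolated_context += explore(task)      # can grow large; never shown to the coordinator
    findings = [line for line in isolated_context if line.startswith("Finding:")]
    words = " ".join(findings).split()
    return " ".join(words[:digest_budget])  # clip the digest to the budget


main_context = ["user: where is session expiry handled?"]
main_context.append("subagent: " + run_subagent("session expiry"))
```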

I am a Civil Engineering graduate (2022) from Jamia Millia Islamia, New Delhi and I am very interested in data science, especially neural networks and their applications in various fields.
