Black Forest Labs Releases FLUX.2: 32B Flow Matching Transformer for Production Image Pipelines

Black Forest Labs has released FLUX.2, its second-generation image generation and editing system. Targeted at real-world creative workflows such as marketing assets, product photography, design layouts, and complex infographics, FLUX.2 supports editing at up to 4 megapixels and offers strong control over layout, logos, and typography.

FLUX.2 product range and FLUX.2 [dev]

The FLUX.2 family covers managed APIs and open weights:

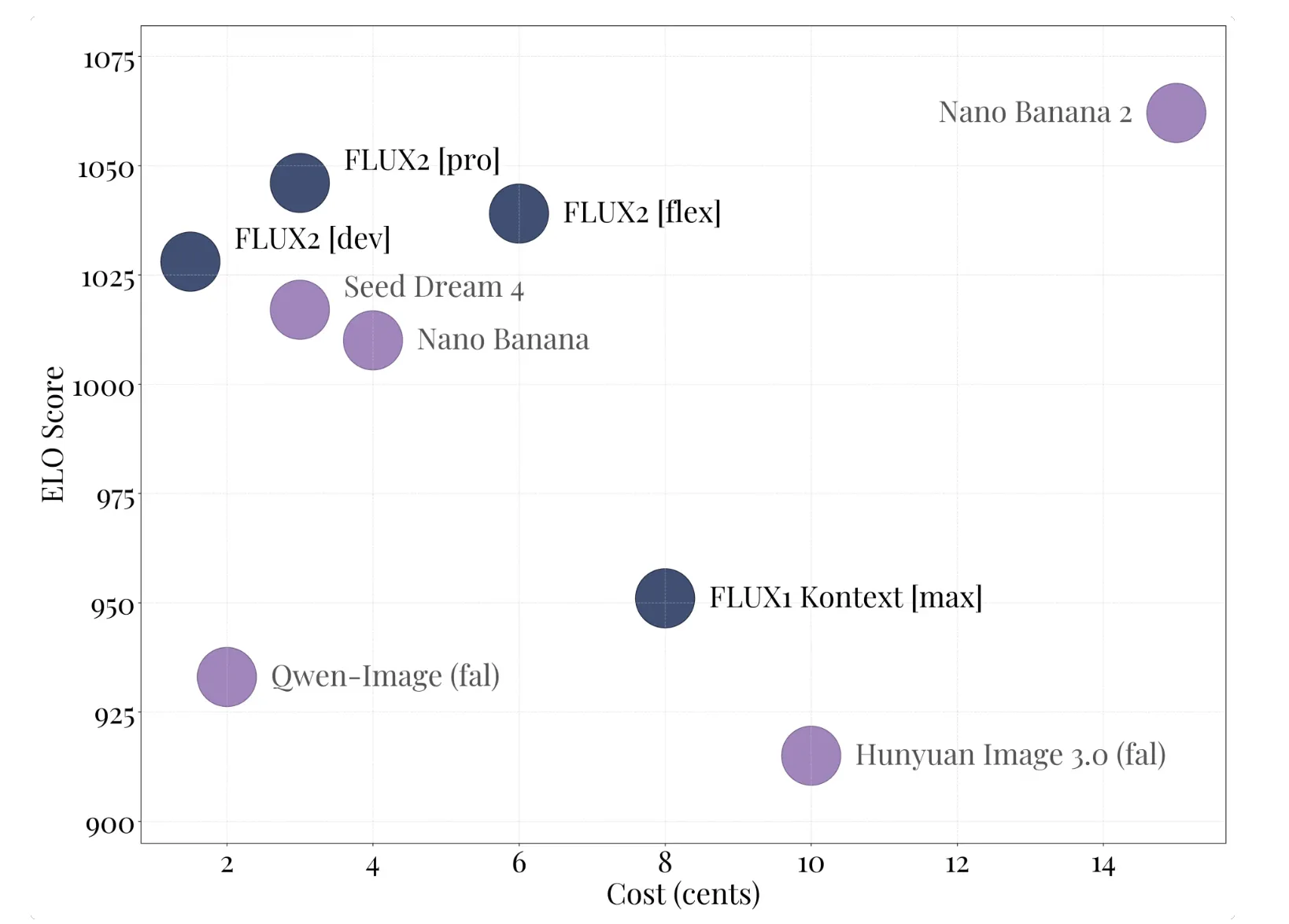

- FLUX.2 [pro] is the managed API tier. It targets state-of-the-art quality relative to closed models, with strong prompt adherence and low inference cost, and is available in the BFL Playground, the BFL API, and partner platforms.

- FLUX.2 [flex] exposes parameters such as the number of steps and the guidance scale, so developers can trade off latency, text rendering accuracy, and visual detail.

- FLUX.2 [dev] is an open-weight checkpoint derived from the base FLUX.2 model. It is described as the most powerful open-weight image generation and editing model, combining text-to-image generation and multi-image editing in a single 32-billion-parameter checkpoint.

- FLUX.2 [klein] is an upcoming open-source variant under the Apache 2.0 license, size-distilled from the base model for smaller setups while keeping many of the same features.
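The trade-off that FLUX.2 [flex] exposes through its step count and scale parameter can be illustrated with a minimal sketch of a flow-matching sampler. It assumes a classifier-free-guidance style combination of conditional and unconditional velocity predictions; the source does not say how [flex] applies its scale internally, and `toy_velocity` is a hypothetical stand-in for the real transformer.

```python
from typing import Callable, List, Tuple

Vector = List[float]
# Hypothetical velocity-model signature: (latent, t, conditional?) -> velocity.
VelocityFn = Callable[[Vector, float, bool], Vector]


def guided_velocity(v_cond: Vector, v_uncond: Vector, scale: float) -> Vector:
    """Classifier-free-guidance style mix: v_uncond + scale * (v_cond - v_uncond)."""
    return [u + scale * (c - u) for c, u in zip(v_cond, v_uncond)]


def sample(velocity_fn: VelocityFn, x_noise: Vector,
           num_steps: int, guidance_scale: float) -> Tuple[Vector, int]:
    """Euler-integrate from t=1 (noise) down to t=0 (data).

    Returns the final latent and the number of model calls. In this sketch each
    step costs two calls (conditional + unconditional), so latency grows
    linearly with num_steps.
    """
    x = list(x_noise)
    dt = 1.0 / num_steps
    calls = 0
    for i in range(num_steps):
        t = 1.0 - i * dt
        v = guided_velocity(velocity_fn(x, t, True), velocity_fn(x, t, False), guidance_scale)
        calls += 2
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x, calls


def toy_velocity(x: Vector, t: float, conditional: bool) -> Vector:
    """Toy field whose ODE carries every point to a fixed target as t -> 0."""
    target = [1.0, -2.0] if conditional else [0.0, 0.0]
    return [(xi - ai) / t for xi, ai in zip(x, target)]


latent, calls = sample(toy_velocity, [0.5, 0.3], num_steps=4, guidance_scale=1.0)
print(latent, calls)
```

More steps means more model calls and therefore more latency, while a larger scale pushes the result further toward the conditional prediction. Some models are guidance-distilled and need only one call per step; this sketch does not model that case.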

All variants support image editing from text prompts and from multiple references within a single model, removing the need to maintain separate checkpoints for generation and editing.

Architecture, latent flows and FLUX.2 VAE

FLUX.2 uses a latent flow matching architecture. The core design couples the Mistral-3 24B vision-language model with a rectified flow transformer that operates on latent image representations. The vision-language model provides semantic grounding and world knowledge, while the transformer backbone learns spatial structure, materials, and composition.
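As background, the generic rectified flow training objective is shown below. This is the standard formulation from the flow matching literature, not a published FLUX.2 training recipe: the symbols are our own labels, with x_0 a VAE image latent, ε Gaussian noise, c the conditioning produced by the vision-language model, and v_θ the transformer's predicted velocity. Details such as how t is sampled are not given in the source.

```latex
\begin{aligned}
x_t &= (1 - t)\,x_0 + t\,\varepsilon, \qquad \varepsilon \sim \mathcal{N}(0, I), \quad t \in [0, 1] \\
\mathcal{L}(\theta) &= \mathbb{E}_{x_0,\,\varepsilon,\,t}\Big[\big\lVert v_\theta(x_t, t, c) - (\varepsilon - x_0) \big\rVert_2^2\Big]
\end{aligned}
```

Because x_t moves along a straight line between data and noise, the regression target ε − x_0 is constant along each path; this straightness is the usual motivation for why rectified flows can be sampled in relatively few steps.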

The model is trained to map noise latents to image latents under text conditioning, so the same architecture supports both text-driven generation and editing. For editing, the latent is initialized from the existing image and then updated by the same process, which preserves the image's structure.
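The shared generation and editing path described above can be sketched as one sampler with two entry points. This is a minimal illustration assuming an SDEdit-style edit, where the source latent is partially noised to an intermediate time and then denoised; the source does not specify FLUX.2's exact editing mechanism, and `toy_velocity`, `strength`, and the other names are hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]
VelocityFn = Callable[[Vector, float], Vector]  # hypothetical (latent, t) -> velocity


def noise_latent(x0: Vector, eps: Vector, t: float) -> Vector:
    """Rectified-flow interpolation x_t = (1 - t) * x0 + t * eps."""
    return [(1.0 - t) * a + t * e for a, e in zip(x0, eps)]


def integrate(x: Vector, t_start: float, num_steps: int, velocity_fn: VelocityFn) -> Vector:
    """Euler-integrate dx/dt = v(x, t) from t_start down to t = 0."""
    dt = t_start / num_steps
    for i in range(num_steps):
        t = t_start - i * dt
        v = velocity_fn(x, t)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x


def generate(velocity_fn: VelocityFn, dim: int, num_steps: int, rng: random.Random) -> Vector:
    """Text-to-image path: start from pure noise at t = 1."""
    eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return integrate(eps, 1.0, num_steps, velocity_fn)


def edit(velocity_fn: VelocityFn, source_latent: Vector, strength: float,
         num_steps: int, rng: random.Random) -> Vector:
    """Editing path: noise the source latent to t = strength, then run the same
    sampler. With a real model, smaller strength keeps more source structure."""
    if not 0.0 < strength <= 1.0:
        raise ValueError("strength must be in (0, 1]")
    eps = [rng.gauss(0.0, 1.0) for _ in source_latent]
    start = noise_latent(source_latent, eps, strength)
    return integrate(start, strength, num_steps, velocity_fn)


def toy_velocity(x: Vector, t: float) -> Vector:
    """Toy field that carries every point to a fixed target as t -> 0."""
    target = [0.2, 0.4, -0.6]
    return [(xi - ai) / t for xi, ai in zip(x, target)]


rng = random.Random(0)
print(generate(toy_velocity, 3, 10, rng))
print(edit(toy_velocity, [1.0, 1.0, 1.0], 0.5, 5, rng))
```

Both entry points call the same `integrate` function; only the starting point and starting time differ, which mirrors the article's point that one checkpoint can serve both tasks.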

A new FLUX.2 VAE defines the latent space. It is designed to balance learnability, reconstruction quality, and compression, and is released separately on Hugging Face under the Apache 2.0 license. This autoencoder is the backbone of all FLUX.2 flow models and can also be reused in other generation systems.
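The compression side of that balance can be made concrete with some arithmetic. The sketch below assumes an 8x spatial downsampling VAE with 16 latent channels and 2x2 patch packing in the transformer; these values are chosen for illustration only, since the source does not state the FLUX.2 VAE's downsampling factor, channel count, or patch size.

```python
def latent_grid(height: int, width: int, downsample: int) -> tuple:
    """Spatial size of the VAE latent for an image of the given size."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the VAE downsampling factor")
    return height // downsample, width // downsample


def num_tokens(height: int, width: int, downsample: int = 8, patch: int = 2) -> int:
    """Transformer sequence length after packing patch x patch latent cells per token."""
    h, w = latent_grid(height, width, downsample)
    if h % patch or w % patch:
        raise ValueError("latent size must be divisible by the patch size")
    return (h // patch) * (w // patch)


def compression_ratio(height: int, width: int, downsample: int = 8, channels: int = 16) -> float:
    """RGB values per latent value: higher means a more compressed latent space."""
    h, w = latent_grid(height, width, downsample)
    return (height * width * 3) / (h * w * channels)


for side in (1024, 2048):
    print(side, num_tokens(side, side), compression_ratio(side, side))
```

Doubling each image side quadruples the token count, and because full self-attention cost grows with the square of sequence length, that means roughly 16x more attention compute. This is why the choice of latent space matters so much for 4-megapixel output.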

Production workflow capabilities

The FLUX.2 docs and the Diffusers integration highlight several key capabilities:

- Multi-reference support: FLUX.2 can combine up to 10 reference images to keep character identity, product appearance, and style consistent in the output.

- Photorealistic detail at 4MP: The model can generate and edit images of up to 4 megapixels, with improved textures, skin, fabric, hands, and lighting, making it suitable for use cases such as product shots and photography.

- Strong text and layout rendering: It can render complex typography, infographics, memes, and user interface layouts with crisp small text, a common weakness of many older models.

- World knowledge and spatial logic: The model is trained for more grounded lighting, perspective, and scene composition, reducing artifacts and the synthetic look.
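In pipeline code that sits in front of the model, the reference and resolution limits above become simple request checks. The sketch below is a hypothetical helper, not part of any FLUX.2 API. The limits of 10 references and 4 MP come from the article; treating 1 MP as 1,000,000 pixels, appending reference latents to the output tokens, and assuming each token covers a 16x16 pixel area are all illustrative assumptions.

```python
from typing import Sequence, Tuple

Size = Tuple[int, int]  # (width, height) in pixels

MAX_REFERENCES = 10         # from the article: up to 10 reference images
MAX_MEGAPIXELS = 4.0        # from the article: up to 4 MP (1 MP = 1,000,000 px assumed)
PIXELS_PER_TOKEN_SIDE = 16  # hypothetical: 8x VAE downsampling times 2x2 patches


def megapixels(size: Size) -> float:
    width, height = size
    return width * height / 1_000_000


def tokens_for(size: Size) -> int:
    """Hypothetical token count for one image under the assumptions above."""
    width, height = size
    return (width // PIXELS_PER_TOKEN_SIDE) * (height // PIXELS_PER_TOKEN_SIDE)


def plan_request(output_size: Size, reference_sizes: Sequence[Size]) -> int:
    """Validate a multi-reference request and return its total sequence length,
    assuming reference latents are appended to the output tokens."""
    if len(reference_sizes) > MAX_REFERENCES:
        raise ValueError(f"at most {MAX_REFERENCES} reference images, got {len(reference_sizes)}")
    if megapixels(output_size) > MAX_MEGAPIXELS:
        raise ValueError(f"output is {megapixels(output_size):.2f} MP, limit is {MAX_MEGAPIXELS} MP")
    return tokens_for(output_size) + sum(tokens_for(s) for s in reference_sizes)


print(plan_request((1024, 1024), [(1024, 1024)] * 3))
```

Under these assumptions every reference lengthens the sequence the transformer attends over, so several full-resolution references can cost more than the output image itself.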

Main points

- FLUX.2 is a 32B latent flow matching transformer that unifies text-to-image generation, image editing, and multi-reference compositing in a single checkpoint.

- FLUX.2 [dev] is the open-weight variant, paired with the Apache 2.0 FLUX.2 VAE, while the core model weights use the FLUX.2-dev non-commercial license with enforced safety filtering.

- The system supports generation and editing at up to 4 megapixels, strong text and layout rendering, and up to 10 visual references for consistent characters, products, and styles.

- Full-precision inference requires more than 80GB of VRAM, but 4-bit and FP8 quantized pipelines with offloading make FLUX.2 [dev] usable on 18GB to 24GB GPUs, and even on 8GB cards given enough system RAM.
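The VRAM figures above follow largely from weight-size arithmetic. The sketch below counts weights only, treating bf16 as the unquantized baseline and using the 32B transformer and 24B Mistral-3 model sizes from the article. It ignores activations, the VAE, quantization scale factors (which put real 4-bit footprints somewhat above 0.5 bytes per parameter), and framework overhead, so the numbers are lower bounds rather than measured requirements.

```python
# Bytes per parameter for the weight formats discussed in the article.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "4bit": 0.5}

TRANSFORMER_B = 32   # FLUX.2 [dev] transformer, billions of parameters (from the article)
TEXT_ENCODER_B = 24  # Mistral-3 24B vision-language model (from the article)


def weights_gb(params_billion: float, fmt: str) -> float:
    """Weight memory in GB (1 GB = 1e9 bytes): billions of params x bytes per param."""
    return params_billion * BYTES_PER_PARAM[fmt]


def gpu_resident_gb(fmt: str, offload_text_encoder: bool) -> float:
    """Weights that must sit on the GPU at once. With offloading, the text encoder
    is assumed to run separately (e.g. on CPU), so only the transformer stays resident."""
    total = weights_gb(TRANSFORMER_B, fmt)
    if not offload_text_encoder:
        total += weights_gb(TEXT_ENCODER_B, fmt)
    return total


for fmt in BYTES_PER_PARAM:
    print(fmt, gpu_resident_gb(fmt, False), gpu_resident_gb(fmt, True))
```

Keeping both models resident in bf16 needs about 112 GB for weights alone, well past a single 80GB card, while a 4-bit transformer with the text encoder offloaded needs about 16 GB. Finer-grained offloading that streams transformer blocks in from system RAM lowers the resident footprint further, which is presumably how the 8GB configurations are reached.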

Editor’s Note

FLUX.2 is an important step forward in open-weight visual generation: it combines a 32B rectified flow transformer, the Mistral-3 24B vision-language model, and the FLUX.2 VAE into a single high-fidelity pipeline for text-to-image generation and editing. Clear VRAM profiles, quantized variants, and solid integration with Diffusers, ComfyUI, and Cloudflare Workers make it suitable for real workloads, not just benchmarks. This release brings open image models closer to production-grade creative infrastructure.


Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.
