NVIDIA AI releases Nemotron-Elastic-12B: a single AI model that provides 6B/9B/12B variants without additional training costs

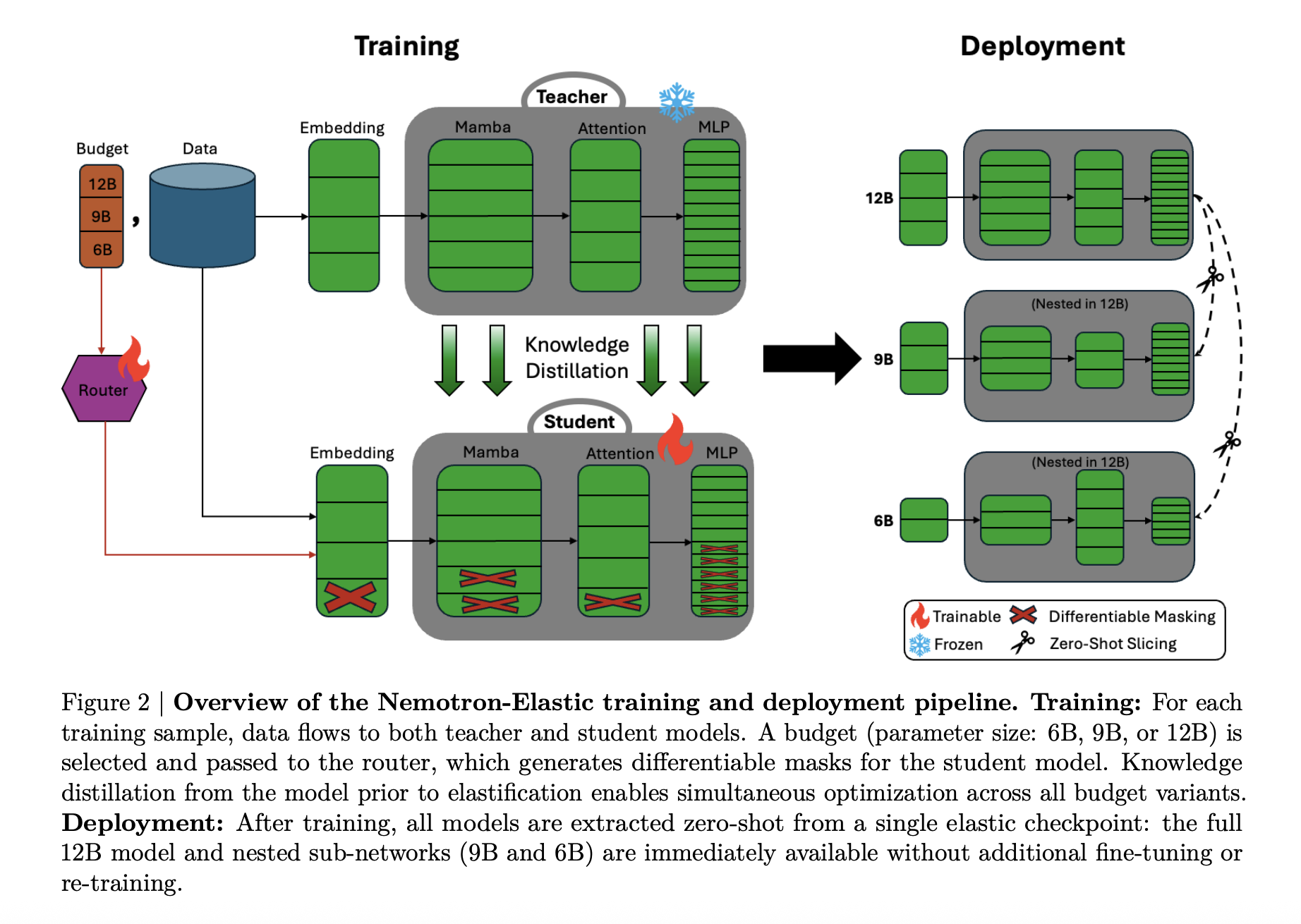

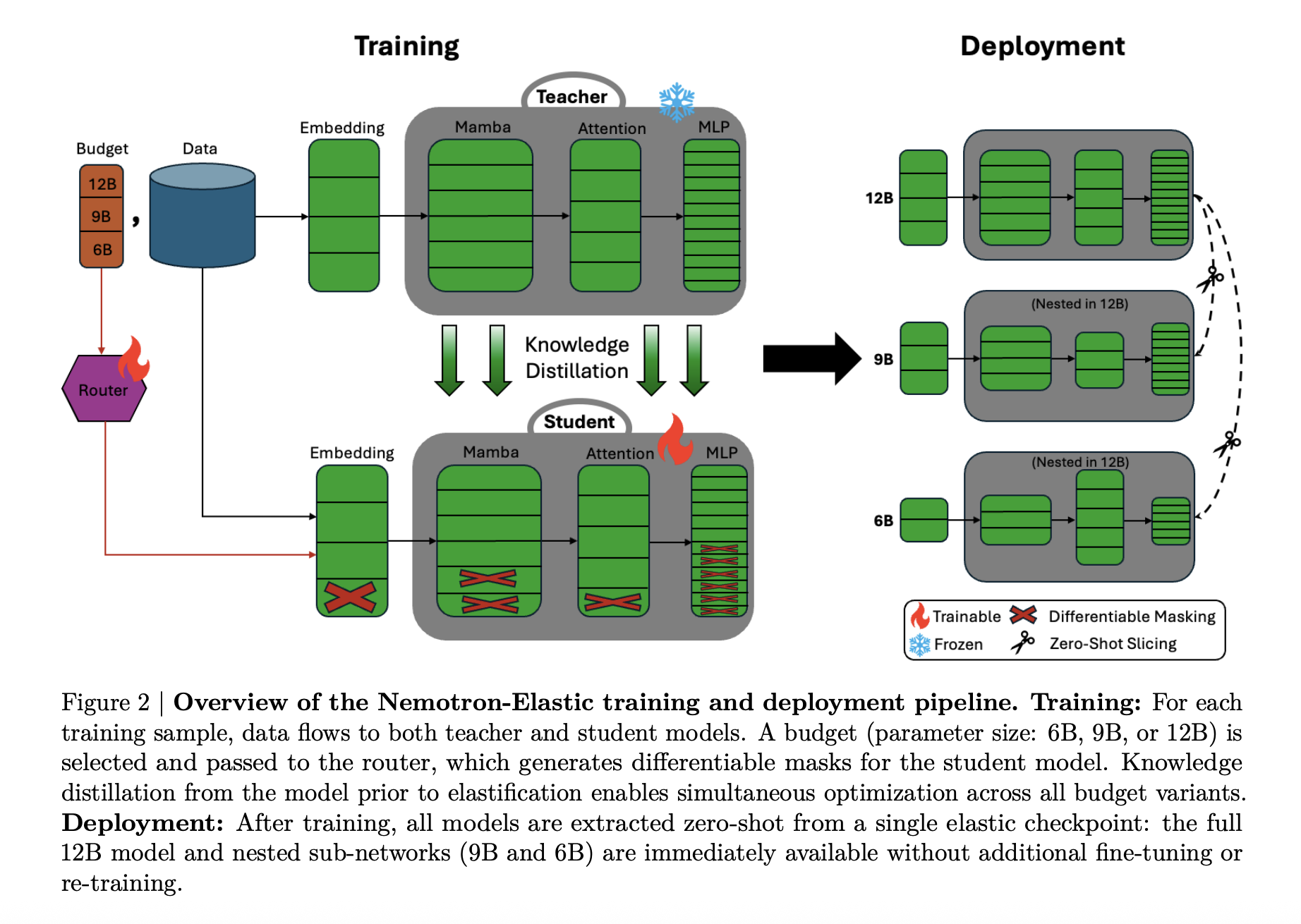

Why do AI development teams still train and store multiple large language models for different deployment needs when one elastic model can yield several sizes at the same cost? NVIDIA is collapsing the usual “model family” stack into a single training job. The NVIDIA AI team has released Nemotron-Elastic-12B, a 12B parameter reasoning model that embeds nested 9B and 6B variants in the same parameter space, so all three sizes come from a single elastic checkpoint with no additional distillation run per size.

All-in-one model series

Most production systems need several model sizes: a larger model for server-side workloads, a medium model for powerful edge GPUs, and a smaller model for tight latency or power budgets. The usual pipeline trains or distills each size separately, so token cost and checkpoint storage scale with the number of variants.

Nemotron Elastic takes a different route. It starts from the Nemotron Nano V2 12B reasoning model and trains an elastic hybrid Mamba-Attention network that exposes multiple nested sub-models. Using the provided slicing script, the released Nemotron-Elastic-12B checkpoint can be sliced into 9B and 6B variants, Nemotron-Elastic-9B and Nemotron-Elastic-6B, without any additional optimization.

All variants share weights and routing metadata, so training cost and deployment memory are tied to the largest model rather than to the number of sizes in the family.

Hybrid Mamba Transformer with Elastic Mask

Architecturally, Nemotron Elastic is a Mamba-2 Transformer hybrid. The base network follows the Nemotron-H style design, in which most layers are Mamba-2 based sequence state space blocks plus MLP, and a small set of attention layers preserves the global receptive field.

Elasticity is implemented by turning this hybrid into a dynamic model controlled by masks:

- Width: embedding channels, Mamba heads and head channels, attention heads, and FFN intermediate size can be reduced through binary masks.

- Depth: layers can be dropped according to a learned importance ordering, with residual paths preserving signal flow.

A router module outputs discrete configuration choices for each budget. These choices are converted to masks with Gumbel Softmax and then applied to the embeddings, Mamba projections, attention projections, and FFN matrices. The research team adds several details to keep the SSM structure valid:

- Group-aware SSM elastification that respects Mamba head and channel grouping.

- Heterogeneous MLP elastification, where different layers can have different intermediate dimensions.

- Layer importance based on normalized MSE determines which layers are retained when depth is reduced.
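The layer-importance idea can be illustrated with a toy sketch. It assumes importance is the MSE between the full model's output and the output with one layer bypassed, normalized by the mean squared magnitude of the full output. The exact normalization used by the research team is not given here, and the scalar "layers" and helper names below are purely hypothetical.

```python
from typing import Callable, List, Sequence

Layer = Callable[[float], float]


def forward(x: float, layers: Sequence[Layer], skip: int = -1) -> float:
    """Run a residual stack; the layer at index `skip` is bypassed."""
    h = x
    for i, layer in enumerate(layers):
        if i != skip:
            h = h + layer(h)  # residual path keeps the signal flowing
    return h


def normalized_mse(ref: Sequence[float], out: Sequence[float]) -> float:
    """Squared error relative to the squared magnitude of the reference."""
    num = sum((r - o) ** 2 for r, o in zip(ref, out))
    den = sum(r ** 2 for r in ref)
    return num / den


def rank_layers(inputs: Sequence[float], layers: Sequence[Layer]) -> List[int]:
    """Return layer indices ordered from most to least important."""
    ref = [forward(x, layers) for x in inputs]
    scores = []
    for i in range(len(layers)):
        out = [forward(x, layers, skip=i) for x in inputs]
        scores.append(normalized_mse(ref, out))
    return sorted(range(len(layers)), key=lambda i: scores[i], reverse=True)


# Hypothetical toy layers: each contributes a different amount to the output.
toy_layers = [lambda h: 0.5 * h, lambda h: 0.01 * h, lambda h: 0.2 * h]
ranking = rank_layers([1.0, 2.0, -1.5], toy_layers)

# Reducing depth to two layers keeps the two highest-ranked ones.
keep = sorted(ranking[:2])
```

In this toy stack the layer with the smallest contribution is ranked last, so it is the first to be dropped when depth is reduced.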

Smaller variants are always prefix selections in the ranked component lists, which makes the 6B and 9B models true nested subnetworks of the 12B parent.
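A minimal sketch of how a router choice could become a nested mask, assuming a Gumbel Softmax sample over a few width options followed by a hard argmax. The head ranking, the width options, and the logits are hypothetical values chosen for illustration, and a real training setup would keep the relaxed sample differentiable instead of taking a plain argmax.

```python
import math
import random
from typing import List, Sequence


def gumbel_softmax(logits: Sequence[float], tau: float,
                   rng: random.Random) -> List[float]:
    """Sample a relaxed one-hot vector from `logits` at temperature `tau`."""
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise is -log(-log(U)) with U ~ Uniform(0, 1).
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        noisy.append((logit - math.log(-math.log(u))) / tau)
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def prefix_mask(ranked: Sequence[int], keep: int, size: int) -> List[int]:
    """Binary mask keeping the first `keep` entries of a ranked list."""
    mask = [0] * size
    for idx in ranked[:keep]:
        mask[idx] = 1
    return mask


# Hypothetical: 8 attention heads ranked by importance, three width options.
ranked_heads = [3, 0, 5, 1, 7, 2, 6, 4]
choices = [4, 6, 8]  # heads kept per budget (assumed values)

rng = random.Random(0)
probs = gumbel_softmax([0.1, 0.2, 2.5], tau=0.5, rng=rng)
picked = choices[probs.index(max(probs))]  # hard selection for illustration
mask = prefix_mask(ranked_heads, picked, size=8)

# Prefix selection guarantees nesting: every smaller mask is a subset
# of every larger one.
masks = {k: prefix_mask(ranked_heads, k, 8) for k in choices}
nested = (all(a <= b for a, b in zip(masks[4], masks[6]))
          and all(a <= b for a, b in zip(masks[6], masks[8])))
```

Because every budget takes a prefix of the same ranking, the smaller sub-models reuse exactly the parent's weights and no separate copy is needed.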

Two-stage training for reasoning workloads

Nemotron Elastic is trained as a reasoning model with a frozen teacher. The teacher is the original Nemotron-Nano-V2-12B reasoning model. The elastic 12B student is optimized jointly for all three budgets (6B, 9B, 12B) using knowledge distillation plus a language modeling loss.
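The training objective can be sketched for a single token position. This is a simplified illustration, not the team's implementation: it assumes a forward KL distillation term against the frozen teacher, a cross-entropy language modeling term, an equal weighting between them, and a plain average over budgets. All logits are made-up numbers.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(teacher_logits: Sequence[float],
            student_logits: Sequence[float]) -> float:
    """Forward KL(teacher || student) over the vocabulary for one token."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q))


def lm_loss(student_logits: Sequence[float], target: int) -> float:
    """Cross-entropy of the student against the ground-truth token."""
    return -math.log(softmax(student_logits)[target])


def joint_loss(teacher_logits: Sequence[float],
               student_logits_by_budget: Dict[str, Sequence[float]],
               target: int,
               lm_weight: float = 1.0) -> Tuple[float, Dict[str, float]]:
    """Average of (KD + weighted LM loss) over all budgets (assumed weighting)."""
    per_budget = {
        budget: kd_loss(teacher_logits, logits) + lm_weight * lm_loss(logits, target)
        for budget, logits in student_logits_by_budget.items()
    }
    return sum(per_budget.values()) / len(per_budget), per_budget


# Hypothetical logits over a 3-token vocabulary; the teacher is frozen.
teacher = [2.0, 0.5, -1.0]
students = {
    "12B": [2.0, 0.5, -1.0],
    "9B": [1.8, 0.6, -0.9],
    "6B": [1.0, 0.8, 0.0],
}
total, per_budget = joint_loss(teacher, students, target=0)
```

Only the student's parameters would receive gradients; the teacher logits act as fixed targets.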

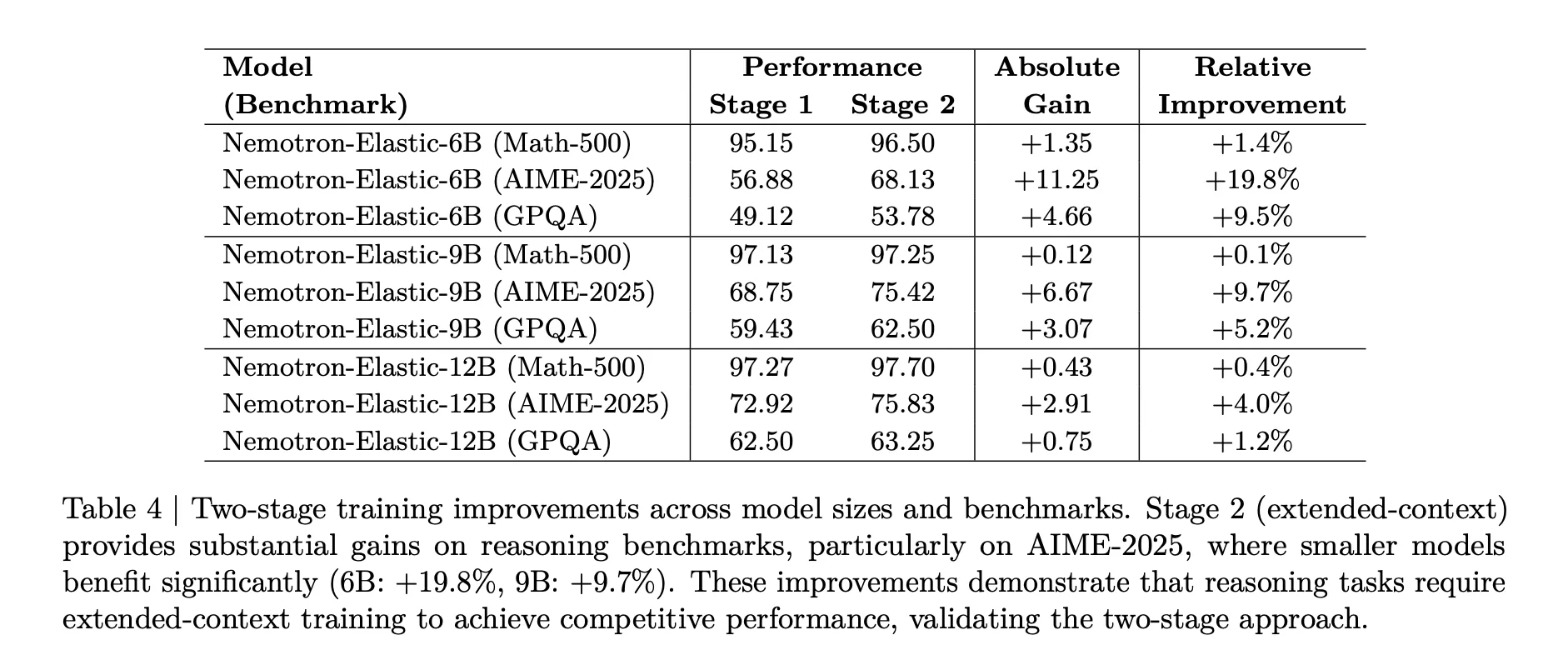

Training is conducted in two phases:

- Stage 1: short context, sequence length 8192, batch size 1536, approximately 65B tokens, with uniform sampling across the three budgets.

- Stage 2: extended context, sequence length 49152, batch size 512, approximately 45B tokens, with non-uniform sampling that favors the full 12B budget.

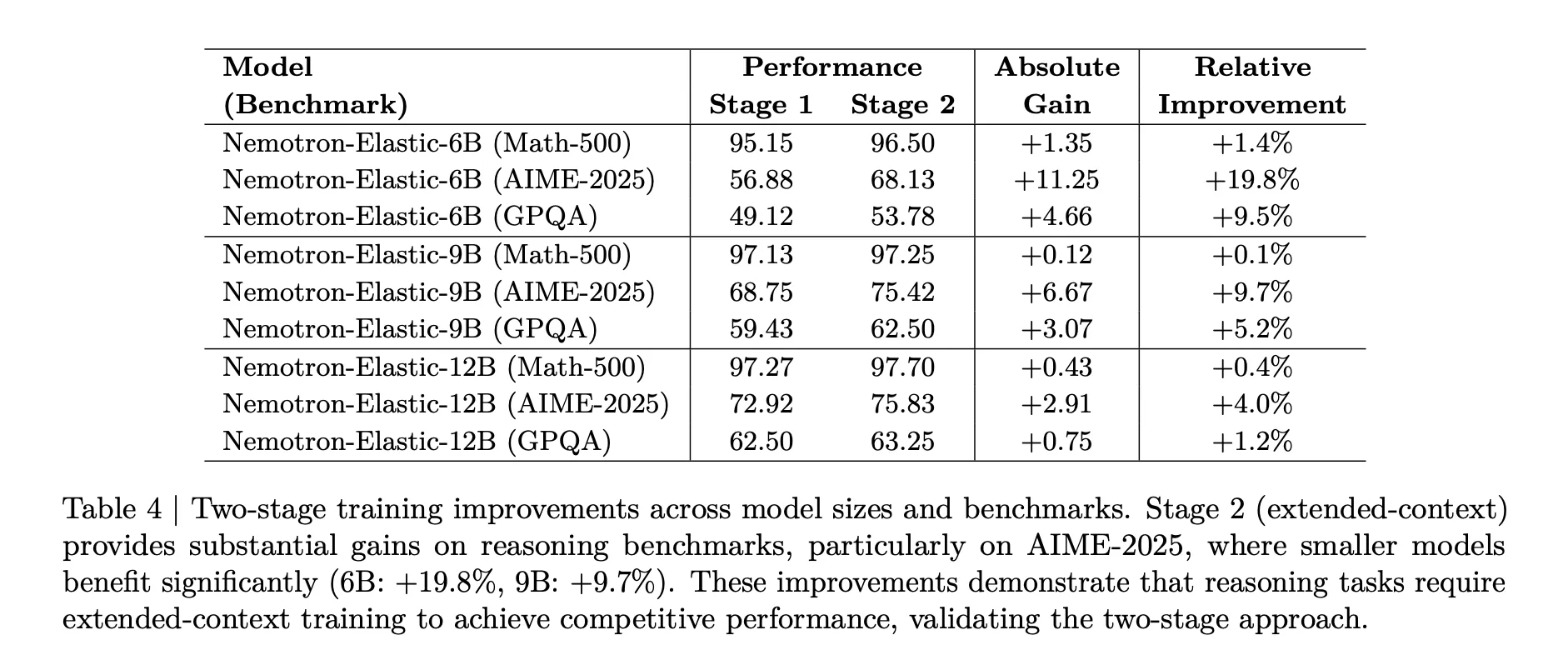

The second stage matters for reasoning tasks. The table above shows that on AIME 2025 the 6B model improves from 56.88 to 68.13 after extended context training, a relative gain of 19.8%, while the 9B model gains 9.7% and the 12B model gains 4.0%.
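The 6B figure can be checked directly from the two scores quoted above. The helper name is hypothetical, and only the 6B scores are used because the before and after scores for the other sizes are not given in the text.

```python
def relative_gain(before: float, after: float) -> float:
    """Relative improvement in percent."""
    return (after - before) / before * 100.0


gain_6b = relative_gain(56.88, 68.13)
print(f"6B relative gain on AIME 2025: {gain_6b:.1f}%")  # prints 19.8%
```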

Budget sampling is also adjusted. In stage 2, non-uniform weights of 0.5, 0.3, and 0.2 for 12B, 9B, and 6B avoid degrading the largest model and keep all variants competitive on MATH 500, AIME 2025, and GPQA.
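A small sketch of what per-step budget sampling could look like under the two schedules. The stage 2 weights come from the text; the function name, the per-step sampling granularity, and the use of Python's `random.choices` are assumptions made for illustration.

```python
import random
from collections import Counter
from typing import Dict

# Stage 1 samples the three budgets uniformly; stage 2 favors the full model.
STAGE1_WEIGHTS: Dict[str, float] = {"12B": 1 / 3, "9B": 1 / 3, "6B": 1 / 3}
STAGE2_WEIGHTS: Dict[str, float] = {"12B": 0.5, "9B": 0.3, "6B": 0.2}


def sample_budget(weights: Dict[str, float], rng: random.Random) -> str:
    """Pick the budget to train on for one step (assumed granularity)."""
    budgets = list(weights)
    return rng.choices(budgets, weights=[weights[b] for b in budgets], k=1)[0]


rng = random.Random(0)
counts = Counter(sample_budget(STAGE2_WEIGHTS, rng) for _ in range(10_000))
for budget in STAGE2_WEIGHTS:
    print(budget, counts[budget] / 10_000)
```

Over many steps the 12B budget is visited roughly half the time, which is what protects the largest model from degrading during extended context training.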

Benchmark results

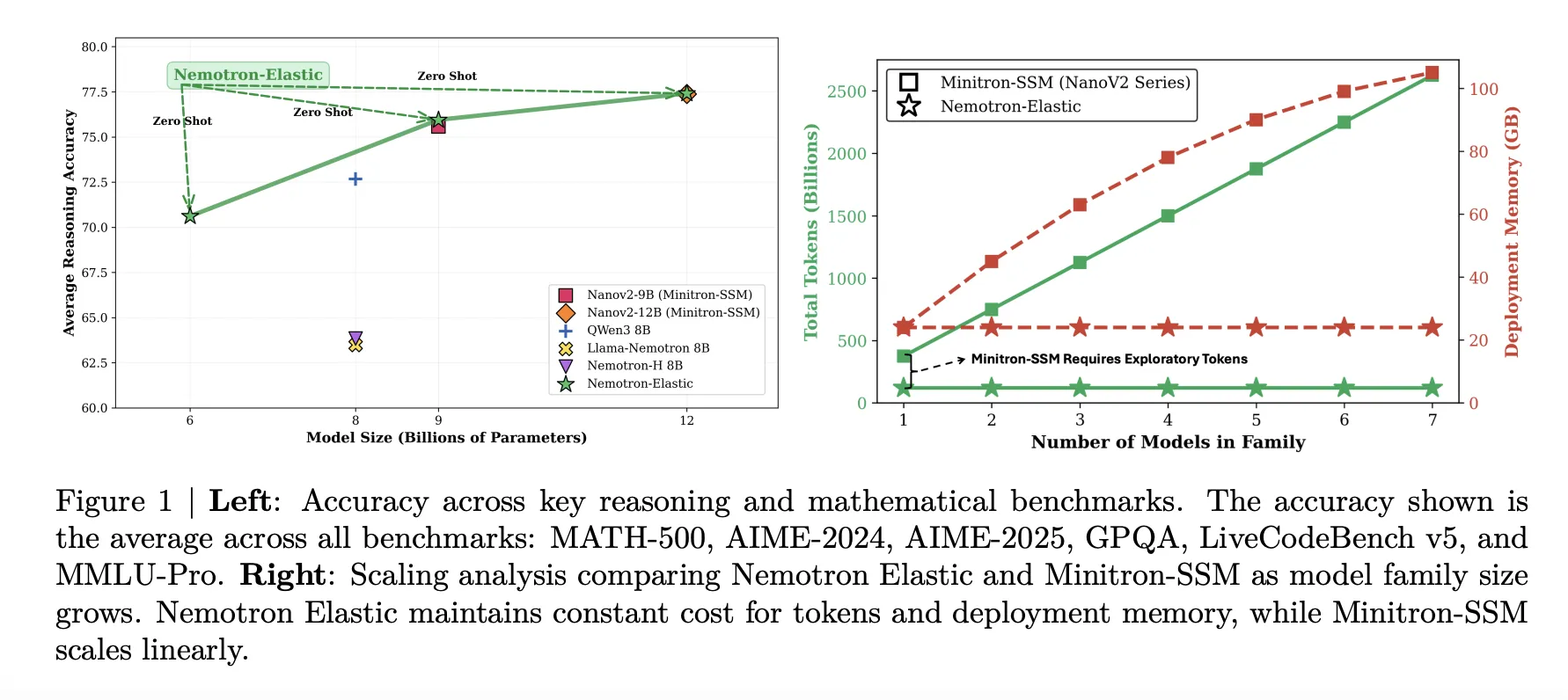

Nemotron Elastic is evaluated on reasoning-heavy benchmarks: MATH 500, AIME 2024, AIME 2025, GPQA, LiveCodeBench v5, and MMLU Pro. The table summarizes pass@1 accuracy.

The 12B elastic model matches the NanoV2-12B baseline on average, at 77.41 versus 77.38, while also providing the 9B and 6B variants from the same run. The 9B elastic model closely tracks the NanoV2-9B baseline, at 75.95 versus 75.99. The 6B elastic model reaches 70.61, slightly below the 72.68 of Qwen3-8B, but still strong for its parameter count given that it is not trained separately.

Training tokens and memory savings

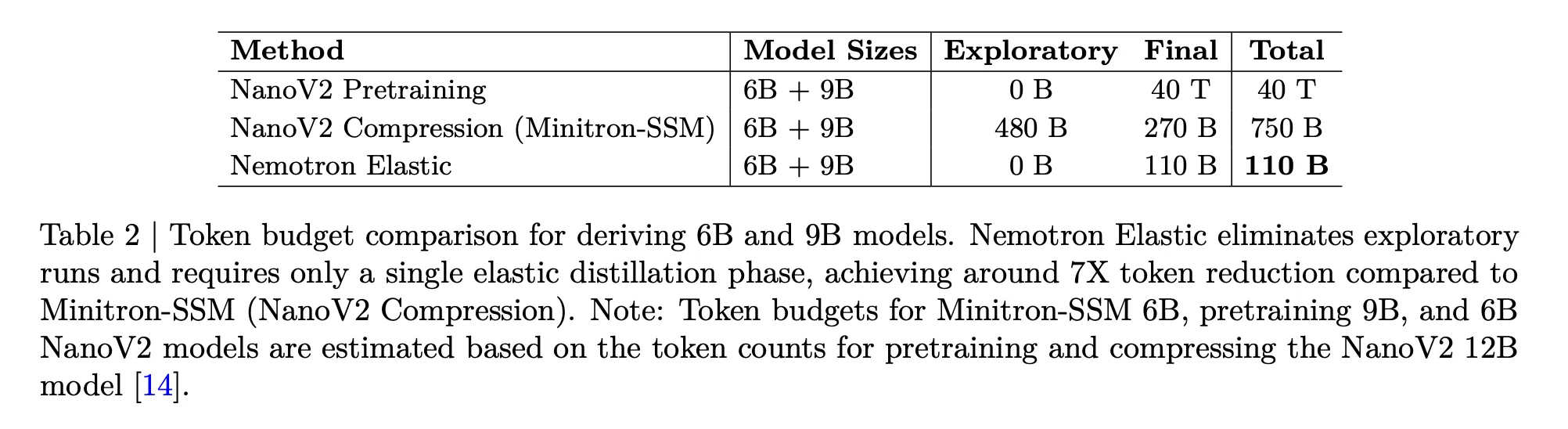

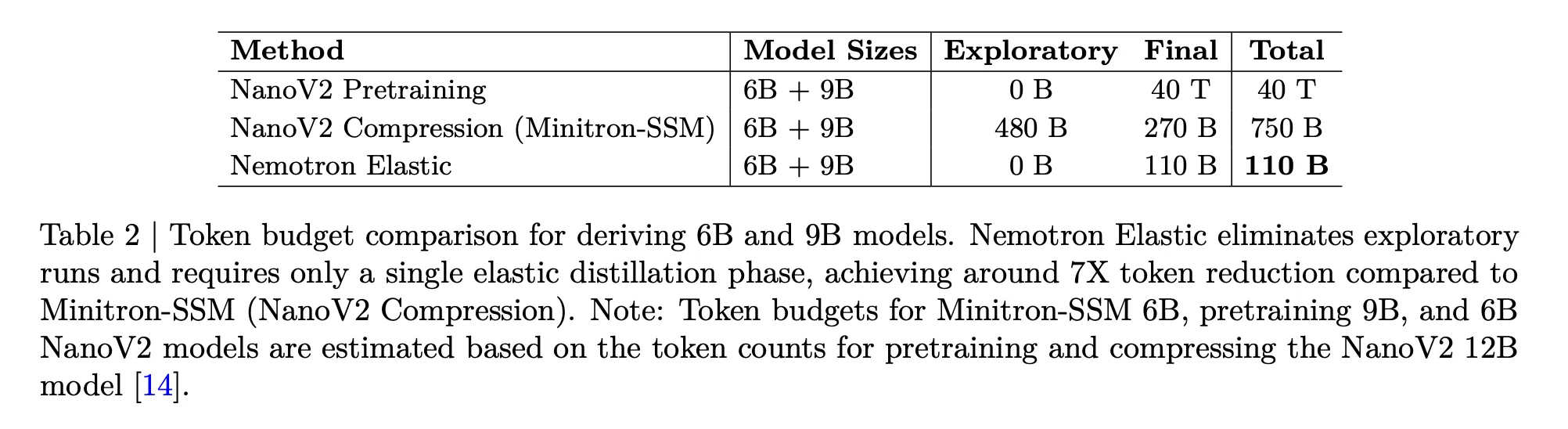

Nemotron Elastic addresses cost directly. The following table compares the token budget required to derive 6B and 9B models from the 12B parent:

- NanoV2 pretraining for 6B and 9B: 40T tokens in total.

- NanoV2 compression with Minitron SSM: 480B exploratory plus 270B final, 750B tokens in total.

- Nemotron Elastic: a single elastic distillation run, 110B tokens.

The research team reports that this reduces the token budget by about 360 times compared with training the two additional models from scratch, and by about 7 times compared with the compression baseline.
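The two reduction factors follow from the token budgets listed above. The constant names below are illustrative only.

```python
# Token budgets for deriving the 6B and 9B models, as quoted in the list above.
PRETRAIN_TOKENS = 40e12             # NanoV2 pretraining of 6B and 9B
COMPRESSION_TOKENS = 480e9 + 270e9  # Minitron SSM: exploratory plus final
ELASTIC_TOKENS = 110e9              # single elastic distillation run

vs_pretraining = PRETRAIN_TOKENS / ELASTIC_TOKENS
vs_compression = COMPRESSION_TOKENS / ELASTIC_TOKENS

print(f"vs pretraining: {vs_pretraining:.0f}x")  # prints 364x
print(f"vs compression: {vs_compression:.1f}x")  # prints 6.8x
```

The exact ratios are about 364 and 6.8, which the article rounds to 360 and 7.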

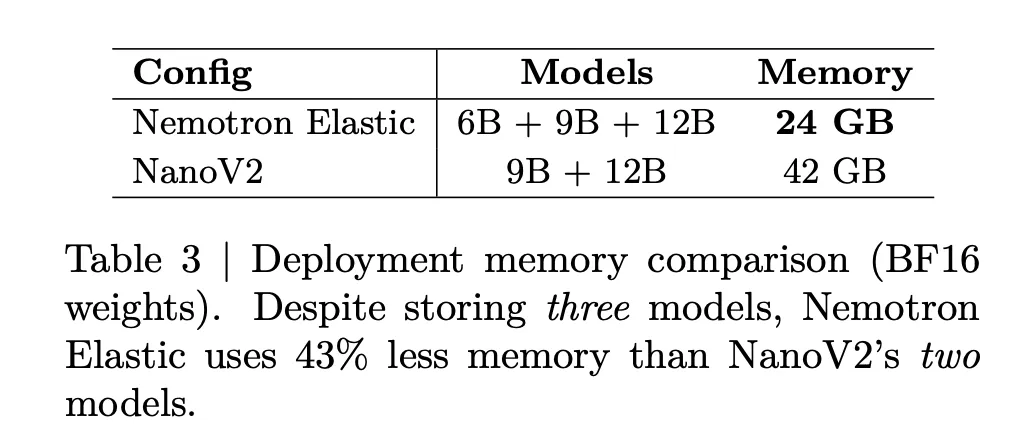

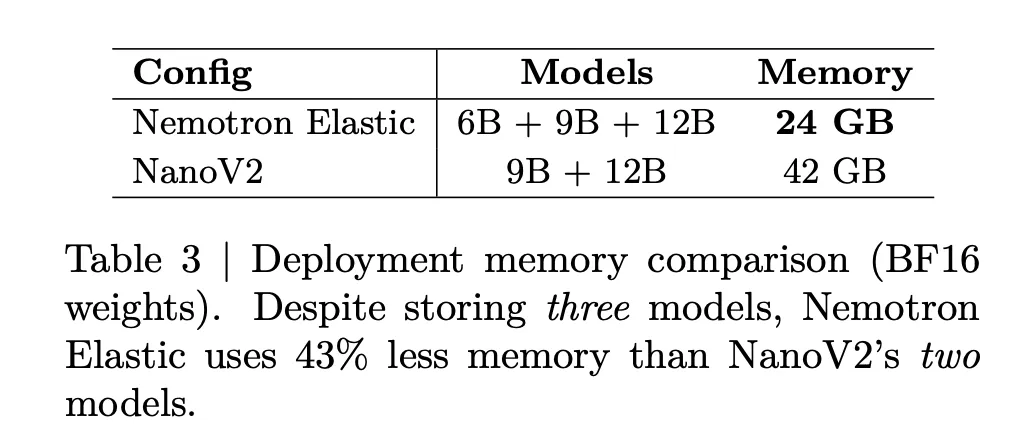

Deployment memory is reduced as well. The table shows that storing Nemotron Elastic 6B, 9B, and 12B together requires 24GB of BF16 weights, while storing NanoV2 9B plus 12B requires 42GB. That is a 43% memory reduction while also exposing an additional 6B size.
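These figures are consistent with simple BF16 arithmetic at 2 bytes per parameter. The sketch below assumes nominal parameter counts (exactly 9B and 12B) and decimal gigabytes, and it ignores activations, KV or SSM state, and routing metadata.

```python
BYTES_PER_PARAM_BF16 = 2


def bf16_weight_gb(params_billion: float) -> float:
    """Approximate BF16 weight size in decimal GB for a nominal parameter count."""
    return params_billion * 1e9 * BYTES_PER_PARAM_BF16 / 1e9


# Elastic: one 12B checkpoint holds the 6B and 9B variants as nested slices.
elastic_gb = bf16_weight_gb(12)
# Separate NanoV2 checkpoints: 9B and 12B stored independently.
separate_gb = bf16_weight_gb(9) + bf16_weight_gb(12)

saving_pct = (separate_gb - elastic_gb) / separate_gb * 100.0
print(elastic_gb, separate_gb, round(saving_pct))  # prints 24.0 42.0 43
```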

Comparison

| System | Sizes (B) | Average reasoning score* | Tokens for 6B + 9B | BF16 memory |
|---|---|---|---|---|
| Nemotron Elastic | 6, 9, 12 | 70.61 / 75.95 / 77.41 | 110B | 24GB |
| NanoV2 compression | 9, 12 | 75.99 / 77.38 | 750B | 42GB |
| Qwen3 | 8 | 72.68 | not applicable | not applicable |

Main points

- Nemotron Elastic trains a single 12B reasoning model with nested 9B and 6B variants that can be extracted zero-shot, without additional training.

- The elastic family uses a hybrid Mamba-2 and Transformer architecture and a learned router that applies structured masks in width and depth to define each sub-model.

- The approach needs 110B training tokens to derive 6B and 9B from the 12B parent, approximately 7x fewer than the 750B token Minitron SSM compression baseline and approximately 360x fewer than training the additional models from scratch.

- On reasoning benchmarks such as MATH 500, AIME 2024 and 2025, GPQA, LiveCodeBench, and MMLU Pro, the 6B, 9B, and 12B elastic models average 70.61, 75.95, and 77.41, which is on par with or close to the NanoV2 baselines and competitive with Qwen3-8B.

- All three sizes share one 24GB BF16 checkpoint, so deployment memory stays constant for the whole family, whereas separate NanoV2-9B and 12B models need approximately 42GB. That saves about 43% of memory while adding the 6B option.

Nemotron-Elastic-12B is a practical step toward reducing the cost of building and operating a family of reasoning models. A single elastic checkpoint yields 6B, 9B, and 12B variants using a hybrid Mamba-2 and Transformer architecture, a learned router, and structured masks that preserve reasoning performance. The approach cuts token cost relative to separate compression or pretraining runs and keeps deployment memory at 24GB for all sizes, which simplifies fleet management for multi-tier LLM deployments. Overall, Nemotron-Elastic-12B turns multi-size reasoning LLMs into a single elastic systems design problem.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.
