Meta AI releases Segment Anything Model 3 (SAM 3) for fast concept segmentation in images and videos

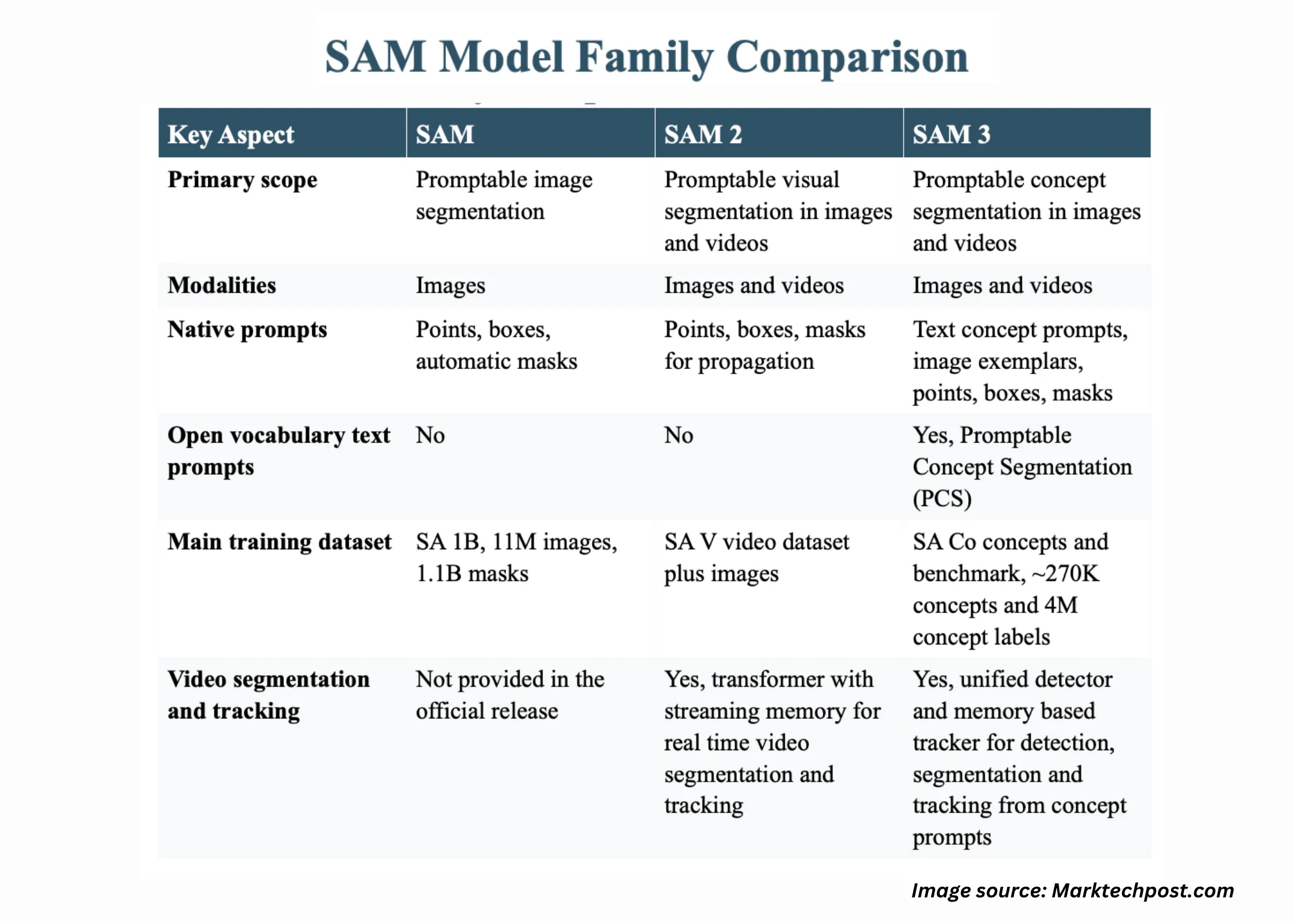

How to reliably find, segment, and track every instance of any concept in a large collection of images and videos using simple tips? The Meta AI team has just released Meta Segment Anything Model 3 (SAM 3 for short), an open source unified base model for rapid segmentation in images and videos that operates directly on visual concepts rather than just pixels. It can detect, segment, and track objects based on textual cues and visual cues such as points, boxes, and masks. Compared to SAM 2, SAM 3 can exhaustively find all instances of an open vocabulary concept, such as every “red baseball cap” in a long video, using a single model.

From visual cues to cued concept segmentation

Early SAM models focused on interactive segmentation. The user clicks or draws a box, and the model generates a mask. This workflow does not scale to tasks where the system must find all instances of a concept in a large image or video collection. SAM 3 formalizes Cue Concept Segmentation (PCS), which accepts concept cues and returns instance masks and stable identities for each matching object in images and videos.

Concept prompts combine short noun phrases with visual examples. The model supports detailed phrases such as “yellow school bus” or “red player” and can also use example crops as positive or negative examples. Text hints describe the concept, while example crops help smooth out fine-grained visual differences. SAM 3 can also be used as a visual tool within multimodal large language models to generate longer referential expressions and then invoke SAM 3 with refined conceptual cues.

Architecture, presence tokens, and tracking design

The SAM 3 model has 848M parameters and consists of detectors and trackers sharing a single visual encoder. The detector is based on the DETR architecture and is conditioned on three inputs: text cues, geometric cues, and image samples. This separates the core image representation from the prompt interface and lets the same backbone serve many segmentation tasks.

A key change in SAM 3 is the presence of tokens. This component predicts whether each candidate box or mask actually corresponds to the requested concept. This is especially important when the text prompt describes related entities (such as “player in white” and “player in red”). Presence markers reduce confusion between such cues and improve open vocabulary accuracy. Recognition (meaning classifying candidates into concepts) is decoupled from localization (meaning predicting box and mask shapes).

For video, SAM 3 reuses SAM 2’s transformer-encoder-decoder tracker, but tightly connects it to a new detector. The tracker propagates instance identities across frames and supports interactive refinement. The decoupled detector and tracker design minimizes task interference, scales cleanly with more data and concepts, and still exposes an interactive interface similar to early Segment Anything models for point-based refinement.

SA-Co dataset and benchmark suite

To train and evaluate instant concept segmentation (PCS), Meta introduces the SA-Co series of datasets and benchmarks. The SA-Co benchmark contains 270K unique concepts, which is more than 50 times the number of concepts in the previous open vocabulary segmentation benchmark. Each image or video is paired with a noun phrase and a dense instance mask of all objects that match each phrase, including negative cues that no objects should match.

The associated data engine automatically annotated over 4M unique concepts, making SA-Co the largest high-quality open vocabulary segmented corpus mentioned by Meta. The engine combines large ontologies with automatic inspection and supports the mining of hard negatives, such as visually similar but semantically different phrases. This scale is critical for learning models that can respond robustly to a variety of textual cues in real-world scenarios.

Image and video performance

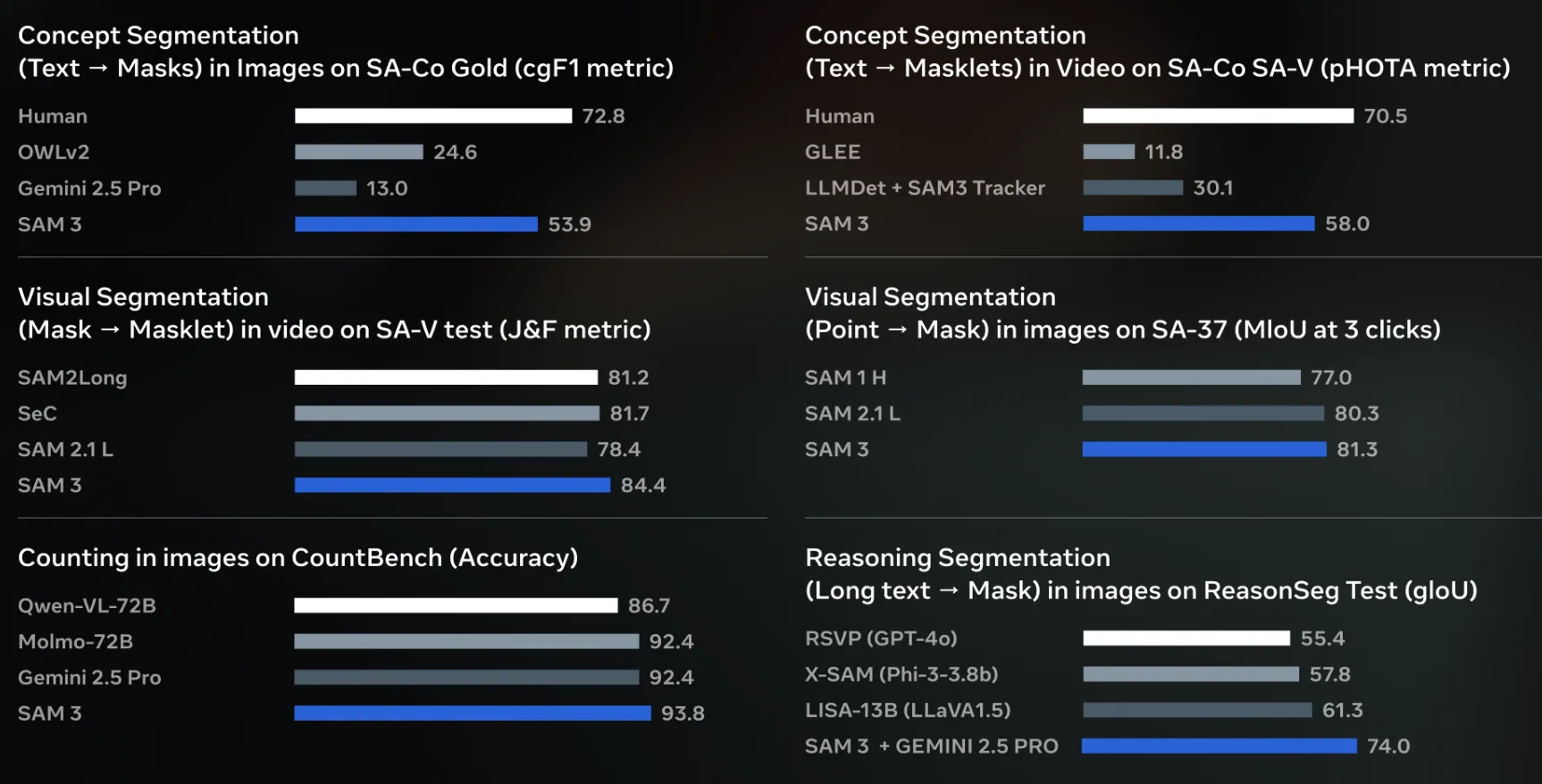

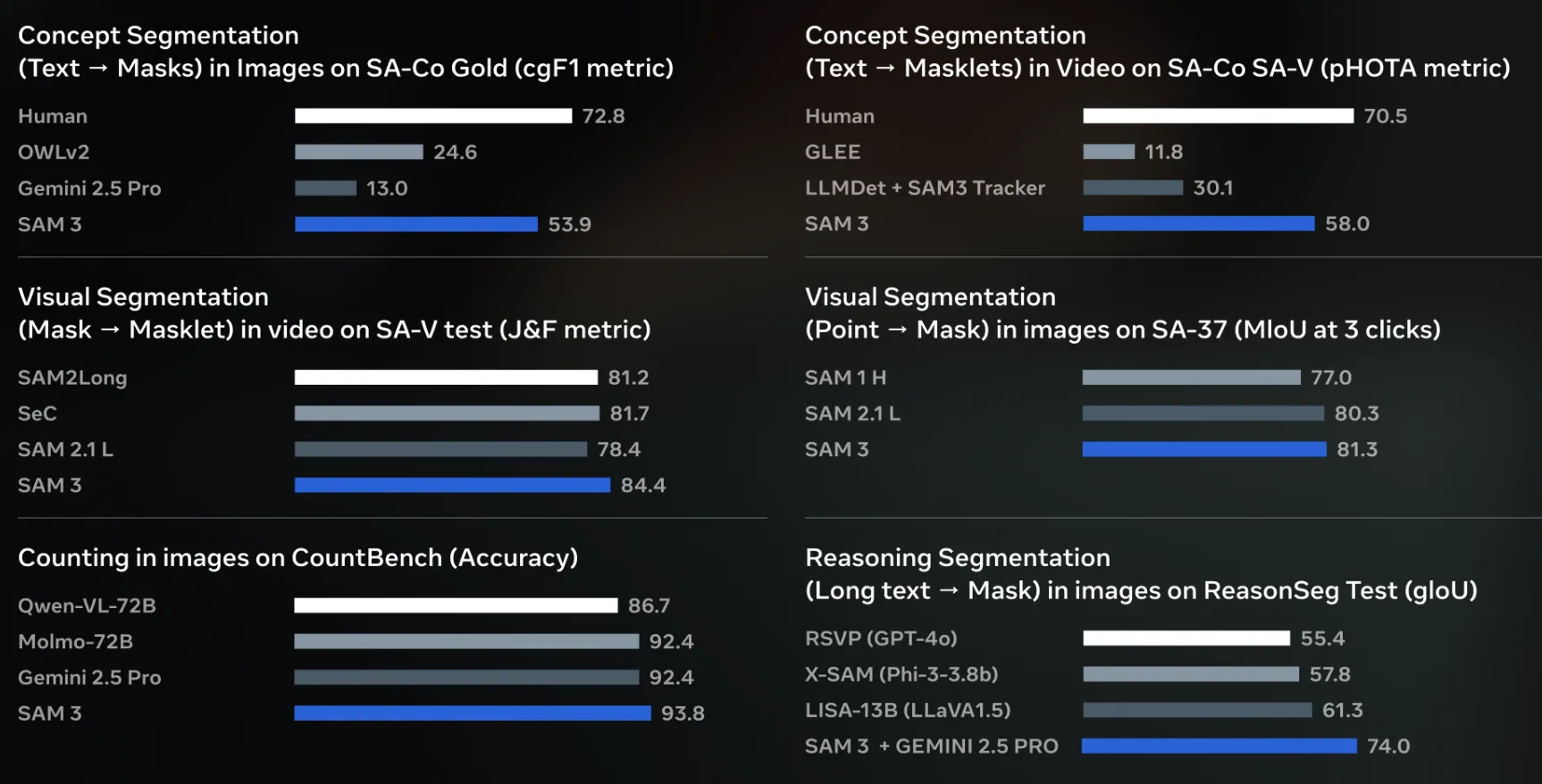

On the SA-Co image benchmark, SAM 3 achieved 75% to 80% of human performance measured using the cgF1 metric. Competing systems such as OWLv2, DINO-X and Gemini 2.5 are significantly behind. For example, in the SA-Co gold box test, SAM 3 reported a cgF1 of 55.7, while OWLv2 reached 24.5, DINO-X reached 22.5, and Gemini 2.5 reached 14.4. This shows that a single unified model can outperform specialized detectors in open word segmentation.

In the video, SAM 3 is evaluated on SA-V, YT-Temporal 1B, SmartGlasses, LVVIS and BURST. In the SA-V test, it achieved 30.3 cgF1 and 58.0 pHOTA. In the YT-Temporal 1B test, it achieved 50.8 cgF1 and 69.9 pHOTA. In the SmartGlasses test, it reached 36.4 cgF1 and 63.6 pHOTA, while in the LVVIS and BURST tests, it reached 36.3 mAP and 44.5 HOTA respectively. These results confirm that a single architecture can handle image PCS and long horizontal video tracking.

SAM 3 as a data-centric benchmarking opportunity for an annotation platform

For a data-centric platform like Encord, SAM 3 is a natural next step following the existing integration of SAM and SAM 2 for automatic labeling and video tracking, which already allows customers to automatically annotate more than 90% of their images with high mask accuracy using the base model in Encord’s QA-driven workflow. Similar platforms such as CVAT, SuperAnnotate and Picsellia are standardizing Segment Anything style models to enable zero-shot labeling, in-loop model annotation and MLOps pipelines. SAM 3’s fast conceptual segmentation and unified image video tracking create clear editing and benchmarking opportunities here, e.g. quantifying label cost reductions and quality gains when stacks like Encord move from SAM 2 to SAM 3 in dense video datasets or multi-modal settings.

Main points

- SAM 3 unifies image and video segmentation into a single 848M parameter base model that supports textual cues, exemplars, points, and boxes for cueing conceptual segmentation.

- The SA-Co data engine and benchmark introduces approximately 270K evaluation concepts and over 4 million automatically annotated concepts, making SAM 3’s training and evaluation stack one of the largest open vocabulary segmentation resources available.

- SAM 3 significantly outperforms previous open vocabulary systems, reaching approximately 75% to 80% of human cgF1 on SA Co and more than twice that of OWLv2 and DINO-X on key SA-Co Gold assay metrics.

- The architecture decouples DETR-based detectors from SAM 2-style video trackers with presence headers, enabling stable instance tracking in long videos while maintaining interactive SAM-style refinement.

SAM 3 advances everything from instant visual segmentation to instant conceptual segmentation in a single 848M parameter model that unifies images and videos. It leverages the SA-Co benchmark, which contains approximately 270,000 evaluation concepts and more than 4 million automatically annotated concepts, and performs close to 75% to 80% of human performance on cgF1. Decoupled DETR-based detectors and SAM type 2 trackers with presence heads make SAM 3 a practical vision base model for agents and products. Overall, SAM 3 is now the reference point for production-scale open vocabulary segmentation.

Check Papers, Repos and Model Weights. Please feel free to check out our GitHub page for tutorials, code, and notebooks. In addition, welcome to follow us twitter And don’t forget to join our 100k+ ML SubReddit and subscribe our newsletter. wait! Are you using Telegram? Now you can also join us via telegram.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.