OpenAI debuts GPT-5.1-Codex-Max, a long-horizon agentic coding model with compaction for multi-window workflows

OpenAI has introduced GPT-5.1-Codex-Max, a frontier agentic coding model designed for long-running software engineering tasks that span millions of tokens and multi-hour sessions. It is available now in Codex through the CLI, the IDE extension, the cloud integration, and the code review interface, with API access planned soon.

What is GPT-5.1-Codex-Max optimized for?

GPT-5.1-Codex-Max is built on an update to OpenAI’s foundational reasoning model. That base model is trained on agentic tasks across software engineering, mathematics, research, and other domains. On top of it, GPT-5.1-Codex-Max is trained on real-world software engineering workloads such as PR creation, code review, front-end coding, and Q&A.

The model targets frontier coding evaluations rather than general chat. GPT-5.1-Codex-Max and the wider Codex family are recommended only for agentic coding tasks in Codex or Codex-like environments, not as a replacement for GPT-5.1 in general-purpose conversation.

GPT-5.1-Codex-Max is also the first Codex model trained to operate in Windows environments. Its training includes tasks that make it a better collaborator in the Codex CLI, including better behavior when running commands and working with files under the Codex sandbox.

Compaction and long-running tasks

The core capability of GPT-5.1-Codex-Max is compaction. The model still runs within a fixed context window, but it is natively trained to work across multiple context windows by pruning its interaction history while preserving the most important information over long horizons.

In Codex applications, GPT-5.1-Codex-Max automatically compacts its session when it approaches the context window limit. It starts a fresh context window that preserves the essential state of the task, then continues execution. This process repeats until the task is completed.
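
The compaction loop described above (compressing the session history when the context window fills up, then continuing in a fresh window) can be illustrated with a toy sketch. This is not OpenAI's implementation, which has not been published in this article. The `CompactingSession` class, the word-count "tokenizer", the 0.8 threshold, and the truncating `summarize` helper are all hypothetical stand-ins chosen only to show the control flow.

```python
from dataclasses import dataclass, field
from typing import List


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def summarize(messages: List[str], keep_words: int = 8) -> str:
    """Placeholder summarizer: keeps the first few words of each message.

    In a real system the model itself would decide which state is worth keeping.
    """
    parts = [" ".join(m.split()[:keep_words]) for m in messages]
    return "SUMMARY: " + " | ".join(parts)


@dataclass
class CompactingSession:
    window_limit: int              # hypothetical per-window token budget
    threshold: float = 0.8         # compact once usage reaches this fraction
    history: List[str] = field(default_factory=list)
    compactions: int = 0

    def used_tokens(self) -> int:
        return sum(count_tokens(m) for m in self.history)

    def add(self, message: str) -> None:
        """Record a message, compacting if the window is close to full."""
        self.history.append(message)
        if self.used_tokens() >= self.threshold * self.window_limit:
            self._compact()

    def _compact(self) -> None:
        # Start a fresh window seeded with a condensed view of the old one.
        self.history = [summarize(self.history)]
        self.compactions += 1


if __name__ == "__main__":
    session = CompactingSession(window_limit=50)
    for i in range(20):
        session.add(f"step {i} edit file and rerun the failing unit tests")
    print(session.compactions, session.used_tokens())
```

The point of the sketch is the shape of the loop: usage is checked after every step, and the history is replaced by a condensed state rather than simply truncated, so the task can keep going past a single window.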

OpenAI reports internal evaluations in which GPT-5.1-Codex-Max worked independently on a single task for more than 24 hours. During these runs, the model iterated on its implementation, fixed failing tests, and ultimately produced a successful result.

Reasoning effort, speed, and token efficiency

GPT-5.1-Codex-Max uses the same reasoning effort control introduced with GPT-5.1, tuned for coding agents. Reasoning effort determines how many thinking tokens the model spends before it commits to an answer.

On SWE-bench Verified, GPT-5.1-Codex-Max at medium reasoning effort achieves higher accuracy than GPT-5.1-Codex at the same effort while using 30% fewer thinking tokens. For tasks that are not latency-sensitive, OpenAI has introduced a new Extra High reasoning effort, written as xhigh, which lets the model think longer for better answers. Medium remains the recommended setting for most workloads.
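
As a rough illustration of the guidance above, the sketch below encodes one possible policy for picking an effort level: medium by default, xhigh only when a task is hard and latency does not matter. The `Task` type and both helper functions are hypothetical and are not part of any OpenAI API or Codex setting. The 30% figure is the one reported for SWE-bench Verified at medium effort, so applying it to other workloads is an assumption.

```python
from dataclasses import dataclass

# Effort labels named in the article; other levels may exist but are not assumed here.
DEFAULT_EFFORT = "medium"
EXTRA_HIGH_EFFORT = "xhigh"


@dataclass(frozen=True)
class Task:
    name: str
    latency_sensitive: bool
    especially_hard: bool = False


def pick_reasoning_effort(task: Task) -> str:
    """Hypothetical policy: medium unless the task is hard and latency is no concern."""
    if not task.latency_sensitive and task.especially_hard:
        return EXTRA_HIGH_EFFORT
    return DEFAULT_EFFORT


def estimated_thinking_tokens(baseline_tokens: int, reduction: float = 0.30) -> int:
    """Estimate thinking tokens after a fractional reduction (0.30 means 30% fewer)."""
    return round(baseline_tokens * (1.0 - reduction))


if __name__ == "__main__":
    overnight = Task("large refactor", latency_sensitive=False, especially_hard=True)
    interactive = Task("rename a variable", latency_sensitive=True)
    print(pick_reasoning_effort(overnight), pick_reasoning_effort(interactive))
    print(estimated_thinking_tokens(10_000))
```

A policy like this keeps the more expensive setting opt-in, which matches the article's note that medium is the recommended default.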

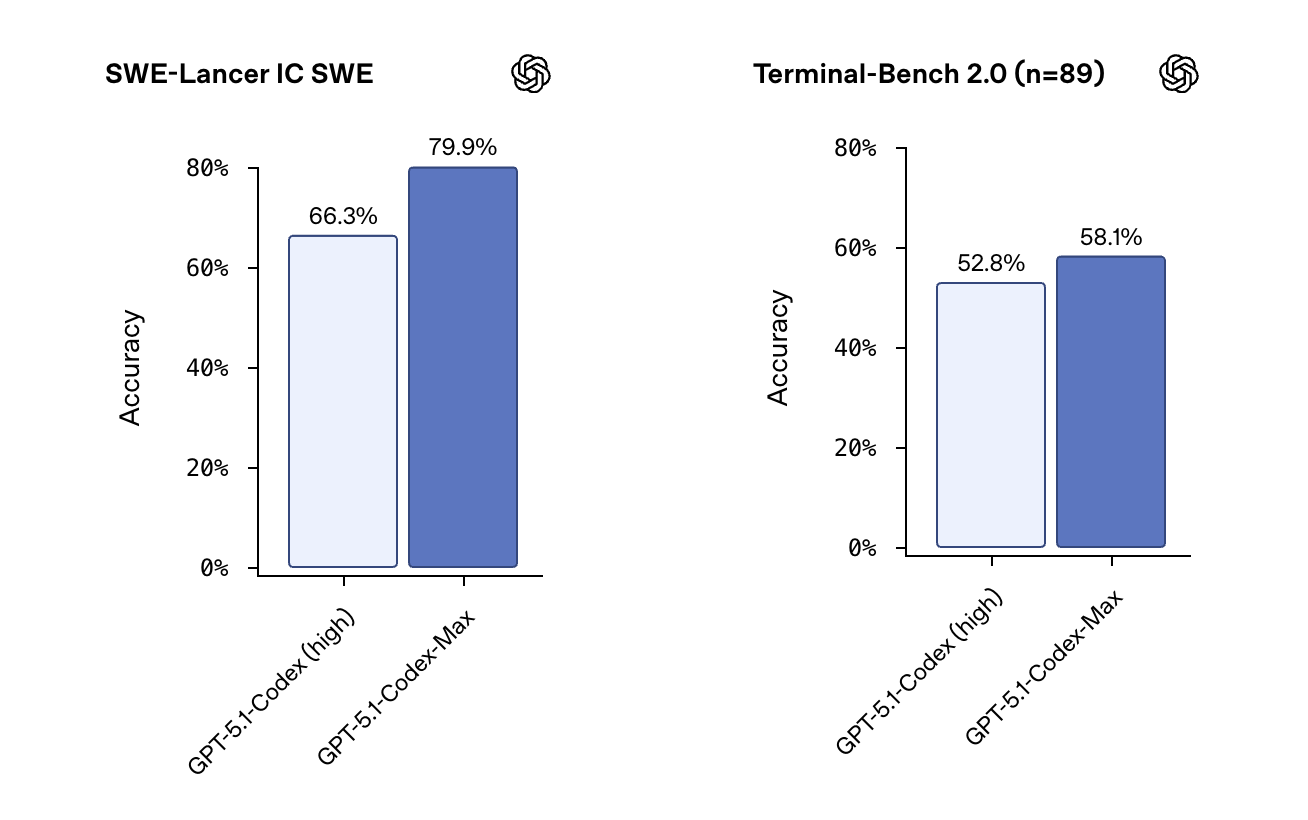

These changes show up in the benchmark results. GPT-5.1-Codex was evaluated at high reasoning effort and GPT-5.1-Codex-Max at xhigh. OpenAI reports the following scores for GPT-5.1-Codex and GPT-5.1-Codex-Max, respectively: 73.7% and 77.9% on SWE-bench Verified (500 problems), 66.3% and 79.9% on SWE-Lancer IC SWE, and 52.8% and 58.1% on Terminal-Bench 2.0. All evaluations were run with compaction enabled, and Terminal-Bench 2.0 used the Codex CLI inside the Laude Institute Harbor harness.
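
To make the size of these gaps easier to compare, the short script below computes the absolute gain (in percentage points) and the relative gain for each benchmark from the scores reported above. The data structure and function name are illustrative only. Note that the two models were evaluated at different effort levels, so the differences are not a like-for-like comparison.

```python
# Scores as reported in the article, in percent.
# First value: GPT-5.1-Codex; second value: GPT-5.1-Codex-Max.
REPORTED_SCORES = {
    "SWE-bench Verified": (73.7, 77.9),
    "SWE-Lancer IC SWE": (66.3, 79.9),
    "Terminal-Bench 2.0": (52.8, 58.1),
}


def improvements(scores):
    """Return {benchmark: (absolute_gain_points, relative_gain_percent)}."""
    result = {}
    for name, (baseline, new) in scores.items():
        absolute = round(new - baseline, 1)
        relative = round(100.0 * (new - baseline) / baseline, 1)
        result[name] = (absolute, relative)
    return result


if __name__ == "__main__":
    for name, (abs_gain, rel_gain) in improvements(REPORTED_SCORES).items():
        print(f"{name}: +{abs_gain} points ({rel_gain}% relative)")
```

The absolute gains work out to 4.2, 13.6, and 5.3 percentage points respectively, with SWE-Lancer IC SWE showing by far the largest jump.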

In qualitative tests, GPT-5.1-Codex-Max generates high-quality front-end designs with functionality and visual quality similar to GPT-5.1-Codex, but at lower overall token cost thanks to more efficient reasoning traces.

Key takeaways

- GPT-5.1-Codex-Max is a frontier agentic coding model built on an updated reasoning base and further trained on real-world software engineering tasks such as PR creation, code review, front-end coding, and Q&A. It is available now in the Codex CLI, IDE extension, cloud, and code review surfaces, with API access coming later.

- The model introduces native support for long-running work through compaction: it repeatedly compacts its own history to span multiple context windows, which allows autonomous coding sessions to run for more than 24 hours over millions of tokens while staying on a single task.

- GPT-5.1-Codex-Max retains the reasoning effort control from GPT-5.1. At medium effort it outperforms GPT-5.1-Codex on SWE-bench Verified while using about 30% fewer thinking tokens, and it adds an Extra High (xhigh) reasoning mode for the hardest tasks.

- On frontier coding benchmarks with compaction enabled, GPT-5.1-Codex-Max at xhigh effort improves on GPT-5.1-Codex at high effort: SWE-bench Verified rises from 73.7% to 77.9%, SWE-Lancer IC SWE from 66.3% to 79.9%, and Terminal-Bench 2.0 from 52.8% to 58.1%.

GPT-5.1-Codex-Max is a clear statement that OpenAI is doubling down on long-running agentic coding rather than short, single-shot edits. Compaction, frontier coding evaluations such as SWE-bench Verified and SWE-Lancer IC SWE, and explicit reasoning effort control make this model a test case for scaling test-time compute in real software engineering workflows, not just benchmarks. As this capability moves into production pipelines, the Preparedness Framework and the Codex sandbox will be critical. Overall, GPT-5.1-Codex-Max is a frontier agentic coding model that brings long-horizon reasoning into real-world development tools.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.
