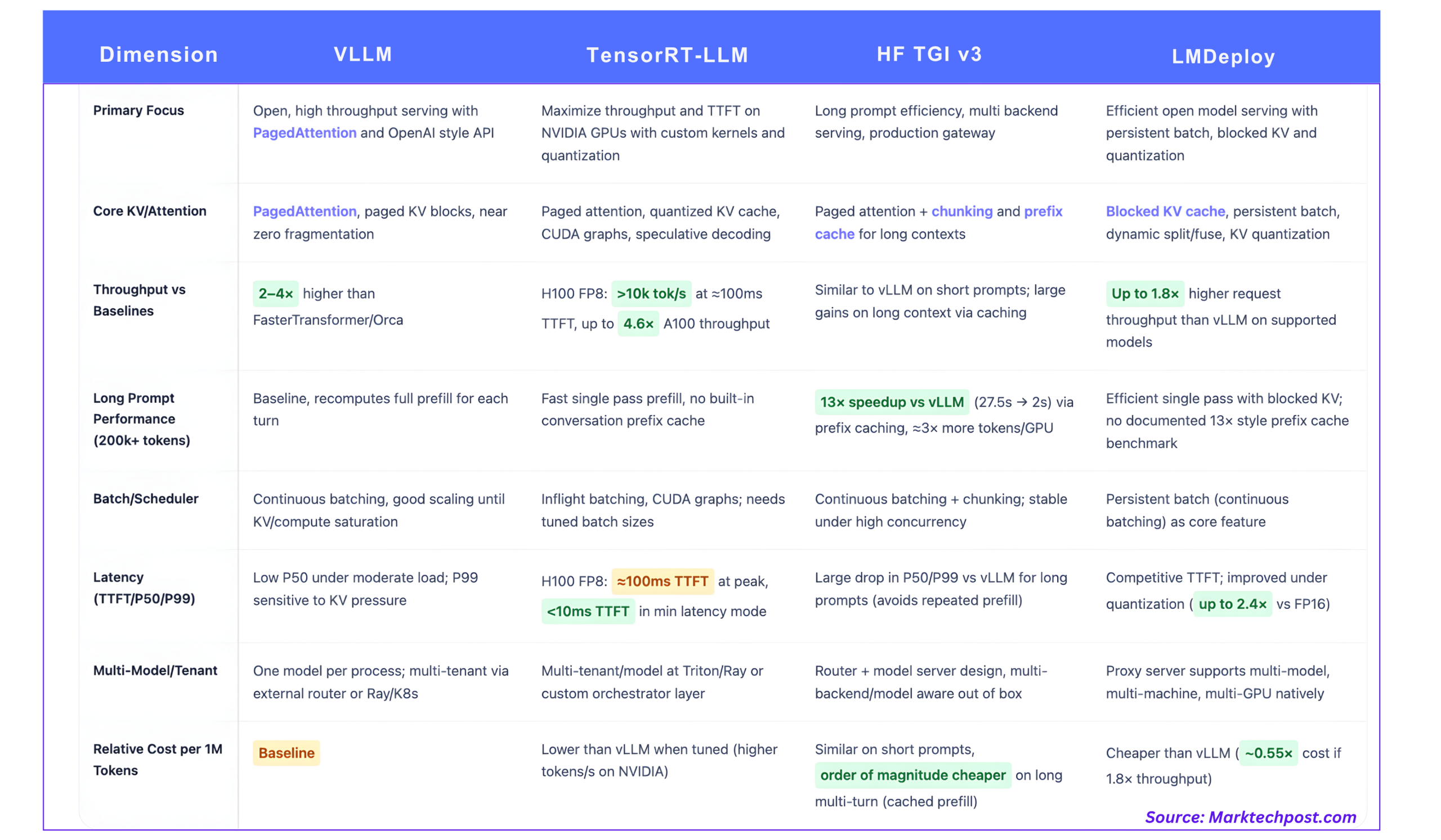

vLLM vs TensorRT-LLM vs HF TGI vs LMDeploy: an in-depth technical comparison for production LLM inference

Production LLM serving is now a systems problem, not a generate() loop. For real workloads, the choice of inference stack drives your tokens per second, tail latency, and ultimately cost per million tokens on a given GPU fleet.

This comparison focuses on 4 widely used stacks:

- vLLM

- NVIDIA TensorRT-LLM

- Hugging Face Text Generation Inference (TGI v3)

- LMDeploy

1. vLLM, PagedAttention as the open baseline

Core idea

vLLM is built around PagedAttention, an attention implementation that treats the KV cache like paged virtual memory rather than a single contiguous buffer per sequence.

Instead of allocating one large KV region per request, vLLM:

- Divides the KV cache into fixed-size blocks

- Maintains a block table that maps logical tokens to physical blocks

- Shares blocks between sequences wherever prefixes overlap

This reduces external fragmentation and lets the scheduler pack more concurrent sequences into the same VRAM.
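The block-table idea above can be sketched in a few lines. This is a minimal illustration, not vLLM's actual implementation: the class names, the block size of 16, and the rule that a fork shares only completely filled blocks are all assumptions made for the example (a real engine would typically use copy-on-write for a partially filled last block).

```python
BLOCK_SIZE = 16  # tokens per KV block; illustrative value, not a vLLM default


class BlockAllocator:
    """Pool of fixed-size physical KV blocks with reference counting."""

    def __init__(self, num_blocks):
        self.free = list(range(num_blocks))
        self.refcount = {}

    def allocate(self):
        if not self.free:
            raise MemoryError("KV cache exhausted")
        block = self.free.pop()
        self.refcount[block] = 1
        return block

    def share(self, block):
        # A second sequence now points at the same physical block.
        self.refcount[block] += 1
        return block

    def release(self, block):
        self.refcount[block] -= 1
        if self.refcount[block] == 0:
            del self.refcount[block]
            self.free.append(block)


class Sequence:
    """One request: a token count plus a block table (logical -> physical)."""

    def __init__(self, allocator):
        self.allocator = allocator
        self.num_tokens = 0
        self.block_table = []

    def append_token(self):
        # A new physical block is needed only when the last one is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(self.allocator.allocate())
        self.num_tokens += 1

    def slot(self, token_index):
        # Translate a logical token position to (physical block, offset).
        return self.block_table[token_index // BLOCK_SIZE], token_index % BLOCK_SIZE

    def fork(self):
        # Share only completely filled prefix blocks (simplifying assumption).
        child = Sequence(self.allocator)
        full_blocks = self.num_tokens // BLOCK_SIZE
        child.block_table = [
            self.allocator.share(b) for b in self.block_table[:full_blocks]
        ]
        child.num_tokens = full_blocks * BLOCK_SIZE
        return child

    def free(self):
        for block in self.block_table:
            self.allocator.release(block)
        self.block_table = []
        self.num_tokens = 0
```

Because a sequence only ever wastes the unused tail of its last block, the memory lost per request is bounded by one block, which is the intuition behind the low fragmentation described above.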

Throughput and latency

vLLM improves throughput by 2–4× compared with systems such as FasterTransformer and Orca at similar latency, with larger gains for longer sequences.

Key properties for operators:

- Continuous batching (also called in-flight batching) merges incoming requests into existing GPU batches instead of waiting for a fixed batching window.

- In a typical chat workload, throughput scales nearly linearly with concurrency until the KV memory or compute is saturated.

- For moderate concurrency, P50 latency stays low, but P99 latency can degrade once queues are long or KV memory is tight, especially for prefill-heavy queries.
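To make the batching difference concrete, here is a toy simulation under strong simplifying assumptions: every request is already queued at time zero, each decode step emits exactly one token per running sequence, and prefill cost is ignored. The function names are hypothetical and this is not how any of the engines implement scheduling.

```python
from collections import deque


def static_batching_steps(output_lengths, max_batch):
    """Fixed batches: a batch runs until its longest member has finished."""
    steps = 0
    for start in range(0, len(output_lengths), max_batch):
        steps += max(output_lengths[start:start + max_batch])
    return steps


def continuous_batching_steps(output_lengths, max_batch):
    """Continuous batching: a free slot is refilled before the next step."""
    waiting = deque(output_lengths)
    running = []  # remaining output tokens for each running sequence
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free batch slots.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step: every running sequence emits one token.
        running = [remaining - 1 for remaining in running]
        # Finished sequences leave the batch immediately.
        running = [remaining for remaining in running if remaining > 0]
        steps += 1
    return steps


lengths = [2, 8, 2, 2]  # output tokens per request (made-up workload)
print(static_batching_steps(lengths, max_batch=2))      # 10
print(continuous_batching_steps(lengths, max_batch=2))  # 8
```

In this made-up workload the short requests no longer wait behind the 8-token request, so the same work finishes in 8 decode steps instead of 10.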

vLLM exposes an OpenAI-compatible HTTP API and integrates well with Ray Serve and other orchestrators, which is why it is widely used as an open baseline.
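As a rough sketch of what an OpenAI-compatible API means for a client, the snippet below builds a chat completion request using only the standard library. The base URL, port, and model name are assumptions for illustration; substitute whatever your own server was started with. The request is constructed but not sent.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message, max_tokens=128):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "http://localhost:8000" and "my-model" are placeholders, not real defaults
# you can rely on for your deployment.
request = build_chat_request("http://localhost:8000", "my-model", "Hello")

# To send it against a running server:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the payload follows the OpenAI chat format, existing OpenAI client code can usually be pointed at such a server by changing only the base URL and model name.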

KV and multi-tenancy

- PagedAttention gives near-zero KV waste and flexible prefix sharing within and across requests.

- Each vLLM process serves one model; multi-tenant and multi-model setups are typically built with an external router or API gateway that fans out to multiple vLLM instances.
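The fan-out pattern can be sketched as a small routing table in front of several single-model instances. Everything here is hypothetical: the `ModelRouter` class, the model names, and the URLs are invented for the example, and a production gateway would add health checks, authentication, and load-aware balancing.

```python
import itertools


class ModelRouter:
    """Round-robin over the replicas registered for each model name."""

    def __init__(self, replicas_by_model):
        self._cycles = {
            model: itertools.cycle(urls)
            for model, urls in replicas_by_model.items()
        }

    def route(self, model):
        if model not in self._cycles:
            raise KeyError(f"no instance serves model {model!r}")
        return next(self._cycles[model])


router = ModelRouter({
    "chat-small": ["http://10.0.0.1:8000", "http://10.0.0.2:8000"],
    "chat-large": ["http://10.0.1.1:8000"],
})
print(router.route("chat-small"))  # http://10.0.0.1:8000
print(router.route("chat-small"))  # http://10.0.0.2:8000
```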

2. TensorRT-LLM, hardware maximum on NVIDIA GPUs

Core idea

TensorRT-LLM is NVIDIA's optimized inference library for its own GPUs. The library provides custom attention kernels, in-flight batching, paged KV caching, quantization down to FP4 and INT4, and speculative decoding.

It is tightly coupled to NVIDIA hardware, including FP8 tensor cores on Hopper and Blackwell.

Measured performance

NVIDIA's H100 vs A100 evaluation is the most concrete public reference:

- On H100 with FP8, TensorRT-LLM reaches over 10,000 output tokens/s at peak throughput for 64 concurrent requests, with ~100 ms time to first token (TTFT).

- H100 FP8 achieves up to 4.6× higher maximum throughput and 4.4× faster first-token latency than A100 on the same models.

For latency-sensitive modes:

- TensorRT-LLM on H100 can drive TTFT below 10 ms in batch-1 configurations, at the cost of lower overall throughput.

These figures are model and shape specific, but they give a realistic sense of scale.
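A quick back-of-the-envelope calculation shows what the H100 figures mean for a single user. It assumes the aggregate throughput is shared evenly across the 64 concurrent streams and uses 10,000 tokens/s as a floor; the 256-token reply length is a made-up example.

```python
def per_stream_rate(total_tokens_per_s, concurrent_requests):
    """Decode rate seen by one request if throughput is shared evenly."""
    return total_tokens_per_s / concurrent_requests


def response_latency_s(ttft_s, output_tokens, stream_rate):
    """Approximate end-to-end latency: first token, then steady decoding."""
    return ttft_s + output_tokens / stream_rate


rate = per_stream_rate(10_000, 64)
latency = response_latency_s(0.100, 256, rate)
print(f"{rate:.2f} tokens/s per stream")   # 156.25 tokens/s per stream
print(f"{latency:.2f} s for 256 tokens")   # 1.74 s for 256 tokens
```

Under these assumptions, each stream still decodes at well over 100 tokens/s even at peak throughput, which is the trade-off the batch-1 mode gives up in exchange for a lower TTFT.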

Prefill vs decode

TensorRT-LLM optimizes both phases:

- Prefill benefits from high-throughput FP8 attention kernels and tensor parallelism

- Decoding benefits from CUDA graphs, speculative decoding, quantized weights and KV, and kernel fusion

The result is very high tokens/second over a wide range of input and output lengths, especially when the engine is tuned for that model and batch profile.

KV and multi-tenancy

TensorRT-LLM provides:

- Paged KV cache with configurable layout

- Support for long sequences, KV reuse, and offloading

- In-flight batching and priority-aware scheduling primitives

NVIDIA pairs this with Ray-based or Triton-based orchestration patterns for multi-tenant clusters. Multi-model support is handled at the orchestrator level, not inside a single TensorRT-LLM engine instance.

3. Hugging Face TGI v3, long-prompt specialist and multi-backend gateway

Core idea

Text Generation Inference (TGI) is a Rust- and Python-based serving stack that adds:

- HTTP and gRPC APIs

- Continuous batching scheduler

- Observability and autoscaling hooks

- Pluggable backends, including vLLM-style engines, TensorRT-LLM, and other runtimes

Version 3 focuses on long-prompt processing through chunking and prefix caching.

Long prompt benchmark vs. vLLM

The TGI v3 documentation gives a clear benchmark:

- On long prompts with more than 200,000 tokens, a conversation reply that takes 27.5 s in vLLM can be served in about 2 s in TGI v3.

- This is reported as a 13× speedup on that workload.

- TGI v3 can process about 3× more tokens in the same GPU memory by reducing its memory footprint and exploiting chunking and caching.

The mechanism is:

- TGI keeps the original conversation context in a prefix cache, so subsequent turns pay only for the incremental tokens

- Cache lookup overhead is on the order of microseconds, which is negligible relative to prefill compute

This is a targeted optimization for workloads where prompts are extremely long and reused across turns, such as RAG pipelines and analytic summarization.
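The prefix-cache mechanism can be illustrated with a small block-hash sketch. This is a generic approach written for this article, not TGI's actual data structure: the `PrefixCache` class, the block size of 4, and the use of Python's built-in `hash` (which ignores collisions) are all simplifying assumptions.

```python
class PrefixCache:
    """Remembers which token-block prefixes already have KV entries."""

    def __init__(self, block_size=4):
        self.block_size = block_size
        self.known = set()

    def _block_keys(self, tokens):
        # Each key depends on the block's tokens and on the previous key,
        # so a key identifies the entire prefix up to that block.
        keys = []
        parent = 0
        full = len(tokens) - len(tokens) % self.block_size
        for start in range(0, full, self.block_size):
            block = tuple(tokens[start:start + self.block_size])
            parent = hash((parent, block))
            keys.append(parent)
        return keys

    def match_and_insert(self, tokens):
        """Return (cached_tokens, tokens_to_prefill), then cache this prompt."""
        keys = self._block_keys(tokens)
        cached_blocks = 0
        for key in keys:
            if key not in self.known:
                break
            cached_blocks += 1
        self.known.update(keys)
        cached = cached_blocks * self.block_size
        return cached, len(tokens) - cached


cache = PrefixCache(block_size=4)
turn_1 = list(range(10))             # first prompt: 10 token ids
turn_2 = turn_1 + [100, 101, 102]    # same context plus a new user message
print(cache.match_and_insert(turn_1))  # (0, 10)
print(cache.match_and_insert(turn_2))  # (8, 5)
```

On the second turn only 5 of the 13 tokens need prefill; with a 200,000-token context and a short follow-up question, the same effect is what turns tens of seconds of prefill into a lookup plus a small incremental pass.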

Architecture and latency behavior

Key components:

- Chunking: very long prompts are split into manageable segments for KV and scheduling

- Prefix caching: a data structure that shares long context across turns

- Continuous batching: incoming requests join batches of already running sequences

- PagedAttention and fused kernels in the GPU backends

For short chat-style workloads, throughput and latency are in the same ballpark as vLLM. For long, cacheable contexts, both P50 and P99 latency improve by an order of magnitude because the engine avoids repeated prefill.

Multi-backend and multi-model

TGI is designed as a router plus model server architecture. It can:

- Route requests across multiple models and replicas

- Target different backends such as TensorRT-LLM on H100 plus CPU or smaller GPU for low priority traffic

This makes it suitable as a central service layer in a multi-tenant environment.

4. LMDeploy, TurboMind with blocked KV and aggressive quantization

Core idea

LMDeploy, from the InternLM ecosystem, is a toolkit for compressing and serving LLMs, centered on the TurboMind engine. It focuses on:

- High-throughput request serving

- Blocked KV cache

- Persistent batching (continuous batching)

- Quantization of weights and KV cache

Relative throughput versus vLLM

The project states:

- "LMDeploy delivers up to 1.8× higher request throughput than vLLM", supported by persistent batching, blocked KV cache, dynamic split and fuse, tensor parallelism, and optimized CUDA kernels.

KV, quantization, and latency

LMDeploy includes:

- Blocked KV cache, similar to paged KV, which helps pack many sequences into VRAM

- Support for KV cache quantization, typically int8 or int4, to cut KV memory and bandwidth

- Weight-only quantization paths such as 4-bit AWQ

- A benchmarking harness that reports token throughput, request throughput, and first-token latency

This makes LMDeploy attractive when you want to run larger open models (such as InternLM or Qwen) on mid-range GPUs with aggressive compression while still maintaining good tokens/s.
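The effect of KV cache quantization on capacity follows from simple arithmetic. The sketch below uses a hypothetical model shape (32 layers, 8 KV heads, head dimension 128) and a hypothetical 16 GiB KV budget; it also ignores the small overhead of quantization scales and zero points, so real numbers will be somewhat lower.

```python
def kv_bytes_per_token(num_layers, num_kv_heads, head_dim, bits):
    """KV cache bytes for one token; the factor 2 covers keys and values."""
    return 2 * num_layers * num_kv_heads * head_dim * bits // 8


def max_cached_tokens(kv_budget_bytes, bytes_per_token):
    """How many tokens fit in the memory reserved for the KV cache."""
    return kv_budget_bytes // bytes_per_token


budget = 16 * 1024**3  # 16 GiB reserved for KV (assumed)
for bits in (16, 8, 4):
    per_token = kv_bytes_per_token(32, 8, 128, bits)
    print(bits, per_token, max_cached_tokens(budget, per_token))
# 16 131072 131072
# 8 65536 262144
# 4 32768 524288
```

Halving the KV precision doubles the number of tokens that fit in the same budget, which translates directly into more concurrent sequences or longer contexts on the same GPU.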

Multi-model deployment

LMDeploy provides a proxy server that can handle:

- Multi-model deployment

- Multi-machine, multi-GPU setup

- Routing logic for model selection based on request metadata

So architecturally, it sits closer to TGI than to a single engine.

When to use what?

- If you want maximum throughput and very low TTFT on NVIDIA GPUs

  - TensorRT-LLM is the primary choice

  - It uses FP8 and lower precision, custom kernels, and speculative decoding to push tokens/s and keep TTFT under 100 ms at high concurrency and under 10 ms at low concurrency

- If you are dominated by long prompts with reuse, such as RAG over large contexts

  - TGI v3 is a strong default

  - Its prefix caching and chunking give up to 3× token capacity and 13× lower latency than vLLM in published long-prompt benchmarks, without extra configuration

- If you want an open, simple engine with strong baseline performance and an OpenAI-style API

  - vLLM remains the standard baseline

  - PagedAttention and continuous batching make it 2–4× faster than older stacks at similar latency, and it integrates cleanly with Ray and K8s

- If you target open models such as InternLM or Qwen and value aggressive quantization with multi-model serving

  - LMDeploy is a good fit

  - Blocked KV cache, persistent batching, and int8 or int4 KV quantization give up to 1.8× higher request throughput than vLLM on supported models, with a router layer included

In practice, many development teams use a mix of these systems, such as using TensorRT-LLM for high-volume proprietary chat, TGI v3 for long-context analysis, and vLLM or LMDeploy for experimental and open model workloads. The key is to align the throughput, latency tail, and KV behavior with the actual token distribution in the traffic, and then calculate the cost per million tokens based on tokens/second measured on your own hardware.
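The final step, turning measured tokens/s into cost per million tokens, is a one-line formula. The sketch below is illustrative only: the $4.00 hourly GPU price is a made-up placeholder, and the throughput should come from a benchmark on your own hardware and traffic mix.

```python
def cost_per_million_tokens(gpu_hourly_cost, tokens_per_second, num_gpus=1):
    """Dollars per one million generated tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hourly_cost * num_gpus / tokens_per_hour * 1_000_000


# Hypothetical price; 10,000 tokens/s is the H100 FP8 figure cited earlier.
print(round(cost_per_million_tokens(4.00, 10_000), 3))  # 0.111
# The same GPU at a quarter of the throughput costs four times as much.
print(round(cost_per_million_tokens(4.00, 2_500), 3))   # 0.444
```

Since cost scales inversely with sustained throughput, a stack that looks only slightly faster in a benchmark can still shift the per-token bill noticeably at fleet scale.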

References

- vLLM/PagedAttention

- TensorRT-LLM performance and overview

- HF Text Generation Inference (TGI v3) long-prompt behavior

- LMDeploy/TurboMind

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.
