Google DeepMind launches SIMA 2, a Gemini-powered generalist agent for complex 3D virtual worlds

Google DeepMind has released SIMA 2 to test how far generalist embodied agents can go in complex 3D game worlds. The new version of SIMA (Scalable Instructable Multiworld Agent) upgrades the original instruction follower into a Gemini-driven system that can reason about goals, explain its plans, and improve through self-play across many different environments.

From SIMA 1 to SIMA 2

The first SIMA, released in 2024, learned more than 600 language-following skills such as “turn left”, “climb the ladder”, and “open the map”. It controlled commercial games using only rendered pixels and a virtual keyboard and mouse, without access to game internals. On complex tasks, DeepMind reported a SIMA 1 success rate of about 31%, while human players achieved about 71% on the same benchmark.

SIMA 2 keeps the same embodied interface but replaces the core policy with a Gemini model. According to TechCrunch, the system uses Gemini 2.5 Flash Lite as its reasoning engine. This turns SIMA from a direct mapping from pixels to actions into an agent that forms an internal plan, reasons about it in language, and then executes the necessary sequence of actions in the game. DeepMind describes this as a shift from a command follower to an interactive gaming companion that collaborates with the player.

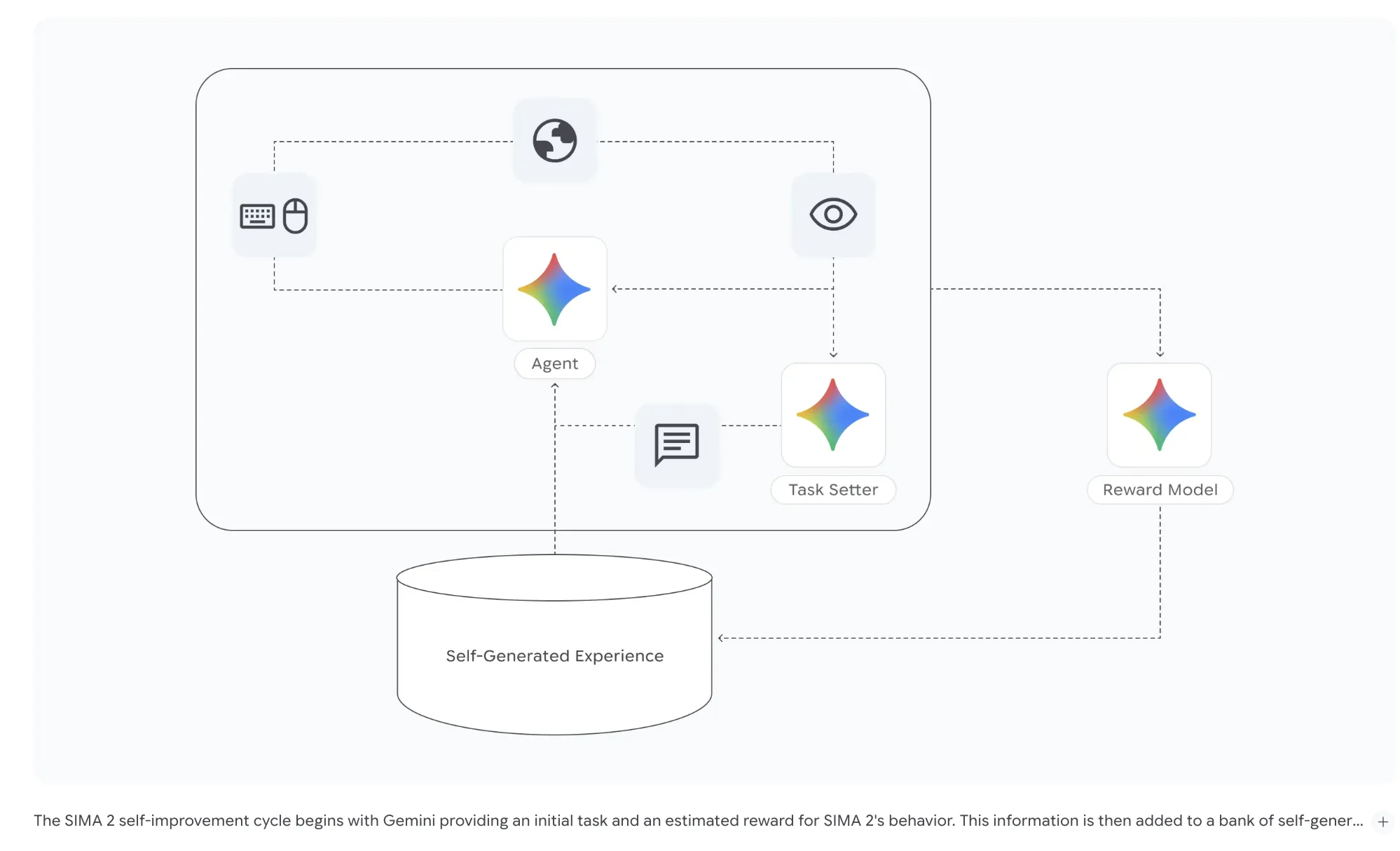

Architecture: Gemini in the control loop

The SIMA 2 architecture places Gemini at the core of the agent. The model receives visual observations and user instructions, infers high-level goals, and generates actions that are sent through a virtual keyboard and mouse interface. Training uses a mixture of human demonstration videos with language labels and labels generated by Gemini itself. This supervision lets the agent align its internal reasoning with both human intent and model-generated descriptions of behavior.
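The loop described above can be sketched in a few lines. This is a minimal, runnable sketch under stated assumptions, not DeepMind's implementation: `Observation`, `Action`, `Reasoner`, `ScriptedReasoner`, `VirtualInput`, and `control_step` are hypothetical names, and a scripted stub stands in for the Gemini core. It only shows the shape of the interface: pixels and an instruction go in, a goal is inferred, and actions leave exclusively as virtual keyboard and mouse events.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Observation:
    """Raw screen pixels only; the agent never reads game internals."""
    pixels: List[List[int]]  # hypothetical: a grayscale frame as rows of ints


@dataclass
class Action:
    """One virtual keyboard or mouse event (hypothetical schema)."""
    kind: str   # e.g. "key" or "click"
    value: str  # e.g. "w" or "left"


class Reasoner(Protocol):
    """Stand-in for the Gemini core: (frame, instruction) -> goal -> actions."""
    def infer_goal(self, obs: Observation, instruction: str) -> str: ...
    def propose_actions(self, obs: Observation, goal: str) -> List[Action]: ...


class ScriptedReasoner:
    """Toy reasoner so the loop runs; a real agent would query a model here."""
    def infer_goal(self, obs: Observation, instruction: str) -> str:
        return f"goal: {instruction}"

    def propose_actions(self, obs: Observation, goal: str) -> List[Action]:
        return [Action("key", "w"), Action("click", "left")]


class VirtualInput:
    """Records the keyboard/mouse events that would be sent to the game."""
    def __init__(self) -> None:
        self.sent: List[Action] = []

    def send(self, action: Action) -> None:
        self.sent.append(action)


def control_step(reasoner: Reasoner, controller: VirtualInput,
                 obs: Observation, instruction: str) -> str:
    """One perceive-reason-act step: infer a goal, then emit its actions."""
    goal = reasoner.infer_goal(obs, instruction)
    for action in reasoner.propose_actions(obs, goal):
        controller.send(action)
    return goal


controller = VirtualInput()
goal = control_step(ScriptedReasoner(), controller,
                    Observation(pixels=[[0, 0], [0, 0]]), "climb the ladder")
print(goal, [a.value for a in controller.sent])
```

Keeping the reasoner behind a small protocol is a design choice for the sketch: the perception and actuation sides stay fixed while the reasoning component can be swapped.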

Thanks to this training regimen, SIMA 2 can explain what it intends to do and list the steps it will take. In practice, the agent can answer questions about its current goals, justify its decisions, and expose interpretable chains of thought about the environment.
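One way to picture an agent that can list the steps it will take is to have the planner emit a structured plan rather than raw actions. The sketch below is a hypothetical illustration: the `Plan` class, its fields, and the example content are assumptions, not SIMA 2's actual output format. It shows how a goal, a rationale, and ordered steps can be rendered as a human-readable explanation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Plan:
    """Hypothetical structured plan that can be both executed and explained."""
    goal: str
    rationale: str
    steps: List[str]

    def explain(self) -> str:
        """Render the goal, the justification, and numbered steps as text."""
        lines = [f"Goal: {self.goal}", f"Why: {self.rationale}"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        return "\n".join(lines)


plan = Plan(
    goal="reach the rooftop",
    rationale="the ladder is the only visible route up",
    steps=["turn left", "walk to the ladder", "climb the ladder"],
)
print(plan.explain())
```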

Generalization and performance

The task completion chart shows SIMA 1 at about 31% and SIMA 2 at about 62% on the main evaluation suite, with humans at around 70%. Integrating Gemini therefore roughly doubles the original agent's performance on complex tasks. The important point is not the exact numbers but the shape: the new agent closes most of the measured gap between SIMA 1 and human players on long-horizon, language-specified tasks in the training games.
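The "closes most of the gap" claim can be made concrete with simple arithmetic on the approximate figures quoted in the article: 31% for SIMA 1, 62% for SIMA 2, and about 71% for humans. `gap_closed` is a hypothetical helper, not a DeepMind metric; it measures what fraction of the distance from the baseline to the human reference the new agent covers. With these numbers the ratio is 31/40, roughly 78%; using 70% for humans instead gives 31/39, roughly 79%.

```python
def gap_closed(baseline: float, new: float, reference: float) -> float:
    """Fraction of the baseline-to-reference gap covered by the new system."""
    if reference == baseline:
        raise ValueError("reference and baseline must differ")
    return (new - baseline) / (reference - baseline)


# Approximate task-completion rates quoted in the article.
sima1, sima2, human = 0.31, 0.62, 0.71

print(f"relative gain: {sima2 / sima1:.2f}x")
print(f"gap closed: {gap_closed(sima1, sima2, human):.3f}")
```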

The DeepMind team observed similar patterns in games that were never seen during training, such as ASKA and MineDojo. In these environments, SIMA 2 achieves much higher task completion rates than SIMA 1, which indicates genuine zero-shot generalization rather than overfitting to a fixed set of games. The agent also transfers abstract concepts: for example, it can reuse its understanding of “dig” in one title when asked to “harvest” in another.

Multimodal instructions

SIMA 2 extends the instruction channel beyond plain text. DeepMind's demos showed the agent following spoken commands, reacting to sketches drawn on the screen, and carrying out tasks from prompts made up only of emojis. In one example, the user asked SIMA 2 to go to the “house the color of a ripe tomato.” The Gemini core infers that ripe tomatoes are red, then identifies the red house and walks to it.
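The tomato example involves two steps: resolving a descriptive reference into a concrete attribute, then grounding that attribute in an object in the scene. The toy sketch below makes those two steps explicit. It is an assumption-laden illustration, not how SIMA 2 works internally: the `DESCRIPTION_TO_COLOR` table and `resolve_target` function are hypothetical and stand in for knowledge the Gemini core infers on its own, and the scene is a hand-written list rather than pixels.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical world knowledge; in SIMA 2 this inference is done by the
# Gemini core, not by a lookup table.
DESCRIPTION_TO_COLOR: Dict[str, str] = {
    "ripe tomato": "red",
    "clear sky": "blue",
    "fresh grass": "green",
}


@dataclass
class SceneObject:
    kind: str
    color: str
    position: tuple


def resolve_target(instruction: str,
                   scene: List[SceneObject]) -> Optional[SceneObject]:
    """Map 'the color of a <thing>' to a color, then pick a matching object."""
    for description, color in DESCRIPTION_TO_COLOR.items():
        if description in instruction:
            for obj in scene:
                if obj.kind in instruction and obj.color == color:
                    return obj
    return None


scene = [
    SceneObject("house", "blue", (3, 4)),
    SceneObject("house", "red", (10, -2)),
    SceneObject("tree", "green", (1, 1)),
]
target = resolve_target("go to the house the color of a ripe tomato", scene)
print(target)
```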

Gemini also enables instructions in multiple natural languages, as well as hybrid prompts that combine verbal and visual cues. For physical AI and robotics developers, this is a concrete multimodal stack: a shared representation links text, audio, images, and game actions, and the agent uses this representation to ground abstract symbols in concrete control sequences.

Self-improvement at scale

One of the main research contributions of SIMA 2 is its explicit self-improvement loop. After an initial phase that uses human gameplay as a baseline, the team moves the agent to a new game and lets it learn only from its own experience. A separate Gemini model generates new tasks for the agent in each world, and a reward model scores each attempt.

These trajectories are stored in a self-generated dataset. Later generations of SIMA 2 train on this data, which lets the agent complete tasks where earlier generations failed without any new human demonstrations. This is a concrete example of a multi-task, model-in-the-loop data engine: a language model specifies goals and provides feedback, and the agent turns that feedback into new, effective policies.
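The loop can be sketched at toy scale. Every component here is a hypothetical stand-in chosen only to make the structure runnable: `propose_task` plays the role of the task-setting Gemini model, `reward_model` plays the learned reward model (here it simply compares against known target sequences), and "training" the next generation just memorizes trajectories that scored 1.0. The article does not say how SIMA 2 filters or weights its self-generated data, so the success threshold is an assumption.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical action vocabulary; every two-step sequence is a candidate plan.
ACTIONS = ("forward", "turn", "use")
CANDIDATES = list(itertools.product(ACTIONS, repeat=2))


@dataclass
class Trajectory:
    task: str
    actions: Tuple[str, ...]
    reward: float


class ToyAgent:
    """Replays learned skills; otherwise explores candidates in a fixed order."""
    def __init__(self, skills: Dict[str, Tuple[str, ...]]) -> None:
        self.skills = dict(skills)
        self.tries: Dict[str, int] = {}

    def act(self, task: str) -> Tuple[str, ...]:
        if task in self.skills:
            return self.skills[task]
        n = self.tries.get(task, 0)
        self.tries[task] = n + 1
        return CANDIDATES[n % len(CANDIDATES)]


def run_generation(agent: ToyAgent, propose_task: Callable[[], str],
                   reward_model: Callable[[str, Tuple[str, ...]], float],
                   episodes: int) -> List[Trajectory]:
    """Self-play: the task setter proposes, the agent acts, the reward model scores."""
    trajectories = []
    for _ in range(episodes):
        task = propose_task()
        actions = agent.act(task)
        trajectories.append(Trajectory(task, actions, reward_model(task, actions)))
    return trajectories


def train_next(agent: ToyAgent, data: List[Trajectory],
               threshold: float = 1.0) -> ToyAgent:
    """Toy 'training': keep prior skills and add every successful trajectory."""
    skills = dict(agent.skills)
    for t in data:
        if t.reward >= threshold:
            skills[t.task] = t.actions
    return ToyAgent(skills)


def success_rate(data: List[Trajectory]) -> float:
    return sum(t.reward for t in data) / len(data)


# Hypothetical stand-ins for the Gemini task setter and the reward model.
TARGETS = {"collect wood": ("forward", "use"), "open the door": ("turn", "use")}
task_stream = itertools.cycle(TARGETS)


def propose_task() -> str:
    return next(task_stream)


def reward_model(task: str, actions: Tuple[str, ...]) -> float:
    return 1.0 if actions == TARGETS[task] else 0.0


gen0 = ToyAgent({})  # starts with no skills for this new game
data0 = run_generation(gen0, propose_task, reward_model, episodes=12)
gen1 = train_next(gen0, data0)
data1 = run_generation(gen1, propose_task, reward_model, episodes=12)
print(success_rate(data0), success_rate(data1))
```

In this toy run the first generation succeeds on only 2 of 12 episodes while it explores, and the second generation, trained only on that self-generated data, completes every episode. No human demonstrations enter the loop.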

Genie 3 worlds

To push generalization further, DeepMind pairs SIMA 2 with Genie 3, a world model that generates interactive 3D environments from a single image or text prompt. In these generated worlds, the agent must orient itself, interpret instructions, and act toward goals, even though the geometry and assets differ from those of every training game.

DeepMind reports that SIMA 2 can navigate these Genie 3 scenes, identify objects such as benches and trees, and carry out requested actions coherently. This matters for researchers because it shows that a single agent can operate across both commercial games and generated environments using the same reasoning core and control interface.
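The point about a shared control interface can be illustrated with an evaluation harness in which the same policy runs, unchanged, against any world that exposes frames in and key presses out. This is a hypothetical sketch: `PixelEnv`, `ToyWorld`, `evaluate`, and `walk_forward` are illustrative names, and the two toy worlds (one labelled as a commercial game, one as a generated world) are trivially simple stand-ins, not Genie 3 or any real game API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol, Tuple

Frame = List[int]  # hypothetical: a (tiny) flattened screen image


class PixelEnv(Protocol):
    """Hypothetical interface shared by commercial games and generated worlds."""
    name: str

    def reset(self, instruction: str) -> Frame: ...
    def step(self, key: str) -> Tuple[Frame, bool]: ...  # (next frame, task done)


@dataclass
class ToyWorld:
    """Toy world: the goal is `distance` steps ahead; the frame shows what is left."""
    name: str
    distance: int
    pos: int = 0

    def reset(self, instruction: str) -> Frame:
        self.pos = 0
        return [self.distance]

    def step(self, key: str) -> Tuple[Frame, bool]:
        if key == "w":  # virtual "forward" key
            self.pos += 1
        return [self.distance - self.pos], self.pos >= self.distance


def evaluate(policy: Callable[[Frame, str], str], envs: List[PixelEnv],
             instruction: str, max_steps: int = 20) -> Dict[str, bool]:
    """Run one unchanged policy in every world and record task success."""
    results: Dict[str, bool] = {}
    for env in envs:
        frame = env.reset(instruction)
        done = False
        for _ in range(max_steps):
            frame, done = env.step(policy(frame, instruction))
            if done:
                break
        results[env.name] = done
    return results


def walk_forward(frame: Frame, instruction: str) -> str:
    """Trivial policy: press forward while the target is still ahead."""
    return "w" if frame[0] > 0 else "e"


envs = [ToyWorld("commercial game", 3), ToyWorld("generated world", 5)]
print(evaluate(walk_forward, envs, "walk to the bench"))
```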

Main points

- Gemini-centered architecture: SIMA 2 integrates Gemini (reportedly Gemini 2.5 Flash Lite) as its core reasoning and planning module, wrapped in a visuomotor control stack that acts from pixels through a virtual keyboard and mouse across many commercial games.

- Benchmark gains over SIMA 1: On DeepMind’s main task suite, SIMA 2 roughly doubles SIMA 1’s 31% task completion rate and approaches human-level performance in the training games, while also achieving significantly higher success rates in held-out environments such as ASKA and MineDojo.

- Multimodal, compositional instruction following: The agent can follow long, compositional instructions and accepts multimodal prompts, including speech, sketches, and emojis, by grounding language and symbols in a shared representation of visual observations and game actions.

- Self-improvement through model-generated tasks and rewards: SIMA 2 uses a Gemini-based teacher to generate tasks and a learned reward model to score trajectories, thereby building a growing library of experience that enables later generations of agents to outperform earlier ones without the need for additional human demonstrations.

- Genie 3 stress test and its impact on robotics: Combining SIMA 2 with Genie 3, which synthesizes interactive 3D environments from images or text, shows that the agent can transfer skills to newly generated worlds, supporting DeepMind’s claim that the stack is a concrete step toward general embodied agents and, ultimately, more capable real-world robots.

SIMA 2 is a meaningful systems milestone, not just a benchmark win. By placing a Gemini 2.5 Flash Lite model at its core, the DeepMind team demonstrates a practical recipe that combines multimodal perception, language-based planning, and Gemini-orchestrated self-improvement loops, validated in both commercial games and Genie 3-generated environments. Overall, SIMA 2 shows how an embodied Gemini stack can serve as a realistic precursor to general-purpose robotic agents.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy for a broad audience to understand. The platform has more than 2 million monthly views, reflecting its popularity with readers.
