Baidu releases ERNIE-4.5-VL-28B-A3B-Thinking: an open-source, compact multimodal reasoning model in the ERNIE-4.5 family

How do we get large-model-level multimodal reasoning over documents, charts, and videos while running only a 3B-class model in production? Baidu has added a new model to the open-source ERNIE-4.5 family. ERNIE-4.5-VL-28B-A3B-Thinking is a vision-language model focused on document, chart, and video understanding with a small active parameter budget.

Architecture and training setup

ERNIE-4.5-VL-28B-A3B-Thinking is built on the ERNIE-4.5-VL-28B-A3B Mixture-of-Experts (MoE) architecture. The family uses a heterogeneous multimodal MoE design, with parameters shared across text and vision plus modality-specific experts. The released model reports about 30B total parameters, the architecture belongs to the 28B-VL branch, and only 3B parameters are activated per token through the A3B routing scheme. This gives the compute and memory profile of a 3B-class model while retaining a larger pool of capacity for reasoning.
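To make the "28B total, 3B active" idea concrete, here is a minimal sketch of top-k expert routing for a single token. The expert count, the top-k value, and the per-expert sizes are hypothetical numbers picked only so the totals resemble the figures above; they are not the real ERNIE-4.5-VL configuration, and the actual A3B router may work differently.

```python
import math
import random


def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def active_fraction(shared_params, params_per_expert, num_experts, k):
    """Fraction of all parameters that one token touches under top-k routing."""
    total = shared_params + num_experts * params_per_expert
    active = shared_params + k * params_per_expert
    return active / total


# Hypothetical sizes: chosen so the totals land near 28B total and 3B active.
NUM_EXPERTS, TOP_K = 64, 6
SHARED = 0.4e9
PER_EXPERT = (28e9 - SHARED) / NUM_EXPERTS

random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
chosen = top_k_route(logits, TOP_K)
share = active_fraction(SHARED, PER_EXPERT, NUM_EXPERTS, TOP_K)

print("experts used for this token:", [i for i, _ in chosen])
print(f"active share of parameters: {share:.1%}")
```

Every expert still has to be stored, but each token only pays the compute cost of the shared layers plus its selected experts, which is why the per-token cost tracks the active count rather than the total.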

The model goes through an additional mid-training stage on a large corpus of visual language reasoning data. This stage aims to improve representation power and semantic alignment between the visual and language modalities, which matters for dense text in documents and fine structures in charts. On top of that, ERNIE-4.5-VL-28B-A3B-Thinking uses multimodal reinforcement learning on verifiable tasks, combining the GSPO and IcePop strategies with dynamic difficulty sampling to stabilize MoE training and push the model toward hard examples.
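The article does not say how dynamic difficulty sampling is implemented. One plausible reading, sketched below purely as an assumption, is to sample prompts in proportion to how informative their rollout group is: a prompt that every rollout solves, or that no rollout solves, produces identical verifiable rewards and so carries no relative learning signal. The weighting function and all names here are hypothetical.

```python
import random


def pass_rate(rewards):
    """Share of rollouts in a group that earned the verifiable reward (1 = correct)."""
    return sum(rewards) / len(rewards)


def sampling_weight(rate):
    """Informativeness score for a prompt (assumed form, not from the source).

    rate * (1 - rate) is zero when all rollouts succeed or all fail and
    peaks at a 50% pass rate.
    """
    return rate * (1.0 - rate)


def sample_prompts(groups, n, rng):
    """Draw n prompt ids, with replacement, in proportion to their weight."""
    weighted = {pid: sampling_weight(pass_rate(r)) for pid, r in groups.items()}
    ids = [pid for pid, w in weighted.items() if w > 0]
    return rng.choices(ids, weights=[weighted[pid] for pid in ids], k=n)


# Hypothetical rollout outcomes per prompt: 1 = verified correct, 0 = wrong.
groups = {
    "too_easy": [1, 1, 1, 1],
    "too_hard": [0, 0, 0, 0],
    "medium": [1, 0, 1, 0],
    "hard": [0, 0, 0, 1],
}

batch = sample_prompts(groups, 8, random.Random(0))
print(batch)
```

In this toy run only the "medium" and "hard" prompts can be drawn, since the other two groups have zero weight.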

Key capabilities

Baidu researchers position the model as a lightweight multimodal reasoning engine that activates only 3B parameters while approaching the behavior of larger flagship systems on internal benchmarks. The officially listed capabilities are visual reasoning, STEM reasoning, visual grounding, thinking with images, tool utilization, and video understanding.

Thinking with images is central. The model can zoom in on a region, reason about the cropped view, and then integrate these local observations into a final answer. When internal knowledge is insufficient, tool utilization extends this by invoking tools such as image search. Both features are exposed through the reasoning parser and tool-call parser paths in a deployment.
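The zoom step can be pictured as turning a region of interest into a pixel crop that is then fed back to the model. The sketch below shows only that geometric step on a plain 2D list; the normalized `(x0, y0, x1, y1)` region format, the `margin` parameter, and both function names are assumptions for illustration, not the model's actual interface.

```python
def zoom_box(region, image_size, margin=0.1):
    """Turn a normalized region into a clamped pixel crop box.

    region: (x0, y0, x1, y1) with values in [0, 1], relative to the image.
    image_size: (width, height) in pixels.
    margin: extra context added on every side, as a fraction of the region size.
    """
    x0, y0, x1, y1 = region
    width, height = image_size
    pad_x = (x1 - x0) * margin
    pad_y = (y1 - y0) * margin
    # Clamp to the image so the padded box never leaves the frame.
    left = max(0, int((x0 - pad_x) * width))
    top = max(0, int((y0 - pad_y) * height))
    right = min(width, int((x1 + pad_x) * width + 0.5))
    bottom = min(height, int((y1 + pad_y) * height + 0.5))
    return left, top, right, bottom


def crop(pixels, box):
    """Crop a row-major 2D list of pixels to box = (left, top, right, bottom)."""
    left, top, right, bottom = box
    return [row[left:right] for row in pixels[top:bottom]]


# Toy 8x4 "image" whose pixels are just their (row, column) coordinates.
image = [[(r, c) for c in range(8)] for r in range(4)]
box = zoom_box((0.5, 0.0, 1.0, 0.5), (8, 4), margin=0.0)
patch = crop(image, box)
print(box, len(patch), len(patch[0]))
```

In a real pipeline the crop would be re-encoded as an image and appended to the conversation so the model can reason about the enlarged view before answering.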

Performance and positioning

Compared with Qwen2.5-VL-7B and Qwen2.5-VL-32B, the lightweight vision-language model ERNIE-4.5-VL-28B-A3B achieves competitive or superior performance on many benchmarks while using fewer activated parameters. ERNIE-4.5-VL models also support thinking and non-thinking modes; thinking mode improves reasoning-centered tasks while maintaining strong perception quality.

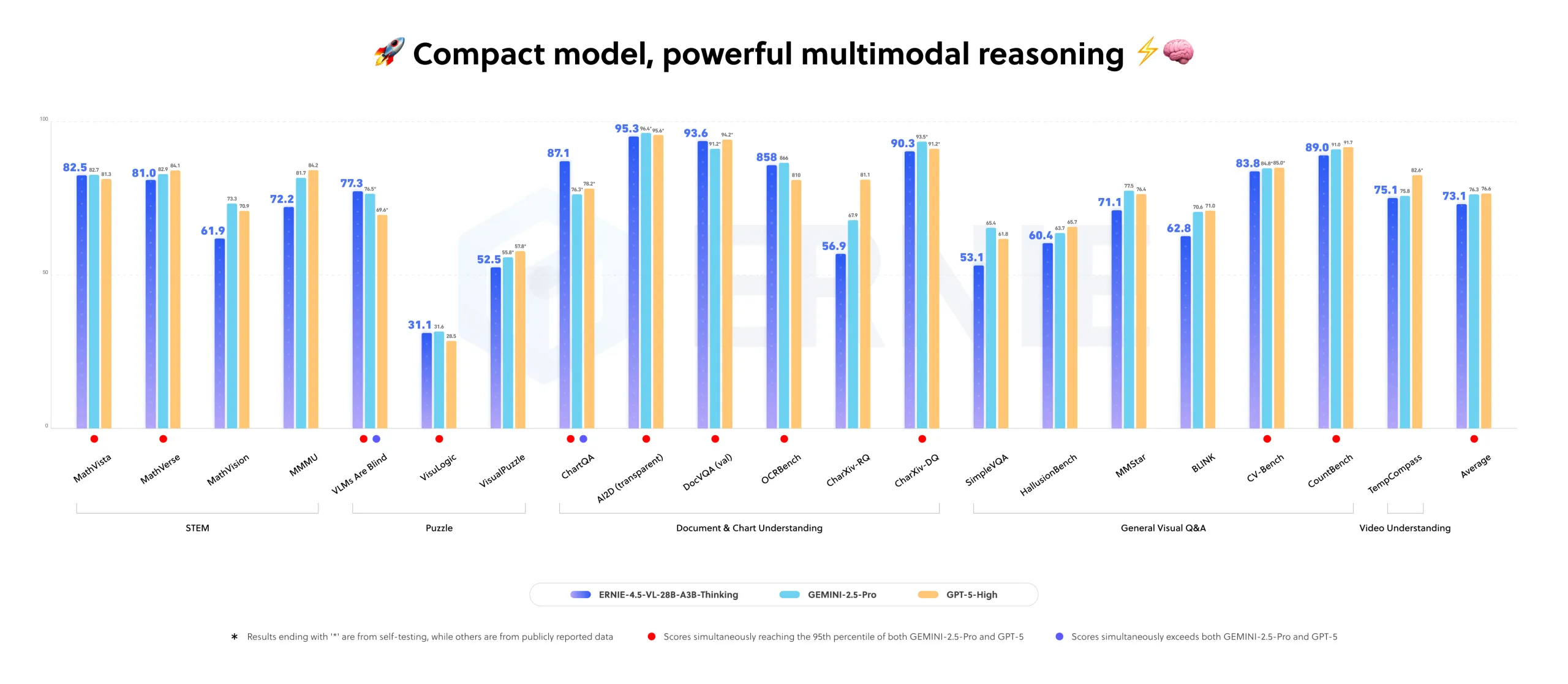

For the Thinking variant specifically, Baidu researchers describe ERNIE-4.5-VL-28B-A3B-Thinking as closely matching the performance of industry flagship models on internal multimodal benchmarks.

Main points

- ERNIE-4.5-VL-28B-A3B-Thinking uses a Mixture-of-Experts architecture with approximately 30B total parameters and only 3B active parameters per token, providing efficient multimodal reasoning.

- The model is optimized for document, chart, and video understanding, with an additional mid-training stage for visual language reasoning and multimodal reinforcement learning using GSPO, IcePop, and dynamic difficulty sampling.

- Thinking with images lets the model iteratively zoom in on image regions and reason over the crops, while tool utilization lets it call external tools such as image search for long-tail recognition.

- It demonstrates strong performance on analytics-style charts, STEM circuit problems, visual grounding with JSON bounding boxes, and video segment localization with timestamped answers.

- The model is released under the Apache License 2.0, supports deployment through Transformers, vLLM, and FastDeploy, and can be fine-tuned through ERNIEKit using SFT, LoRA, and DPO for commercial multimodal applications.
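For the visual grounding case mentioned in the list above, downstream code usually has to pull the bounding boxes out of a free-text reply and sanity-check them. The sketch below assumes a hypothetical reply format, a JSON array of objects with `label` and `bbox` keys where `bbox` is `[x1, y1, x2, y2]`; the article does not specify the actual schema, so treat the key names as placeholders.

```python
import json
import re


def extract_boxes(reply):
    """Pull the first-to-last bracketed JSON array out of a reply and validate boxes."""
    match = re.search(r"\[.*\]", reply, flags=re.DOTALL)
    if match is None:
        return []
    try:
        items = json.loads(match.group(0))
    except json.JSONDecodeError:
        return []

    boxes = []
    for item in items:
        if not isinstance(item, dict):
            continue
        bbox = item.get("bbox")
        is_valid_shape = (
            isinstance(bbox, list)
            and len(bbox) == 4
            and all(isinstance(v, (int, float)) for v in bbox)
        )
        if not is_valid_shape:
            continue
        x1, y1, x2, y2 = bbox
        # Keep only boxes with positive width and height.
        if x1 < x2 and y1 < y2:
            boxes.append({"label": str(item.get("label", "")),
                          "bbox": (x1, y1, x2, y2)})
    return boxes


# Hypothetical reply; the second box is malformed on purpose (x1 > x2).
reply = (
    "The two resistors are located here:\n"
    '[{"label": "R1", "bbox": [12, 40, 88, 96]},'
    ' {"label": "R2", "bbox": [120, 40, 60, 96]}]'
)
boxes = extract_boxes(reply)
print(boxes)
```

Validating before use matters because a grounded answer is only as useful as its coordinates; rejecting degenerate boxes early keeps drawing and cropping code simple.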

Comparison table

| Model | Training stage | Total / active parameters | Modalities | Context length (tokens) |
|---|---|---|---|---|
| ERNIE-4.5-VL-28B-A3B-Base | Pretraining | 28B total, 3B active per token | Text, vision | 131,072 |
| ERNIE-4.5-VL-28B-A3B (PT) | Post-trained chat model | 28B total, 3B active per token | Text, vision | 131,072 |
| ERNIE-4.5-VL-28B-A3B-Thinking | Reasoning-oriented mid-training on ERNIE-4.5-VL-28B-A3B | 28B architecture, 3B active per token, 30B parameters reported on Hugging Face | Text, vision | 131,072 (FastDeploy example uses a maximum model length of 131,072) |
| Qwen2.5-VL-7B-Instruct | Post-trained vision-language model | About 8B total (7B class) | Text, image, video | 32,768 text positions in config (max_position_embeddings) |
| Qwen2.5-VL-32B-Instruct | Post-trained large VL model with additional tuning | 33B total | Text, image, video | 32,768 text positions (same Qwen2.5-VL text config family) |

ERNIE-4.5-VL-28B-A3B-Thinking is a practical release for teams that want multimodal reasoning over documents, charts, and videos with only 3B activated parameters, while still using a Mixture-of-Experts architecture with about 30B total parameters under the Apache License 2.0. It combines thinking with images, tool utilization, and multimodal reinforcement learning into a deployable stack that directly targets real-world analytics and understanding workloads.
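For teams that serve the model behind an OpenAI-compatible chat endpoint, which vLLM provides, a document question might be packaged as in the sketch below. It only builds the request body and sends nothing. The model identifier, the question, and the helper names are assumptions for illustration; check the model card and your server configuration for the exact values.

```python
import base64
import json

# Assumed model identifier; confirm against the published model card.
MODEL_ID = "baidu/ERNIE-4.5-VL-28B-A3B-Thinking"


def image_data_url(image_bytes, mime="image/png"):
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_chat_request(question, image_bytes, model=MODEL_ID, max_tokens=1024):
    """Build an OpenAI-style chat completion body with one image and one question."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url",
                     "image_url": {"url": image_data_url(image_bytes)}},
                    {"type": "text", "text": question},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real scanned page.
payload = build_chat_request("What is the invoice total?", b"placeholder image bytes")
body = json.dumps(payload)
print(len(body), "bytes, ready to POST to <server>/v1/chat/completions")
```

Keeping request construction separate from the HTTP call makes it easy to log, test, or replay prompts without a GPU server running.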


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.
