Moonshot AI releases Kimi K2 Thinking: Impressive thinking model that can perform up to 200-300 sequential tool calls without human intervention

How do we design artificial intelligence systems that can plan, reason, and act on long sequences of decisions without constant human guidance? Moonshot AI has released Kimi K2 Thinking, an open source thinking agent model that exposes the complete reasoning flow of the Kimi K2 Mixture of Experts architecture. It targets workloads that require deep inference, long-term tool usage, and stable agent behavior across multiple steps.

What are Kimi K2’s thoughts??

Kimi K2 Thinking is described as the latest and most powerful version of Moonshot’s open source thinking model. It is built as a thinking agent that can reason step by step and dynamically invoke tools during the reasoning process. The model is designed to interweave chains of thoughts with function calls so that it can read, think, call tools, think again, and repeat hundreds of steps.

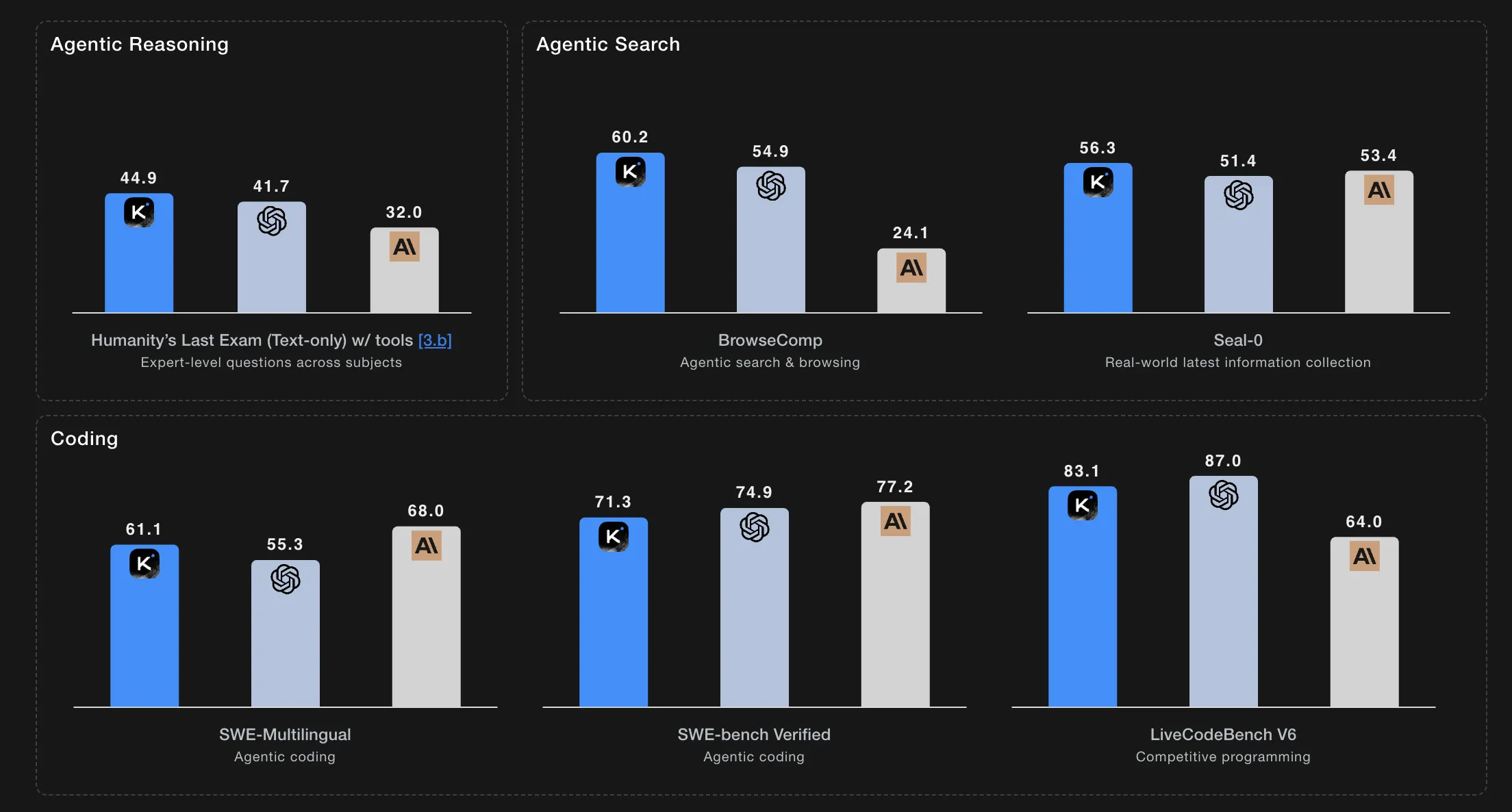

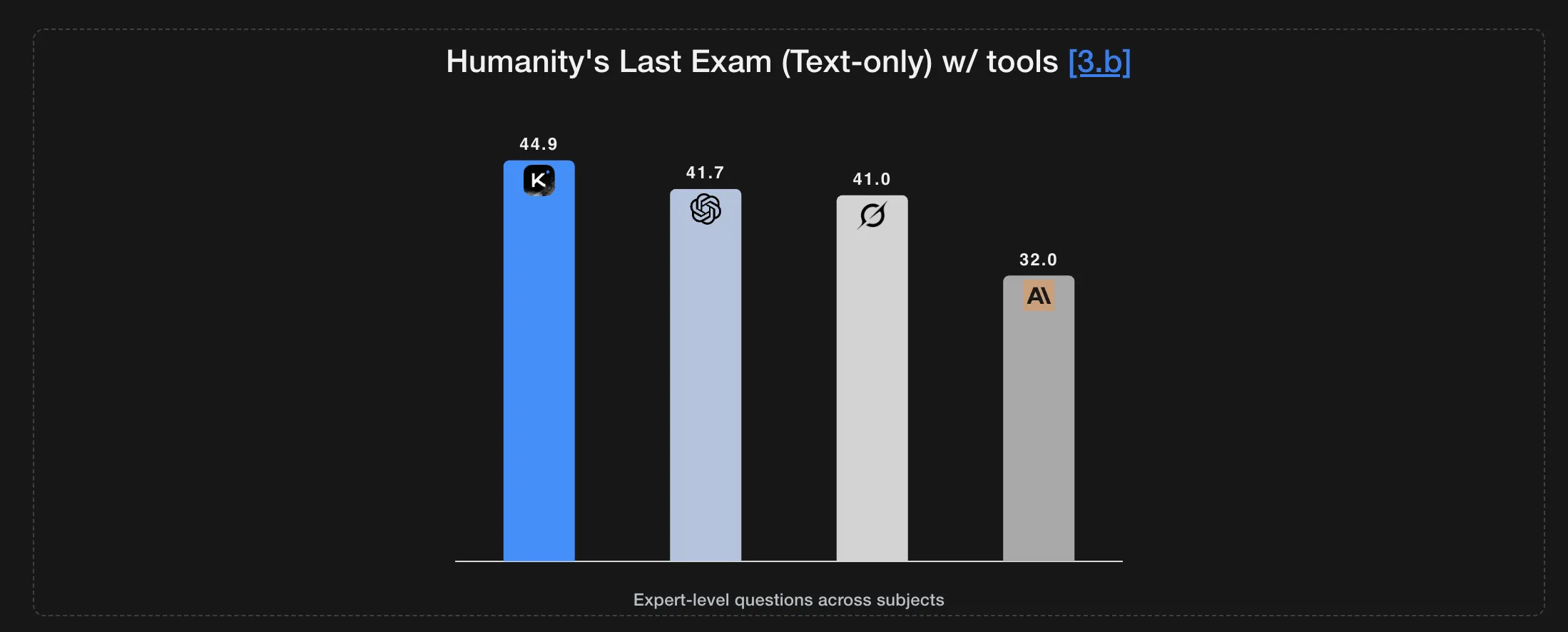

This model sets a new state of the art for Humanity’s Last Exam and BrowseComp while maintaining consistent behavior across approximately 200 to 300 consecutive tool calls without human intervention.

Meanwhile, K2 Thinking is released as an open-weight model with a 256K token context window and native INT4 inference to reduce latency and GPU memory usage while maintaining baseline performance.

K2 Thinking is already live in chat mode on kimi.com, accessible through the Moonshot Platform API, and plans to adopt a dedicated agent mode to expose complete tool usage behavior.

Architecture, MoE design and context length

Kimi K2 Thinking inherits the design of Kimi K2 Mixture of Experts. This model adopts MoE architecture, with a total parameter of 1T and each token activation parameter of 32B. It has 61 layers, including 1 dense layer, 384 experts, 8 experts selected for each token, 1 shared expert, 64 attention heads, and the attention hidden dimension is 7168. MoE hidden dimensions are 2048 per expert.

The vocabulary size is 160K tokens and the context length is 256K. The attention mechanism is Multi head Latent Attention, and the activation function is SwiGLU.

Test time scaling and long-term thinking

Kimi K2 Thinking is explicitly optimized for test time scaling. The model is trained to expand its reasoning length and tool call depth when faced with more difficult tasks, rather than relying on fixed short chains of thinking.

In the last human test, without tools, K2 Thinking scored 23.9. When using tools, the score rose to 44.9, and in heavy duty it hit 51.0. On the AIME25 using Python it reports 99.1, and on the HMMT25 using Python it reports 95.1. It scores 78.6 on IMO AnswerBench and 84.5 on GPQA.

The test protocol sets the upper limit of the thinking token budget for HLE, AIME25, HMMT25 and GPQA to 96K. It uses 128K thought tokens for IMO AnswerBench, LiveCodeBench and OJ Bench and 32K completion tokens for long-form writing. On HLE, the maximum number of steps is limited to 120, and the inference budget per step is 48K. In the agent search task, the limit is 300 steps with a 24K inference budget per step.

Benchmarks for agent search and encoding

When using the tools for agent search tasks, K2 Thinking scored 60.2 on BrowseComp, 62.3 on BrowseComp ZH, 56.3 on Seal 0, 47.4 on FinSearchComp T3, and 87.0 on Frames.

In the general knowledge benchmark, MMLU Pro scored 84.6, MMLU Redux scored 94.4, Longformwriting scored 73.8, and HealthBench scored 58.0.

In terms of coding, K2 Thinking achieved 71.3 points on SWE Bench with Tools for Verification, 61.1 points on SWE Benchmark Multilingual using Tools, 41.9 points on Multi SWE Bench using Tools, 44.8 points on SciCode, 83.1 points on LiveCodeBenchV6, and 48.7 on OJ Bench in C plus plus setup. It achieved 47.1 points on Terminal Bench using simulation tools.

The Moonshot team also defined a heavy model that runs eight trajectories in parallel and then aggregates them to produce the final answer. This is used in some inference benchmarks to squeeze additional accuracy out of the same base model.

Native INT4 quantization and deployment

K2 Thinking is trained as a native INT4 model. The research team applied quantization-aware training in the post-training stage and used INT4 weight-only quantization on the MoE component. This supports INT4 inference, resulting in approximately 2x faster generation in low-latency mode while maintaining state-of-the-art performance. All reported benchmark scores are obtained at INT4 precision.

Checkpoints are saved in compressed tensor format and can be decompressed to higher precision formats such as FP8 or BF16 using the official compressed tensor tool. Recommended inference engines include vLLM, SGLang, and KTransformers.

Main points

- Kimi K2 Thinking is an open-weighted thinking agent that extends the Kimi K2 expert hybrid architecture with explicit long-term reasoning and tool usage instead of just short chat-like responses.

- The model is designed with trillion parameters MoE, each token has approximately tens of billions of active parameters, 256K context windows, and is trained as a native INT4 model through quantization-aware training, increasing the inference speed by approximately 2 times while maintaining stable baseline performance.

- K2 Thinking is optimized for test time scaling, it can perform hundreds of consecutive tool calls in a single task, and is evaluated with a large thinking token budget and strict step caps, which is important when you try to reproduce its inference and agent results.

- In public benchmarks, it leads or is competitive on inference, agent search, and coding tasks such as HLE, BrowseComp, and SWE benchmarks using tools. Validation using tools shows that the thinking-oriented variant has clear advantages over the basic non-thinking K2 model.

Kimi K2 Thinking is a strong signal that test time scaling is now a first-class design goal for open source inference models. Moonshot AI not only exposes a 1T parameter hybrid expert system with 32B active parameters and 256K context windows, it does this with native INT4 quantization, quantization-aware training, and tool orchestration to run hundreds of steps in a production environment like setup. Overall, Kimi K2 Thinking shows that open weight inference agents with long-term planning and tool usage are becoming practical infrastructure and not just research demonstrations.

Check Model weight and technical details. Please feel free to check out our GitHub page for tutorials, code, and notebooks. In addition, welcome to follow us twitter And don’t forget to join our 100k+ ML SubReddit and subscribe our newsletter. wait! Are you using Telegram? Now you can also join us via telegram.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an AI media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.