Cache-to-Cache (C2C): Direct semantic communication between large language models via KV cache fusion

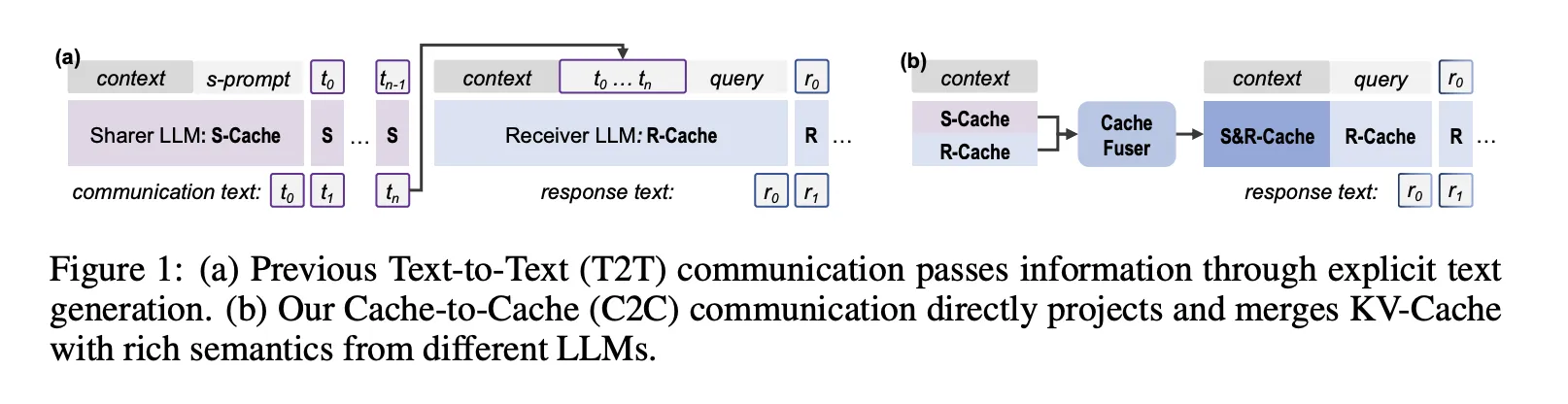

Can large language models collaborate without exchanging text tokens? A team of researchers from Tsinghua University, Infinigence AI, the Chinese University of Hong Kong, Shanghai Artificial Intelligence Laboratory, and Shanghai Jiao Tong University says yes. Cache-to-Cache (C2C) is a new communication paradigm in which large language models exchange information through their KV cache rather than through generated text.

Text communication is the bottleneck of multi-LLM systems

Most current multi-LLM systems use text for communication. One model writes an explanation, and the other reads it as context.

The design has three real costs:

- Internal activations are compressed into short natural-language messages, so many semantic signals in the KV-Cache never cross the interface.

- Natural language is ambiguous. Even with structured protocols, a coder model may encode structural signals, such as the role of an HTML tag, that do not survive a vague text description.

- Each communication step requires token-by-token decoding, which dominates latency in long analytical exchanges.

The C2C work asks a direct question: can the KV-Cache itself serve as the communication channel?

Oracle experiments: can KV-Cache carry communication?

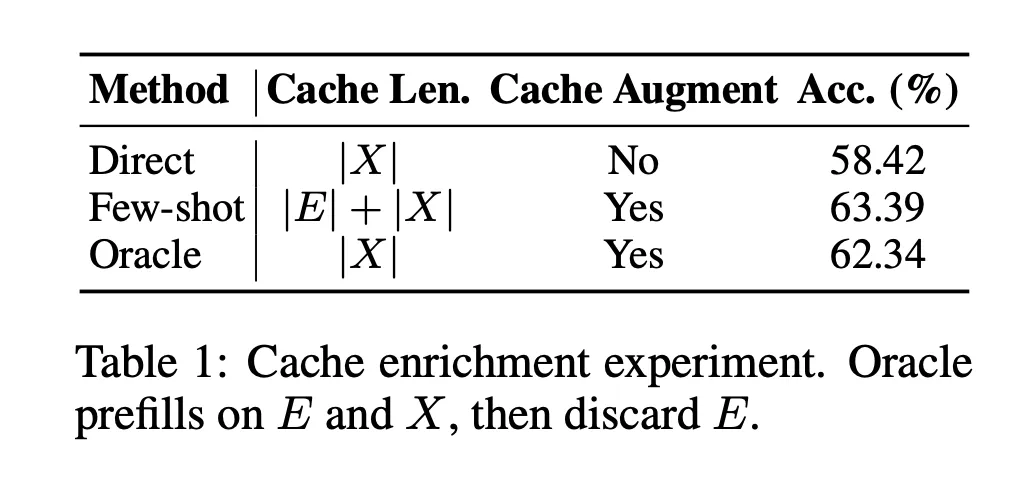

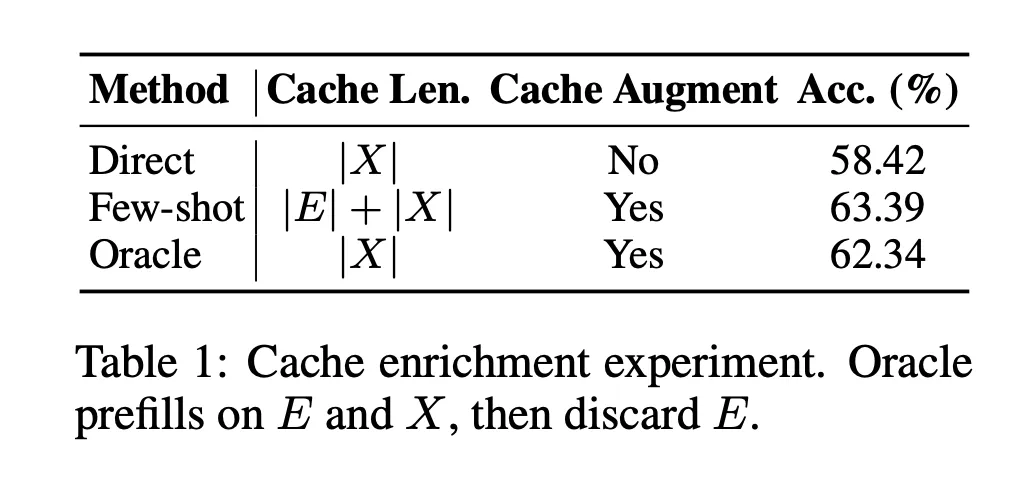

The research team first ran two oracle-style experiments to test whether KV-Cache was a useful medium.

Cache enrichment oracle

They compared three settings on a multiple-choice benchmark:

- Direct: prefill on the question only.

- Few-shot: prefill on the exemplars plus the question, which produces a longer cache.

- Oracle: prefill on the exemplars plus the question, then discard the exemplar segment and keep only the question-aligned slice of the cache, so the cache length matches Direct.

At the same cache length, Oracle raises accuracy from 58.42% (Direct) to 62.34%, while Few-shot, with its longer cache, reaches 63.39%. This shows that enriching the question's KV-Cache itself can improve performance without adding tokens. A layer-wise analysis further showed that enriching only selected layers works better than enriching all layers, an observation that later motivated the gating mechanism.
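The Oracle setting amounts to slicing a few-shot cache down to the question's positions. The sketch below is a simplified illustration, not the authors' code: it assumes a single array of shape (layers, seq_len, width) with keys and values merged, and assumes the question tokens occupy the last `question_len` positions. The kept slice differs from a Direct cache only because, during prefill, those positions attended to the exemplars.

```python
import numpy as np


def oracle_slice(fewshot_cache: np.ndarray, question_len: int) -> np.ndarray:
    """Keep only the question-aligned slice of a few-shot KV cache.

    Assumes shape (num_layers, seq_len, width) and that the question
    occupies the final `question_len` positions of the sequence.
    """
    if not 0 < question_len <= fewshot_cache.shape[1]:
        raise ValueError("question_len must be in (0, seq_len]")
    return fewshot_cache[:, -question_len:, :]


# Hypothetical sizes: 4 layers, 10 exemplar tokens + 6 question tokens, width 8.
rng = np.random.default_rng(0)
exemplar_len, question_len = 10, 6
fewshot_cache = rng.normal(size=(4, exemplar_len + question_len, 8))
oracle_cache = oracle_slice(fewshot_cache, question_len)
print(oracle_cache.shape)  # (4, 6, 8): same length as a Direct prefill of the question
```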

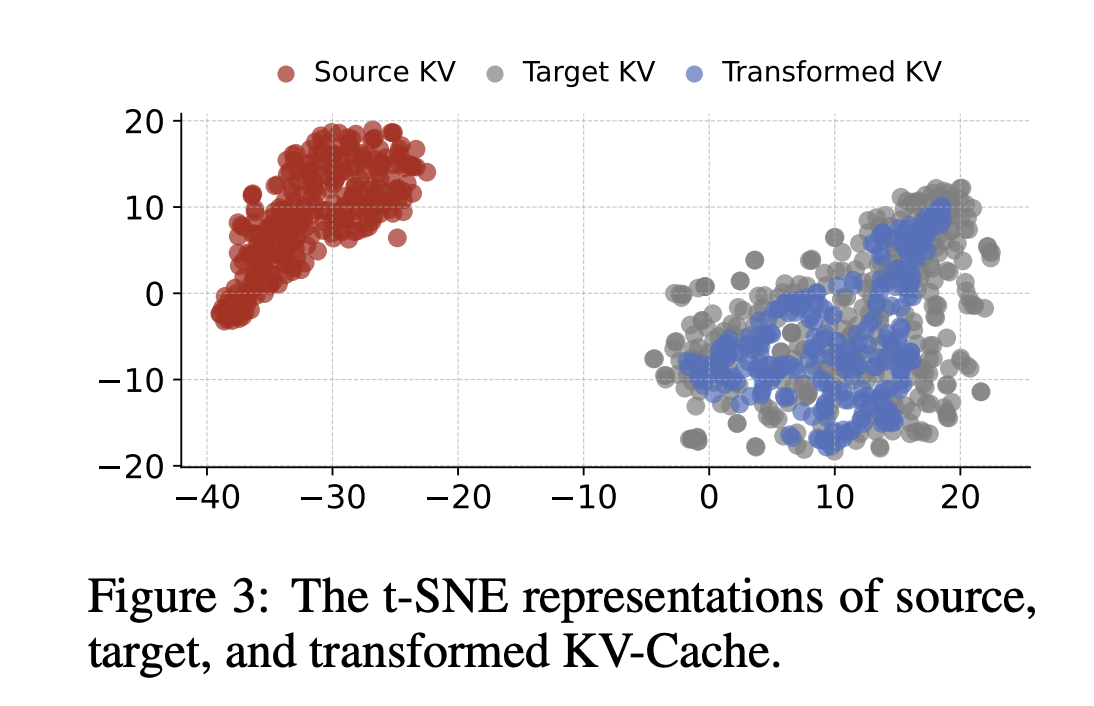

Cache transformation oracle

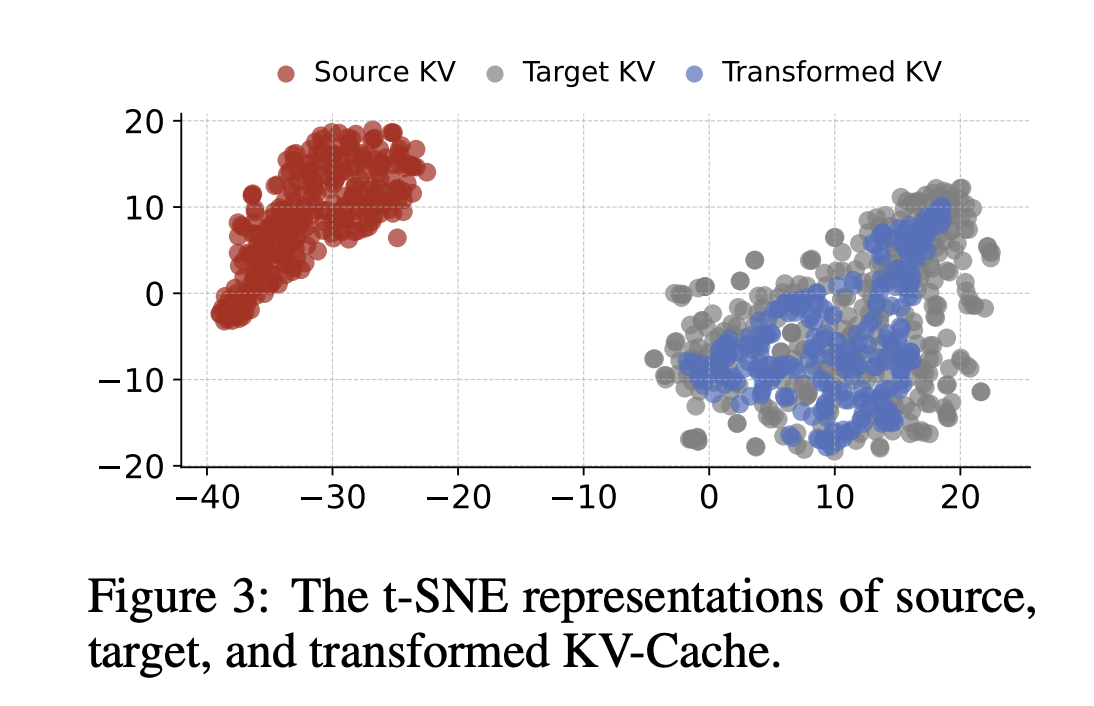

Next, they tested whether the KV-Cache of one model can be transformed into the cache space of another. They trained a three-layer MLP to map the KV-Cache of Qwen3 4B into that of Qwen3 0.6B. A t-SNE plot shows that the transformed cache lies inside the target cache manifold, but occupies only a subregion of it.
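A three-layer MLP projector of the kind described above can be sketched as follows. This is a hypothetical forward pass only: the ReLU activations, the hidden width, and the toy dimensions are assumptions, the weights are randomly initialised rather than trained, and the training objective used in the oracle study is not reproduced here.

```python
import numpy as np


class MLPProjector:
    """Hypothetical three-layer MLP mapping source-model KV vectors to a target width."""

    def __init__(self, d_src: int, d_hidden: int, d_tgt: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Random initialisation stands in for trained weights.
        self.w1 = rng.normal(scale=d_src ** -0.5, size=(d_src, d_hidden))
        self.w2 = rng.normal(scale=d_hidden ** -0.5, size=(d_hidden, d_hidden))
        self.w3 = rng.normal(scale=d_hidden ** -0.5, size=(d_hidden, d_tgt))
        self.b1 = np.zeros(d_hidden)
        self.b2 = np.zeros(d_hidden)
        self.b3 = np.zeros(d_tgt)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        h = np.maximum(x @ self.w1 + self.b1, 0.0)  # layer 1 + ReLU (assumed activation)
        h = np.maximum(h @ self.w2 + self.b2, 0.0)  # layer 2 + ReLU
        return h @ self.w3 + self.b3                # layer 3: linear output in target space


# Hypothetical widths: 12 cached tokens from a "large" model of width 64, mapped to width 32.
src_cache = np.random.default_rng(1).normal(size=(12, 64))
proj = MLPProjector(d_src=64, d_hidden=128, d_tgt=32)
tgt_like = proj(src_cache)
print(tgt_like.shape)  # (12, 32)
```

In practice such a projector would be fit per layer (or per layer pair) and its outputs compared against the target model's real cache, which is what the t-SNE plot visualises.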

C2C: direct semantic communication through KV-Cache

Based on these oracle results, the research team defined cache-to-cache communication between a sharer model and a receiver model.

During prefill, both models read the same input and produce layer-wise KV caches. For each receiver layer, C2C selects the mapped sharer layer and applies the C2C Fuser to produce a fused cache. During decoding, the receiver predicts tokens conditioned on this fused cache instead of its original cache.
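The per-layer dataflow described above can be sketched as a loop over receiver layers. This is an illustrative sketch under simplifying assumptions: each layer's cache is a single (seq_len, width) array with keys and values merged, both caches already cover the same aligned tokens, and `toy_fuser` is a hypothetical placeholder for the learned C2C Fuser.

```python
from typing import Callable, Dict, List

import numpy as np

# One (seq_len, width) array per layer; keys and values are merged for brevity.
Cache = List[np.ndarray]


def build_fused_cache(receiver: Cache, sharer: Cache,
                      layer_map: Dict[int, int],
                      fuser: Callable[[np.ndarray, np.ndarray], np.ndarray]) -> Cache:
    """For each receiver layer, fuse in its mapped sharer layer, if it has one."""
    fused: Cache = []
    for r_idx, r_layer in enumerate(receiver):
        s_idx = layer_map.get(r_idx)
        if s_idx is None:
            fused.append(r_layer)  # unmapped layer: keep the receiver's own cache
        else:
            fused.append(fuser(r_layer, sharer[s_idx]))
    return fused


def toy_fuser(r: np.ndarray, s: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the learned fuser: a fixed residual blend."""
    return r + 0.5 * s


# Hypothetical prefill outputs: receiver has 3 layers, sharer has 5, same token count.
rng = np.random.default_rng(0)
receiver = [rng.normal(size=(6, 4)) for _ in range(3)]
sharer = [rng.normal(size=(6, 4)) for _ in range(5)]
layer_map = {0: 2, 1: 3, 2: 4}  # receiver layer -> sharer layer
fused = build_fused_cache(receiver, sharer, layer_map, toy_fuser)
# Decoding would now attend to `fused` instead of `receiver`.
```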

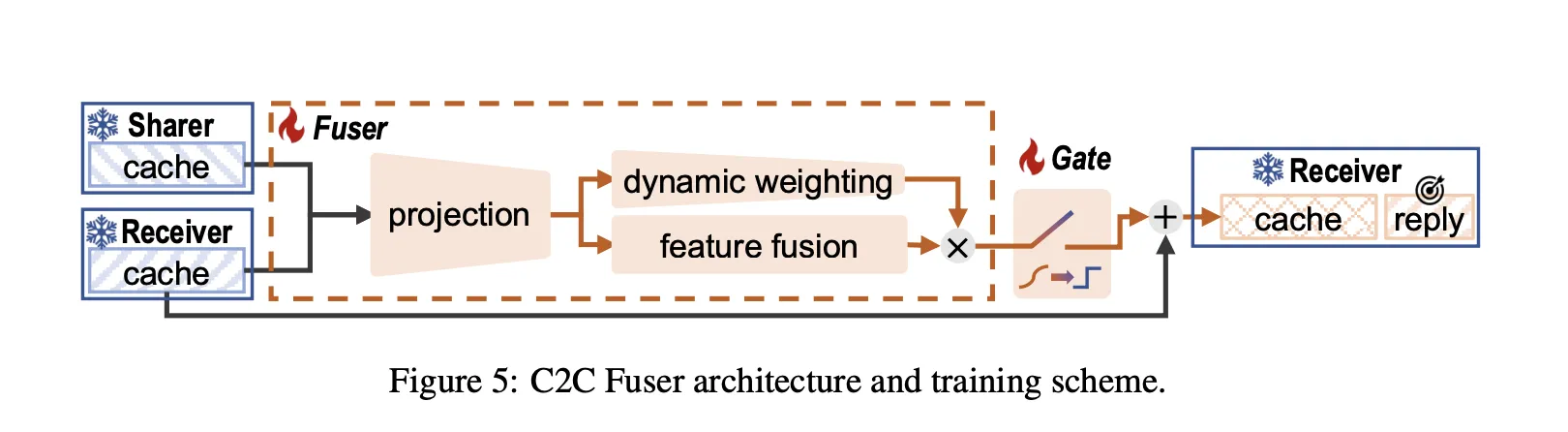

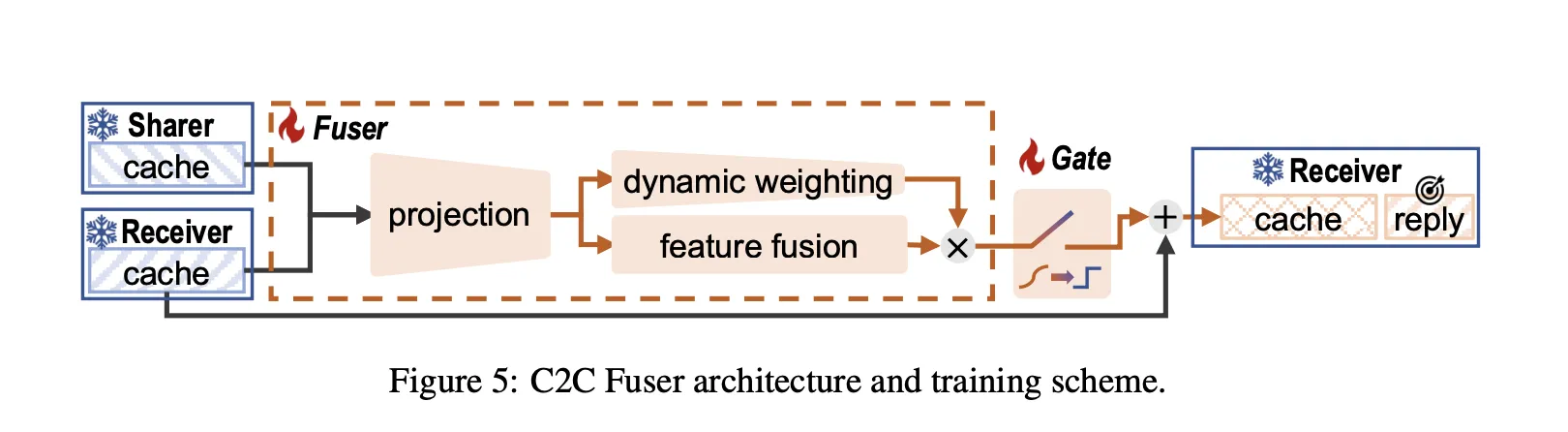

The C2C Fuser follows the principle of residual integration and has three modules:

- Projection module: concatenates the sharer and receiver KV-Cache vectors, applies a projection layer, and then applies a feature fusion layer.

- Dynamic weighting module: reweights attention heads conditioned on the input, so that some heads rely more on sharer information.

- Learnable gate: adds a per-layer gate that decides whether to inject sharer context into that layer. The gate uses a Gumbel-sigmoid during training and becomes binary at inference time.
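The three modules can be combined into a toy single-layer fuser to show how they fit together. This is a hypothetical sketch, not the released C2C code: the weights are random stand-ins, keys and values are merged into one array, the head weights are computed from the concatenated input via a sigmoid, and the inference-time gate thresholds the gate logit at zero. All of these are assumptions made for illustration.

```python
import numpy as np


def gumbel_sigmoid(logit: float, tau: float, rng: np.random.Generator) -> float:
    """Relaxed binary sample: sigmoid((logit + logistic noise) / tau)."""
    u = rng.uniform(1e-6, 1 - 1e-6)
    noise = np.log(u) - np.log1p(-u)  # Logistic(0, 1) noise, i.e. a difference of two Gumbels
    return float(1.0 / (1.0 + np.exp(-(logit + noise) / tau)))


class ToyC2CFuser:
    """Hypothetical single-layer fuser: projection, head weighting, gate, residual."""

    def __init__(self, n_heads: int, d_head: int, d_sharer: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_recv = n_heads * d_head
        d_in = d_recv + d_sharer
        self.d_head = d_head
        # Randomly initialised stand-ins for trained parameters.
        self.w_proj = rng.normal(scale=d_in ** -0.5, size=(d_in, d_recv))
        self.w_fuse = rng.normal(scale=d_recv ** -0.5, size=(d_recv, d_recv))
        self.w_head = rng.normal(scale=d_in ** -0.5, size=(d_in, n_heads))
        self.gate_logit = 0.0  # one learnable scalar per layer

    def __call__(self, recv, shar, training=False, tau=1.0, rng=None):
        # recv: (T, n_heads * d_head) receiver KV; shar: (T, d_sharer) aligned sharer KV.
        x = np.concatenate([recv, shar], axis=-1)
        delta = np.maximum(x @ self.w_proj, 0.0) @ self.w_fuse  # projection, then feature fusion
        head_w = 1.0 / (1.0 + np.exp(-(x @ self.w_head)))       # (T, n_heads) input-dependent weights
        head_w = np.repeat(head_w, self.d_head, axis=-1)         # expand to each head's channels
        if training:
            if rng is None:
                rng = np.random.default_rng()
            gate = gumbel_sigmoid(self.gate_logit, tau, rng)     # soft, differentiable gate
        else:
            gate = float(self.gate_logit > 0.0)                  # hard 0/1 gate at inference
        return recv + gate * head_w * delta                      # residual integration


rng = np.random.default_rng(1)
recv = rng.normal(size=(5, 2 * 8))   # 5 tokens, 2 heads of width 8
shar = rng.normal(size=(5, 12))      # hypothetical sharer width 12
fuser = ToyC2CFuser(n_heads=2, d_head=8, d_sharer=12)
fuser.gate_logit = -1.0
closed = fuser(recv, shar)           # gate closed: receiver cache passes through unchanged
fuser.gate_logit = 1.0
opened = fuser(recv, shar)           # gate open: sharer information is injected
soft = fuser(recv, shar, training=True, tau=0.5, rng=rng)
```

The residual form means a closed gate leaves the receiver's own cache untouched, which is one way to read the claim that the receiver can absorb sharer semantics without destabilizing its representation.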

Because the sharer and receiver can come from different model families and sizes, C2C also defines:

- Token alignment: each receiver token is decoded into its string and re-encoded with the sharer tokenizer, and the sharer token with the greatest string coverage is selected.

- Layer alignment: a terminal strategy pairs the final layers first and walks backwards until every layer of the shallower model is covered.
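Both alignment rules can be illustrated with a few lines of code. This is a simplified sketch: the terminal layer alignment follows the description directly, but the token alignment swaps real tokenizers for hypothetical hand-written token lists and measures "string coverage" as character-span overlap, ignoring whitespace markers and other tokenizer details.

```python
from typing import Dict, List, Tuple

Span = Tuple[int, int]  # [start, end) character offsets


def terminal_layer_alignment(n_receiver: int, n_sharer: int) -> Dict[int, int]:
    """Pair the last layers first and walk backwards until the shallower model is covered."""
    k = min(n_receiver, n_sharer)
    return {n_receiver - 1 - i: n_sharer - 1 - i for i in range(k)}


def spans(tokens: List[str]) -> List[Span]:
    """Character spans of token strings that concatenate to the original text."""
    out, pos = [], 0
    for tok in tokens:
        out.append((pos, pos + len(tok)))
        pos += len(tok)
    return out


def overlap(a: Span, b: Span) -> int:
    """Number of characters shared by two spans."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))


def align_tokens(receiver_tokens: List[str], sharer_tokens: List[str]) -> List[int]:
    """For each receiver token, the index of the sharer token covering most of its characters."""
    r_spans, s_spans = spans(receiver_tokens), spans(sharer_tokens)
    return [max(range(len(s_spans)), key=lambda j: overlap(r, s_spans[j])) for r in r_spans]


layer_map = terminal_layer_alignment(n_receiver=4, n_sharer=6)
# layer_map == {3: 5, 2: 4, 1: 3, 0: 2}
token_map = align_tokens(["un", "belie", "vable"], ["unbel", "ievable"])
# token_map == [0, 0, 1]
```

Pairing from the top reflects the intuition that final layers carry the most task-ready representations, so they are matched first and the deeper model's earliest layers are the ones left unpaired.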

Both LLMs stay frozen during training. Only the C2C module is trained, using just the next-token prediction loss on the receiver's outputs. The main C2C fusers are trained on the first 500k samples of the OpenHermes2.5 dataset and evaluated on OpenBookQA, ARC-Challenge, MMLU-Redux and C-Eval.

Accuracy and latency: C2C vs. text communication

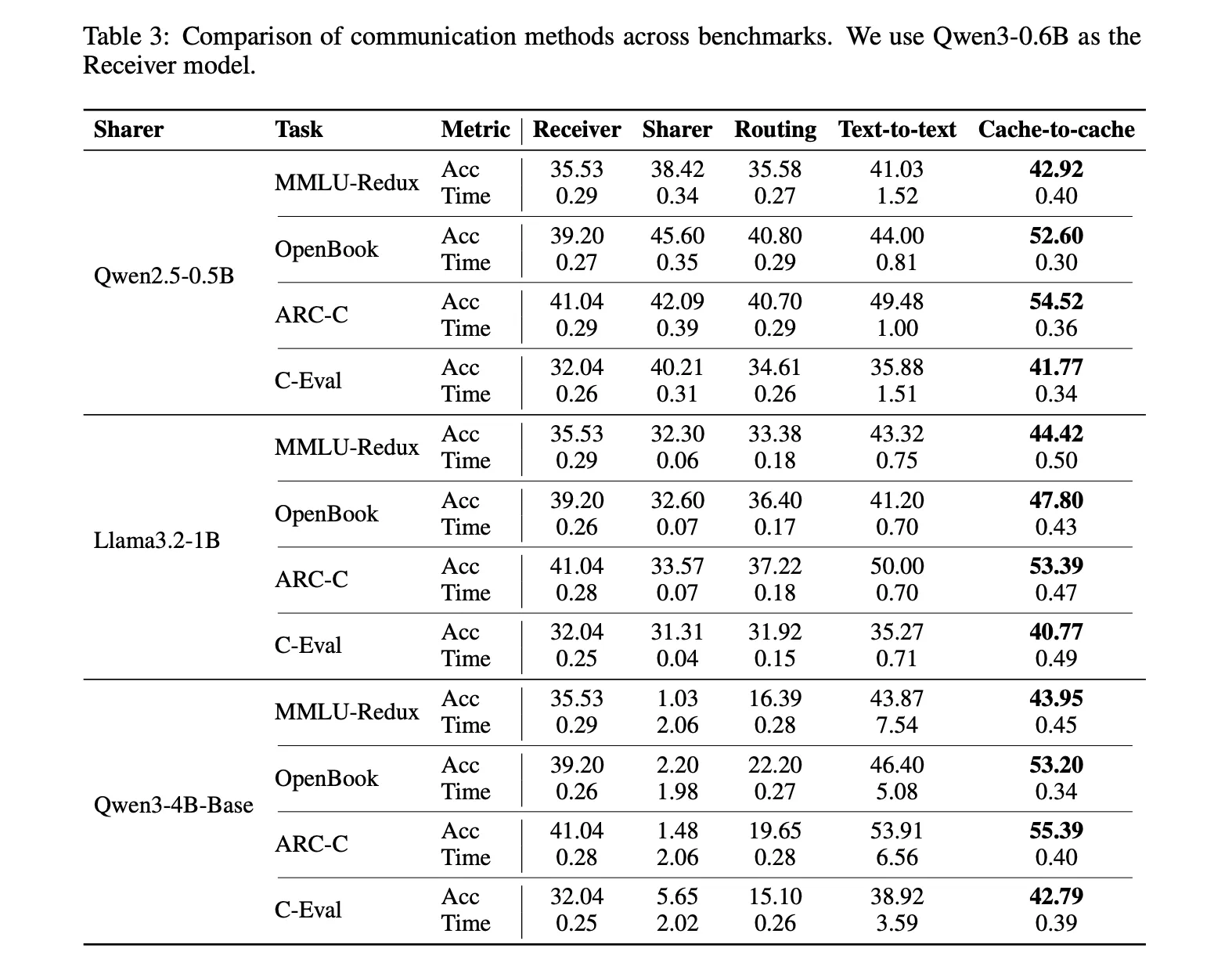

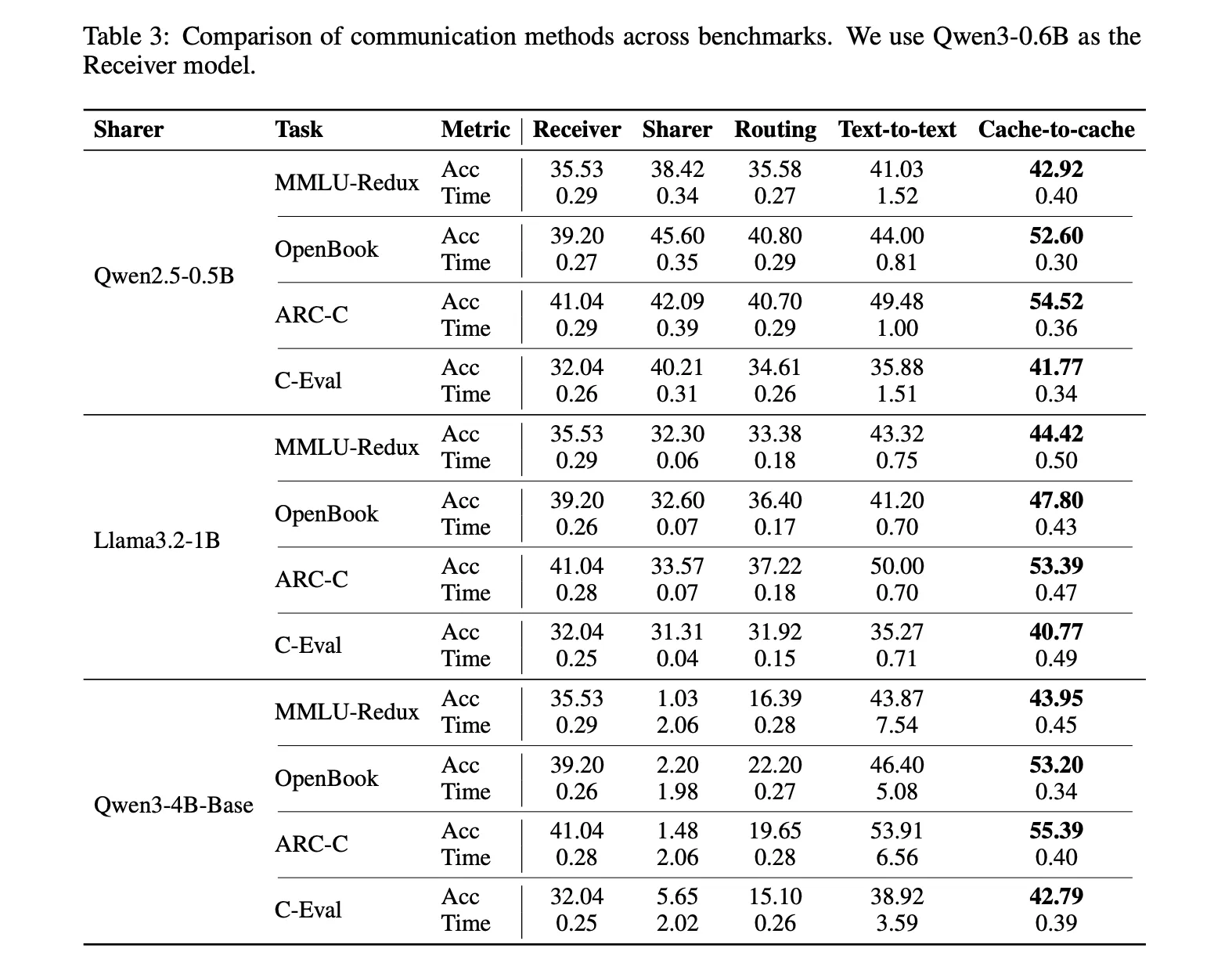

C2C consistently improves receiver accuracy and reduces latency across many sharer-receiver combinations built from Qwen2.5, Qwen3, Llama3.2 and Gemma3. On average:

- The average accuracy of C2C is about 8.5% to 10.5% higher than that of a single model.

- C2C outperforms text communication by about 3.0% to 5.0%.

- Compared to text-based collaboration, C2C delivers an average latency speedup of approximately 2x, with larger speedups in some configurations.

A concrete example uses Qwen3 0.6B as the receiver and Qwen2.5 0.5B as the sharer. On MMLU-Redux, the receiver alone reaches 35.53%, text-to-text communication reaches 41.03%, and C2C reaches 42.92%. The average time per query is 1.52 units for text-to-text, while C2C stays close to the single model at about 0.40 units. Similar patterns appear on OpenBookQA, ARC-Challenge and C-Eval.

On LongBenchV1, using the same pair, C2C outperforms text communication in every sequence-length bucket. For sequences of 0 to 4k tokens, text communication reaches 29.47, while C2C reaches 36.64. For contexts of 4k to 8k tokens and longer, the gain persists.
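The headline numbers for this Qwen3 0.6B / Qwen2.5 0.5B pair can be turned into gains and a speedup with simple arithmetic. The inputs below are the figures quoted above; accuracy differences are in percentage points, and the time unit is whatever the source's "units" refer to.

```python
receiver_only, text_to_text, c2c = 35.53, 41.03, 42.92  # MMLU-Redux accuracy (%)
t_text, t_c2c = 1.52, 0.40                               # average time per query (units)
lb_text, lb_c2c = 29.47, 36.64                           # LongBenchV1 score, 0-4k tokens

gain_over_receiver = round(c2c - receiver_only, 2)  # points gained over the receiver alone
gain_over_text = round(c2c - text_to_text, 2)       # points gained over text-to-text
speedup = round(t_text / t_c2c, 2)                  # text-to-text time / C2C time
lb_gain = round(lb_c2c - lb_text, 2)                # LongBenchV1 gain, 0-4k bucket
print(gain_over_receiver, gain_over_text, speedup, lb_gain)  # 7.39 1.89 3.8 7.17
```

A speedup of roughly 3.8x for this pair sits above the ~2x average, consistent with the note that some configurations see larger speedups; since 0.40 is described as approximate, the ratio is approximate too.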

Main points

- Cache-to-cache communication: C2C lets the sharer model send information directly to the receiver model through the KV-Cache, so collaboration needs no intermediate text messages. This removes the token bottleneck and reduces semantic loss in multi-model systems.

- Two oracle studies: enriching only the question-aligned slice of the cache improves accuracy at constant sequence length, and the KV-Cache of a larger model can be mapped into the cache space of a smaller model via a learned projector, confirming the cache as a viable communication medium.

- C2C Fuser architecture: the fuser combines sharer and receiver caches with a projection module, dynamic head weighting, and learnable per-layer gates, and integrates everything residually, so the receiver selectively absorbs sharer semantics without destabilizing its own representation.

- Consistent accuracy and latency gains: across Qwen2.5, Qwen3, Llama3.2 and Gemma3 model pairs, C2C improves average accuracy by about 8.5% to 10.5% over a single model and by about 3% to 5% over text communication, and responds about 2x faster because unnecessary decoding is removed.

Cache-to-Cache reframes multi-LLM communication as a direct semantic transfer problem rather than a prompt engineering problem. By projecting and fusing the KV-Cache between sharer and receiver through neural fusion and learnable gating, C2C uses the deep, specialized semantics of both models while avoiding explicit intermediate text generation, which is both an information bottleneck and a latency cost. With 8.5% to 10.5% higher accuracy than single models, 3% to 5% higher accuracy than text communication, and roughly 2x lower latency, C2C is a strong system-level step toward KV-native collaboration between models.

Check out the Paper and Code.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for the benefit of society. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easy to understand for a broad audience. The platform has more than 2 million monthly views, which shows that it is very popular among viewers.
