Google AI releases C2S-Scale 27B, a model that translates complex single-cell gene expression data into “cell sentences” that LLMs can understand

A research team from Google Research, Google DeepMind, and Yale University released C2S-Scale 27B, a 27-billion-parameter foundation model for single-cell analysis built on Gemma-2. The model formalizes single-cell RNA-seq (scRNA-seq) profiles as “cell sentences” (ordered lists of gene symbols) so that a language model can natively parse and reason over cellular states. Beyond benchmark gains, the team reports an experimentally validated, context-dependent pathway: CK2 inhibition (silmitasertib/CX-4945) combined with low-dose interferon amplifies antigen presentation, a mechanism that could make “cold” tumors more responsive to immunotherapy. The result is a ~50% increase in antigen presentation in vitro under the combined condition.

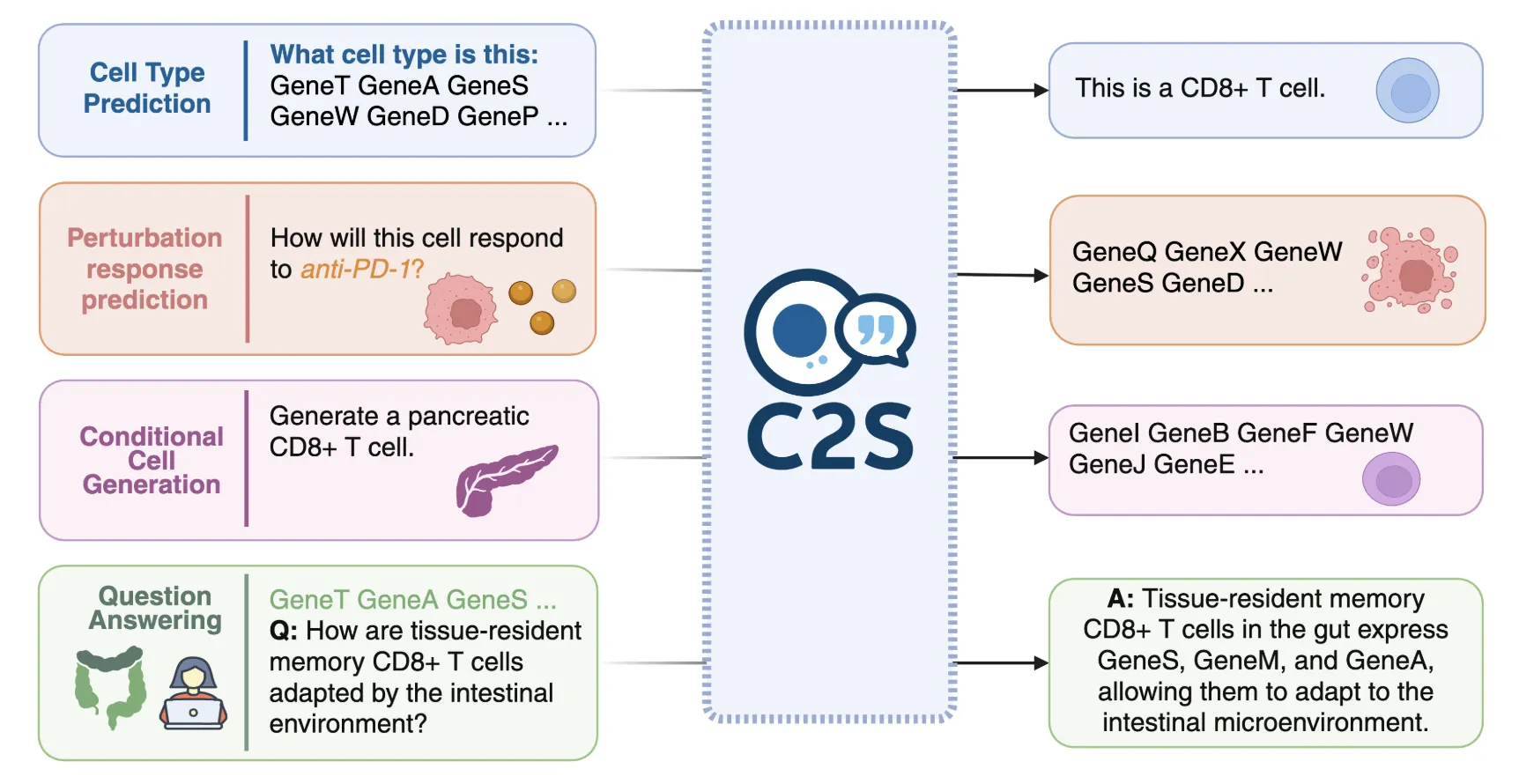

Understanding the model

C2S-Scale converts high-dimensional expression vectors into text by ranking genes and emitting the top-K gene symbols as a sequence. This representation aligns single-cell data with standard LLM toolchains and lets tasks such as cell-type prediction, tissue classification, cluster captioning, perturbation prediction, and biological question answering (QA) be expressed as text prompts and completions.
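To make the rank-and-emit step concrete, here is a minimal sketch of turning one cell's expression vector into a cell sentence. It assumes a plain list of expression values aligned with gene symbols; the choices to drop zero-expression genes and to break ties alphabetically are illustrative assumptions, not details confirmed for C2S-Scale, and the function name is hypothetical.

```python
from typing import Sequence


def to_cell_sentence(expression: Sequence[float], gene_names: Sequence[str], k: int = 100) -> str:
    """Hypothetical helper: rank genes by expression and join the top-k symbols."""
    if len(expression) != len(gene_names):
        raise ValueError("expression and gene_names must have the same length")
    # Assumption: genes with zero expression carry no rank information, so drop them.
    expressed = [(value, name) for value, name in zip(expression, gene_names) if value > 0]
    # Sort by expression, highest first; ties broken by gene symbol (assumption, for determinism).
    expressed.sort(key=lambda pair: (-pair[0], pair[1]))
    # The cell sentence is the space-separated list of the top-k gene symbols.
    return " ".join(name for _, name in expressed[:k])


if __name__ == "__main__":
    # Toy expression values, not real data.
    print(to_cell_sentence([0.0, 5.2, 1.1, 3.3], ["CD3E", "MS4A1", "CD19", "CD79A"], k=3))
```

Because the output is ordinary text, it can be dropped into a prompt and tokenized like any other string, which is what lets the downstream tasks reuse standard LLM tooling.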

Training data, stack, and release

C2S-Scale-Gemma-2-27B is built on Gemma-2 27B (a decoder-only Transformer), trained on Google TPU v5, and released under CC-BY-4.0. The training corpus aggregates >800 public scRNA-seq datasets spanning >57M cells (human and mouse), together with associated metadata and textual context; pretraining unifies transcriptomic tokens and biological text into a single multimodal corpus.
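One way to picture how cell sentences and metadata end up in the same text corpus is a prompt/completion pair for cell-type prediction. The sketch below is an assumption-laden illustration: the `CellRecord` fields and the prompt wording are hypothetical and are not the official C2S-Scale template.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CellRecord:
    """Hypothetical record pairing a cell sentence with its metadata."""
    cell_sentence: str  # top-K gene symbols, space-separated, highest expression first
    organism: str       # e.g. "Homo sapiens"
    tissue: str         # e.g. "blood"
    cell_type: str      # label used as the completion target


def to_prompt_completion(record: CellRecord) -> Tuple[str, str]:
    """Render one record as a (prompt, completion) text pair; template is illustrative only."""
    n_genes = len(record.cell_sentence.split())
    prompt = (
        f"The following are the {n_genes} most highly expressed genes "
        f"in a {record.organism} cell from {record.tissue}, in descending order: "
        f"{record.cell_sentence}. The cell type is:"
    )
    # Leading space so the completion tokenizes as a continuation of the prompt.
    completion = f" {record.cell_type}"
    return prompt, completion


if __name__ == "__main__":
    p, c = to_prompt_completion(CellRecord("MS4A1 CD79A CD19", "Homo sapiens", "blood", "B cell"))
    print(p + c)
```

Swapping the completion target (tissue, a perturbation, a free-text answer) turns the same record into a different task, which is the practical payoff of keeping everything as text.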

Key result: an interferon-conditional amplifier

The research team built a dual-context virtual screen over >4,000 drugs to find compounds that boost antigen presentation (the MHC-I program) only in an immune-context-positive setting, i.e., primary patient samples with low interferon tone, while having negligible effect in immune-context-neutral cell-line data. The model predicted a striking context split for silmitasertib (a CK2 inhibitor): strong MHC-I upregulation with low-dose interferon and little to no upregulation without interferon. The team reports wet-lab validation in human neuroendocrine models not seen during training, where the combination (silmitasertib + low-dose interferon) produced a marked, synergistic increase in antigen presentation (≈50% in their assays).
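The selection logic of a dual-context screen can be sketched as a filter over per-drug predictions in the two contexts: keep drugs with a large effect in the immune-positive context and a near-zero effect in the neutral one, then rank by the size of the split. The score definition, the thresholds (`min_positive`, `max_neutral`), and the drug names below are hypothetical; the article does not specify how the team scored or thresholded candidates.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScreenResult:
    """Hypothetical per-drug prediction in the two contexts."""
    drug: str
    effect_immune_positive: float  # predicted change in an MHC-I program score, immune-positive context
    effect_neutral: float          # same score, immune-context-neutral (cell-line) context


def context_specific_hits(results: List[ScreenResult],
                          min_positive: float = 0.5,
                          max_neutral: float = 0.1) -> List[ScreenResult]:
    """Keep context-split drugs and rank them by positive-minus-neutral effect (illustrative thresholds)."""
    hits = [
        r for r in results
        if r.effect_immune_positive >= min_positive and abs(r.effect_neutral) <= max_neutral
    ]
    # Largest context split first.
    return sorted(hits, key=lambda r: r.effect_immune_positive - r.effect_neutral, reverse=True)


if __name__ == "__main__":
    toy = [  # toy numbers, not screen results
        ScreenResult("drug_a", 0.9, 0.05),
        ScreenResult("drug_b", 0.8, 0.7),   # acts in both contexts, so not context-specific
        ScreenResult("drug_c", 0.6, -0.05),
        ScreenResult("drug_d", 0.2, 0.0),   # too weak in the immune-positive context
    ]
    print([r.drug for r in context_specific_hits(toy)])
```

Requiring a small neutral-context effect, rather than only a large positive one, is what separates a conditional amplifier from a compound that raises MHC-I everywhere.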

The amplifier lowers the response threshold to interferon rather than initiating antigen presentation de novo: flow cytometry readouts show HLA-A,B,C upregulation only under combination treatment (tested with both IFN-β and IFN-γ) across two neuroendocrine models, with representative mean fluorescence intensity (MFI) gains such as 13.6% at 10 nM and 34.9% at 1000 nM silmitasertib in one model.
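A percentage MFI gain is a relative change against some reference condition. The article does not state which reference the team used (for example, interferon alone), so the helper below takes it as an explicit argument; the function name and the MFI values in the example are hypothetical.

```python
def percent_mfi_gain(mfi_treated: float, mfi_reference: float) -> float:
    """Relative MFI change in percent: (treated - reference) / reference * 100."""
    if mfi_reference <= 0:
        raise ValueError("reference MFI must be positive")
    return (mfi_treated - mfi_reference) / mfi_reference * 100.0


if __name__ == "__main__":
    # Hypothetical MFI values chosen only to illustrate the arithmetic.
    print(round(percent_mfi_gain(1349.0, 1000.0), 1))
```

Under this definition, a 34.9% gain means the treated population's MFI is about 1.35 times the reference MFI.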

Main points

- C2S-Scale 27B (Gemma-2) enables LLM-native single-cell analysis workflows by encoding scRNA-seq profiles into textual “cell sentences.”

- In a dual-context virtual screen (>4,000 compounds), the model predicted an interferon-conditional amplifier: CK2 inhibition (silmitasertib) boosts MHC-I antigen presentation only in the presence of low-dose IFN.

- Wet-lab tests in human neuroendocrine cell models confirmed this prediction, with silmitasertib + IFN increasing antigen presentation by approximately 50% compared with either agent alone; the evidence remains preclinical and in vitro.

- Open weights and usage documentation have been published on Hugging Face (vandijklab), including both the 27B and 2B Gemma variants for research use.

C2S-Scale 27B is a technically credible step for LLMs in biology. Translating scRNA-seq into “cell sentences” lets a Gemma-2 model run programmatic queries over cell states and perturbations, and in practice it surfaced an interferon-conditional amplifier, silmitasertib (CK2 inhibition), that boosts MHC-I antigen presentation only with low-dose IFN, a mechanism the team then validated in vitro. The value here lies less in the headline rhetoric than in the workflow: text-native screening of >4k compounds across two immune contexts to propose a context-dependent pathway that could make immune-“cold” tumors more visible to the immune system. That said, all evidence is preclinical and bench-scale; the right reading is “hypothesis-generating AI” with open weights that enable replication and stress-testing, not a clinical claim.


Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex data sets into actionable insights.

🙌 FOLLOW MARKTECHPOST: Add us as your go-to source on Google.