Zhipu AI Releases GLM-4.6: Improvements in Real-World Coding, Long-Context Processing, Reasoning, Search and Agentic AI

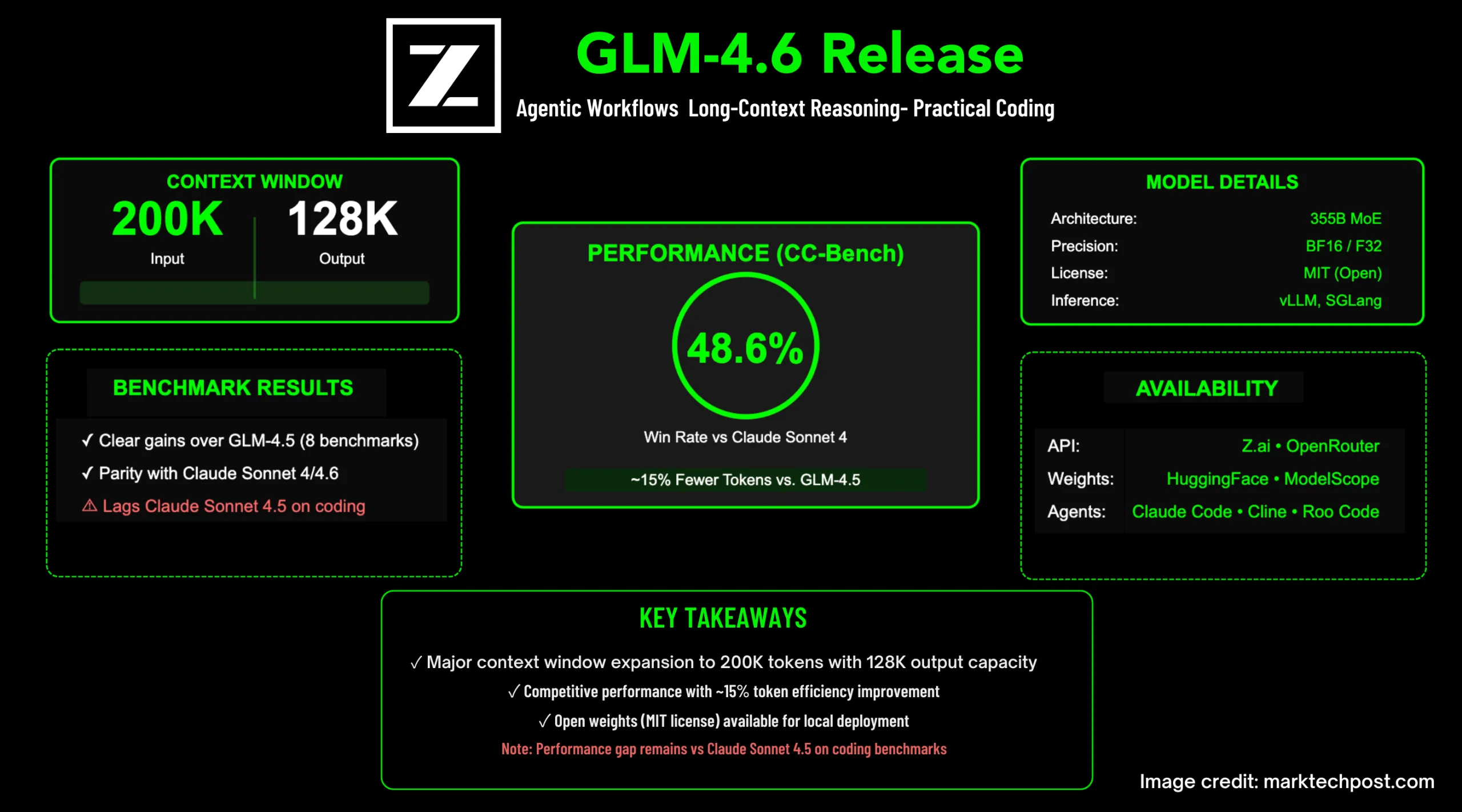

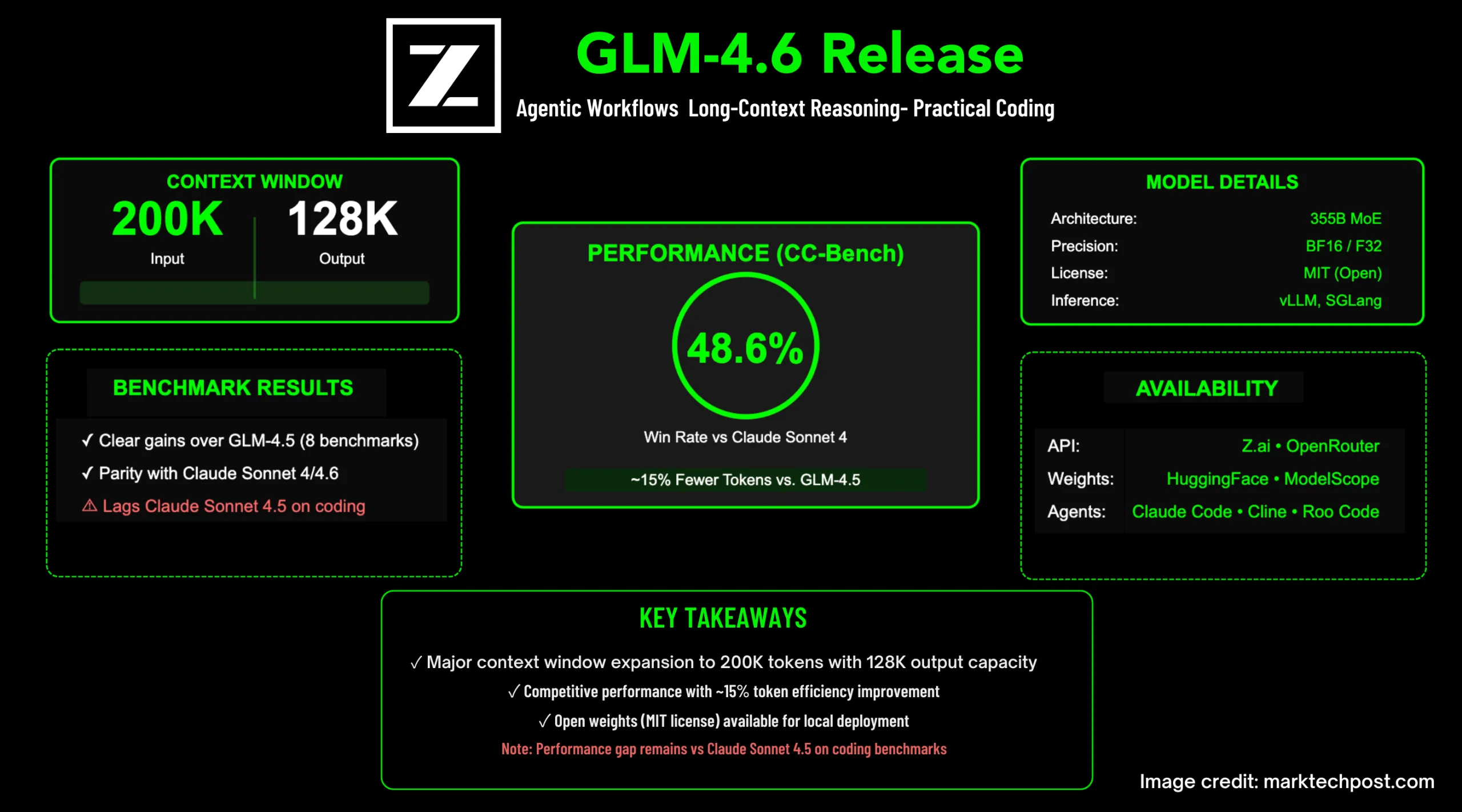

Zhipu AI has released GLM-4.6, a major update to its GLM series that focuses on agentic workflows, long-context reasoning and practical coding tasks. The model raises the input window to 200K tokens with a 128K maximum output, targets lower token consumption in applied tasks, and ships with open weights for local deployment.

So, what is new?

- Context + output limits: 200K input context and 128K maximum output tokens.

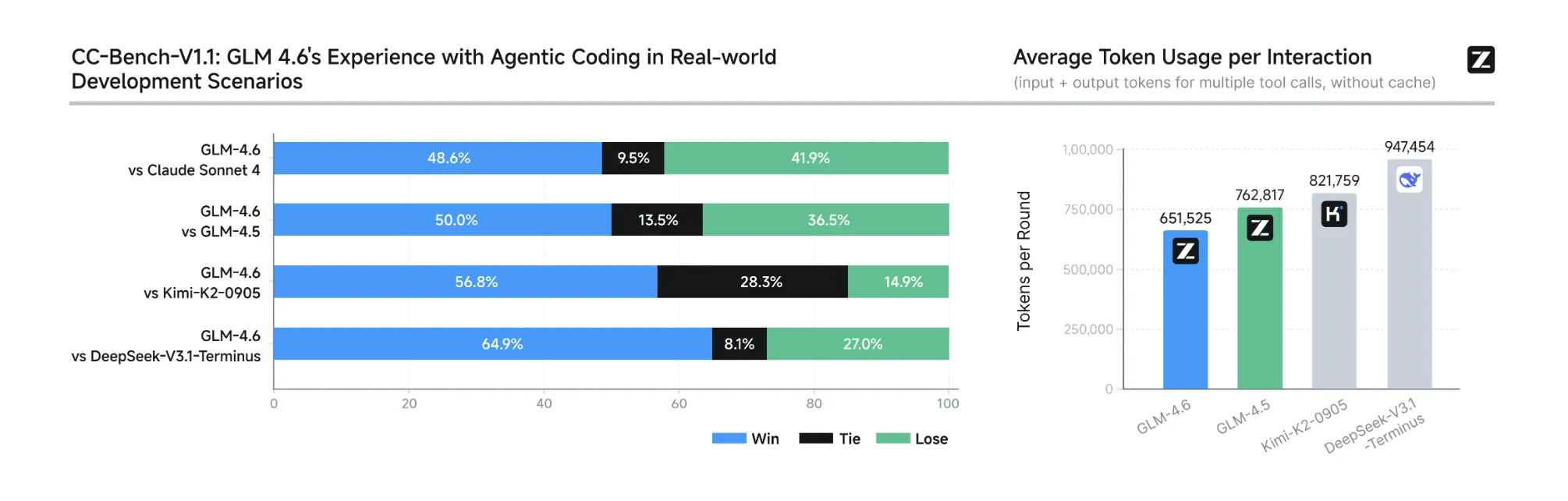

- Real-world coding results: On the extended CC-Bench (multi-turn tasks run by human evaluators in isolated Docker environments), GLM-4.6 is reported to be near parity with Claude Sonnet 4 (48.6% win rate) and to use about 15% fewer tokens than GLM-4.5 to complete tasks. Task prompts and agent trajectories have been published for inspection.

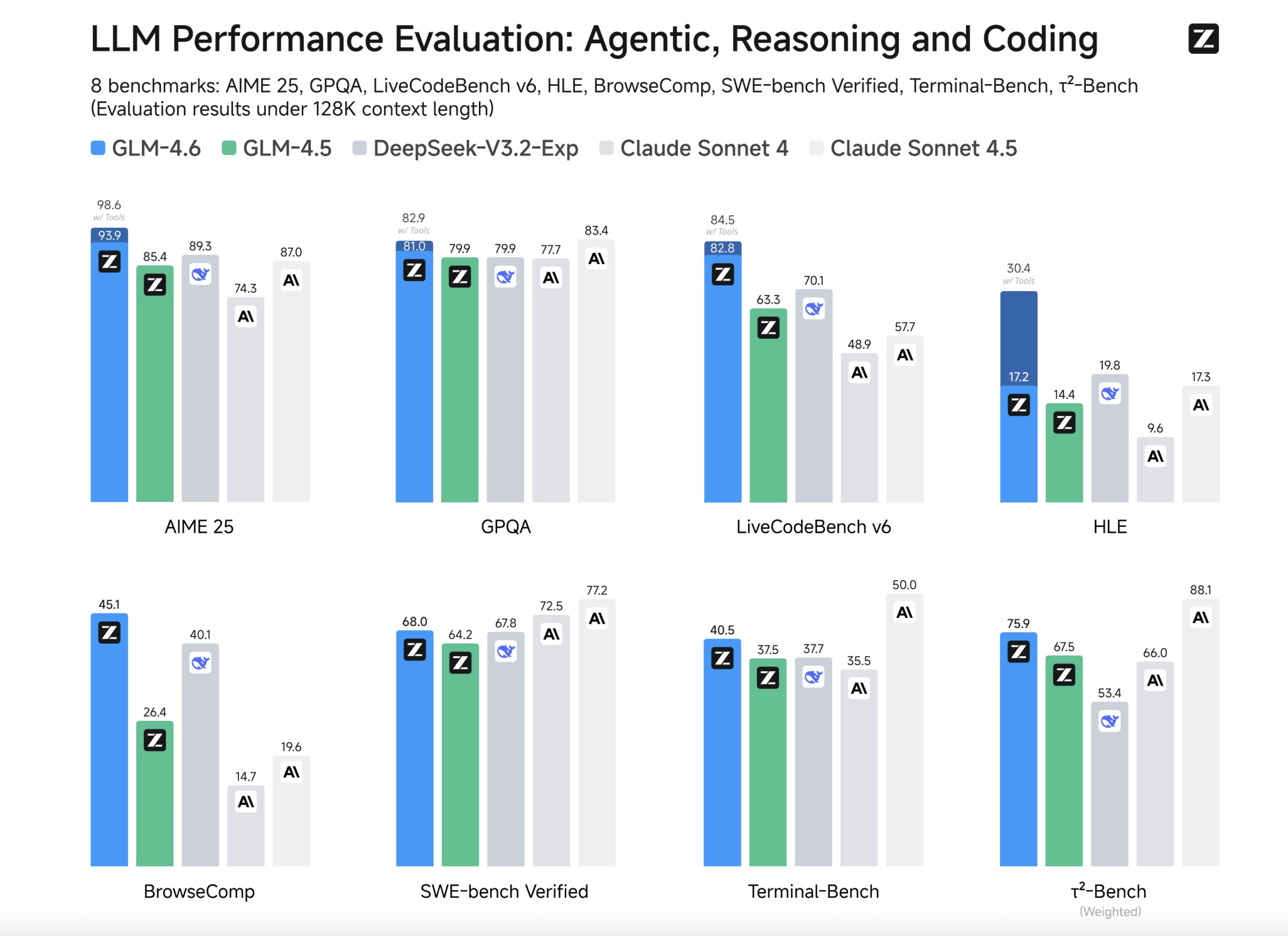

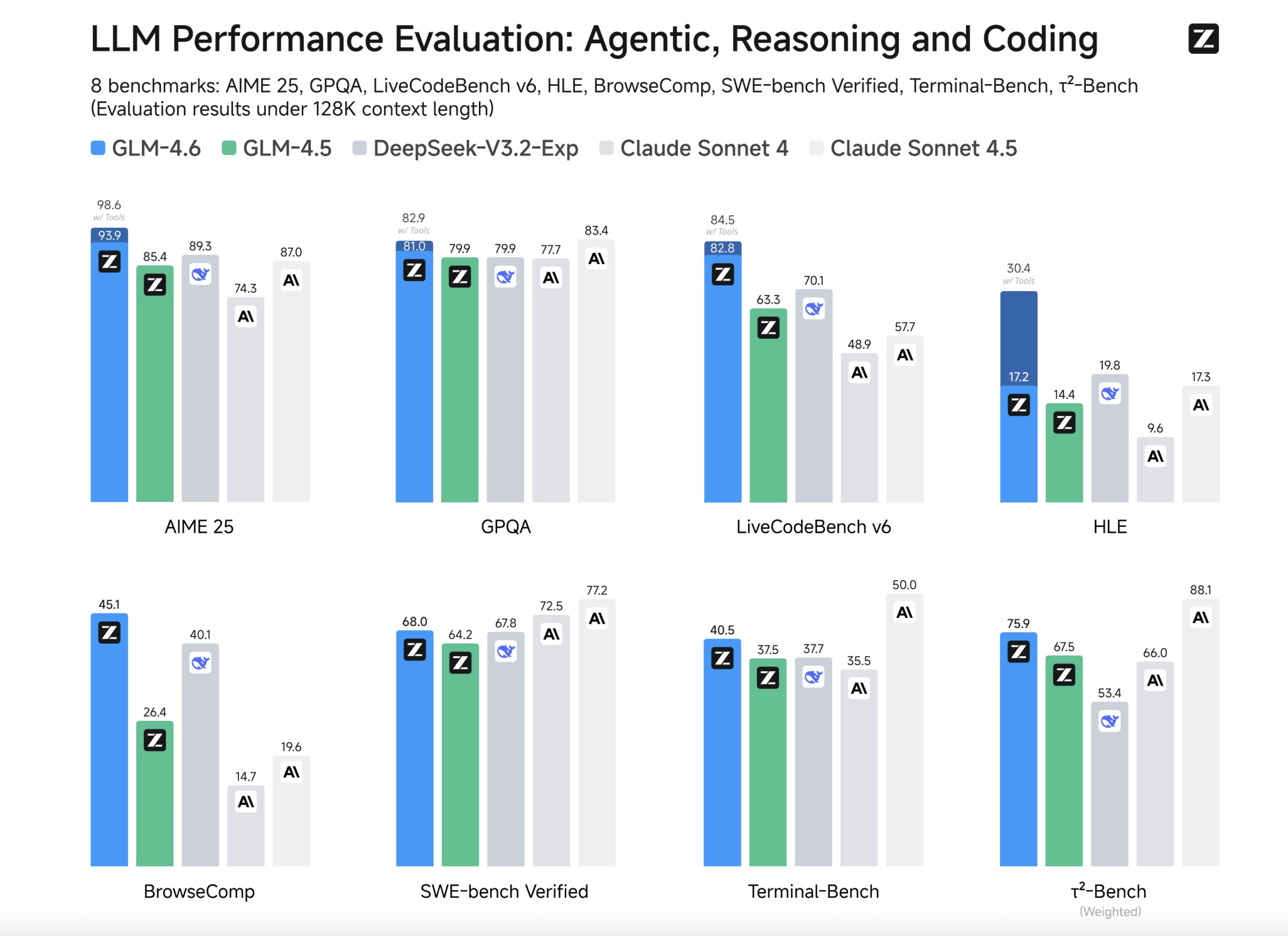

- Benchmark positioning: Zhipu reports "clear gains" over GLM-4.5 across eight public benchmarks and compares GLM-4.6 with Claude Sonnet 4/4.5 on several of them; it also notes that GLM-4.6 still lags Sonnet 4.5 on coding, a useful caveat for model selection.

- Ecosystem availability: GLM-4.6 is available through the Z.ai API and OpenRouter; it integrates with popular coding agents (Claude Code, Cline, Roo Code, Kilo Code) under the model name glm-4.6.

- Open weights + license: The Hugging Face model card lists License: MIT and Model size: 355B parameters (MoE) with BF16/F32 tensors. (MoE "total parameters" are not the same as the active parameters per token; the card does not state an active-parameter figure for 4.6.)

- Local inference: vLLM and SGLang are supported for local serving; weights are available on Hugging Face and ModelScope.
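To make the API route above more concrete, here is a minimal sketch of an OpenAI-style chat-completions request for GLM-4.6 sent through OpenRouter. Several details are assumptions that do not come from the article and should be checked against the provider's documentation: the endpoint URL, the model slug `z-ai/glm-4.6`, and the `OPENROUTER_API_KEY` environment variable name.

```python
import json
import os
import urllib.request

# Assumptions (not from the article): endpoint URL and model slug.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_SLUG = "z-ai/glm-4.6"


def build_payload(prompt: str, max_tokens: int = 1024) -> dict:
    """Build an OpenAI-style chat-completions payload for one user turn."""
    return {
        "model": MODEL_SLUG,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send(payload: dict, api_key: str, url: str = OPENROUTER_URL) -> dict:
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    key = os.environ.get("OPENROUTER_API_KEY")  # hypothetical variable name
    payload = build_payload("Write a function that reverses a linked list.")
    if key:
        reply = send(payload, key)
        print(reply["choices"][0]["message"]["content"])
    else:
        # Without a key, just show what would be sent.
        print(json.dumps(payload, indent=2))
```

Because the request shape is the generic chat-completions format, the same `send` helper could in principle be pointed at a locally served model by passing a different `url`, provided the local server exposes a compatible endpoint.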

Summary

GLM-4.6 is an incremental but material step: a 200K context window, roughly 15% fewer tokens than GLM-4.5 on CC-Bench, a near-parity task win rate against Claude Sonnet 4, and availability through Z.ai, OpenRouter and open-weight artifacts for local serving.

FAQ

1) What are the context and output token limits?

GLM-4.6 supports a 200K input context and 128K maximum output tokens.
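A small helper can guard requests against these limits before they are sent. The sketch below is illustrative only: it reads "K" as 1,000 and treats the input and output caps as independent, both of which are assumptions; a real API may count tokens differently or enforce a combined budget. The function name `plan_request` is hypothetical.

```python
# Limits as stated in the article; "K" is read as 1,000 here (an assumption).
MAX_INPUT_TOKENS = 200_000
MAX_OUTPUT_TOKENS = 128_000


def plan_request(prompt_tokens: int, desired_output_tokens: int) -> int:
    """Return an output-token cap that respects both stated limits.

    Raises ValueError if the prompt alone does not fit the input window.
    """
    if prompt_tokens < 0 or desired_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if prompt_tokens > MAX_INPUT_TOKENS:
        raise ValueError(
            f"prompt of {prompt_tokens} tokens exceeds the "
            f"{MAX_INPUT_TOKENS}-token input window"
        )
    # Clamp the requested output length to the stated maximum.
    return min(desired_output_tokens, MAX_OUTPUT_TOKENS)
```

For example, a 150,000-token prompt that asks for 200,000 output tokens would be clamped to 128,000, while a prompt of 200,001 tokens would be rejected.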

2) Are open weights available, and under what license?

Yes. The Hugging Face model card lists open weights with License: MIT and a 357B-parameter MoE configuration (BF16/F32 tensors).
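For a sense of scale, the raw weight footprint can be estimated as parameter count times bytes per parameter. The sketch below is a back-of-the-envelope calculation, not a deployment guide: it counts only the weights (no KV cache, activations or runtime overhead), uses decimal gigabytes, and takes the 357B figure quoted above at face value. Since MoE storage depends on total rather than active parameters, the total count is the relevant input here.

```python
# Bytes per parameter for the tensor types named on the model card.
BYTES_PER_PARAM = {"bf16": 2, "f32": 4}


def weight_footprint_gb(num_params: int, dtype: str) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


total_params = 357_000_000_000  # total (not active) parameters, per the text
print(weight_footprint_gb(total_params, "bf16"))  # 714.0
print(weight_footprint_gb(total_params, "f32"))   # 1428.0
```

Under these assumptions, BF16 weights alone come to roughly 714 GB, which is why quantized variants matter for smaller machines.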

3) How does GLM-4.6 compare to GLM-4.5 and Claude Sonnet 4?

On the extended CC-Bench, GLM-4.6 is reported to use about 15% fewer tokens than GLM-4.5 and to reach near parity with Claude Sonnet 4 (48.6% win rate).
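To see what a 15% reduction means for a token budget, the arithmetic can be sketched as follows. The baseline figure is a made-up example, and the 15% value is an aggregate reported on CC-Bench; savings on any individual workload may differ. The function name `estimated_tokens` is hypothetical.

```python
def estimated_tokens(baseline_tokens: int, reduction: float = 0.15) -> int:
    """Scale a baseline token count by a fractional reduction."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return round(baseline_tokens * (1.0 - reduction))


# Hypothetical task that took 100,000 tokens with GLM-4.5.
print(estimated_tokens(100_000))  # 85000
```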

4) Can I run GLM-4.6 locally?

Yes. Zhipu provides weights on Hugging Face and ModelScope and documents local inference with vLLM and SGLang; community quantizations for workstation-class hardware are emerging.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easy for a wide audience to understand. The platform has over 2 million views per month, demonstrating its popularity among its audience.
