Visual Pane vs. Text Draw: Technical Comparison of Enterprise Search

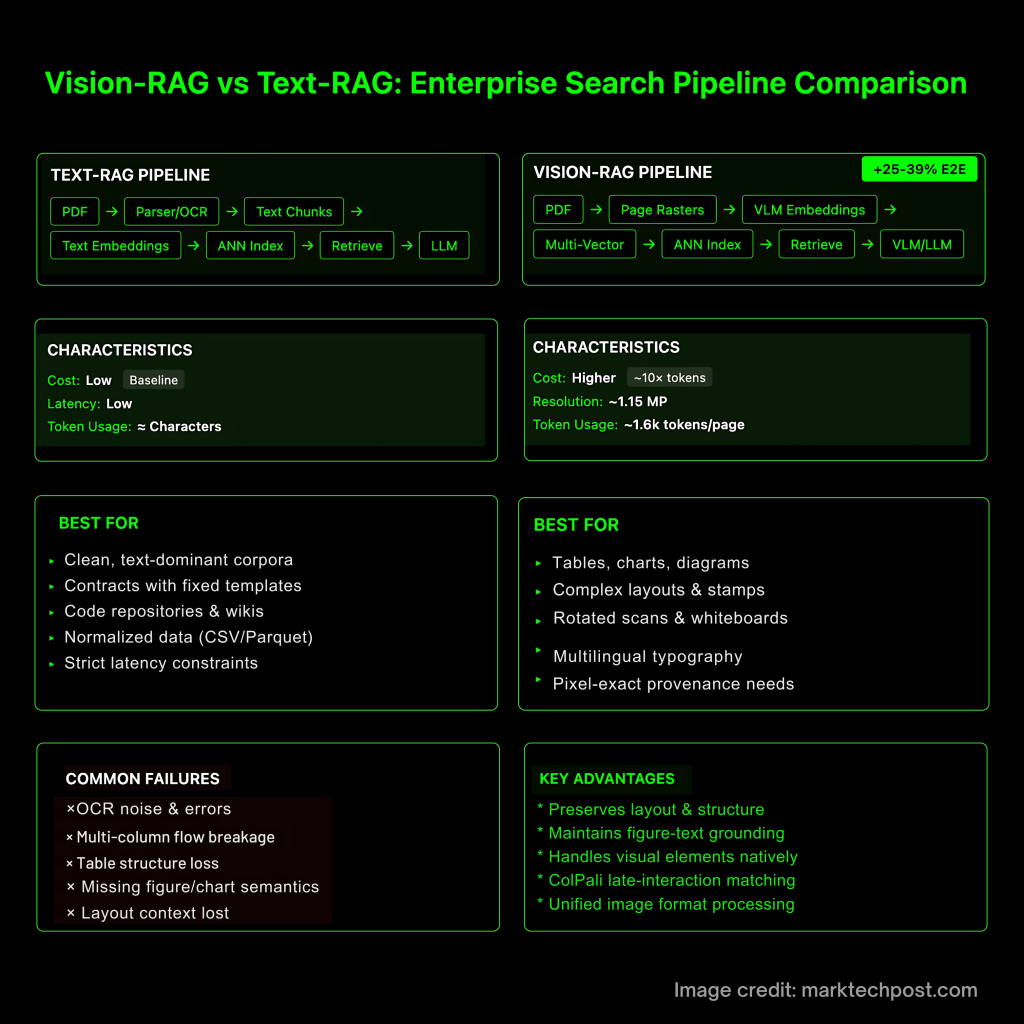

Most rag failures stem from retrieval, not from generation to generation. Text-first pipelines lose layout semantics, table structures and graphical grounding during PDF → text conversion, degrading recalls and precision before LLM runs. Eye-retreatment Rendering Pages with visual embeddings – direct targeting this bottleneck and showing end-to-end material benefits of visually rich Corpora.

Pipelines (and where they fail)

Text rag. PDF → (Parser/OCR) → Text Block → Text Embed → ANN Index → Retrieval → LLM. Typical failure modes: OCR noise, multi-column flow rupture, loss of table cell structure, and missing graph/chart semantics – recorded by tables and DOC-VQA benchmarks to measure these gaps.

Ugly vision. PDF → PAGE RASTER(S) → VLM embedded (usually multi-vector with delayed AC) → ANN INDEX → Retrieval → VLM/LLM consumes high fidelity crops or full pages. This preserves layout and graphic text grounding; the method is verified by recent systems (Colpali, Visrag, Vdocrag).

What does the current evidence support

- Document image search works, simpler. Colpali embeds page images and uses post-match; on Vidore benchmarks, it outperforms modern text pipelines while maintaining end-to-end training.

- End-to-end lift is measurable. Visrag Report 25-39% When retrieving and generating VLM, text in multimodal documents is rag-to-end improvements.

- Unified image format for real-world documents. Vdocrag shows that documents are kept in uniform image formats (tables, charts, ppt/pdf) to avoid parser losses and improve generalization. It also introduced OpenDOcVQA for evaluation.

- Resolutions promote the quality of reasoning. High resolution support in VLMS (e.g. qwen2-vl/qwen2.5-vl) is explicitly related to sota results on docvqa/mathvista/mtvqa; loyalty is important for ticks, superscripts, stamps and small fonts.

Cost: Visual context (usually) burning order is heavier because of the existence of tokens

Vision input expansion Token count By tiles, it is not necessarily frank. For GPT-4O-class models, the total tokens ≈Base + (Tile_tokens×Tiles), so the cost of small text blocks for 1–2 MP pages is about 10 times. Anthropomorphism suggests about 1.15 mp cover (approximately 1.6K tokens) to respond. By comparison, Google Gemini 2.5 Flash-Lite price text/image/video At the same ratebut large images still consume more tokens. Engineering significance: adoption Selective fidelity (Crop>Sample below>Full page).

Design rules for producing visual rags

- Align across embedding. Encoder using trained text image alignment (Clip – Family or VLM Hound) is actually a dual index: cheap text memory coverage + Vision Rerank for precise. Colpali’s late communication (MaxSim style) is a powerful default for page images.

- Selectively feed high fidelity input. Coarse to 1: Run BM25/DPR, bring the top page to the visual rereader, and then send only the ROI crops (tables, charts, stamps) to the generator. This keeps important pixels without exploding tokens under tile-based accounting.

- Engineer of real documents.

• surface: If parsing is required, use the table structure model (for example, PubTables-1M/TATR); otherwise, select Image Local Retrieval.

• Charts/charts: Expect ticks and legendary levels tips; resolutions must keep these. Evaluate graph-centric VQA sets.

• Whiteboard/rotating/multi-language: Page rendering avoids many OCR failure modes; multilingual scripting and rotation scans survive in the pipeline.

• Source: Store page hash and crop coordinates and embed next to copy Accurate Visual evidence used in the answer.

| standard | Text rag | Ugly vision |

|---|---|---|

| Intake pipeline | PDF → parser/OCR → text block → text embedding → ANN | PDF → Page Rendering (S) → VLM Page/Crop Embedding (usually multivector, late interaction) → ANN. Colpali is a standard implementation. |

| Main failure mode | parser drift, OCR noise, multi-column traffic rupture, table structure loss, missing graph/chart semantics. There are benchmarks because these errors are common. | Keep layout/digital; failover to resolution/tile selection and cross-mode alignment. Vdocrag formally formally processed the “unified image” processing to avoid parsing losses. |

| Retrieval representation | Single vector text embedding; rerank by vocabulary or cross encoder | Page image embedding Late interaction (MaxSim style) Capture local areas; improve page-level retrieval on Vidore. |

| End-to-end benefits (vs text rags) | Baseline | +25–39% E2E on multimodal documents when both search and generate are VLM-based (Visrag). |

| Where it is good at | Clean, text-led corpus; low latency/cost | Visually rich/structured documents: tables, charts, stamps, rotation scans, multilingual layouts; a unified page context helps quality check. |

| Resolution sensitivity | Not applicable to OCR settings | Inference quality tracks input fidelity (tick, small font). This is emphasized by high resolution documentation VLM (e.g. the QWEN2-VL family). |

| Cost Model (Input) | Token ≈ characters; cheap search environment | Image token with Flat lay: For example, Openai Base+Tiles formula; human boot ~1.15 mp ≈ ~1.6K token. Even if the price per person is equal (Gemini 2.5 Flash-lite), high-resolution pages consume more tokens. |

| Cross-mode alignment requirements | unnecessary | Key: Text image encoders must share the geometry of the mixed query; Colpali/Vidore demonstrates effective page image retrieval and language tasks. |

| Tracking benchmarks | DOCVQA (DOC QA), PubTables-1M (table structure), is used to parse loss diagnosis. | Vidore (page search), Visrag (pipe), Vdocrag (unified image rag). |

| Evaluation method | IR indicator plus text quality check; graphic grounding issues may be missed | Joint search +Gen on visually rich suites (e.g. OpendoCVQA under vdocrag) to capture crop correlation and layout grounding. |

| Operation mode | One-stage search; cheap scale | Too thick to thin: Text Memories → Visual Rerank → Voting Crops for Generators; while retaining loyalty, keeping token costs limited. (Tile Mathematics/Price provides information for the budget.) |

| When will you like it more | Contract/Template, Code/Wiki, Standardized Table Data (CSV/Parquet) | Real-world enterprise documentation with heavy layout/graphics; compliance workflows require pixel-like source (Page hash + crop coordinates). |

| Representative System | DPR/BM25 + cross encoder rerank | Colpali (iClr’25) Visual Retrieval; Visrag pipeline; vdocrag Unified image frame. |

When the text rag is still correct default?

- Clean, text-led corpus (with fixed templates, wiki, code contract)

- Strict delay/cost limit for short answers

- Data has been normalized (CSV/Parquet) – Skip pixels and query table storage

Evaluation: Measuring search + co-generation

Add multi-mode rag reference-EG on the line harness, m²Rag (Multi-mode quality inspection, subtitles, fact verification, rereading), Real-MM-rag (Real World Multimodal Retrieval) and Wipe inspection (Relevance + Correctness metric for multimodal context). These capture failure cases (irrelevant crops, graph mismatch), text metrics are missed only.

Summary

Text rag For cleaning, text-only data is still valid. Ugly vision Is a practical default value for corporate documents with layouts, tables, charts, stamps, scans and multilingual layouts. (1) alignment, (2) provide selective high-fidelity visual evidence, and (3) evaluate with multi-modal benchmarks, always obtaining higher retrieval accuracy and better downstream answers – now supported by Colpali (ICLR 2025), Visrag’s, Visrag’s. 25-39% Unified image form results for E2E lift and Vdocrag.

refer to:

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.

🔥[Recommended Read] NVIDIA AI Open Source VIPE (Video Pose Engine): A powerful and universal 3D video annotation tool for spatial AI