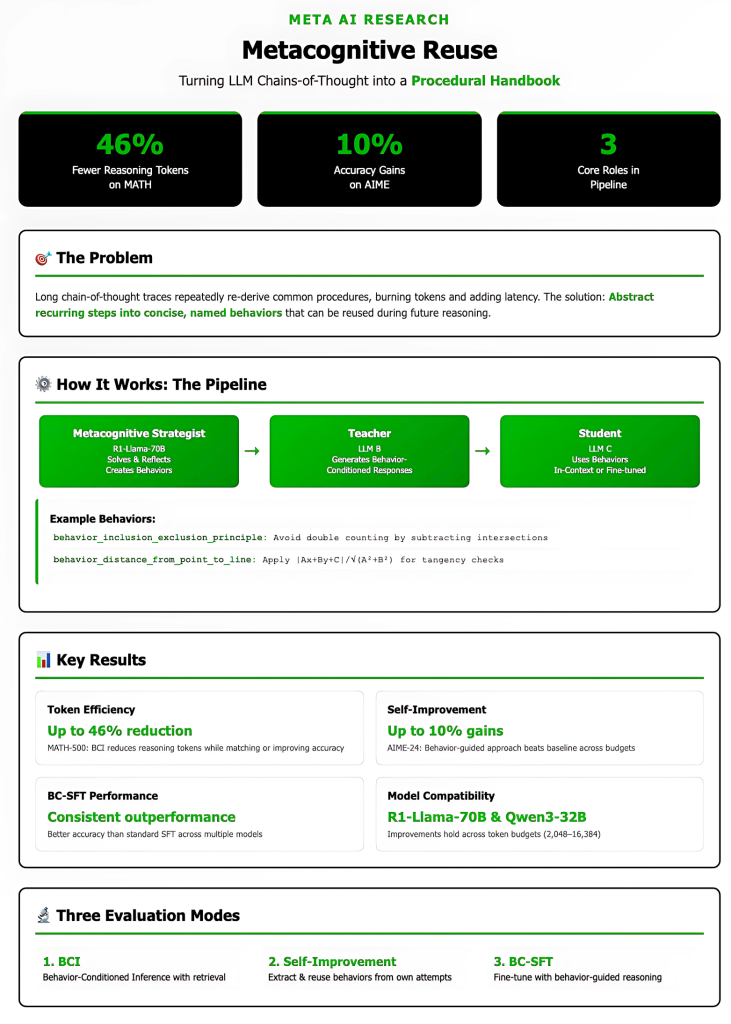

Meta AI proposes “metacognitive reuse”: turning LLM chains of thought into a procedural manual that cuts tokens by up to 46%

Meta researchers introduce a method that compresses recurring reasoning patterns into short, named procedures (“behaviors”) and then either conditions models to use them at inference time or distills them in through fine-tuning. The result: up to 46% fewer reasoning tokens on MATH while matching or improving accuracy, and up to 10% higher accuracy in a self-improvement setting on AIME, without changing model weights. The work frames this as procedural memory for LLMs (how to reason, not just what to recall) and implements it as a curated, searchable “behavior manual”.

What problem does this solve?

Long chain-of-thought (CoT) traces repeatedly re-derive common sub-procedures (e.g., inclusion-exclusion, base conversions, geometric angle sums). This redundancy burns tokens, adds latency, and can crowd out exploration. Meta's idea is to abstract recurring steps into concise, named behaviors (a name plus a one-line instruction), recover them from prior traces via an LLM-driven reflection pipeline, and then reuse them in future reasoning. On math benchmarks (MATH-500; AIME-24/25), this substantially reduces output length while preserving or improving solution quality.

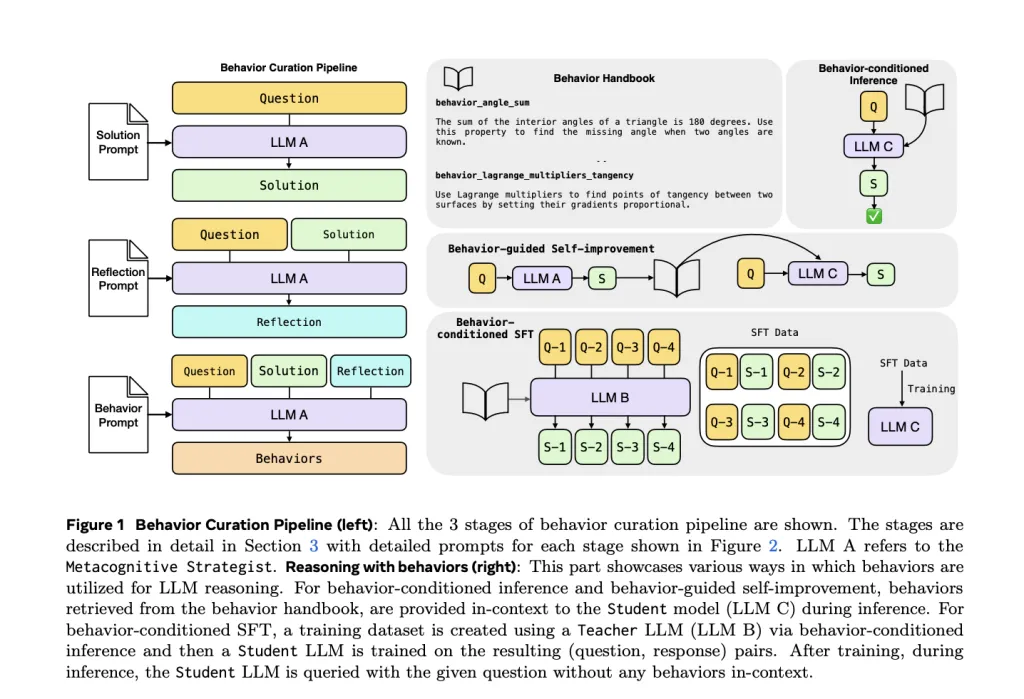

How does the pipeline work?

Three roles, one manual:

- Metacognitive Strategist (R1-Llama-70B): 1) solves problems to produce traces, 2) reflects on those traces to identify generalizable steps, and 3) emits behaviors as (behavior_name → instruction) entries. These populate a behavior manual (procedural memory).

- Teacher (LLM B): Generates behavior-conditioned responses that are used to build training corpora.

- Student (LLM C): Consumes behaviors in context (inference) or is fine-tuned on behavior-conditioned data.

Retrieval is topic-based on MATH and embedding-based (BGE-M3 + FAISS) on AIME.
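The two retrieval styles can be illustrated with a small sketch. This is not the authors' code: the `Behavior` fields, the `topic` tags, and the three-dimensional toy vectors are assumptions made for illustration, and a plain cosine-similarity ranking stands in for BGE-M3 embeddings with a FAISS index.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Behavior:
    name: str          # e.g. "behavior_inclusion_exclusion_principle"
    instruction: str   # one-line instruction
    topic: str         # hypothetical topic tag for topic-based lookup
    embedding: tuple   # toy vector; a real system would call an embedding model


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve_by_topic(manual, topic):
    """Topic-based retrieval: return every behavior filed under the topic."""
    return [b for b in manual if b.topic == topic]


def retrieve_top_k(manual, query_embedding, k):
    """Embedding-based retrieval: rank by cosine similarity, keep the top k."""
    ranked = sorted(
        manual,
        key=lambda b: cosine(b.embedding, query_embedding),
        reverse=True,
    )
    return ranked[:k]


# A tiny illustrative manual; names and instructions come from the article,
# topics and vectors are made up.
MANUAL = [
    Behavior("behavior_inclusion_exclusion_principle",
             "Avoid double counting by subtracting intersections.",
             "counting", (1.0, 0.0, 0.0)),
    Behavior("behavior_translate_verbal_to_equation",
             "Systematically formalize word problems.",
             "algebra", (0.0, 1.0, 0.0)),
    Behavior("behavior_distance_from_point_to_line",
             "Apply |ax+by+c|/sqrt(a^2+b^2).",
             "geometry", (0.0, 0.0, 1.0)),
]

geometry_hits = retrieve_by_topic(MANUAL, "geometry")
top_two = retrieve_top_k(MANUAL, (0.9, 0.1, 0.0), k=2)
```

With a large manual, the exhaustive sort would normally be replaced by an approximate or indexed nearest-neighbor search, which is the role FAISS plays in the setup described above.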

Prompts: The team provides explicit prompt templates for solution, reflection, behavior extraction, and behavior-conditioned inference (BCI). In BCI, the model is instructed to reference behaviors explicitly in its reasoning, which encourages consistently short, structured derivations.
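The solve, reflect, and extract stages might be chained roughly as follows. This is a minimal sketch under stated assumptions: `llm` is any callable from prompt string to completion string, the prompt wording is invented here and is not the team's actual template, and the `behavior_name: instruction` line format is a hypothetical output convention.

```python
from typing import Callable, Dict


def extract_behaviors(question: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Run solve -> reflect -> extract and parse the result into a manual."""
    # 1) Solve the problem to obtain a reasoning trace.
    trace = llm(f"Solve step by step:\n{question}")
    # 2) Reflect on the trace to find generalizable steps.
    reflection = llm(f"Reflect on this solution and note generalizable steps:\n{trace}")
    # 3) Ask for behaviors in a parseable one-per-line format (assumed format).
    raw = llm("List behaviors, one per line, as 'behavior_name: instruction':\n" + reflection)

    manual: Dict[str, str] = {}
    for line in raw.splitlines():
        name, sep, instruction = line.partition(":")
        name, instruction = name.strip(), instruction.strip()
        # Keep only well-formed entries; ignore any other model chatter.
        if sep and name.startswith("behavior_") and instruction:
            manual[name] = instruction
    return manual


def fake_llm(prompt: str) -> str:
    """Stand-in model so the sketch runs without any API access."""
    if prompt.startswith("List behaviors"):
        return (
            "behavior_inclusion_exclusion_principle: "
            "Avoid double counting by subtracting intersections\n"
            "not a behavior line"
        )
    return "stub text"


entries = extract_behaviors("How many integers in 1..100 are divisible by 2 or 3?", fake_llm)
```

Passing the model in as a callable keeps the sketch independent of any particular provider; a real pipeline would also need deduplication and quality filtering of the extracted entries.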

What are the evaluation modes?

- Behavior-Conditioned Inference (BCI): Retrieve K relevant behaviors and prepend them to the prompt.

- Behavior-Guided Self-Improvement: Extract behaviors from the model's own earlier attempts and feed them back as hints for revision.

- Behavior-Conditioned SFT (BC-SFT): Fine-tune students on teacher outputs that already follow behavior-guided reasoning, so that behavior use becomes parametric (no retrieval at test time).
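As a rough illustration of the first mode, retrieved behaviors can be placed ahead of the problem statement. The function name and the instruction wording below are hypothetical and do not reproduce the paper's prompt.

```python
def build_bci_prompt(question, behaviors):
    """Prepend retrieved behaviors to a question.

    behaviors: list of (name, instruction) pairs, already retrieved.
    """
    lines = [
        # Hypothetical instruction text, not the paper's template.
        "You may use the following behaviors. "
        "Cite a behavior by name whenever you apply it.",
        "",
    ]
    for name, instruction in behaviors:
        lines.append(f"- {name}: {instruction}")
    lines += ["", f"Problem: {question}", "Solution:"]
    return "\n".join(lines)


prompt = build_bci_prompt(
    "How many integers from 1 to 100 are divisible by 2 or 5?",
    [("behavior_inclusion_exclusion_principle",
      "Avoid double counting by subtracting intersections")],
)
```

The same builder could serve the self-improvement mode by passing in behaviors extracted from the model's own earlier attempt instead of ones retrieved from a shared manual.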

Key Results (MATH, AIME-24/25)

- Token efficiency: On MATH-500, BCI reduces reasoning tokens by up to 46% relative to the same model without behaviors, while matching or improving accuracy. This holds for both the R1-Llama-70B and Qwen3-32B students across token budgets (2,048–16,384).

- Self-improvement gains: On AIME-24, behavior-guided self-improvement beats a critique-and-revise baseline at nearly every budget, with up to 10% higher accuracy as budgets increase. This indicates better test-time scaling of accuracy, not just shorter traces.

- BC-SFT quality lift: Across Llama-3.1-8B, Qwen2.5-14B-Base, Qwen2.5-32B-Instruct, and Qwen3-14B students, BC-SFT consistently outperforms both standard SFT and the original base model in accuracy across budgets, while remaining more token-efficient. Importantly, the advantage is not explained by an easier training corpus: teacher correctness rates in the two training sets (original vs. behavior-conditioned) are close, yet BC-SFT students generalize better on AIME-24/25.

Why does this work?

The manual stores procedural knowledge (how-to strategies), as opposed to the declarative knowledge (facts) of classic RAG. By converting verbose derivations into short, reusable steps, the model avoids re-deriving them and can reallocate compute to novel subproblems. Behavior prompts act as structured hints that steer the decoder toward efficient, correct trajectories; BC-SFT then internalizes these trajectories so that behaviors are invoked implicitly, without prompt overhead.

What is inside a “behavior”?

Behaviors range from domain-general reasoning moves to precise mathematical tools, e.g.:

- behavior_inclusion_exclusion_principle: Avoid double counting by subtracting intersections.

- behavior_translate_verbal_to_equation: Systematically formalize word problems.

- behavior_distance_from_point_to_line: Apply |ax+by+c|/√(a²+b²) for tangency checks.

During BCI, the student explicitly cites each behavior it uses, which makes traces auditable and compact.
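To make one of these entries concrete, the point-to-line distance behavior corresponds to a single formula. The sketch below is illustrative only: the function names are hypothetical, and the tangent-to-a-circle check is just one assumed use of the formula.

```python
import math


def distance_point_to_line(a, b, c, x0, y0):
    """Distance from (x0, y0) to the line a*x + b*y + c = 0."""
    if a == 0 and b == 0:
        raise ValueError("a and b cannot both be zero")
    return abs(a * x0 + b * y0 + c) / math.hypot(a, b)


def is_tangent(a, b, c, cx, cy, r, tol=1e-9):
    """A line is tangent to a circle when its distance to the center equals r."""
    return math.isclose(distance_point_to_line(a, b, c, cx, cy), r, abs_tol=tol)


# Line 3x + 4y - 25 = 0 against the circle x^2 + y^2 = 25 (center at the origin).
d = distance_point_to_line(3, 4, -25, 0, 0)   # |-25| / 5 = 5.0
tangent = is_tangent(3, 4, -25, 0, 0, 5)      # True, since d equals the radius
```

A trace that cites the behavior by name can apply this one-line computation directly instead of re-deriving the formula, which is where the token savings come from.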

Retrieval and cost considerations

On MATH, behaviors are retrieved by topic; on AIME, the top-K behaviors are selected using BGE-M3 embeddings and FAISS. BCI introduces additional input tokens (the behaviors), but input tokens are pre-computable and non-autoregressive, and are generally billed at a lower rate than output tokens on commercial APIs. Because BCI shrinks the number of output tokens, overall cost can fall while latency improves. BC-SFT eliminates retrieval at test time entirely.
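The trade-off between extra input tokens and fewer output tokens can be checked with simple arithmetic. Every number below is made up for illustration: the prices, the token counts, and the size of the behavior block are assumptions, and the 46% reduction is the article's reported upper bound rather than a typical figure.

```python
def request_cost(input_tokens, output_tokens, price_in, price_out):
    """Cost of one request, given per-token prices for input and output."""
    return input_tokens * price_in + output_tokens * price_out


# Hypothetical prices in arbitrary units per token; output is billed 4x input.
PRICE_IN, PRICE_OUT = 1, 4

# Baseline: a 500-token problem and an 8,000-token reasoning trace.
baseline_cost = request_cost(500, 8000, PRICE_IN, PRICE_OUT)

# BCI: 600 extra input tokens for behaviors, output shortened by 46%.
bci_output = 8000 * 54 // 100   # 4,320 tokens remain
bci_cost = request_cost(500 + 600, bci_output, PRICE_IN, PRICE_OUT)

saving = baseline_cost - bci_cost
```

Under these assumed numbers the behavior block costs 600 units but saves 14,720 units of output, so the request is cheaper overall. The balance shifts if the retrieved behaviors are long or the output reduction is small.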

Summary

Meta's behavior-manual approach gives LLMs a working procedural memory: it abstracts recurring reasoning steps into reusable “behaviors”, applies them through behavior-conditioned inference or distills them with BC-SFT, and empirically delivers up to 46% fewer reasoning tokens while maintaining or improving accuracy (with up to 10% accuracy gains in the self-improvement setting). The method is simple to integrate (an index, a retriever, optional fine-tuning) and surfaces auditable traces, although scaling beyond math and managing a growing behavior corpus remain open engineering problems.


Asif Razzaq is the CEO of Marktechpost Media Inc. As an entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform offering in-depth coverage of machine learning and deep learning news that is both technically sound and accessible to a wide audience. The platform has over 2 million views per month.
