IBM and ETH Zürich Researchers Unveil Analog Foundation Models to Tackle Noise in In-Memory AI Hardware

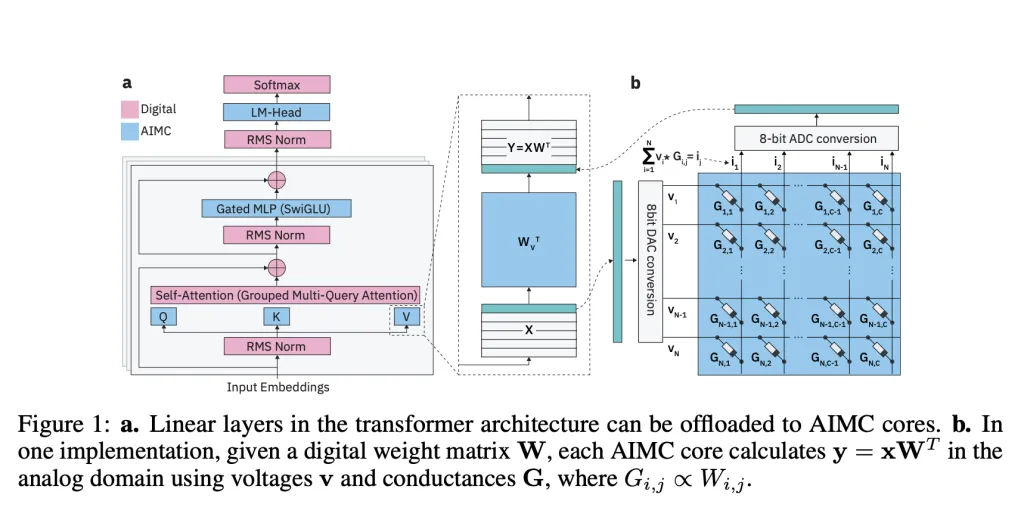

IBM researchers, together with ETH Zürich, have introduced a new class of Analog Foundation Models (AFMs) designed to bridge large language models (LLMs) and Analog In-Memory Computing (AIMC) hardware. AIMC has long promised a radical leap in efficiency: running large models in a footprint small enough for edge devices, thanks to dense non-volatile memory (NVM) that combines storage and computation. But the technology's Achilles' heel is noise. Performing matrix-vector multiplications directly inside NVM devices produces nondeterministic errors that cripple off-the-shelf models.

Why does analog computing matter for LLMs?

Unlike GPUs or TPUs, which shuttle data back and forth between memory and compute units, AIMC performs matrix-vector multiplications directly inside memory arrays. This design removes the von Neumann bottleneck and delivers large gains in throughput and power efficiency. Prior studies showed that combining AIMC with 3D NVM and Mixture-of-Experts (MoE) architectures could, in principle, support trillion-parameter models on compact accelerators. That could make foundation-scale AI feasible on devices far beyond the data center.
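To make "inside the memory array" concrete, here is a minimal Python sketch of the textbook crossbar picture. The simplifying assumptions are ours, not the paper's: each signed weight is stored as a differential pair of non-negative conductances, inputs arrive as voltages, and each output line sums the resulting Ohm's-law currents (Kirchhoff's current law). The function names `map_to_conductances` and `crossbar_mvm` are hypothetical, and no noise is modeled yet.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def map_to_conductances(weights: Matrix, g_max: float = 1.0) -> Tuple[Matrix, Matrix, float]:
    """Split each signed weight into two non-negative conductances (differential pair).

    Assumption: the largest |w| is mapped to g_max; real devices impose more constraints.
    """
    w_abs_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = g_max / w_abs_max
    g_pos = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_neg = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_pos, g_neg, scale


def crossbar_mvm(g_pos: Matrix, g_neg: Matrix, voltages: List[float], scale: float) -> List[float]:
    """Ideal (noise-free) analog matrix-vector product.

    Each output line collects the currents I = G * V of its devices; the two lines of a
    differential pair are subtracted and rescaled back to weight units.
    """
    outputs = []
    for row_pos, row_neg in zip(g_pos, g_neg):
        i_pos = sum(g * v for g, v in zip(row_pos, voltages))
        i_neg = sum(g * v for g, v in zip(row_neg, voltages))
        outputs.append((i_pos - i_neg) / scale)
    return outputs


if __name__ == "__main__":
    W = [[1.0, -2.0], [0.5, 0.25]]
    x = [3.0, 1.0]
    g_pos, g_neg, scale = map_to_conductances(W)
    print(crossbar_mvm(g_pos, g_neg, x, scale))  # [1.0, 1.75], the same as W @ x
```

The whole product is produced in one physical step by the array, which is where the throughput and energy advantage comes from; the weights never leave the memory.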

What makes Analog In-Memory Computing (AIMC) so difficult to use in practice?

The biggest obstacle is noise. AIMC computations suffer from device variability, DAC/ADC quantization, and runtime fluctuations that degrade model accuracy. Unlike quantization on GPUs, where errors are deterministic and manageable, analog noise is stochastic and unpredictable. Earlier research found methods to adapt small networks such as CNNs and RNNs to this kind of noise.
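The difference between deterministic and stochastic error can be illustrated with a toy dot product. In this sketch (our assumptions, not the paper's noise model), digital quantization is symmetric uniform rounding of the weights, and the analog path perturbs every weight with fresh multiplicative Gaussian noise on each call. The function names are hypothetical.

```python
import random
from typing import List, Optional


def quantize(value: float, n_bits: int = 4, v_max: float = 1.0) -> float:
    """Symmetric uniform quantization: a given input always lands on the same level."""
    levels = 2 ** (n_bits - 1) - 1
    clamped = max(-v_max, min(v_max, value))
    return round(clamped / v_max * levels) / levels * v_max


def digital_dot(w: List[float], x: List[float], n_bits: int = 4) -> float:
    """Dot product with quantized weights: the error vs. full precision is fixed."""
    return sum(quantize(wi, n_bits) * xi for wi, xi in zip(w, x))


def analog_dot(w: List[float], x: List[float], rel_noise: float = 0.05,
               rng: Optional[random.Random] = None) -> float:
    """Dot product where every weight gets fresh multiplicative Gaussian noise.

    The Gaussian, per-call noise model is an illustrative assumption.
    """
    if rng is None:
        rng = random.Random()
    return sum(wi * (1.0 + rng.gauss(0.0, rel_noise)) * xi for wi, xi in zip(w, x))


if __name__ == "__main__":
    w, x = [0.31, -0.72, 0.05], [1.0, 0.5, -2.0]
    print(digital_dot(w, x), digital_dot(w, x))  # identical on every call
    rng = random.Random(0)
    print(analog_dot(w, x, rng=rng), analog_dot(w, x, rng=rng))  # differ call to call
```

A deterministic error can be measured once and compensated; a random error has to be tolerated by the model itself, which is what the training pipeline described next aims for.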

How do Analog Foundation Models address the noise problem?

The IBM team introduces Analog Foundation Models, which integrate hardware-aware training to prepare LLMs for analog execution. Their pipeline uses:

- Noise injection during training to simulate AIMC randomness.

- Iterative weight clipping to keep weight distributions stable within device limits.

- Learned static input/output quantization ranges aligned with real hardware constraints.

- Distillation from pre-trained LLMs using 20B tokens of synthetic data.
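The first three ingredients can be sketched for a single linear layer. This is a minimal pure-Python illustration under our own assumptions, not the aihwkit-lightning implementation: clipping is a hypothetical per-row "alpha standard deviations" rule, `beta_in`/`beta_out` stand in for the learned static ranges (the gradient-based learning of these ranges is omitted), and the weight noise is additive Gaussian scaled to the largest weight. Distillation is not shown.

```python
import random
import statistics
from typing import List, Optional

Matrix = List[List[float]]


def clip_weights(weights: Matrix, alpha: float = 3.0) -> Matrix:
    """Clip each output row to +/- alpha standard deviations of that row.

    In training this would be reapplied after every optimizer step ("iterative" clipping).
    The per-row alpha-sigma rule is an assumed example.
    """
    clipped = []
    for row in weights:
        bound = alpha * statistics.pstdev(row)
        if bound == 0.0:  # constant row: nothing to clip
            clipped.append(list(row))
        else:
            clipped.append([max(-bound, min(bound, w)) for w in row])
    return clipped


def quantize_static(values: List[float], beta: float, n_bits: int = 8) -> List[float]:
    """Clamp to a fixed range [-beta, beta] and round onto a uniform grid.

    beta plays the role of a learned, static input or output range (DAC/ADC limits).
    """
    levels = 2 ** (n_bits - 1) - 1
    step = beta / levels
    return [round(max(-beta, min(beta, v)) / step) * step for v in values]


def noisy_linear_forward(weights: Matrix, x: List[float], beta_in: float, beta_out: float,
                         noise_std: float = 0.02,
                         rng: Optional[random.Random] = None) -> List[float]:
    """Training-time forward pass of a linear layer with simulated analog non-idealities."""
    if rng is None:
        rng = random.Random()
    x_q = quantize_static(x, beta_in)  # input (DAC-like) quantization
    w_max = max(abs(w) for row in weights for w in row)
    y = []
    for row in weights:
        # Fresh additive Gaussian noise, scaled to the largest weight, on every call
        # (an assumed noise model used only for illustration).
        noisy_row = [w + rng.gauss(0.0, noise_std * w_max) for w in row]
        y.append(sum(w * xi for w, xi in zip(noisy_row, x_q)))
    return quantize_static(y, beta_out)  # output (ADC-like) quantization


if __name__ == "__main__":
    W = clip_weights([[0.1, -0.2, 0.05, 1.5], [0.3, -0.1, 0.2, -0.25]], alpha=1.5)
    print(noisy_linear_forward(W, [0.5, -1.0, 2.0, 0.1], beta_in=1.0, beta_out=2.0,
                               rng=random.Random(0)))
```

Because the noise is redrawn on every forward pass, the optimizer never sees the same perturbation twice and is pushed toward weights whose outputs are insensitive to it, while clipping keeps the distribution compact enough to map onto device conductance ranges.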

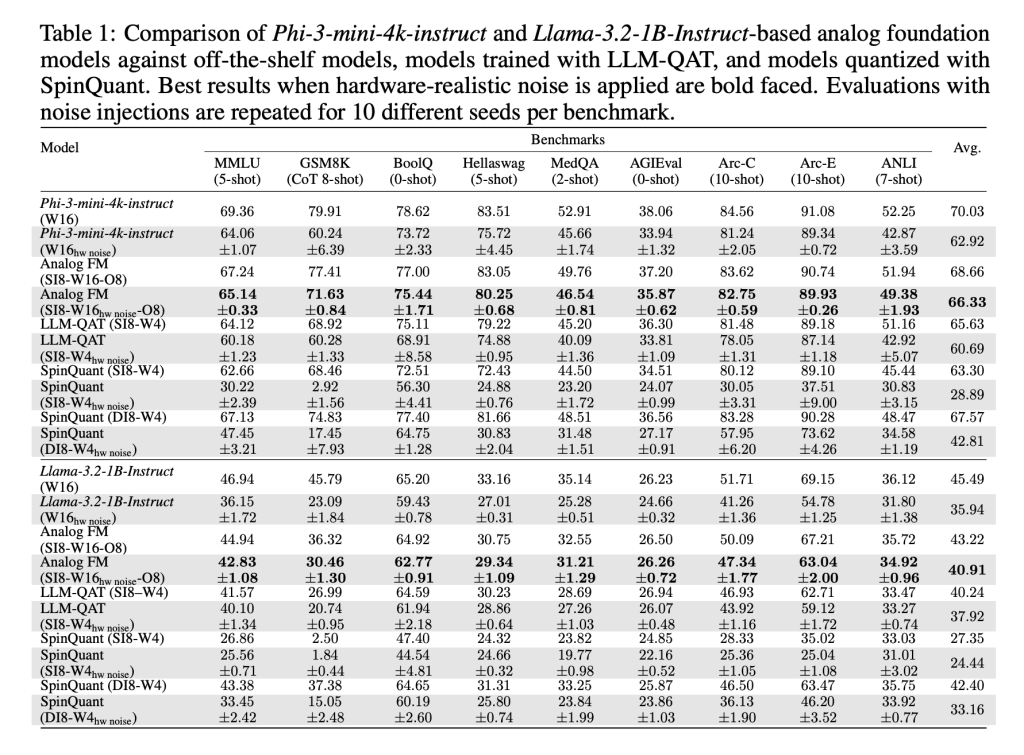

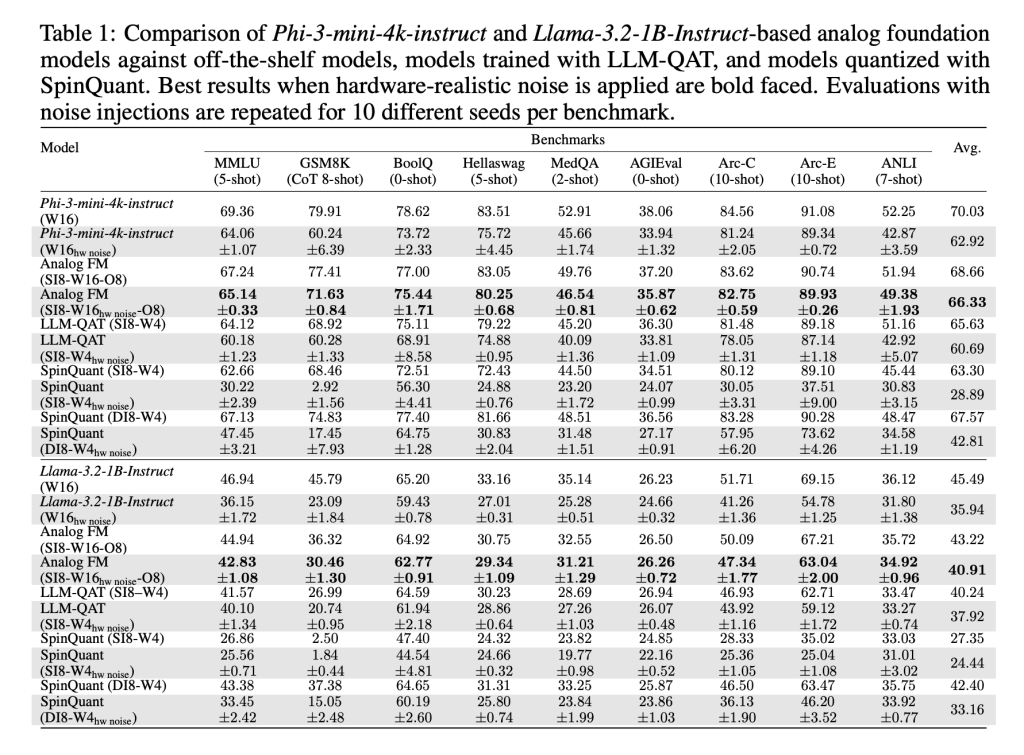

These methods, implemented with aihwkit-lightning, allow models such as Phi-3-mini-4k-instruct and Llama-3.2-1B-Instruct to sustain performance comparable to weight-quantized 4-bit / activation-quantized 8-bit baselines under analog noise. In evaluations across reasoning and factual benchmarks, AFMs outperformed both quantization-aware training (QAT) and post-training quantization (SpinQuant).

Are these models only suitable for analog hardware?

No. An unexpected outcome is that AFMs also perform robustly on low-precision digital hardware. Because AFMs are trained to tolerate noise and clipping, they handle simple post-training round-to-nearest (RTN) quantization better than existing methods. This makes them useful not only for AIMC accelerators but also for commodity digital inference hardware.
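Round-to-nearest is the simplest post-training scheme: pick a step size, divide, round, and rescale. The sketch below assumes symmetric per-row 4-bit RTN (our choice, matching the 4-bit weight baseline above; the source does not give the exact recipe). It also shows one plausible reason clipping-trained weights fare better: a single outlier inflates the step size for the whole row. This is our illustration, not the paper's analysis.

```python
from typing import List, Tuple


def rtn_quantize(row: List[float], n_bits: int = 4) -> Tuple[List[float], float]:
    """Symmetric per-row round-to-nearest (RTN) quantization, returned dequantized.

    The step size is set by the row's largest |w|, so one outlier coarsens the grid
    for every other weight in the row.
    """
    q_max = 2 ** (n_bits - 1) - 1  # 7 for signed 4-bit
    w_abs_max = max(abs(w) for w in row)
    if w_abs_max == 0.0:
        return list(row), 0.0
    scale = w_abs_max / q_max
    return [round(w / scale) * scale for w in row], scale


if __name__ == "__main__":
    with_outlier = [0.1, -0.2, 0.15, 4.0]
    clipped = [0.1, -0.2, 0.15, 0.3]
    print(rtn_quantize(with_outlier)[0])  # the three small weights collapse to 0.0
    print(rtn_quantize(clipped)[0])       # the small weights keep distinct nonzero levels
```

No calibration data or retraining is involved, which is why RTN is attractive when the model itself is already robust to perturbations.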

Can performance scale with more compute at inference time?

Yes. The researchers tested test-time compute scaling on the MATH-500 benchmark, generating multiple answers per query and selecting the best one with a reward model. AFMs showed better scaling behavior than QAT models, with the accuracy gap narrowing as more inference compute was allocated. This is consistent with AIMC's strengths: low-power, high-throughput inference rather than training.
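The selection procedure described above is best-of-N sampling. A minimal sketch follows; `generate` and `reward` are hypothetical callables standing in for the LLM sampler and the reward model, and the paper's actual sampling settings and reward model are not reproduced here.

```python
import random
from typing import Callable, List


def best_of_n(query: str,
              generate: Callable[[str], str],
              reward: Callable[[str, str], float],
              n: int = 8) -> str:
    """Sample n candidate answers and return the one the reward model scores highest.

    Spending more inference compute here simply means raising n.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates: List[str] = [generate(query) for _ in range(n)]
    return max(candidates, key=lambda answer: reward(query, answer))


if __name__ == "__main__":
    rng = random.Random(0)

    # Hypothetical stand-ins: a "model" that guesses a number and a "reward model"
    # that prefers guesses closer to the true answer 42.
    def toy_generate(query: str) -> str:
        return str(rng.randint(30, 50))

    def toy_reward(query: str, answer: str) -> float:
        return -abs(int(answer) - 42)

    for n in (1, 4, 16):
        print(n, best_of_n("What is 6 * 7?", toy_generate, toy_reward, n=n))
```

Each extra sample is an independent forward pass, so the cost is pure inference throughput, which is exactly the workload AIMC hardware is meant to make cheap.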

What does this mean for the future of Analog In-Memory Computing (AIMC)?

The research team provides the first systematic demonstration that large LLMs can be adapted to AIMC hardware without catastrophic accuracy loss. While training AFMs is resource-intensive and reasoning tasks such as GSM8K still show accuracy gaps, the results are a milestone. The combination of energy efficiency, robustness to noise, and compatibility with digital hardware makes AFMs a promising direction for scaling foundation models beyond GPU limits.

Summary

The introduction of Analog Foundation Models marks a critical milestone for scaling LLMs beyond the limits of digital accelerators. By making models robust to the unpredictable noise of analog in-memory computing, the research team shows that AIMC can move from a theoretical promise to a practical platform. While training costs remain high and reasoning benchmarks still show gaps, this work establishes a path toward energy-efficient large-scale models running on compact hardware, pushing foundation models closer to edge deployment.

Check out the Paper and GitHub Page.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
