IBM AI Research Releases Two English Granite Embedding Models Based on the ModernBERT Architecture

IBM has quietly built a strong presence in the open-source AI ecosystem, and its latest release shows why it should not be overlooked. The company has introduced two new embedding models, Granite-Embedding-English-R2 and Granite-Embedding-Small-English-R2, designed specifically for high-performance retrieval and RAG (retrieval-augmented generation) systems. These models are not only compact and efficient but also licensed under Apache 2.0, making them ready for commercial deployment.

What models has IBM released?

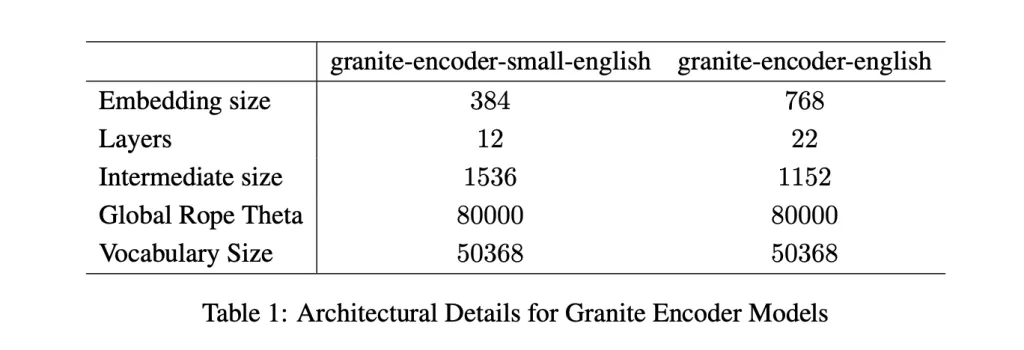

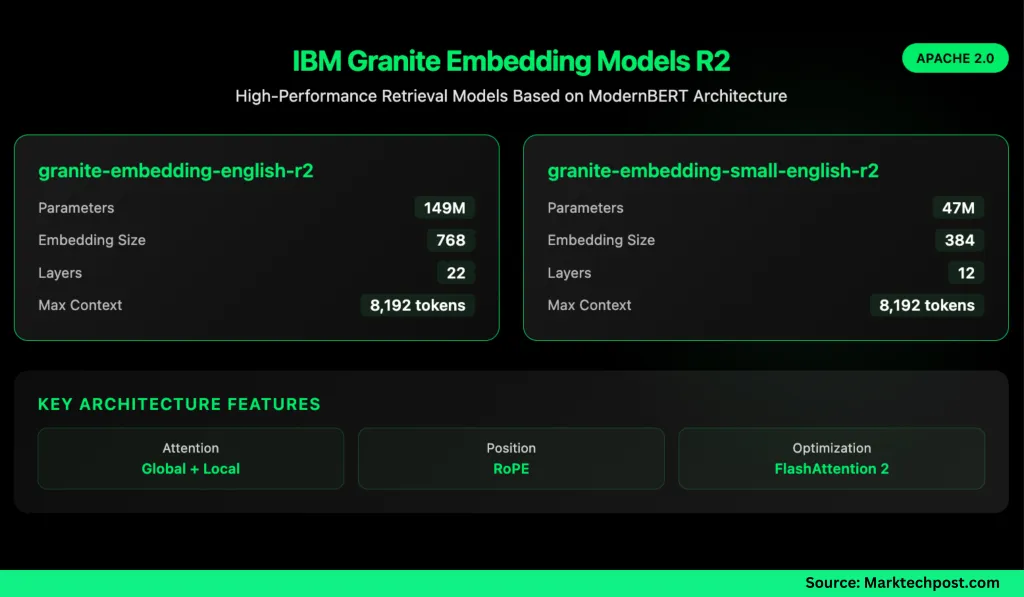

The two models target different compute budgets. The larger Granite-Embedding-English-R2 has 149 million parameters and an embedding size of 768, and is built on a 22-layer ModernBERT encoder. Its smaller counterpart, Granite-Embedding-Small-English-R2, uses a 12-layer ModernBERT encoder, with only 47 million parameters and an embedding size of 384.

Despite the size difference, both support a maximum context length of 8192 tokens, a major upgrade from the first generation of Granite embeddings. This long-context capability makes them well suited to enterprise workloads involving long documents and complex retrieval tasks.
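The embedding sizes above translate directly into storage cost for a vector index. The following is a rough back-of-the-envelope sketch, not a measurement: it assumes vectors are stored as uncompressed float32 values in a flat index, and the corpus size of one million documents is a hypothetical figure chosen for illustration.

```python
# Rough index-size estimate. Assumptions (not from the article): float32
# storage, a flat index with no metadata, and a hypothetical corpus size.
BYTES_PER_FLOAT32 = 4

# Embedding sizes as stated in the article.
EMBEDDING_DIMS = {
    "Granite-Embedding-English-R2": 768,
    "Granite-Embedding-Small-English-R2": 384,
}


def index_size_bytes(num_docs: int, embedding_dim: int) -> int:
    """Raw size in bytes of num_docs float32 vectors of the given dimension."""
    return num_docs * embedding_dim * BYTES_PER_FLOAT32


num_docs = 1_000_000  # hypothetical corpus size
for name, dim in EMBEDDING_DIMS.items():
    gib = index_size_bytes(num_docs, dim) / 2**30
    print(f"{name}: {gib:.2f} GiB for {num_docs:,} vectors")
```

Under these assumptions the small model needs half the raw index storage of the larger one, since storage scales linearly with the embedding size.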

What’s in the architecture?

Both models are built on the ModernBERT backbone, which introduces several optimizations:

- Alternating global and local attention, to balance efficiency with long-range dependencies.

- Rotary positional embeddings (RoPE), tuned for positional interpolation to enable longer context windows.

- FlashAttention 2, to improve memory usage and throughput at inference time.
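To make the first optimization concrete, here is a minimal sketch of how alternating global and local attention reduces the number of token pairs a layer has to score. The window size, the number of layers, and the "one global layer in every three" pattern are illustrative assumptions, not values taken from the article, and the helper names are hypothetical.

```python
# Sketch of alternating global / local (sliding-window) attention masks.
# All sizes and the layer pattern below are illustrative assumptions.

def global_mask(seq_len):
    """Every token may attend to every other token."""
    return [[True] * seq_len for _ in range(seq_len)]


def local_mask(seq_len, window):
    """Each token attends only to tokens within window // 2 positions of itself."""
    half = window // 2
    return [[abs(i - j) <= half for j in range(seq_len)] for i in range(seq_len)]


def layer_masks(num_layers, seq_len, window, global_every):
    """Use a global mask on every global_every-th layer and a local mask elsewhere."""
    masks = []
    for layer in range(num_layers):
        if layer % global_every == 0:
            masks.append(("global", global_mask(seq_len)))
        else:
            masks.append(("local", local_mask(seq_len, window)))
    return masks


def attended_pairs(mask):
    """Number of (query, key) pairs the mask allows."""
    return sum(sum(row) for row in mask)


for kind, mask in layer_masks(num_layers=6, seq_len=16, window=4, global_every=3):
    print(kind, attended_pairs(mask))
```

The global layers score a number of pairs that grows quadratically with sequence length, while the local layers grow only linearly, which is where the efficiency gain for long inputs comes from.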

IBM also used a multi-stage training pipeline. The process began with masked language pretraining on a two-trillion-token English dataset drawn from the web, Wikipedia, PubMed, BookCorpus, and internal IBM technical documents. This was followed by context extension from 1K to 8K tokens, contrastive learning with distillation from Mistral-7B, and domain-specific tuning for conversational, table, and code retrieval tasks.
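The article does not spell out the contrastive objective IBM used. As a generic illustration of contrastive learning for retrieval, the sketch below implements an InfoNCE-style loss with in-batch negatives in plain Python. The temperature value and the toy vectors are assumptions, and the distillation step from Mistral-7B is deliberately left out.

```python
import math

# Generic InfoNCE loss with in-batch negatives. This is an illustration of
# contrastive learning in general, not IBM's training recipe.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce_loss(queries, passages, temperature=0.05):
    """Mean loss where passages[i] is the positive for queries[i];
    every other passage in the batch acts as a negative."""
    q = [normalize(v) for v in queries]
    p = [normalize(v) for v in passages]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [dot(qi, pj) / temperature for pj in p]
        top = max(logits)  # subtract the max for numerical stability
        log_denominator = top + math.log(sum(math.exp(l - top) for l in logits))
        total += log_denominator - logits[i]
    return total / len(q)


# Toy 2-dimensional vectors (assumed data).
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]      # each query matches its passage
misaligned = [[0.0, 1.0], [1.0, 0.0]]   # positives point the wrong way

print("aligned loss:", info_nce_loss(queries, aligned))
print("misaligned loss:", info_nce_loss(queries, misaligned))
```

The loss is low when each query is closest to its own passage and high otherwise, which is the signal that pulls matching query and document embeddings together during training.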

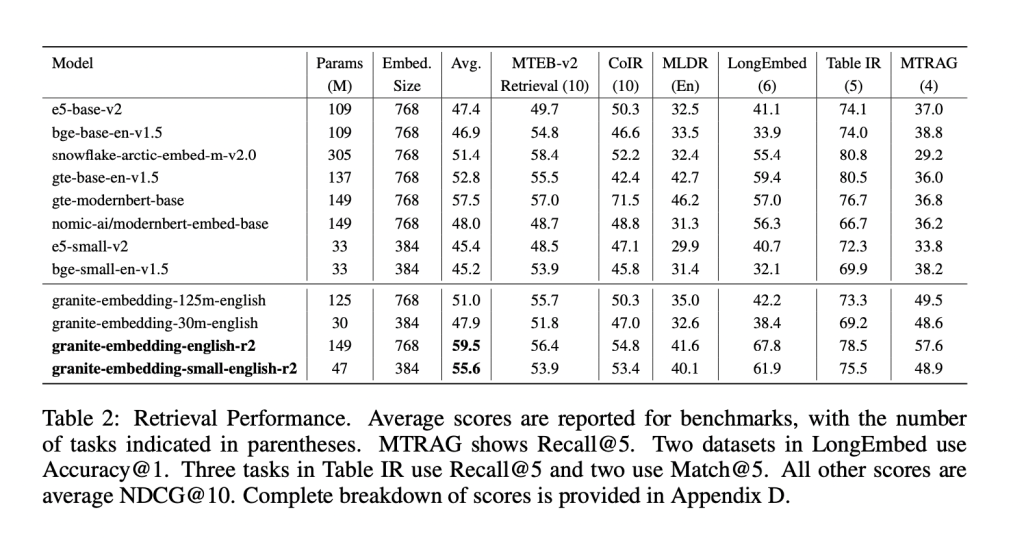

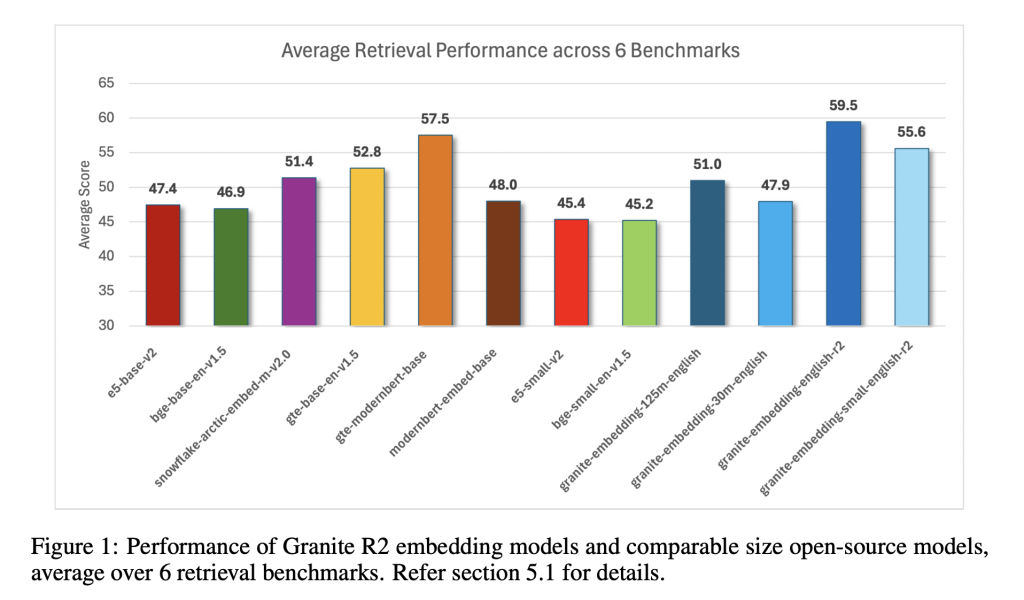

How do they perform on benchmarks?

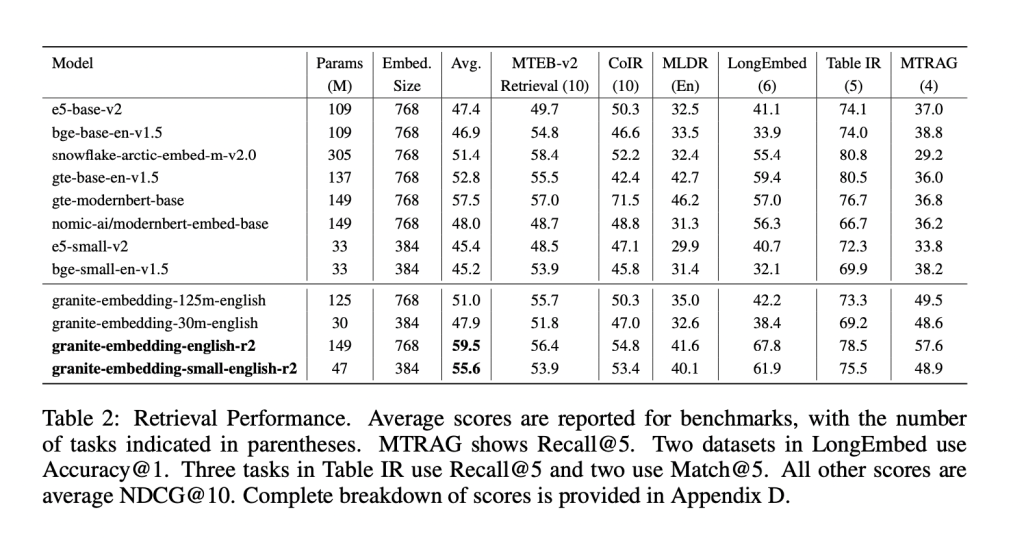

The Granite R2 models deliver strong results across widely used retrieval benchmarks. On MTEB-v2 and BEIR, the larger Granite-Embedding-English-R2 outperforms similarly sized models such as BGE Base, E5, and Arctic Embed. The smaller Granite-Embedding-Small-English-R2 achieves accuracy close to that of models two to three times its size, making it particularly attractive for latency-sensitive workloads.

Both models also perform well in specialized domains:

- Long-document retrieval (MLDR, LongEmbed), where 8K context support is crucial.

- Table retrieval (OTT-QA, FinQA, OpenWikiTables), where structured reasoning is needed.

- Code retrieval (CoIR), which covers both text-to-code and code-to-text queries.
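The article does not name the metric behind these results. Retrieval benchmarks are commonly scored with nDCG@k, so the sketch below shows how that metric works under that assumption. The relevance labels are toy data, and for simplicity the ideal ranking is computed only from the documents present in the ranked list.

```python
import math

# nDCG@k sketch. Assumption: the input is the graded relevance of each
# returned document, in the order the retriever ranked them.

def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the top k results."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances, k):
    """DCG normalized by the DCG of the best possible ordering of the same items."""
    ideal = dcg_at_k(sorted(ranked_relevances, reverse=True), k)
    if ideal == 0:
        return 0.0
    return dcg_at_k(ranked_relevances, k) / ideal


# Toy rankings: 1 marks a relevant document, 0 an irrelevant one.
print(ndcg_at_k([1, 0, 0], k=3))  # relevant document ranked first
print(ndcg_at_k([0, 1, 0], k=3))  # relevant document ranked second
```

A score of 1 means the relevant documents appear in the best possible order; pushing them further down the list lowers the score.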

Are they fast enough for large-scale use?

Efficiency is one of the standout aspects of these models. On an NVIDIA H100 GPU, Granite-Embedding-Small-English-R2 encodes nearly 200 documents per second, which is faster than BGE Small and E5 Small. The larger Granite-Embedding-English-R2 also reaches 144 documents per second, outperforming many ModernBERT-based alternatives.
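To put those throughput figures in perspective, here is a small arithmetic sketch of how long a bulk encoding job might take. It treats "nearly 200" as 200 documents per second, assumes throughput stays constant for the whole job, and uses a hypothetical corpus of 10 million documents; real throughput will vary with document length and batch size.

```python
# Encoding-time estimate from the H100 throughput figures quoted above.
# Assumptions: constant throughput, one GPU, hypothetical corpus size.
DOCS_PER_SECOND = {
    "Granite-Embedding-Small-English-R2": 200,  # "nearly 200" rounded up
    "Granite-Embedding-English-R2": 144,
}


def encoding_hours(num_docs: int, docs_per_second: float) -> float:
    """Hours needed to encode num_docs at a fixed throughput."""
    return num_docs / docs_per_second / 3600


num_docs = 10_000_000  # hypothetical corpus size
for name, rate in DOCS_PER_SECOND.items():
    print(f"{name}: {encoding_hours(num_docs, rate):.1f} hours")
```

Under these assumptions the small model finishes the job in roughly 14 hours and the larger one in roughly 19 hours on a single GPU.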

Crucially, these models remain practical even on CPUs, allowing businesses to run them in environments with limited GPU capacity. This balance of speed, compact size, and retrieval accuracy makes them well suited to real-world deployments.

What does this mean for retrieval in practice?

IBM’s Granite Embedding R2 models show that embedding systems do not need massive parameter counts to be effective. They combine long-context support, benchmark-leading accuracy, and high throughput in a compact architecture. For companies building retrieval pipelines, knowledge management systems, or RAG workflows, Granite R2 offers a production-ready, commercially viable alternative to existing open-source options.
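At query time, a retrieval pipeline built on any embedding model comes down to comparing a query vector against document vectors. The sketch below shows that step with cosine similarity in plain Python. The 3-dimensional vectors and document IDs are toy stand-ins (real Granite R2 embeddings have 768 or 384 dimensions), and the function names are hypothetical.

```python
import math

# Cosine-similarity retrieval over toy vectors. In a real pipeline the
# vectors would come from an embedding model; here they are made up.

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the k (doc_id, score) pairs with the highest cosine similarity."""
    scored = sorted(
        ((cosine(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()),
        reverse=True,
    )
    return [(doc_id, score) for score, doc_id in scored[:k]]


doc_vecs = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}
query_vec = [1.0, 0.0, 0.0]

for doc_id, score in top_k(query_vec, doc_vecs, k=2):
    print(doc_id, round(score, 4))
```

For a large corpus this brute-force scan would normally be replaced by an approximate nearest-neighbor index, but the scoring logic stays the same.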

Summary

In short, IBM’s Granite Embedding R2 models strike an effective balance between compact design, long-context capability, and strong retrieval performance. They provide a practical alternative to larger open-source embedding models, with throughput optimized for both GPU and CPU environments and an Apache 2.0 license that permits unrestricted commercial use. For businesses deploying RAG, search, or large-scale knowledge systems, Granite R2 is an efficient and production-ready option.

Check out the paper, Granite-Embedding-Small-English-R2, and Granite-Embedding-English-R2.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience.