MBZUAI Researchers Release K2 Think: A 32B Open-Source System for Advanced AI Reasoning That Outperforms Reasoning Models 20x Larger

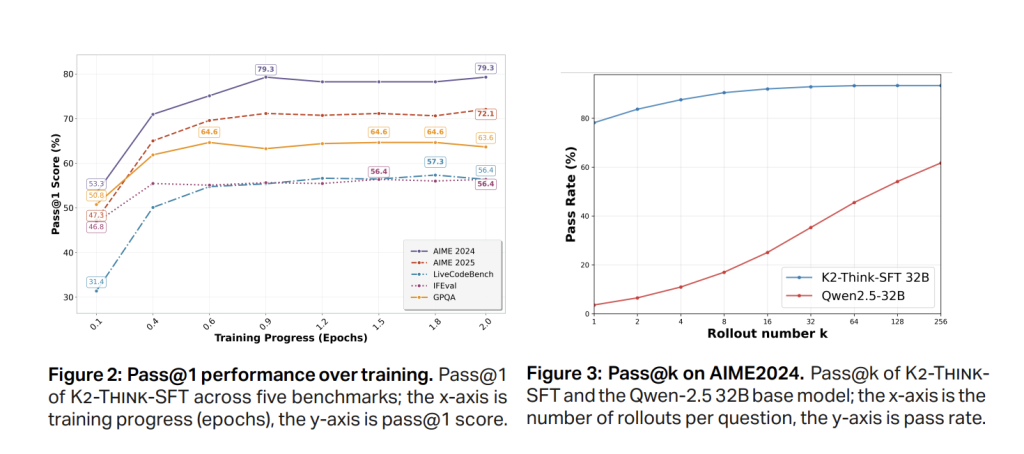

A team of researchers from MBZUAI's Institute of Foundation Models and G42 has released K2 Think, a 32B-parameter open reasoning system for advanced AI reasoning. It combines several components:

- long chain-of-thought supervised fine-tuning;
- reinforcement learning with verifiable rewards;
- agentic planning;
- test-time scaling;
- inference optimizations (speculative decoding plus wafer-scale hardware).

The result is frontier-level math performance with a far smaller parameter count, along with competitive results on code and science. The release is transparent and fully open, covering weights, data, and code.

System Overview

K2 Think is built by post-training the open-weight Qwen2.5-32B base model and adding a lightweight test-time compute scaffold. The design emphasizes parameter efficiency: a 32B backbone is chosen deliberately so the team can iterate and deploy quickly while leaving headroom for post-training gains. The core recipe combines six "pillars":

1. long chain-of-thought (CoT) supervised fine-tuning;
2. reinforcement learning with verifiable rewards (RLVR);
3. agentic planning before solving;
4. test-time scaling via best-of-N selection with verifiers;
5. speculative decoding;
6. inference on a wafer-scale engine.

The goals are straightforward:

- raise pass@1 on competition-math benchmarks;
- maintain strong code and science performance;
- keep response length and wall-clock latency under control through plan-before-you-think prompting and hardware-aware inference.

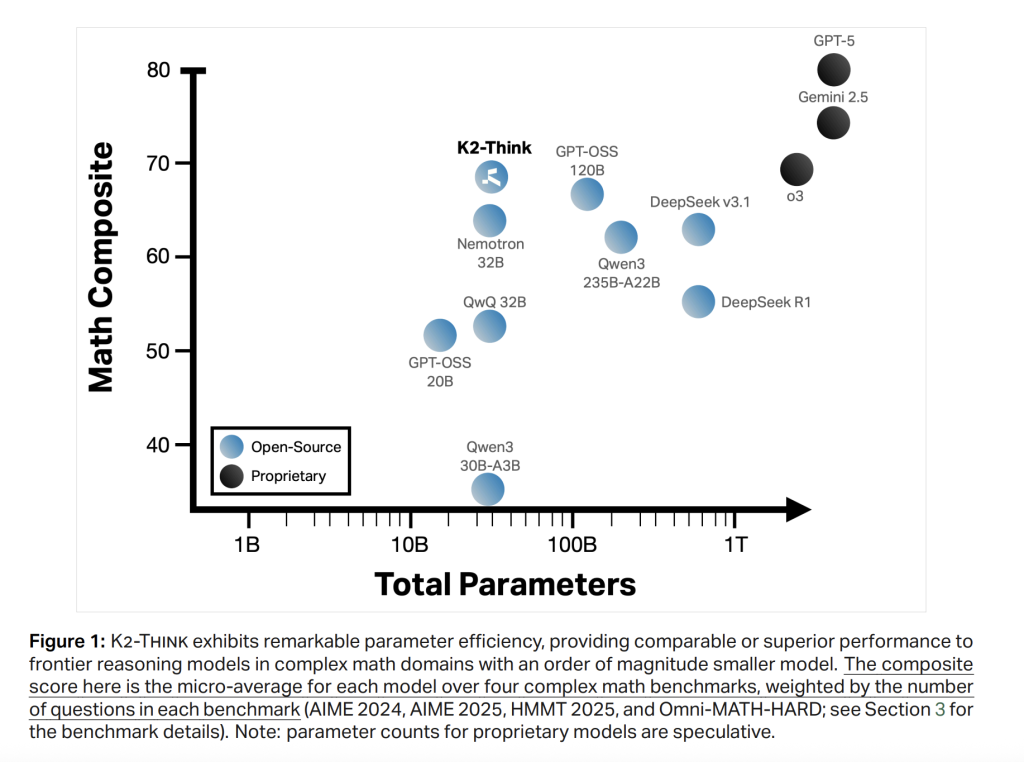

Pillar 1: Long CoT SFT

Phase-1 SFT uses curated long chain-of-thought traces and instruction/response pairs covering math, code, science, instruction following, and general chat (AM-Thinking-v1-Distilled). This teaches the base model to externalize intermediate reasoning and to adopt a structured output format. Pass@1 gains arrive early (by ≈0.5 epoch). At the SFT checkpoint before RL, AIME’24 stabilizes around 79% and AIME’25 around 72%, which indicates convergence.
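The following sketch illustrates one common way to turn such instruction/response pairs into SFT training examples. It concatenates a prompt, a delimited reasoning trace, and a final answer, and it masks the prompt tokens out of the loss. The article does not describe K2 Think's exact data format. The "think" delimiter token ids and the helper names are hypothetical. The `-100` ignore label assumes a PyTorch-style trainer, since it is the default `ignore_index` of cross-entropy loss there.

```python
from dataclasses import dataclass
from typing import List

# Label value excluded from the loss; -100 is the default ignore_index of
# torch.nn.CrossEntropyLoss (assumption: a PyTorch-style trainer).
IGNORE_INDEX = -100


@dataclass
class SFTExample:
    input_ids: List[int]
    labels: List[int]


def build_sft_example(
    prompt_ids: List[int],
    reasoning_ids: List[int],
    answer_ids: List[int],
    think_open_id: int,
    think_close_id: int,
) -> SFTExample:
    """Concatenate prompt + delimited reasoning trace + final answer.

    Only the response (reasoning and answer) is supervised; prompt positions
    get IGNORE_INDEX so the model is not trained to reproduce the question.
    The delimiter ids are hypothetical placeholders for a "think" tag pair.
    """
    response = [think_open_id, *reasoning_ids, think_close_id, *answer_ids]
    input_ids = [*prompt_ids, *response]
    labels = [IGNORE_INDEX] * len(prompt_ids) + response
    return SFTExample(input_ids=input_ids, labels=labels)


if __name__ == "__main__":
    ex = build_sft_example([1, 2, 3], [10, 11], [20], think_open_id=100, think_close_id=101)
    print(ex.input_ids)  # [1, 2, 3, 100, 10, 11, 101, 20]
    print(ex.labels)     # [-100, -100, -100, 100, 10, 11, 101, 20]
```

Because the delimiters are part of the supervised response, the model learns to emit the structured reasoning-then-answer layout itself.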

Pillar 2: RL with verifiable rewards

K2 Think is then trained with RLVR on Guru, a ~92K-prompt dataset designed for verifiable end-to-end correctness. It spans six domains: Math, Code, Science, Logic, Simulation, and Tabular. Training uses the verl library with a GRPO-style policy-gradient algorithm.

One observation stands out. Starting RL from a strong SFT checkpoint yields modest absolute gains that can plateau or even degrade. Applying the same RL recipe directly to the base model, by contrast, produces large relative improvements over the course of training (e.g., on AIME’24). Together these results point to a trade-off between SFT strength and RL headroom.
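The RLVR loop rests on two pieces: a reward that can be checked automatically, and a policy-gradient update. The sketch below assumes a math-style task whose final answer appears in `\boxed{...}`. It pairs a binary exact-match reward with GRPO-style group-relative advantages, where each sampled completion's reward is normalized by the mean and standard deviation of its group. The helper names, the answer format, and the use of population standard deviation are all assumptions. verl's actual reward functions and GRPO implementation differ in detail.

```python
import re
import statistics
from typing import List, Optional


def extract_boxed(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in a completion (no nested braces)."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted final answer exactly matches the reference."""
    answer = extract_boxed(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: center each reward on its group mean, scale by group std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std (assumption)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled completions for the same prompt whose reference answer is "42".
    group = ["... \\boxed{42}", "... \\boxed{41}", "no final answer", "... \\boxed{ 42 }"]
    rewards = [verifiable_reward(c, "42") for c in group]
    print(rewards)  # [1.0, 0.0, 0.0, 1.0]
    print(group_relative_advantages(rewards))  # approximately [1.0, -1.0, -1.0, 1.0]
```

If every completion in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal. This is worth keeping in mind when reading the SFT-versus-RL trade-off described above.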

A second ablation shows that multi-stage RL starting from a reduced context window (e.g., 16K → 32K) underperforms and fails to recover the SFT baseline. This suggests that training below the maximum sequence length used during SFT can disrupt learned reasoning patterns.

Pillars 3–4: Agentic "plan before you think" and test-time scaling

At inference time, the system first elicits a compact plan before generating the full solution. It then performs best-of-N sampling (e.g., N = 3) and uses verifiers to select the answer most likely to be correct. Two effects are reported:

- The combined scaffold delivers consistent quality gains.
- Final responses get shorter despite the added plan. Average token counts fall across benchmarks, by up to ~11.7% (e.g., on Omni-HARD), and overall lengths are comparable to those of larger open models.

Both effects matter for latency and cost.
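The inference flow can be sketched as a small orchestration function. This is only a sketch. `planner`, `solver`, and `verifier` are hypothetical callables standing in for model calls, and the verifier is assumed to return a scalar score per candidate. K2 Think's actual prompts and verifier design may differ.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins for model calls (not an actual K2 Think API).
Planner = Callable[[str], str]          # question -> short plan
Solver = Callable[[str], str]           # prompt -> full candidate solution
Verifier = Callable[[str, str], float]  # (question, candidate) -> score, higher is better


def plan_then_best_of_n(
    question: str,
    planner: Planner,
    solver: Solver,
    verifier: Verifier,
    n: int = 3,
) -> Tuple[str, List[str]]:
    """Plan first, sample n solutions conditioned on the plan, keep the top-scored one."""
    # Step 1: elicit a compact plan before any full solution is written.
    plan = planner(question)
    prompt = f"{question}\n\nPlan:\n{plan}\n\nNow solve the problem."
    # Step 2: draw n independent candidates from the plan-augmented prompt.
    candidates = [solver(prompt) for _ in range(n)]
    # Step 3: score every candidate; max() returns the first one on ties.
    best = max(candidates, key=lambda c: verifier(question, c))
    return best, candidates


if __name__ == "__main__":
    canned = iter(["answer A", "answer B", "answer C"])
    best, cands = plan_then_best_of_n(
        "What is 6 * 7?",
        planner=lambda q: "Multiply the two numbers.",
        solver=lambda prompt: next(canned),
        verifier=lambda q, c: {"answer A": 0.2, "answer B": 0.9, "answer C": 0.5}[c],
    )
    print(best)  # answer B
```

Keeping the plan as a separate, short generation is what lets it steer every one of the N samples without repeating full reasoning traces.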

Table-level analysis shows that K2 Think's responses are shorter than those of Qwen3-235B-A22B and in the same range as GPT-OSS-120B on math. After adding plan-before-you-think and verifiers, K2 Think's average token counts drop relative to its own post-training checkpoint:

- AIME’24: −6.7%
- AIME’25: −3.9%
- HMMT25: −7.2%
- Omni-HARD: −11.7%
- LCBv5: −10.5%
- GPQA-D: …
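The percentages are relative changes in mean response length. Written out, with $\bar{L}_b$ denoting the average number of output tokens on benchmark $b$ (notation introduced here for illustration only):

$$
\Delta_b = 100\% \times \frac{\bar{L}_b^{\text{plan+verifier}} - \bar{L}_b^{\text{post-train}}}{\bar{L}_b^{\text{post-train}}}
$$

For example, Omni-HARD's −11.7% means that a hypothetical post-training average of 10,000 tokens would shrink to roughly 8,830 tokens with the scaffold.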

Pillars 5–6: Speculative decoding and wafer-scale inference

K2 Think targets inference on the Cerebras Wafer-Scale Engine with speculative decoding, advertising per-request throughput above 2,000 tokens/sec. At that speed, the test-time scaffold becomes practical for both production use and research loops. The hardware-aware inference path is a core part of the release and fits the system's "small but capable" philosophy.
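Speculative decoding works by having a small draft model propose several tokens, which the large target model then verifies. This lets multiple tokens be committed per expensive target step. The toy sketch below shows the greedy (argmax) variant with deterministic stand-in "models", and the function names are hypothetical. The article does not say which variant K2 Think uses. Production systems typically use a sampling-aware acceptance rule (rejection sampling) and batch the verification into a single target forward pass.

```python
from typing import Callable, List, Sequence

# Greedy next-token function: token context -> next token id (toy stand-in for a model).
NextToken = Callable[[Sequence[int]], int]


def greedy_decode(target: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Baseline: one target call per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(target(out))
    return out


def speculative_decode_greedy(
    target: NextToken, draft: NextToken, prompt: List[int], max_new: int, k: int = 4
) -> List[int]:
    """Draft-then-verify decoding; output is identical to greedy_decode(target, ...)."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        # 1) The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2) The target checks proposals in order and keeps the longest matching
        #    prefix (a real system scores all k positions in one forward pass).
        accepted = 0
        while accepted < k and target(out + proposal[:accepted]) == proposal[accepted]:
            accepted += 1
        out.extend(proposal[:accepted])
        # 3) The target always adds one token: the correction at the first
        #    mismatch, or a "bonus" token when every proposal was accepted.
        out.append(target(out))
    return out[: len(prompt) + max_new]


if __name__ == "__main__":
    target = lambda ctx: (ctx[-1] * 3 + 1) % 17
    # Draft agrees with the target except when the context length is a multiple of 4.
    draft = lambda ctx: (ctx[-1] * 3 + 1) % 17 if len(ctx) % 4 else (ctx[-1] + 5) % 17
    print(speculative_decode_greedy(target, draft, [2], max_new=10) == greedy_decode(target, [2], 10))  # True
```

Every committed token is one the target would have produced anyway, so the output matches plain greedy decoding. The speedup depends on how often the draft's proposals are accepted.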

Evaluation Protocol

Benchmarks cover three areas:

- competition-level math: AIME’24, AIME’25, HMMT’25, Omni-MATH-HARD;
- code: LiveCodeBench v5, SciCode (sub/main);
- science knowledge and reasoning: GPQA-Diamond, HLE.

The research team reports a standardized setup: a maximum generation length of 64K tokens, temperature 1.0, top-p 0.95, and a stop marker. Each score is the average of 16 independent pass@1 evaluations, which reduces run-to-run variance.
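With temperature-1.0 sampling, a single pass@1 run is noisy, and averaging 16 runs estimates the expected single-sample accuracy. The minimal sketch below assumes each run is recorded as a per-problem list of correct/incorrect flags. This layout is hypothetical and is not the team's evaluation harness.

```python
import statistics
from typing import List, Tuple


def averaged_pass_at_1(run_results: List[List[bool]]) -> Tuple[float, float]:
    """Mean and sample standard deviation of pass@1 (%) across independent runs.

    run_results[r][p] is True if run r solved problem p with a single sample.
    """
    per_run = [100.0 * sum(run) / len(run) for run in run_results]
    spread = statistics.stdev(per_run) if len(per_run) > 1 else 0.0
    return statistics.fmean(per_run), spread


if __name__ == "__main__":
    # Two toy runs over the same two problems (a real protocol would use 16 runs).
    mean, spread = averaged_pass_at_1([[True, False], [True, True]])
    print(mean)  # 75.0
```

With 16 runs, `run_results` would hold 16 such lists. The first return value corresponds to the reported score, and the second shows how much individual runs vary.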

Results

Math (micro-average across AIME’24/’25, HMMT25, and Omni-HARD). K2 Think reaches 67.99, leading the open-model cohort and comparing well even against much larger systems. Per benchmark, it scores 90.83 on AIME’24, 81.24 on AIME’25, 73.75 on HMMT25, and 60.73 on Omni-HARD, the hardest split. Measured against DeepSeek V3.1 (671B) and GPT-OSS-120B (120B), these results are consistent with strong parameter efficiency.
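A micro-average pools problems across benchmarks rather than averaging the per-benchmark scores. The sketch below contrasts the two. The problem counts in the test values are toy numbers, because the article does not list benchmark sizes. The reported 67.99 sits well below the unweighted mean of the four listed scores (about 76.6). Under the standard definition of micro-averaging, that gap implies the lower-scoring splits contribute more problems.

```python
from typing import Sequence


def micro_average(scores: Sequence[float], counts: Sequence[int]) -> float:
    """Problem-weighted mean: every problem counts once, so larger benchmarks weigh more."""
    total = sum(counts)
    return sum(s * n for s, n in zip(scores, counts)) / total


def macro_average(scores: Sequence[float]) -> float:
    """Unweighted mean: every benchmark counts once regardless of its size."""
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy example: a small easy benchmark and a larger hard one.
    print(micro_average([90.0, 60.0], [30, 90]))  # 67.5
    print(macro_average([90.0, 60.0]))            # 75.0
```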

Code. K2 Think scores 63.97 on LiveCodeBench v5, ahead of similarly sized peers and even larger open models (e.g., Qwen3-235B-A22B at 56.64). On SciCode it scores 39.2/12.0 (sub/main), closely tracking the best open systems on sub-problem accuracy.

Science. K2 Think reaches 71.08 on GPQA-Diamond and 9.95 on HLE. The model is not only a math specialist: it stays competitive on knowledge-heavy tasks.

Key numbers at a glance

- Backbone: Qwen2.5-32B (open weight), post-trained with long CoT SFT + RLVR (GRPO via verl).

- RL data: Guru (~92K prompts) spanning Math/Code/Science/Logic/Simulation/Tabular.

- Inference scaffold: plan-before-you-think + best-of-N with verifiers; outputs are both shorter (e.g., −11.7% tokens on Omni-HARD) and more accurate.

- Throughput target: ~2,000 tok/s per request on the Cerebras WSE with speculative decoding.

- Math micro-average: 67.99 (AIME’24 90.83, AIME’25 81.24, HMMT’25 73.75, Omni-HARD 60.73).

- Code/Science: LCBv5 63.97; SciCode 39.2/12.0; GPQA-D 71.08; HLE 9.95.

- Safety-4 macro: 0.75 (Refusal 0.83, Conversational Robustness 0.89, Cybersecurity 0.56, Jailbreak 0.72).
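The Safety-4 figure is consistent with an unweighted (macro) mean of the four sub-scores:

$$
\text{Safety-4} = \frac{0.83 + 0.89 + 0.56 + 0.72}{4} = \frac{3.00}{4} = 0.75
$$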

Summary

K2 Think shows that integrated post-training, test-time compute, and hardware-aware inference can close much of the gap to larger, proprietary reasoning systems. Each layer contributes something different:

- At 32B, the model is tractable to fine-tune and serve.
- With plan-before-you-think and best-of-N verifiers, it keeps token budgets under control.
- With speculative decoding on wafer-scale hardware, it delivers ~2K tok/s per request.

K2 Think is released as a fully open system, including weights, training data, deployment code, and test-time optimization code.

Check out the Paper, the model on Hugging Face, GitHub, and direct access to the model.

Asif Razzaq is the CEO of Marktechpost Media Inc. As an entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is Marktechpost, an AI media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and accessible to a wide audience. The platform draws over 2 million monthly views.