Introduction

Tencent’s Hunyuan team has released Hunyuan-MT-7B (a translation model) and Hunyuan-MT-Chimera-7B (an ensemble model). Both models are designed for multilingual machine translation and were introduced alongside Tencent’s participation in the WMT2025 General Machine Translation shared task, where Hunyuan-MT-7B ranked first in 30 of 31 language pairs.

Model Overview

Hunyuan-MT-7B

- A 7B-parameter translation model.

- Supports mutual translation across 33 languages, including Chinese ethnic minority languages such as Tibetan, Mongolian, Uyghur, and Kazakh.

- Optimized for both high-resource and low-resource translation tasks, achieving state-of-the-art results among models of comparable size.

Hunyuan-MT-Chimera-7B

- An integrated weak-to-strong fusion model.

- Combines multiple translation outputs at inference time and uses reinforcement learning and aggregation techniques to generate a refined translation.

- Represents the first open-source translation model of its kind, improving translation quality beyond what a single system produces.
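Chimera generates a new, fused translation from several candidates, and its exact procedure is not reproduced here. As a simpler point of reference for "combining multiple outputs", the sketch below shows minimum-Bayes-risk (MBR) style consensus selection, a different and well-known technique: it picks the candidate that agrees most with the others. The token-level F1 utility and the function names are assumptions chosen for illustration; unlike Chimera, this only selects a candidate and never rewrites one.

```python
from collections import Counter
from typing import List


def unigram_f1(hyp: str, ref: str) -> float:
    """Token-level F1 between two strings, used as a cheap similarity utility (assumed)."""
    hyp_tokens, ref_tokens = Counter(hyp.split()), Counter(ref.split())
    overlap = sum((hyp_tokens & ref_tokens).values())  # clipped token matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(hyp_tokens.values())
    recall = overlap / sum(ref_tokens.values())
    return 2 * precision * recall / (precision + recall)


def mbr_select(candidates: List[str]) -> str:
    """Return the candidate with the highest average utility against all other candidates."""
    if len(candidates) == 1:
        return candidates[0]
    best, best_score = candidates[0], float("-inf")
    for i, cand in enumerate(candidates):
        # The other candidates act as pseudo-references for this hypothesis.
        others = [c for j, c in enumerate(candidates) if j != i]
        score = sum(unigram_f1(cand, o) for o in others) / len(others)
        if score > best_score:
            best, best_score = cand, score
    return best
```

For example, `mbr_select(["the cat sat on the mat", "the cat sat on a mat", "a dog ran in the park"])` returns `"the cat sat on a mat"`, the candidate closest to the consensus. A real system would swap in a learned metric such as XCOMET as the utility.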

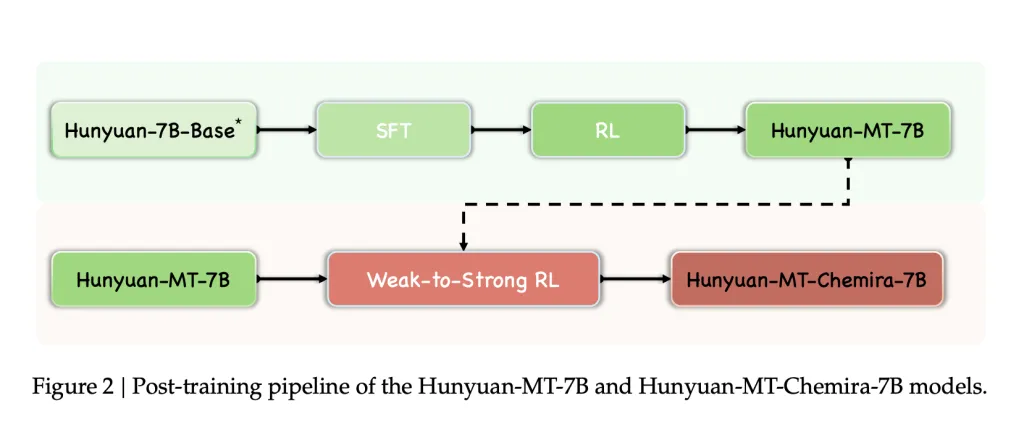

Training framework

Training follows a five-stage framework designed for translation tasks:

- General pre-training

  - 1.3 trillion tokens covering 112 languages and dialects.

  - Multilingual corpora assessed for knowledge value, authenticity, and writing style.

  - Diversity maintained through a tagging system for disciplines, industries, and topics.

- MT-oriented pre-training

  - Monolingual corpora from mC4 and OSCAR, filtered using fastText (language ID), minLSH (deduplication), and KenLM (perplexity filtering).

  - Parallel corpora from OPUS and ParaCrawl, filtered with CometKiwi.

  - Replay of general pre-training data (20%) to avoid catastrophic forgetting.

- Supervised fine-tuning (SFT)

  - Stage I: ~3M parallel pairs (Flores-200, WMT test sets, curated Mandarin⇔minority-language data, synthetic pairs, instruction-tuning data).

  - Stage II: ~268K high-quality pairs selected through automated scoring (CometKiwi, GEMBA) and manual verification.

- Reinforcement learning (RL)

  - Algorithm: GRPO.

  - Reward functions:

    - XCOMET-XXL and DeepSeek-V3-0324 quality scores.

    - Terminology-aware rewards (TAT-R1).

    - Repetition penalties to avoid degenerate outputs.

- Weak-to-strong RL

  - Multiple candidate outputs are generated and aggregated into a single output through reward-based training.

  - Applied in Hunyuan-MT-Chimera-7B, improving translation robustness and reducing repetitive errors.
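The MT-oriented pre-training stage lists minLSH for deduplication. The sketch below is a from-scratch illustration of the MinHash idea that such tools build on; it is not minLSH's API. The word-trigram shingles, 64 hash functions, and 0.8 similarity threshold are assumptions for illustration only.

```python
import hashlib
from typing import List, Set


def shingles(text: str, n: int = 3) -> Set[str]:
    """Word n-grams ("shingles"); texts shorter than n words become one shingle."""
    words = text.lower().split()
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def minhash_signature(text: str, num_perm: int = 64) -> List[int]:
    """For each seeded hash function, keep the minimum hash over all shingles."""
    grams = shingles(text)
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(4, "little")  # a different salt acts as a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(g.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "little",
            )
            for g in grams
        ))
    return signature


def estimated_jaccard(sig_a: List[int], sig_b: List[int]) -> float:
    """The fraction of matching minima estimates the Jaccard similarity of the shingle sets."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs: List[str], threshold: float = 0.8) -> List[str]:
    """Greedy pass: keep a document unless it near-duplicates one already kept."""
    kept: List[str] = []
    kept_sigs: List[List[int]] = []
    for doc in docs:
        sig = minhash_signature(doc)
        if all(estimated_jaccard(sig, s) < threshold for s in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept
```

This compares every pair of signatures, which is quadratic in corpus size. Production pipelines typically add locality-sensitive hashing (LSH) banding so that only likely duplicates are compared.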
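GRPO avoids a learned value function. It samples a group of translations for the same source, scores each one, and standardizes the rewards within that group. The minimal sketch below assumes the quality score (for example, from XCOMET-XXL or a DeepSeek-V3 judge) and the terminology score (a TAT-R1-style reward) come from external scorers and are passed in as plain numbers. The weights and the n-gram repetition measure are hypothetical and do not come from the report.

```python
import statistics
from typing import List


def repetition_penalty(text: str, n: int = 3) -> float:
    """Fraction of word n-grams that repeat an earlier n-gram (0.0 means no repetition)."""
    words = text.split()
    grams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not grams:
        return 0.0
    return 1.0 - len(set(grams)) / len(grams)


def composite_reward(quality: float, term_score: float, text: str,
                     w_quality: float = 1.0, w_term: float = 0.5, w_rep: float = 1.0) -> float:
    """Hypothetical weighted sum: quality + terminology bonus - repetition penalty."""
    return w_quality * quality + w_term * term_score - w_rep * repetition_penalty(text)


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: standardize each reward against its sampled group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

Translations that score above their group's mean get positive advantages and are reinforced. A looping output such as `"a b c a b c"` has a repetition penalty of 0.25, which lowers its reward relative to its siblings.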

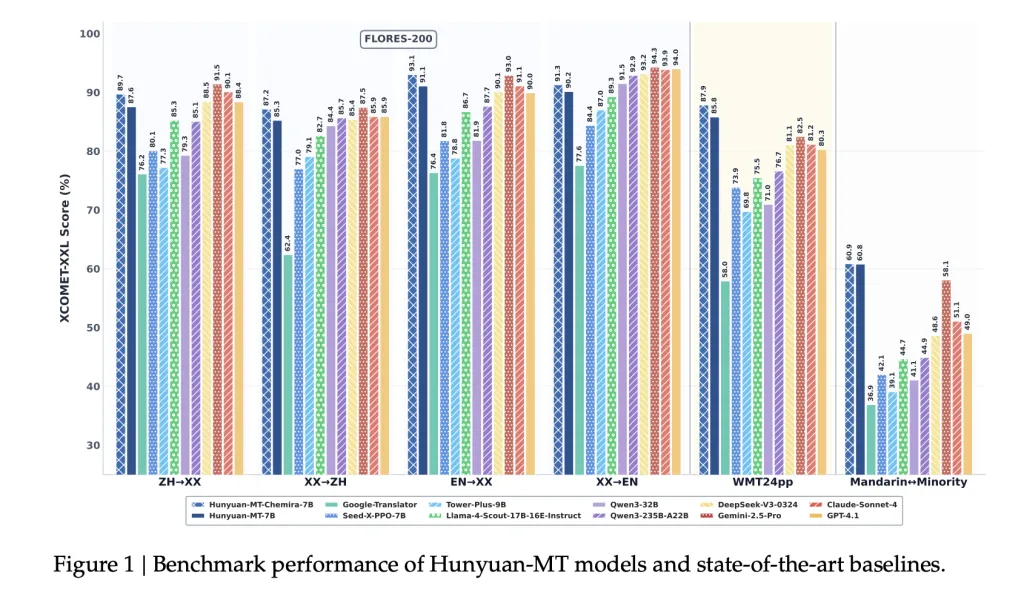

Benchmark results

Automatic evaluation

- WMT24pp (English⇔XX): Hunyuan-MT-7B achieves 0.8585 (XCOMET-XXL), surpassing larger models such as Gemini-2.5-Pro (0.8250) and Claude-Sonnet-4 (0.8120).

- Flores-200 (33 languages, 1,056 pairs): Hunyuan-MT-7B scores 0.8758 (XCOMET-XXL), exceeding open-source baselines including Qwen3-32B (0.7933).

- Mandarin⇔minority languages: Hunyuan-MT-7B scores 0.6082 (XCOMET-XXL), higher than Gemini-2.5-Pro (0.5811), showing significant improvements in low-resource settings.
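The reported margins are easier to compare side by side. The snippet below simply recomputes the absolute and relative gaps from the XCOMET-XXL scores listed above. Relative gaps on a bounded metric should be read loosely, since they are not a standard way of reporting XCOMET differences.

```python
from typing import Tuple

# Reported XCOMET-XXL scores: (Hunyuan-MT-7B, baseline).
comparisons = {
    "WMT24pp vs Gemini-2.5-Pro": (0.8585, 0.8250),
    "WMT24pp vs Claude-Sonnet-4": (0.8585, 0.8120),
    "Flores-200 vs Qwen3-32B": (0.8758, 0.7933),
    "Mandarin<->minority vs Gemini-2.5-Pro": (0.6082, 0.5811),
}


def margins(ours: float, baseline: float) -> Tuple[float, float]:
    """Return (absolute gap, relative gap in percent of the baseline score)."""
    gap = ours - baseline
    return gap, 100.0 * gap / baseline


for name, (ours, base) in comparisons.items():
    gap, pct = margins(ours, base)
    print(f"{name}: {gap:+.4f} absolute, {pct:+.2f}% relative")
```

The absolute gaps are 0.0335, 0.0465, 0.0825, and 0.0271, or roughly 4.1%, 5.7%, 10.4%, and 4.7% relative to each baseline.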

Comparative results

- Outperforms Google Translate by 15-65% across evaluation categories.

- Outperforms specialized translation models such as Tower-Plus-9B and Seed-X-PPO-7B despite having fewer parameters.

- Chimera-7B adds an improvement of about 2.3% on Flores-200, particularly in Chinese⇔other-language and non-English⇔non-Chinese translations.

Human evaluation

A custom evaluation set covering the social, medical, legal, and internet domains was used to compare Hunyuan-MT-7B with state-of-the-art models:

- Hunyuan-MT-7B: avg. 3.189

- Gemini-2.5-Pro: avg. 3.223

- DeepSeek-V3: avg. 3.219

- Google Translate: avg. 2.344

This suggests that, despite its much smaller 7B-parameter size, Hunyuan-MT-7B approaches the quality of far larger models, including proprietary systems.
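To put "approaches the quality" in numbers, the snippet below recomputes the gap between Hunyuan-MT-7B and each other system from the average human scores above. The rating scale itself is not stated here, so treat these as differences on the report's own scale only.

```python
# Average human evaluation scores as reported above.
scores = {
    "Hunyuan-MT-7B": 3.189,
    "Gemini-2.5-Pro": 3.223,
    "DeepSeek-V3": 3.219,
    "Google Translate": 2.344,
}

ours = scores["Hunyuan-MT-7B"]
for system, score in scores.items():
    if system == "Hunyuan-MT-7B":
        continue
    # Signed gap and Hunyuan-MT-7B's score as a percentage of the other system's score.
    print(f"{system}: gap {ours - score:+.3f}, ratio {ours / score:.1%}")
```

Hunyuan-MT-7B trails Gemini-2.5-Pro by 0.034 and DeepSeek-V3 by 0.030, which is about 99% of their scores. It leads Google Translate by 0.845.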

Case studies

The report highlights several real-world examples:

- Cultural references: correctly translates "little sweet potato" (a nickname for the app) as the platform "REDnote", unlike Google Translate's literal "sweet potato".

- Idioms: renders "You are killing me" as the Chinese equivalent of "you're really going to make me laugh to death" (expressing amusement), avoiding a literal misreading.

- Medical terms: accurately translates "uric acid kidney stones", while the baselines produce malformed output.

- Minority languages: for Kazakh and Tibetan, Hunyuan-MT-7B produces coherent translations, whereas the baselines fail or output nonsensical text.

- Chimera enhancements: adds improvements in gaming jargon, intensifiers, and sports terminology.

Conclusion

Tencent’s release of Hunyuan-MT-7B and Hunyuan-MT-Chimera-7B sets a new standard for open-source translation. By combining a carefully designed training framework with a dedicated focus on low-resource and minority-language translation, these models match or exceed the quality of larger closed-source systems. The two models give the AI research community accessible, high-performance tools for multilingual translation research and deployment.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, demonstrating its popularity among readers.