Local rags and agent rags: Which way can promote enterprise AI decisions?

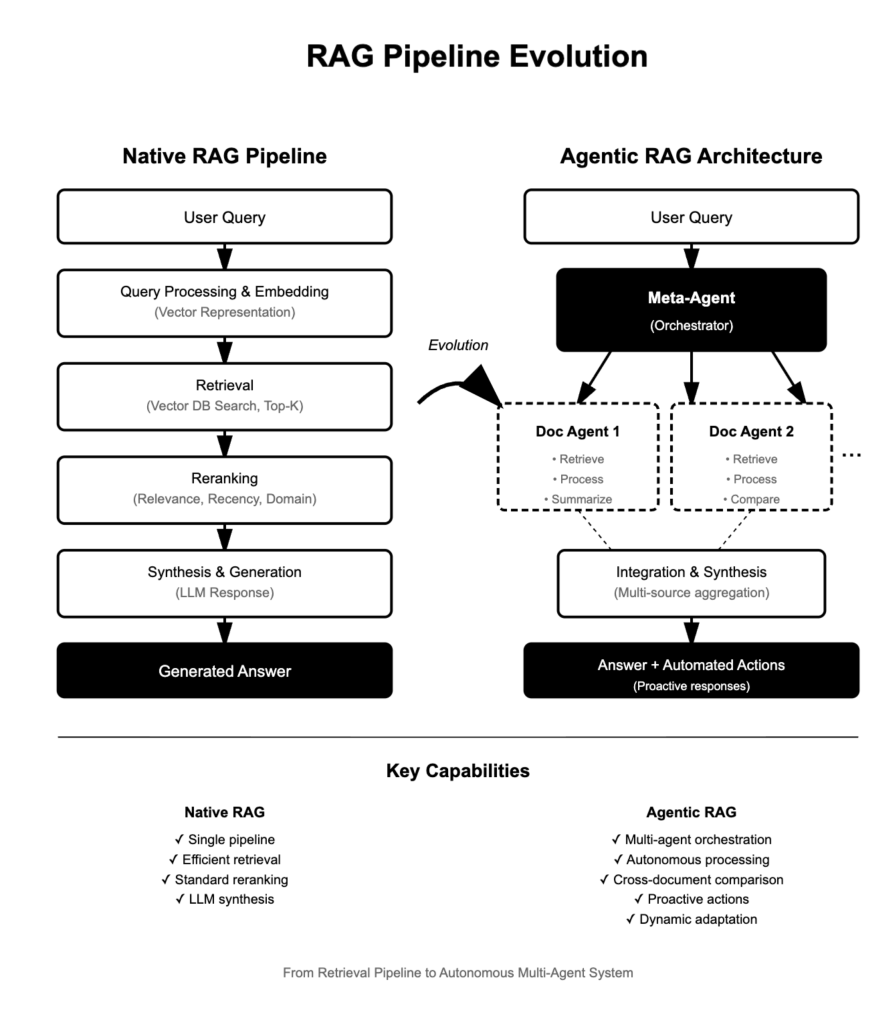

The Retrieval Augmentation Generation (RAG) has become a cornerstone technology for enhancing large language models (LLMs) with real-time, domain-specific knowledge. However, the landscape is rapidly shifting – the most common implementation is the “local rag” pipeline, and a new paradigm called “agent rag” is redefining the possibilities in AI-driven information synthesis and decision support.

This machine rag: standard pipe

architecture

The local RAG pipeline utilizes retrieval and generation-based approaches to answer complex queries while ensuring accuracy and relevance. Pipelines usually involve:

- Query processing and embedding: If needed, use LLM or a dedicated embedding model to rewrite the user’s problem into the vector representation and prepare it for semantic search.

- Search: The system searches vector databases or document storage and uses similarity indicators (Cosine, Euclidean, Dot products) to identify TOP-K-related blocks. An efficient ANN algorithm optimizes the speed and scalability of this stage.

- Reread: The search results are re-dependent based on correlation, proximity, domain specificity, or user preferences. Remanagement models from rules-based to fine-tuning ML systems can improve the highest quality of information.

- Synthesis and generation: LLM integrates re-information to generate a coherent, context-aware response for the user.

Common optimizations

Recent advances include dynamic rereading (adjusting depth through query complexity), convergence-based strategies that summarize rankings from multiple queries, and a hybrid approach that combines semantic allocation with proxy-based proxy-based selection for optimal retrieval robustness and latency.

Agent rag: autonomous, multi-agent information workflow

What is an agent rag?

Agentic Rag is an agent-based rag method that uses multiple autonomous agents to answer questions and process documents in a highly coordinated manner. Agent rags are not a single search/generation pipeline, but structure their workflow for in-depth reasoning, multi-article comparison, planning, and real-time adaptability.

Key Components

| Element | describe |

|---|---|

| File Agent | Each document is assigned its own proxy, able to answer queries about the document and perform summary tasks, and work independently within its scope. |

| Yuan Agent | Plan all document agents, manage their interactions, integrate outputs and integrate comprehensive answers or actions. |

Features and benefits

- autonomy: The agent operates independently, retrieves, processes, and generates answers or operations for a specific document or task.

- Adaptability: The system dynamically adjusts its policies based on a new query or changing the data context (e.g., re-upgrade depth, document priority, tool selection).

- Positiveness: Agents can foresee demand, take pre-emptive steps (e.g., extract other resources or suggest action), and learn from previous interactions.

Advanced features

Agent rag goes beyond “passive” retrieval – Agents can compare documents, summarize or compare specific parts, summarize multi-source insights, and even call tools or APIs for enriching reasoning. This can:

- Automation research and multi-database aggregation

- Complex decision support (e.g., comparing technical features, summarizing key differences between product papers)

- Execution support tasks requiring independent synthesis and real-time action recommendations.

Apply

Agent rags are ideal for scenarios that require subtle information processing and decision making:

- Enterprise knowledge management: Coordinate the answer across heterogeneous internal repositories

- AI-driven research assistant: Cross-document synthesis by tech writers, analysts or executives

- Automatic workflow: Triggering actions (e.g., responding to invitations, updating records) perform multi-step inference after multiple documents or databases.

- Complex compliance and security audits: Real-time summary and comparison of evidence from various sources.

in conclusion

Natural rag pipes have standardized the process of embedding, retrieving, rereading and synthesizing answers from external data, allowing LLMs to act as dynamic knowledge engines. Agentic Rag further pushes boundaries by introducing autonomous agents, orchestration layers and proactive adaptive workflows, which transforms rags from retrieval tools to a comprehensive proxy framework for advanced inference and multi-document intelligence.

Organizations seeking to transcend basic augmentation and into the deeper depths, flexible AI orchestration will find a blueprint for the next generation of intelligent systems in the proxy rag.

Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.