What is DeepSeek-V3.1 and why is everyone talking about it?

Chinese artificial intelligence startup DeepSeek has released DeepSeek-V3.1, its latest flagship language model. It builds on the DeepSeek-V3 architecture and adds significant enhancements to reasoning, tool use, and coding performance. Notably, DeepSeek models have quickly gained a reputation for delivering OpenAI- and Anthropic-level performance at a fraction of the cost.

Model Architecture and Capabilities

- Hybrid thinking mode: DeepSeek-V3.1 supports both thinking (chain-of-thought reasoning, more deliberate) and non-thinking (direct, stream-of-consciousness) generation, switchable through the chat template. This is a departure from previous versions and provides flexibility for different use cases.

- Tool and agent support: The model has been optimized for tool calling and agent tasks (e.g., using APIs, code execution, search). Tool calls use a structured format, and the model supports custom code agents and search agents, with detailed templates provided in the repository.

- Large scale, efficient activation: The model has 671B total parameters, with 37B activated per token. This Mixture-of-Experts (MoE) design reduces inference cost while maintaining capacity. The context window is 128K tokens, much larger than most competitors.

- Long-context extension: DeepSeek-V3.1 uses a two-phase long-context extension approach. The first phase (32K) was trained on 630B tokens (10 times more than V3), and the second (128K) on 209B tokens (3.3 times more than V3). The model is trained with FP8 microscaling for efficient arithmetic on next-generation hardware.

- Chat template: The template supports multi-turn conversations with explicit tokens for system prompts, user queries, and assistant responses. The thinking and non-thinking modes are triggered by the `<think>` and `</think>` tokens in the prompt sequence.
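As a rough illustration of the mode switch, the sketch below assembles a single-turn prompt string and toggles between thinking and non-thinking generation by changing the token that follows the assistant marker. The special-token spellings and the `build_prompt` helper are assumptions made for illustration; the authoritative template is the one shipped with the model's tokenizer in the repository.

```python
# Illustrative only: these token spellings are assumptions. The chat template
# bundled with the model's tokenizer is the source of truth.
BOS = "<｜begin▁of▁sentence｜>"
USER = "<｜User｜>"
ASSISTANT = "<｜Assistant｜>"


def build_prompt(system: str, query: str, thinking: bool) -> str:
    """Assemble a single-turn prompt (hypothetical helper, not an official API)."""
    # Thinking mode leaves a reasoning block open for the model to fill;
    # non-thinking mode closes it immediately so the answer starts right away.
    mode_token = "<think>" if thinking else "</think>"
    return f"{BOS}{system}{USER}{query}{ASSISTANT}{mode_token}"


if __name__ == "__main__":
    print(build_prompt("You are a helpful assistant.", "What is 2 + 2?", thinking=True))
    print(build_prompt("You are a helpful assistant.", "What is 2 + 2?", thinking=False))
```

In practice, most users would let the tokenizer's own chat template produce this string rather than building it by hand; the sketch only shows where the switch sits.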

Performance Benchmark

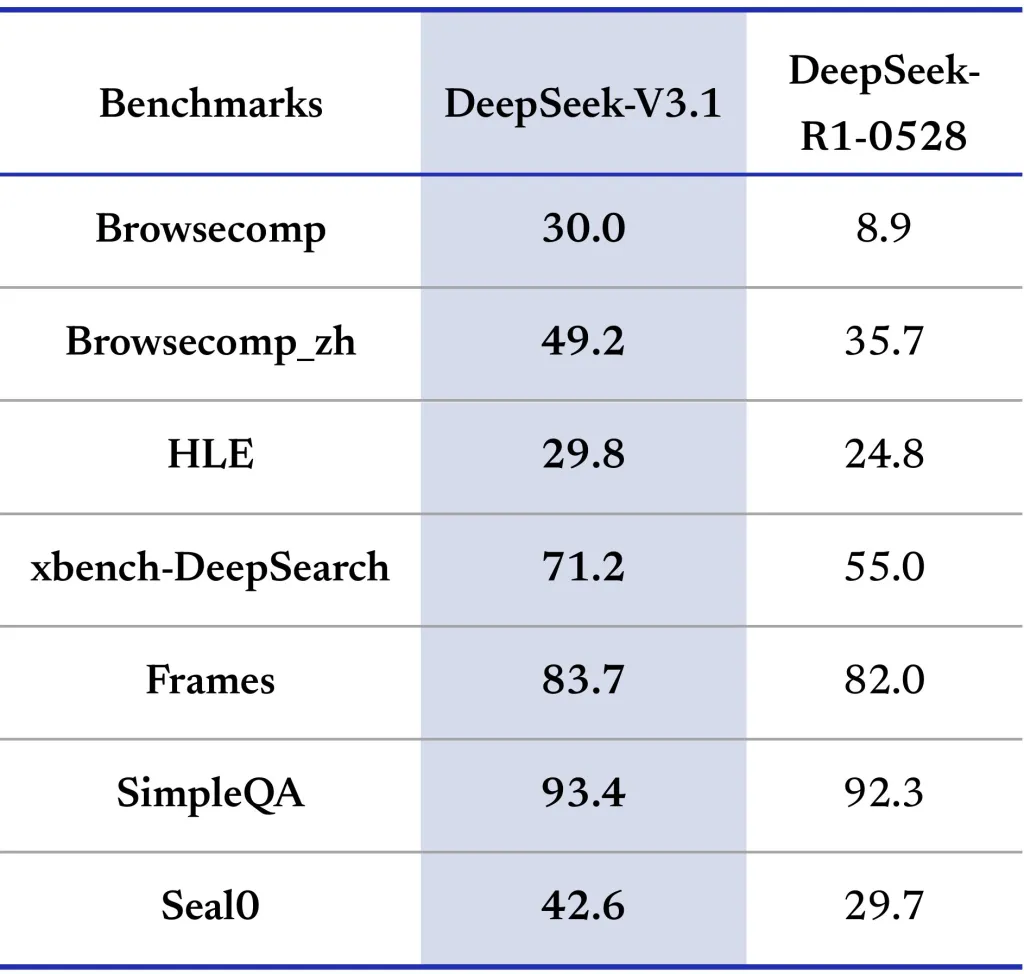

DeepSeek-V3.1 is evaluated across a wide range of benchmarks (see the table below), including general knowledge, coding, mathematics, tool use, and agent tasks. Here are the highlights:

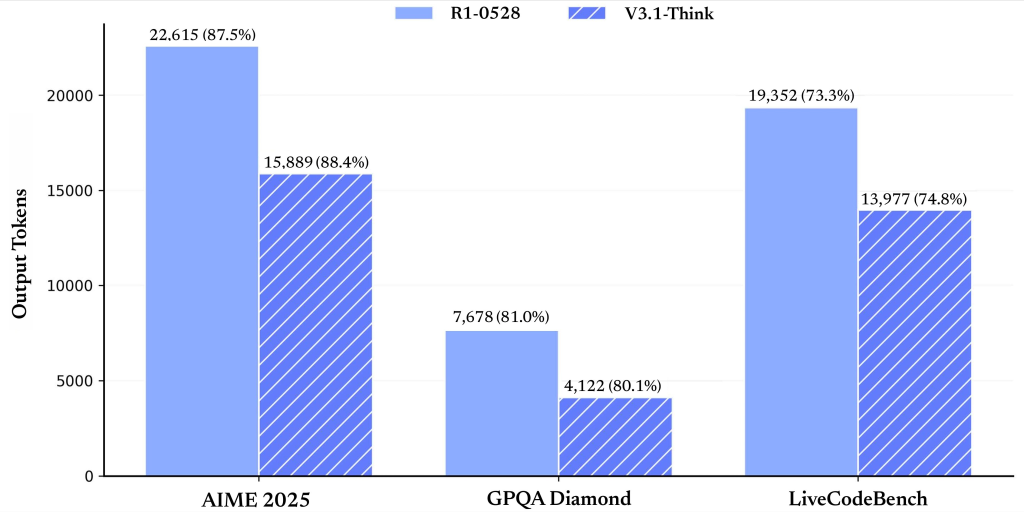

| Metric | V3.1 Non-thinking | V3.1 Thinking | Competitors |
|---|---|---|---|
| MMLU-Redux (EM) | 91.8 | 93.7 | 93.4 (R1-0528) |
| MMLU-Pro (EM) | 83.7 | 84.8 | 85.0 (R1-0528) |
| GPQA-Diamond (Pass@1) | 74.9 | 80.1 | 81.0 (R1-0528) |
| LiveCodeBench (Pass@1) | 56.4 | 74.8 | 73.3 (R1-0528) |
| AIME 2025 (Pass@1) | 49.8 | 88.4 | 87.5 (R1-0528) |
| SWE-bench (agent mode) | 54.5 | – | 30.5 (R1-0528) |

Thinking mode generally matches or exceeds R1-0528, most clearly in coding and math (LiveCodeBench and AIME 2025), while trailing it slightly on MMLU-Pro and GPQA-Diamond. Non-thinking mode is faster but less accurate, making it a good fit for latency-sensitive applications.
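To make the comparison concrete, the short script below recomputes the gap between thinking mode and R1-0528 from the table above. The dictionary simply transcribes the table's figures; the names `scores` and `deltas` are illustrative.

```python
# Scores transcribed from the table: (V3.1 thinking, R1-0528)
scores = {
    "MMLU-Redux": (93.7, 93.4),
    "MMLU-Pro": (84.8, 85.0),
    "GPQA-Diamond": (80.1, 81.0),
    "LiveCodeBench": (74.8, 73.3),
    "AIME 2025": (88.4, 87.5),
}


def deltas(table):
    """Return V3.1-thinking minus R1-0528 for each benchmark, rounded to 0.1."""
    return {name: round(v31 - r1, 1) for name, (v31, r1) in table.items()}


if __name__ == "__main__":
    for name, diff in deltas(scores).items():
        print(f"{name:14s} {diff:+.1f}")
```

The positive differences fall on MMLU-Redux, LiveCodeBench, and AIME 2025; the negative ones on MMLU-Pro and GPQA-Diamond, all within about one point.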

Tool and Code Agent Integration

- Tool calling: Structured tool calls are supported in non-thinking mode, enabling scriptable workflows with external APIs and services.

- Code agents: Developers can build custom agents by following the provided trajectory templates, which detail the interaction protocol for code generation, execution, and debugging. DeepSeek-V3.1 can also use external search tools to retrieve up-to-date information, a capability that is critical for business, finance, and technical research applications.
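On the client side, a structured tool call has to be parsed and routed to real code. The sketch below shows one minimal way to do that, assuming the model's call has already been extracted into a JSON object with `name` and `arguments` fields. That JSON shape, the `TOOLS` registry, and the `get_weather` function are all hypothetical; the actual wire format is defined by the templates in the repository.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call an external API."""
    return f"Sunny in {city}"


# Hypothetical registry mapping tool names to Python callables
TOOLS = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Parse one tool call (assumed JSON shape) and run the matching tool."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        # Report unknown tools back to the model instead of raising
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**call.get("arguments", {}))
    return json.dumps({"tool": name, "result": result})


if __name__ == "__main__":
    print(dispatch('{"name": "get_weather", "arguments": {"city": "Padua"}}'))
```

Returning errors as data rather than raising lets an agent loop feed the failure back to the model so it can retry with a valid tool name.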

Deployment

- Open source, MIT License: All model weights and code are freely available on Hugging Face and ModelScope under the MIT License, encouraging both research and commercial use.

- Local inference: The model structure is compatible with DeepSeek-V3, and detailed instructions for local deployment are provided. Because of the model's size, running it requires substantial GPU resources, but the open ecosystem and community tools lower the barrier to adoption.
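For a sense of scale, the back-of-the-envelope calculation below estimates the memory needed just to hold the weights, using the 671B total and 37B active parameter counts quoted earlier. The bytes-per-parameter values follow from each numeric format's width; the estimate ignores KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
TOTAL_PARAMS = 671e9   # total parameters (figure from the article)
ACTIVE_PARAMS = 37e9   # parameters activated per token (figure from the article)

# Storage width of each numeric format, in bytes per parameter
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Weights-only footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def active_fraction() -> float:
    """Share of parameters used for any single token in the MoE design."""
    return ACTIVE_PARAMS / TOTAL_PARAMS


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(TOTAL_PARAMS, dtype):.0f} GB of weights")
    print(f"active per token: {active_fraction():.1%}")
```

Only about 5.5% of the parameters are active for any given token, which is why per-token compute is much lower than the total size suggests, even though the full set of weights typically still has to be held in memory.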

Summary

DeepSeek-V3.1 represents a milestone in the democratization of advanced AI, demonstrating that open-source, cost-efficient, and highly capable language models are possible. Its blend of scalable reasoning, tool integration, and strong performance on coding and math tasks positions it as a practical choice for both research and applied AI development.


Michal Sutter is a data science professional with a master’s degree in data science from the University of Padua. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels in transforming complex data sets into actionable insights.