Deep Learning Framework Showdown: PyTorch vs TensorFlow in 2025

The choice between PyTorch and TensorFlow remains one of the most debated decisions in AI development. Both frameworks have evolved dramatically since their inception, converging in some areas while retaining distinct strengths. Drawing on a comprehensive survey paper from Alfaisal University, Saudi Arabia, this article explores the latest findings on usability, performance, deployment, and ecosystem considerations to guide practitioners in 2025.

Philosophy and Developer Experience

PyTorch uses a dynamic (define-by-run) paradigm that makes model development feel like regular Python programming. Researchers have embraced this immediacy: debugging is straightforward, and models can be modified on the fly. PyTorch's architecture, centered on torch.nn.Module, encourages modular, object-oriented design. Training loops are explicit and flexible, giving full control over each step, which is ideal for experimentation and custom architectures.
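To make the define-by-run idea concrete, here is a minimal sketch. The class name RefiningMLP, the synthetic data, and every hyperparameter are hypothetical, chosen only for illustration. The forward pass uses an ordinary Python loop whose length is decided at call time, and the training loop spells out each step (zero gradients, forward, backward, update) explicitly.

```python
import torch
from torch import nn


class RefiningMLP(nn.Module):
    """Hypothetical toy model: forward() is plain Python, executed eagerly."""

    def __init__(self, in_features: int = 4, hidden: int = 16, out_features: int = 1):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)
        self.fc2 = nn.Linear(hidden, hidden)
        self.head = nn.Linear(hidden, out_features)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = torch.relu(self.fc1(x))
        # Define-by-run: ordinary Python control flow decides, per call, how many
        # refinement passes to apply (here it depends on the batch size).
        # You could also drop a print() or breakpoint() here like in any Python code.
        n_refine = 2 if x.shape[0] > 32 else 1
        for _ in range(n_refine):
            h = torch.relu(self.fc2(h))
        return self.head(h)


def train(steps: int = 200) -> list:
    """Explicit training loop on a synthetic regression task; returns per-step losses."""
    torch.manual_seed(0)
    x = torch.randn(64, 4)
    y = x.sum(dim=1, keepdim=True)  # synthetic target: sum of the inputs
    model = RefiningMLP()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.05)
    loss_fn = nn.MSELoss()

    losses = []
    for _ in range(steps):
        optimizer.zero_grad()        # clear gradients from the previous step
        loss = loss_fn(model(x), y)  # forward pass builds the graph as it runs
        loss.backward()              # backprop through the path that actually ran
        optimizer.step()             # update parameters
        losses.append(loss.item())
    return losses


losses = train()
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

Because the graph is rebuilt on every call, the same model also accepts a batch of one (taking a single refinement pass) without any recompilation step.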

TensorFlow, initially a static (define-and-run) framework, pivoted with TensorFlow 2.x to embrace eager execution by default. The Keras high-level API, now deeply integrated, simplifies many standard workflows. Users can define models with tf.keras.Model and use one-liners such as model.fit() for training, reducing boilerplate for common tasks. However, highly custom training procedures may require dropping down to TensorFlow's lower-level APIs, which can add complexity. In PyTorch, debugging is often easier thanks to Pythonic tracebacks and the ability to use standard Python tools, whereas TensorFlow's errors, especially when using graph compilation via @tf.function, can be less transparent. Still, TensorFlow's integration with tools like TensorBoard provides visualization and logging out of the box, and PyTorch can also log to TensorBoard through its SummaryWriter.
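The two TensorFlow paths described above can be sketched side by side: the high-level compile() and fit() route, and a hand-written step using tf.GradientTape wrapped in @tf.function. This is an illustrative sketch, not code from the survey; the model class TinyRegressor, the helper functions, the synthetic data, and the hyperparameters are all hypothetical.

```python
import numpy as np
import tensorflow as tf


def make_data(n: int = 256, seed: int = 0):
    """Hypothetical synthetic regression data: target is the sum of 4 features."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(n, 4)).astype("float32")
    y = x.sum(axis=1, keepdims=True)
    return x, y


class TinyRegressor(tf.keras.Model):
    """Subclassed Keras model; call() runs eagerly unless traced into a graph."""

    def __init__(self):
        super().__init__()
        self.hidden = tf.keras.layers.Dense(16, activation="relu")
        self.head = tf.keras.layers.Dense(1)

    def call(self, inputs):
        return self.head(self.hidden(inputs))


def fit_with_keras(epochs: int = 20) -> list:
    """High-level path: compile() + fit() hide the training loop."""
    x, y = make_data()
    model = TinyRegressor()
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.01), loss="mse")
    history = model.fit(x, y, epochs=epochs, batch_size=32, verbose=0)
    return history.history["loss"]


def fit_with_gradient_tape(steps: int = 100) -> list:
    """Lower-level path: a hand-written training step compiled with @tf.function."""
    x, y = make_data()
    model = TinyRegressor()
    optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)
    model(x[:1])                               # build layer weights eagerly
    optimizer.build(model.trainable_variables)  # create optimizer state before tracing

    @tf.function  # traced into a graph; errors may point at traced code, not your lines
    def train_step(xb, yb):
        with tf.GradientTape() as tape:
            loss = tf.reduce_mean(tf.square(model(xb) - yb))
        grads = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))
        return loss

    return [float(train_step(x, y)) for _ in range(steps)]


print("fit():        ", fit_with_keras()[-1])
print("GradientTape: ", fit_with_gradient_tape()[-1])
```

The fit() version is shorter; the GradientTape version is where custom losses, multiple optimizers, or unusual update rules would go, at the cost of more code.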

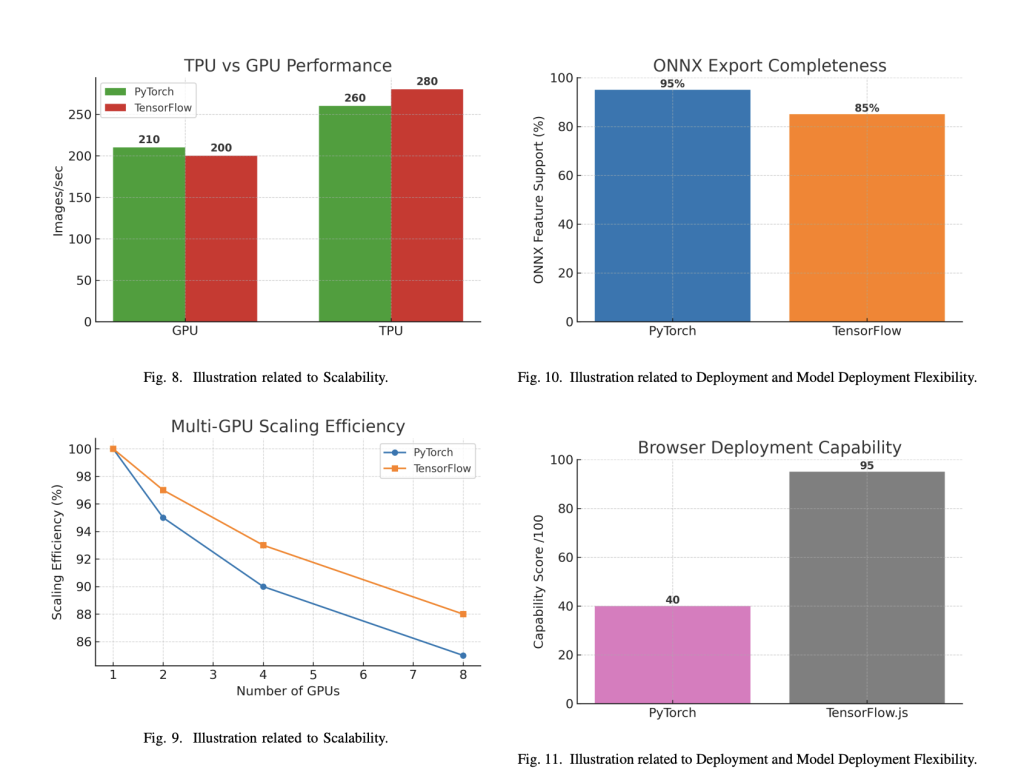

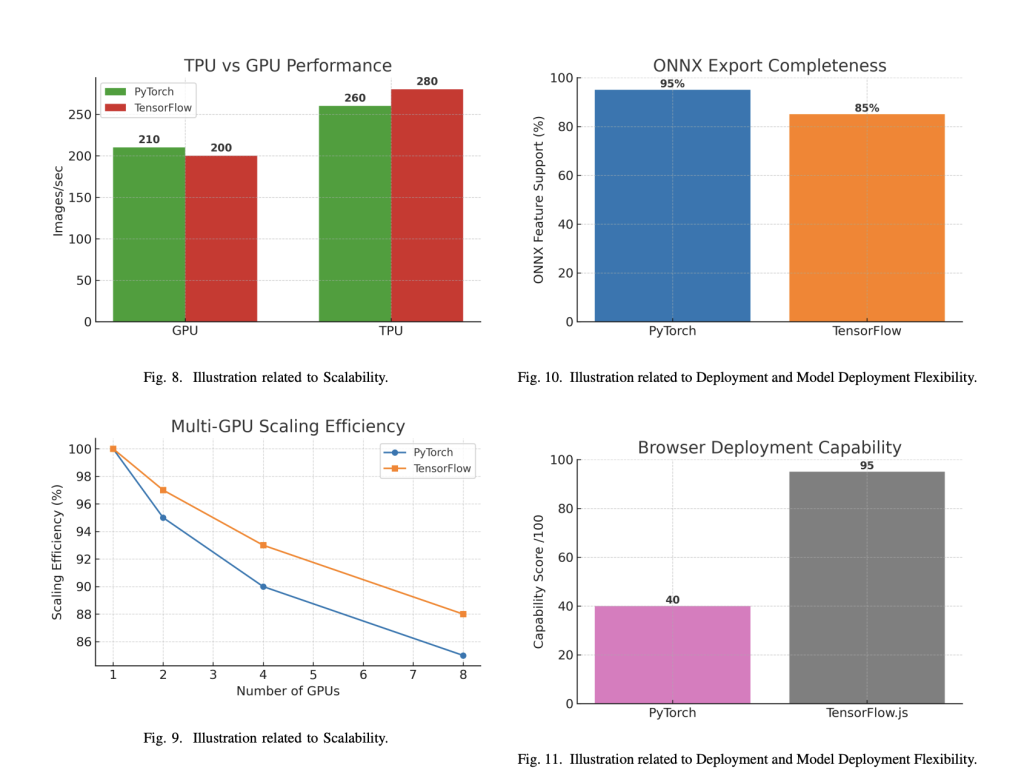

Performance: Training, Inference, and Memory

Training throughput: Benchmark results are nuanced. PyTorch often trains faster on larger datasets and models, thanks to efficient memory management and optimized CUDA backends. For example, in experiments by Novac et al. (2022), PyTorch completed a CNN training run 25% faster than TensorFlow, with consistently faster per-epoch times. On very small inputs, TensorFlow sometimes has an edge due to lower overhead, but PyTorch tends to pull ahead as input size grows.

Inference latency: For small-batch inference, PyTorch frequently delivers lower latency, up to 3× faster than TensorFlow (Keras) in some image classification tasks (Bečirović et al., 2025). The advantage diminishes with larger inputs, where both frameworks are more comparable. TensorFlow's static graph optimization historically gave it a deployment edge, but PyTorch's TorchScript and ONNX support have closed much of this gap.

Memory usage: PyTorch's memory allocator is praised for handling large tensors and dynamic architectures gracefully, while TensorFlow's default behavior of pre-allocating GPU memory can lead to fragmentation in multi-process environments. Fine-grained memory control is possible in TensorFlow, but PyTorch's approach is generally more flexible for research workloads.

Scalability: Both frameworks now support distributed training effectively. TensorFlow retains a slight lead in TPU integration and large-scale deployments, but PyTorch's Distributed Data Parallel (DDP) scales efficiently across GPUs and nodes. For most practitioners, the scalability gap has narrowed significantly.
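Because published latency numbers depend heavily on hardware, batch size, and measurement method, it can help to time your own model. The sketch below is a hypothetical PyTorch harness, not the methodology of the cited studies. The function name, the toy model, and the batch sizes are illustrative assumptions. It runs warm-up passes first, disables autograd with torch.inference_mode(), and reports the median wall-clock time per forward pass. A fair cross-framework comparison would need an equivalent TensorFlow harness run on the same machine.

```python
import statistics
import time

import torch
from torch import nn


def measure_latency_ms(model: nn.Module, batch_size: int, in_features: int = 256,
                       warmup: int = 5, runs: int = 20) -> float:
    """Median wall-clock latency (ms) of one forward pass at a given batch size (CPU)."""
    model.eval()
    x = torch.randn(batch_size, in_features)
    timings = []
    with torch.inference_mode():  # skip autograd bookkeeping during inference
        for _ in range(warmup):   # warm-up absorbs one-time costs such as allocations
            model(x)
        for _ in range(runs):
            start = time.perf_counter()
            model(x)
            # On a GPU, call torch.cuda.synchronize() here before stopping the clock,
            # because CUDA kernels are launched asynchronously.
            timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


model = nn.Sequential(nn.Linear(256, 512), nn.ReLU(), nn.Linear(512, 10))
results = {bs: measure_latency_ms(model, bs) for bs in (1, 8, 64)}
for bs, ms in results.items():
    print(f"batch={bs:3d}  median latency={ms:.3f} ms")
```

Using the median rather than the mean keeps a single slow outlier (for example, a scheduler hiccup) from dominating the result.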

Deployment: From research to production

TensorFlow offers a mature, end-to-end deployment ecosystem:

- Mobile/embedded: TensorFlow Lite (and Lite Micro) powers on-device inference, with robust quantization and hardware acceleration.

- Web: TensorFlow.js enables training and inference directly in the browser.

- Server: TensorFlow Serving provides optimized, versioned model deployment.

- Edge: TensorFlow Lite Micro is the de facto standard for microcontroller-scale ML (TinyML).

PyTorch has narrowed the gap with its own deployment options:

- Mobile: PyTorch Mobile supports Android and iOS, although its runtime footprint is larger than TFLite's.

- Server: TorchServe, co-developed by AWS and Meta, provides scalable model serving.

- Cross-platform: ONNX export lets PyTorch models run in diverse environments via ONNX Runtime.
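One PyTorch route from research code to a deployable artifact is TorchScript, which the performance discussion credits with closing much of the deployment gap. The sketch below traces a toy model, serializes it, reloads it, and checks that the outputs match. The model and input shape are illustrative assumptions, and an in-memory buffer stands in for a file such as "model.pt". Note that recent PyTorch documentation steers new projects toward torch.export, and ONNX export (torch.onnx.export) is a separate path not shown here.

```python
import io

import torch
from torch import nn

# Toy model standing in for a trained network (illustrative only).
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2)).eval()
example = torch.randn(1, 4)

# Trace the model into a TorchScript graph that can run without the Python class definitions.
scripted = torch.jit.trace(model, example)

buffer = io.BytesIO()
torch.jit.save(scripted, buffer)  # in practice you would write to a file such as "model.pt"
buffer.seek(0)
restored = torch.jit.load(buffer)

with torch.no_grad():
    same = torch.allclose(model(example), restored(example))
print("outputs match:", same)
```

Tracing records only the operations executed for the example input, so data-dependent branches are frozen to the path that input took; torch.jit.script is the TorchScript alternative for models whose control flow must be preserved.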

Interoperability is increasingly important. Both frameworks support ONNX, enabling model exchange. Keras 3.0 now supports multiple backends (TensorFlow, JAX, PyTorch), further blurring the boundaries between the ecosystems.

Ecosystem and Community

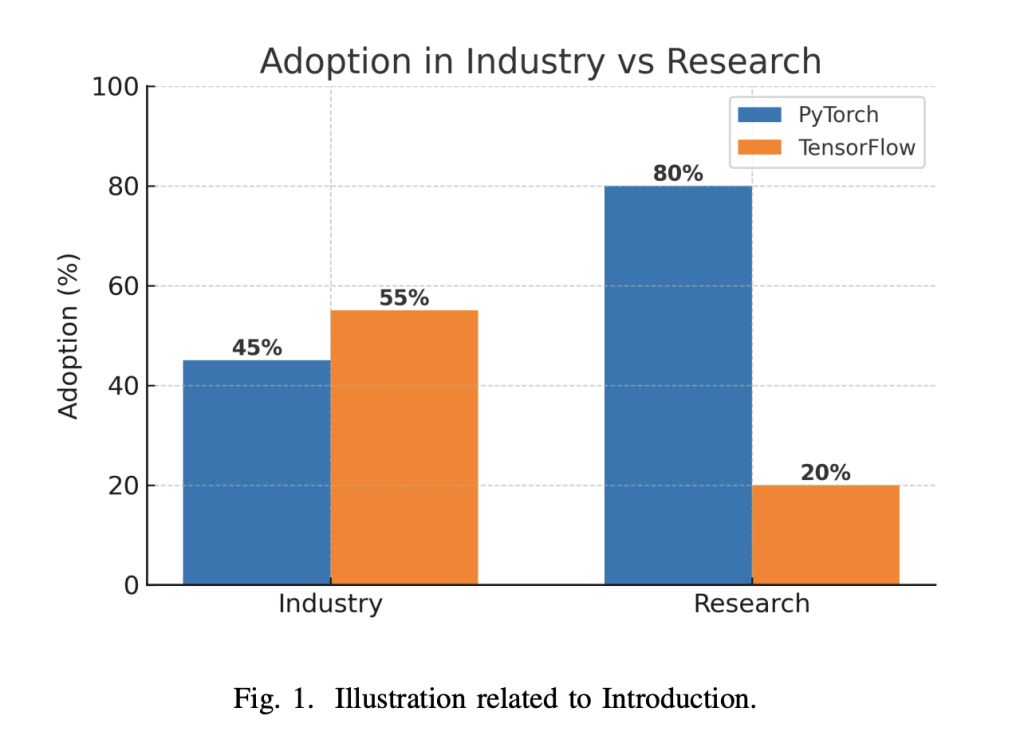

PyTorch dominates academic research, with about 80% of 2023 papers using it. Its ecosystem is modular, with many specialized community packages (e.g., Hugging Face Transformers for NLP, PyTorch Geometric for GNNs). Its move to the Linux Foundation ensures broad-based governance and long-term sustainability.

TensorFlow remains a powerhouse in industry, especially for production pipelines. Its ecosystem is more all-in-one, with official libraries for vision (tf.image, KerasCV), NLP (TensorFlow Text), and probabilistic programming (TensorFlow Probability). TensorFlow Hub and TFX streamline model sharing and MLOps. Stack Overflow's 2023 developer survey showed TensorFlow slightly ahead in industry, while PyTorch leads in research. Both have large, active communities, extensive learning resources, and annual developer conferences.

Use Cases and Industry Applications

Computer Vision: TensorFlow's Object Detection API and KerasCV are widely used in production. PyTorch is favored for research (e.g., Meta's Detectron2) and innovative architectures (GANs, Vision Transformers).

NLP: The rise of transformers has seen PyTorch surge ahead in research, with Hugging Face leading the charge. TensorFlow still powers large-scale systems like Google Translate, but PyTorch is the go-to choice for new model development.

Recommender systems and beyond: Meta's DLRM (PyTorch) and Google's RecNet (TensorFlow) exemplify each framework's use in large-scale recommendation. Both frameworks are used in reinforcement learning, robotics, and scientific computing, with PyTorch often chosen for flexibility and TensorFlow for production robustness.

Conclusion: Choose the right tool

There is no universal “best” framework. The decision depends on your context:

- PyTorch: Choose it for research, rapid prototyping, and custom architectures. It excels in flexibility and ease of debugging, and it is a community favorite for cutting-edge work.

- TensorFlow: Choose it for production scalability, mobile/web deployment, and integrated MLOps. For enterprise pipelines, its tooling and deployment options are unparalleled.

In 2025, the gap between PyTorch and TensorFlow continues to narrow. The frameworks are borrowing each other's best ideas, and interoperability is improving. For most teams, the best choice is the one that aligns with project requirements, team expertise, and deployment targets, rather than an abstract notion of technical superiority.

Both frameworks are here to stay, and the real winner is the AI community, which benefits from their competition and convergence.


Sajjad Ansari is a final-year undergraduate student at IIT Kharagpur. As a technology enthusiast, he delves into the practical applications of AI, focusing on understanding AI technologies and their real-world impact. He aims to articulate complex AI concepts in a clear and accessible manner.